# **Rust + WebAssembly: Building Infrastructure for Large Language Model Ecosystems**

*By: Sam Liu, Second State Engineer, CNCF’s WasmEdge Maintainer*

*Miley Fu, CNCF Ambassador, DevRel at WasmEdge*

>This talk was given in the track “The Programming Languages Shaping the Future of Software Development” at QCon 2023 Beijing on Sept 6th, 2023. The session addressed the challenges that the mainstream Python and Docker approach faces in building infrastructure for large language model (LLM) applications. It introduced the audience to the advantages of the Rust + WebAssembly approach, emphasizing its potential to address the performance, security, and efficiency concerns associated with the traditional approach.

>Throughout the session, Sam shared insights from practical projects, showcasing the real-world applications of Rust and WebAssembly in constructing robust AI infrastructures. The talk was enriched with references, code snippets, and visual aids to provide a comprehensive understanding of the topic.

## Introduction

In the ever-evolving world of technology, applications driven by large language models, commonly referred to as "LLM applications", have become a driving force behind innovation across various industries. As such applications gain traction, the massive influx of user demand poses new challenges for the performance, security, and reliability of the underlying infrastructure.

Python and Docker have long been the mainstream choice for building machine learning applications. However, when it comes to building infrastructure for LLM applications, some drawbacks of this combination become more serious, such as Python's performance issues and Docker's cold start problems. This talk focuses on building infrastructure for LLM ecosystems: it takes a closer look at the problems with the Python and Docker combination and, more importantly, explains why Rust + WebAssembly (WASM) is a stronger choice than Python + Docker. Finally, we demonstrate how to build a Code Review Bot on the [flows.network](https://flows.network/)[^flows] platform.

## The Current Landscape: Python + Docker Approach

In machine learning, Python is the de facto standard language, mainly because of three characteristics:

* **Easy to learn:** Python is a high-level language with a simple syntax, making it easy to learn and use. This can be an advantage for developers who are new to AI or who need to quickly prototype and test ideas.

* **Large community:** Python has a large and active community of developers, which means that there are many libraries and tools available for AI development. This can be an advantage for developers who need to quickly find solutions to common problems.

* **Flexibility:** Python is a versatile language that can be used for a wide range of AI tasks, including data analysis, machine learning, and natural language processing. This can be an advantage for developers who need to work on multiple AI projects.

Docker is one of the most popular container tools today, and Docker containers make application deployment much more convenient:

* **Portability:** Docker containers are designed to be portable, which means that they can be easily moved between different environments. This can be an advantage for developers who need to deploy AI applications to multiple platforms or cloud providers.

* **Isolation:** Docker containers provide a high level of isolation between the application and the host operating system, which can improve security and stability. This can be an advantage for organizations that require high levels of security.

* **Scalability:** Docker containers can be easily scaled up or down to meet changing demands, which can be an advantage for AI applications that require a lot of computation or that need to handle large datasets.

For developing and deploying traditional machine learning applications, the Python + Docker combination has demonstrated its advantages. In building infrastructure for LLM ecosystems, however, it faces challenges.

## Challenges with Python + Docker

Every advantage has a flip side, and the strengths of Python and Docker bring shortcomings with them. When building infrastructure for LLM ecosystems, these shortcomings become more prominent and turn into key obstacles. Let us look at Python's issues first.

### Disadvantages of Python

* **Performance Bottlenecks**

Python is an interpreted language, which means that it can be slower than compiled languages like C++ or Rust. This can be a disadvantage when working with large datasets or complex models that require a lot of computation.

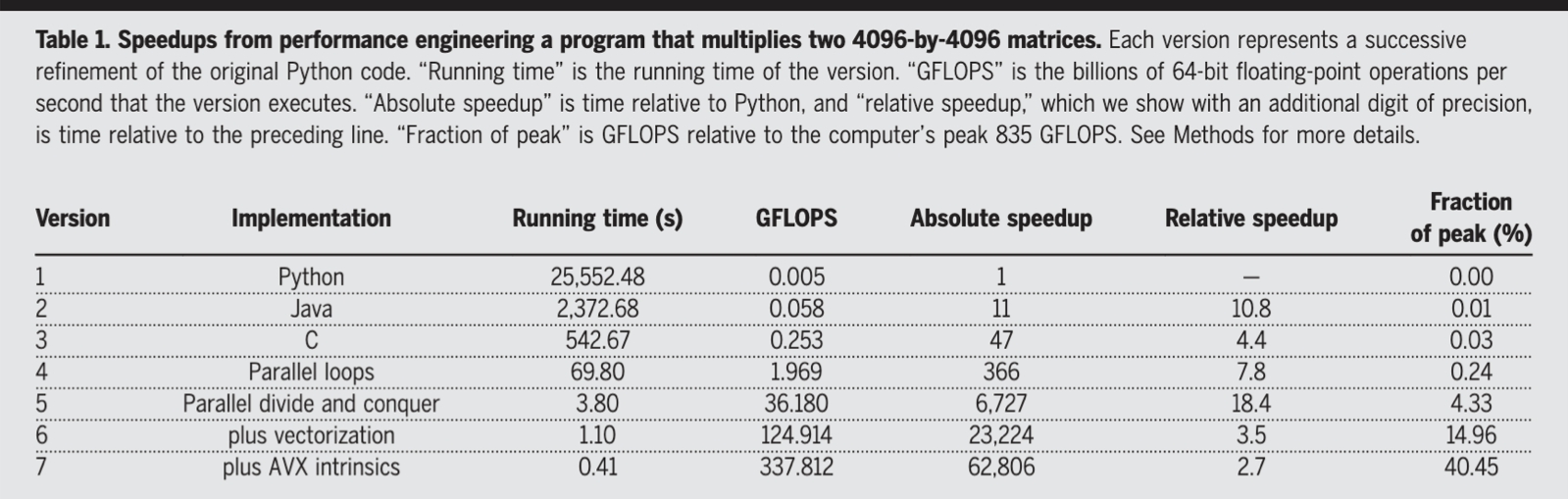

In Fig.1[^Fig.1], the first three rows show the running time of a program that multiplies two 4096-by-4096 matrices, implemented in Python, Java, and C, respectively. The `Running time (s)` column shows that (1) Java, a statically typed language, is about 10x faster than Python, a dynamically typed language; and (2) C, which has no garbage collector, is about 50x faster than Python, which does.

<center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Fig.1 Speedups from performance engineering a program that multiplies two 4096-by-4096 matrices.</center>
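For readers who want to see the kind of kernel being measured, here is a minimal Rust sketch of a plain triple-loop matrix multiplication. It is not the benchmark code from the cited paper: the function name `matmul`, the row-major `Vec<f64>` layout, and the tiny 2-by-2 input are assumptions made for illustration.

```rust
/// Multiply two n-by-n matrices stored in row-major order.
/// Plain triple loop; the i-k-j order walks `b` and `c` row by row.
fn matmul(a: &[f64], b: &[f64], n: usize) -> Vec<f64> {
    assert_eq!(a.len(), n * n);
    assert_eq!(b.len(), n * n);
    let mut c = vec![0.0; n * n];
    for i in 0..n {
        for k in 0..n {
            let aik = a[i * n + k];
            for j in 0..n {
                c[i * n + j] += aik * b[k * n + j];
            }
        }
    }
    c
}

fn main() {
    // [1 2; 3 4] * [5 6; 7 8]
    let a = vec![1.0, 2.0, 3.0, 4.0];
    let b = vec![5.0, 6.0, 7.0, 8.0];
    let c = matmul(&a, &b, 2);
    println!("{:?}", c); // prints [19.0, 22.0, 43.0, 50.0]
}
```

The same loop written in pure Python executes every index operation through the interpreter, which is where most of the gap in the first rows of Fig.1 comes from.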

* **Parallelism**

Python's Global Interpreter Lock (GIL) is often cited as a limitation when it comes to parallel execution. The GIL ensures that only one thread executes Python bytecode at a time in a single process, which can hinder the full utilization of multi-core processors and affect parallel performance.

* **Memory Management**

Python's dynamic typing and garbage collection can introduce overheads in memory management. While the garbage collector helps in automatic memory management, it can sometimes lead to inefficiencies, especially in scenarios where real-time performance is crucial.

### Mixed programming: Python + C/C++/Rust

To work around the performance limits of Python itself, a common approach is to use Python as a front-end language that interacts with users, and a high-performance language such as C, C++, or Rust as a back-end language that handles heavy computation. Many well-known libraries in the Python ecosystem, such as NumPy, use this approach to meet the demand for high-performance computing. However, mixed programming inevitably requires additional tools or libraries as a bridge between the two languages, and this bridge can introduce new problems.

* **Maintenance Cost**

Suppose we want to "bind" Python and C++ APIs. We have to use a third-party library, such as pybind11, to automate the conversion. The example code in Fig.2[^Fig.2] shows how to bind C++ and Python programs using pybind11. Even though pybind11 greatly simplifies the process, adding or removing any C++ API requires a corresponding change to the binding code, and how hard that change is depends on what changed. From a cost perspective, this raises the learning cost for developers as well as the development and maintenance costs of the project.

<center class="half">

<img src="https://hackmd.io/_uploads/r1fQYS402.png" width="300"/><img src="https://hackmd.io/_uploads/Bk9EYHN02.png" width="300"/>

</center><center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Fig.2 "Glue" C++ and Python together.</center>

* **Portability Issues**

Mixed programming can introduce portability challenges. Code that seamlessly runs on one platform might face issues on another due to differences in how Python interacts with native libraries or system-level dependencies across different environments.

* **Integration Complexity**

As illustrated in Fig.2, binding Python to other languages often requires careful management of data types, memory allocation, and error handling. Even though third-party libraries such as pybind11 ease the binding task, this "glue" process is still error-prone and demands a deep understanding of both Python and the other language in use, which increases development time and risk.

### Limitations of Docker Containers

* **Cold Start Performance**

Docker containers, while efficient, sometimes face challenges with cold start performance. A "cold start" refers to the time it takes for a container to start running after it has been instantiated. In the case of Docker, this startup time can often be on the scale of seconds. This might not seem like much, but in environments where rapid scaling and responsiveness are crucial, these seconds can lead to noticeable delays and reduced user satisfaction.

* **Disk Space Consumption**

Docker containers can sometimes be bulky, consuming disk space on the order of gigabytes (GB). This is especially true when containers include all the necessary dependencies and runtime environments. Such large container sizes can lead to increased storage costs, slower deployment times, and challenges in managing and distributing container images.

* **Hardware Accelerator Support**

While Docker containers can leverage hardware accelerators to boost performance, there's a catch. They often require specific versions of software to ensure compatibility. This means that organizations might need to maintain multiple versions of containers or update their hardware accelerators to match the software requirements, adding to the complexity and management overhead.

* **Portability Concerns**

One of Docker's primary selling points is its portability. However, this portability is sometimes contingent on the CPU architecture. While Docker containers are designed to run consistently across different environments, there can be discrepancies when moving between different CPU architectures. This can lead to challenges in ensuring consistent performance and behavior across diverse deployment environments.

* **Security Dependencies**

Docker containers rely on the host operating system's user permissions to ensure security. This means that the security of the container is, to an extent, dependent on the underlying OS's security configurations. If the host OS is compromised or misconfigured, it can potentially expose the containers to security vulnerabilities.

These limitations highlight the need for alternatives such as Rust + WebAssembly, which promises to address some of these pain points and offer a more efficient and secure environment for deploying LLM applications.

## AGI Will Be Built in Rust and WebAssembly

Why can Rust and WebAssembly be the languages of AGI?

<center class="half">

<img src="https://hackmd.io/_uploads/Sk6Kx8NRn.png" width="70%" height="70%"/>

</center>

### Rust: The Optimal Choice for the AGI Era

* **Performance.** Rust is a compiled language with no garbage collector, and its performance is typically comparable to that of C and C++. It compiles to WebAssembly, a binary instruction format for a stack-based virtual machine that is designed to execute at near-native speed, so the combination keeps most of that performance inside a portable sandbox.

* **Memory Safety.** One of Rust's standout features is its emphasis on memory safety without sacrificing performance. This ensures that applications are both fast and secure.

* **Concurrency.** Rust's ownership and type system catch data races, one of the most common and challenging bugs in concurrent systems, at compile time. This means developers can write concurrent code without the fear of introducing this class of hard-to-detect runtime bug.

* **Expressive Type System.** Rust boasts a powerful and expressive type system. This system not only helps in catching bugs at compile time but also allows developers to express their intentions in a clear and concise manner.

* **Modern Package Management.** Cargo, Rust's package manager, streamlines the process of managing dependencies, building projects, and even publishing libraries. It's a tool that has been praised for its ease of use and efficiency.

* **Rapidly Growing Ecosystem.** Rust's ecosystem is flourishing. Libraries like `ndarray`, `llm`, `candle`, and `burn` are a testament to the community's active involvement in expanding Rust's capabilities.
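The concurrency point in the list above can be made concrete with a small sketch that uses only the standard library. The function name `parallel_sum` and the chunking strategy are assumptions chosen for illustration; the point is that each thread borrows a disjoint, read-only slice, and the compiler would reject a version in which two threads mutated shared data without synchronization.

```rust
use std::thread;

/// Sum a slice by splitting it across up to `workers` OS threads.
fn parallel_sum(data: &[u64], workers: usize) -> u64 {
    let workers = workers.max(1);
    // Ceiling division so every element lands in some chunk; never zero.
    let chunk_len = ((data.len() + workers - 1) / workers).max(1);
    thread::scope(|s| {
        // Each spawned thread gets a shared borrow of its own chunk.
        let handles: Vec<_> = data
            .chunks(chunk_len)
            .map(|chunk| s.spawn(move || chunk.iter().sum::<u64>()))
            .collect();
        // Join every thread and add up the partial sums.
        handles
            .into_iter()
            .map(|h| h.join().unwrap())
            .sum::<u64>()
    })
}

fn main() {
    let data: Vec<u64> = (1..=1000).collect();
    println!("{}", parallel_sum(&data, 4)); // prints 500500
}
```

Unlike threads in CPython, these threads are not serialized by a global interpreter lock, so the partial sums can run on separate cores.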

### WASM Container: faster, lighter and safer

Shivraj Jadhav compares Docker containers and WASM along multiple dimensions[^table.1].

<center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Table.1 WASM vs. Docker.</center>

* **Portability.** WebAssembly is designed to be a portable target for the compilation of high-level languages, allowing for deployment on the web and server side across devices.

* **Sandbox Mechanism.** WebAssembly introduces a sandbox mechanism that provides a safer production environment. This ensures that the code runs in an isolated environment, minimizing potential risks.

* **Protecting User Data and System Resources.** WebAssembly is designed with security in mind. It ensures that user data and system resources are protected from potential threats.

* **Bytecode Verification.** Before execution, WebAssembly bytecode undergoes a verification process to prevent malicious code from running. This adds an additional layer of security.

* **Isolated Execution Environment.** Modules in WebAssembly run in isolated environments. This means that even if one module faces issues, it won't affect the functioning of other modules.

* **Smaller Footprint.** With Rust and WebAssembly, developers can achieve more with less. The compiled code is often much smaller in size, leading to quicker load times and efficient execution.

**WASI-NN Standard**

Besides the advantages mentioned above, WASI-NN, the WebAssembly standard for machine learning applications, is also a significant factor.

* **Mainstream Machine Learning Inference Engines.** WASI-NN is designed to work seamlessly with popular machine learning inference engines like TensorFlow, PyTorch, and OpenVINO.

* **Extensions for Large Language Models.** With tools and libraries like `Llama2.c` and `llama.cpp`, WASI-NN offers functionalities tailored for large model applications, ensuring that developers have the tools they need to work with extensive datasets and complex models.

## Use Case: Agent for Code Review

In this section, we will demonstrate how to use the `flows.network` platform to build an agent for code review. Before diving into the specific example, let's first look at the conceptual model of an `Agent` and at the `flows.network` platform.

### Concept Model of Agent

This is a conceptual framework of an LLM-powered AI agent proposed by Lilian Weng[^Fig.3].

<center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Fig.3 Overview of LLM-powered autonomous agent system</center>

In this model, the LLM functions as the agent's brain, responsible for core reasoning and decision-making, but it still needs additional modules to enable key capabilities: planning, long/short-term memory, and tool use.
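To make the three capabilities more tangible, the following is a deliberately small Rust sketch of an agent skeleton. Everything in it is hypothetical and not taken from `flows.network` or from Lilian Weng's article: the `Agent` struct, the `Tool` type alias, and the `word_count` tool are invented for illustration, and the planning step is replaced by the caller naming the tool directly, where a real agent would ask the LLM.

```rust
use std::collections::HashMap;

/// Assumed shape of a tool: a function from text input to text output.
type Tool = fn(&str) -> String;

/// Hypothetical agent skeleton: short-term memory plus a tool registry.
struct Agent {
    memory: Vec<String>,
    tools: HashMap<&'static str, Tool>,
}

impl Agent {
    fn new() -> Self {
        Agent { memory: Vec::new(), tools: HashMap::new() }
    }

    /// Tool use: make a function available under a name.
    fn register(&mut self, name: &'static str, tool: Tool) {
        self.tools.insert(name, tool);
    }

    /// Stand-in for planning: the caller picks the tool by name.
    /// Returns None if no tool with that name is registered.
    fn act(&mut self, tool_name: &str, input: &str) -> Option<String> {
        let tool = *self.tools.get(tool_name)?;
        let output = tool(input);
        // Memory: record what was done so later steps can refer to it.
        self.memory.push(format!("{tool_name}({input}) -> {output}"));
        Some(output)
    }
}

/// Example tool (illustrative): count whitespace-separated words.
fn word_count(text: &str) -> String {
    text.split_whitespace().count().to_string()
}

fn main() {
    let mut agent = Agent::new();
    agent.register("word_count", word_count);
    println!("{:?}", agent.act("word_count", "fix the parser bug"));
    println!("{:?}", agent.memory);
}
```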

The `flows.network` platform is built on an idea similar to Lilian's model. Fig.4 shows its major components. The entire platform is written in Rust, compiled to wasm modules, and runs on WasmEdge Runtime.

<center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Fig.4 The major components of flows.network</center>

### Agent for Code Review

On the `flows.network` platform, we provide an agent (a bot template) for helping maintainers of open-source projects on GitHub review PRs. We name it `Code Review Bot`.

The abstract design of the agent is presented in Fig.5. The red block `code-review-function` in the center of the diagram defines the core agent functions, while each dashed circle surrounding the red block matches its counterpart directly connected to the `agent` block in Fig.3.

<center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Fig.5 Abstract Design of Code Review Bot</center>

Fig.6 depicts the architecture of `Code Review Bot`. Except for external resources such as the GitHub service, the agent consists of wasm modules and runs on WasmEdge Runtime. Integration wasm modules are responsible for connecting WebAssembly functions to external resources via Web APIs. For example, the `code-review-function` wasm module extracts the code under review into prompts, then the `openai-integration` wasm module sends the prompts to the ChatGPT service and waits for the response; finally, it sends the comments back to the `code-review-function` wasm module.

<center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Fig.6 Architecture of Code Review Bot</center>
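The first step of this flow, turning the code under review into a prompt, might look roughly like the sketch below. It is not the actual `code-review-function` source: the function name `build_review_prompt`, the prompt wording, and the `max_chars` limit are all assumptions made for illustration.

```rust
/// Hypothetical helper: wrap a PR diff in review instructions.
/// The diff is cut to at most `max_chars` characters, on the assumption
/// that the prompt has to fit within the model's context limit.
fn build_review_prompt(title: &str, diff: &str, max_chars: usize) -> String {
    let truncated: String = diff.chars().take(max_chars).collect();
    format!(
        "You are a code reviewer. Summarize the pull request \"{title}\", \
         then list hidden risks and major changes.\n\nDIFF:\n{truncated}\n"
    )
}

fn main() {
    let diff = "+let x = 1;\n-let x = 0;";
    let prompt = build_review_prompt("Fix default value", diff, 4000);
    println!("{prompt}");
}
```

In the architecture of Fig.6, a string like this would be handed to the integration module that talks to the ChatGPT service, and the response would come back as review comments.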

Fig.7 shows an example of a PR review summary by Code Review Bot. It summarizes the target PR and lists, among other things, the hidden risks and major changes. This information helps reviewers focus on the vital parts and saves them time.

<center style="font-size:14px;color:#C0C0C0;text-decoration:underline">Fig.7 Example of PR review summary by Code Review Bot</center>

The Code Review Bot can be deployed in minutes. If you would like to use it in your projects, [this guide](https://www.cncf.io/blog/2023/06/06/a-chatgpt-powered-code-reviewer-bot-for-open-source-projects/) can help you.

## Conclusion

In the realm of AI infrastructure development, while Python and Docker have served us well, it's essential to explore and adopt newer technologies that promise better performance, security, and efficiency. The combination of Rust and WebAssembly is a testament to this evolution, offering a compelling alternative for developers and organizations alike.

*This article provides a comprehensive overview of the talk by Sam Liu on the topic of Rust + WebAssembly for building large model ecosystems. For a deeper dive and to explore the practical projects in detail, readers are encouraged to join the [WasmEdge discord](https://discord.com/invite/U4B5sFTkFc).*

## References

[^flows]: flows.network: A low-code platform for Rust developers. https://flows.network/

[^Fig.1]: Charles E. Leiserson et al., *There’s plenty of room at the Top: What will drive computer performance after Moore’s law?* Science 368, eaam9744 (2020). DOI: 10.1126/science.aam9744

[^Fig.2]: https://github.com/Xilinx/Vitis-AI/blob/master/src/vai_runtime/xir/src/python/wrapper/wrapper.cpp

[^table.1]: *WebAssembly (WASM) - Docker Vs WASM* by Shivraj Jadhav. https://medium.com/@shivraj.jadhav82/webassembly-wasm-docker-vs-wasm-275e317324a1

[^Fig.3]: *LLM Powered Autonomous Agents* by Lilian Weng. Web link: https://lilianweng.github.io/posts/2023-06-23-agent/
