# 2016q3 Homework5 (jit-compiler)

contributed by <`f5120125`>, <`diana0651`>

###### tags:

## [A09: jit-compiler](https://hackmd.io/s/SJ7AZJv1e)

### 預期目標

- [x] 學習設計 JIT 編譯器

- [x] 運用計算機組織的背景知識,進行深入效能評估和提出改善方案

- [ ] 延展程式語言,提供 concurrency 能力

## Brainfuck

- [Brainfuck wikipedia](https://en.wikipedia.org/wiki/Brainfuck)

- **Cell size** : the cells are of 8-bit size (cells are bytes)

- **Array size**

- 符合 Turing complete

- 一個萬能圖靈機:可以執行任何計算

- 具有無限存儲能力的通用程式語言

- 八個指令,其中兩個是 I/O 動作

- 對記憶體單元作直接的存取

- 透過data pointer在data cell中進行不同的指令

- each character is a token

- if the token is not one of the eight operators, it’s ignored

- the forward and backwards jumping operators should be matched for well formed input

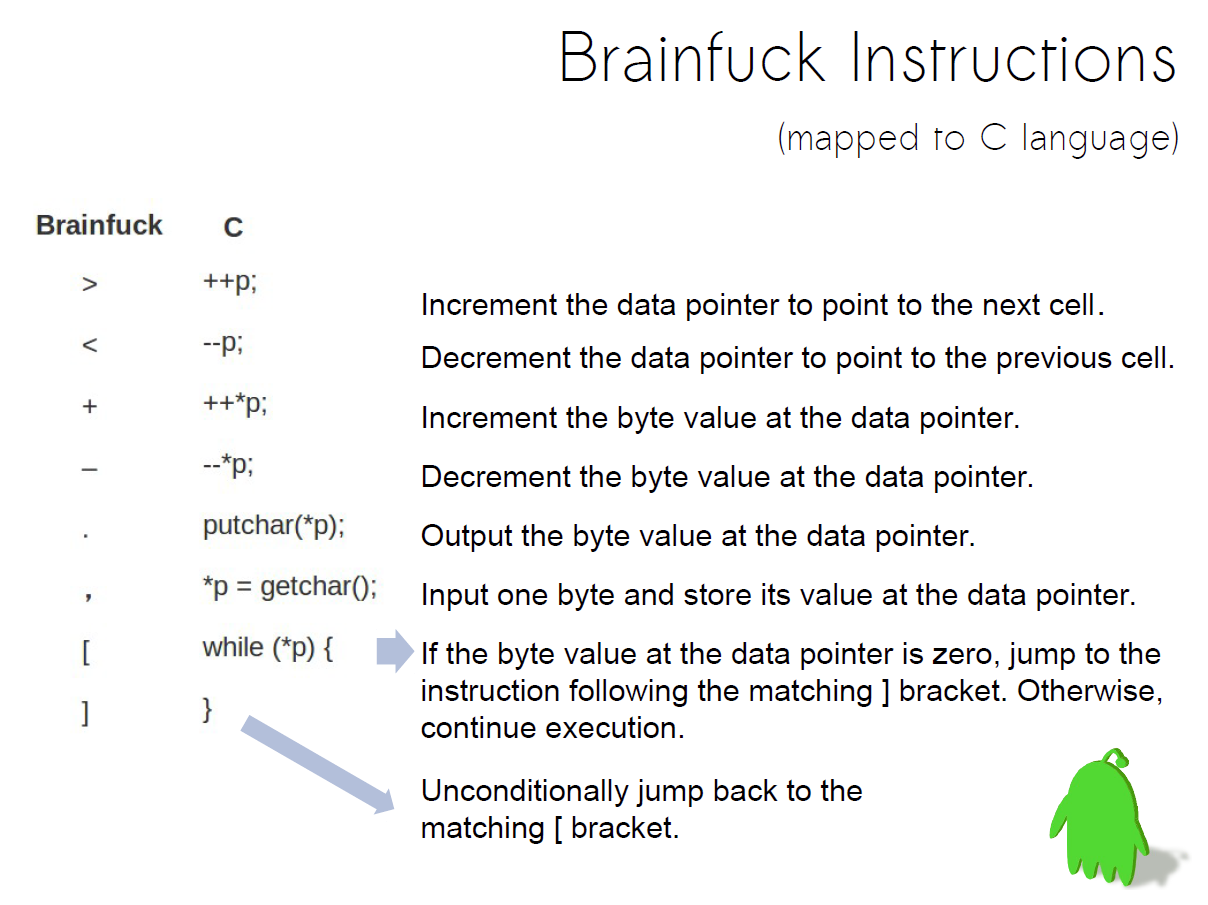

- 八個運算字元

- **> and <**: there’s potential for **stack buffer overrun** since we’re not performing bounds checks.

- **. and ,**: provide input or output

- writing the value pointed to by the instruction pointer to stdout as an ASCII value,

- reading one byte from stdin as an ASCII value and writing it to the cell pointed to by the instruction pointer.

- **[ and ]**: looping constructs

- The compiler is a pain when modifying and running a Brainfuck program. The trade off is that the compiled version is quite a bit faster.

- compile the Brainfuck program to assembly,

- assemble the assembly into an object file,

- link it into an executable,

- and run it whereas with the interpreter we can just run it.

- 發想: a translation & execution technique that didn’t force us to compile our code **every time we changed it and wanted to run it**, but also performance closer to that of compiled code. That’s where a JIT compiler comes in!

## [Compiler, Interpreter, Jit-compiler](http://nickdesaulniers.github.io/blog/2015/05/25/interpreter-compiler-jit/)

### Interpreter

```graphviz

digraph G{ label="Interpreter";

labelloc=<t>

"source code"[shape=box];

"pure interpreter"[shape=box];

"execution results"[shape=box];

"source code"->"pure interpreter"->"execution results";

}

```

- immediately execute some operation

- JavaScript, Ruby, Python, PHP, and Perl

### Compiler

```graphviz

digraph G{

s1[label="source code 1", shape=cds]

s2[label="source code 2", shape=cds]

obj1[shape=folder]

obj2[shape=folder]

linker[shape=parallelogram]

libs[label="external libs", shape=box3d]

exe[label="execution results", shape=box]

subgraph cluster_compiler{

rank=same

label="compiler"

lex[label="lexical analyzer", shape=oval]

syn[label="syntax analyzer", shape=oval]

sem[label="semantic analyzer", shape=oval]

gen[label="code generator", shape=oval]

lex->syn->sem->gen

}

s1->lex;

s2->lex;

gen->obj1->linker

gen->obj2->linker

libs->linker->exe

}

```

- assembly instructions to the interpreter

- C, C++

### Just-In-Time Compiler

```graphviz

digraph G{

subgraph cluster_compiler{

label="compile time";

s1[label="source code", shape=cds];

cp[label="compiler"];

cil[label="intermediate code"];

}

subgraph cluster_runtime{

label="runtime";

jit[label="JIT compiler"];

code[label="Native code"];

}

s1->cp->cil->jit->code

}

```

- between interpretation and compilation that combines the best of both worlds

- binary opcodes to executable memory, manually perform relocation

- have macros for each operation in the JIT would perform

- the code would look more like the compiler rather than raw arrays of bytes (though the preprocessor would translate those macros into such)

- Java

### Comparison

- interpreter

- an order of magnitude slower than the compiled result or run of the JIT

- isn’t able to jump back and forth as efficiently as the compiler or JIT

- it scans back and forth for matching brackets O(N)

- the other two can jump to where they need to go in a few instructions O(1)

- translate the higher level language to a byte code, and calculate the offsets used for jumps directly, rather than scanning back and forth.

- compiler

- fastest

- doesn’t have the overhead the JIT does of having to read the input file or build up the instructions to execute at runtime.

- read and translate the input file ahead of time.

- The interpreter bouces back and forth between looking up an operation, then doing something based on the operation, then lookup, etc.. The compiler and JIT preform the translation first, then the execution, not interleaving the two.

- JIT

- compilation time is **cheap**: as is the case with our Brainfuck compiler & JIT, it makes sense to prefer the **JIT**; it allows us to quickly make changes to our code and re-run it.

- compilation is expensive: we might only want to pay the compilation tax once, especially if we plan on running the program repeatedly.

- more complex to implement.

- repeatedly re-parse input files and re-build instruction streams at runtime.

- bridge the gap for dynamically typed languages where the runtime itself is much more dynamic, and thus harder to optimize ahead of time.

- jump into JIT’d native code from an interpreter and back gives you the best of both (interpreted and compiled) worlds.

## [Comparison of Compilation Methods](http://scholarexchange.furman.edu/cgi/viewcontent.cgi?article=1879&context=furmanengaged)

### What is a compiler?

- perform optimizations on code while translating it

- unroll loops

- convert complex mathematical operations into groups of simpler operations

- Most compilers can be divided into the category of **ahead-of-time** or **justin-time**.

### Ahead-of-Time(AOT) Compilation

- run a separate compilation command **prior to runtime**, generating a separate file that is then executed by the computer

- machine code is specific to one computer or type of computer.

- faster than just-in-time counterparts

- there is more time available to optimize code.

- C, C++, and Pascal.

### Just-in-Time(JIT) Compilation

- a user or another program calls the program to run.

- **higher startup overhead** than AOT.

- have access to data(values of variables) about the program at runtime

- help make optimizations that an AOT compiler cannot(advanced branch prediction)

- Java and C#.

## [DynASM](https://corsix.github.io/dynasm-doc/tutorial.html)(Dynamic Assembler)

### [DynASM Toolchain](http://luajit.org/dynasm_features.html)

Toolchain 是一套能讓你編譯、連結、除錯程式的軟體,例如 GCC、LD、GDB、AS 與 glibc 等。

- DynASM is a **pre-processing** assembler.

- DynASM converts **mixed C/Assembler source** to plain C code.

- The generated C code is extremely **small and fast**.

- A tiny **embeddable C library** helps with the process of **dynamically assembling, relocating and linking** machine code.

- DynASM itself (the pre-processor) is written in **Lua**.

### [Examples](https://luapower.com/dynasm)

- Self-contained module

- Code gen / build split

- Load code from a string

- Translate from Lua

- Included demo/tutorial

- Brainfuck JIT compiler

### [lua](https://www.lua.org/about.html)

- Lua is a proven, robust language

- Lua is fast

- Lua is portable

- Lua is embeddable

- Lua is powerful (but simple and small)

- Lua is free

## [Optimization Strategies](http://calmerthanyouare.org/2015/01/07/optimizing-brainfuck.html)

### 未最佳化之數據:

輸入`make bench-jit-x64`

測試結果:

```

```

### 1. Clear loops

- clear loop:```[-]```功能為清空目前所在的 cell (1個byte), 因此優化的方式為直接利用```mov```將零覆蓋到 cell 上

- 修改 jit-x64.dasc:

- 設計一個function可以判斷pattern```[-]```

```clike=

int clear_loop(char *p){

if(*(p+1) == '-'&&*(p+2) == ']')

return 1;

else

return 0;

}

```

- 以下圖表顯示新增的功能(用粗體表示)

| IR | C |

|:------ |:----------- |

| Add | mem[p]++; |

| Sub | mem[p] - -; |

| Right | p++; |

| Left | p - -; |

| Out | putchar(mem[p]); |

| In | mem[p] = getchar(); |

| Open | while(mem[p]) { |

| Add | } |

| ==**Clear**== | ==**mem[p] = 0;**== |

- 執行結果

```

Executing Brainf*ck benchmark suite. Be patient.

progs/awib.b GOOD 79.0ms

progs/mandelbrot.b GOOD 3234.4ms

progs/hanoi.b GOOD 3252.9ms

```

hanoi.b 大幅降低執行時間,大量`[-]`敘述

awib.b 只有少數`[-]`敘述

### 2. Contraction

- 在 BrainF\*ck 語言中, 充滿著```>```, ```<```, ```+```, ```-```的連用

- 修改 jit-x64.dasc:

- 設計一個 function 可以去計算連用了多少個符號

```clike=

int continuous_count(char *p)

{

char *ptr = p;

int count = 0;

while (*ptr == *p) {

count++;

ptr++;

}

return count;

}

```

- 計算完 count 數量後, 根據對應的符號, 對 PTR 或 [PTR] 做相符的運算(以```>```為例)

```clike=

case '>':

count=continuous_count(p);

p+=count-1;

| add PTR, count

break;

```

- 以下圖表顯示新增的功能(用粗體表示)

| IR | C |

|:------ |:----------- |

| ==**Add(x)**== | ==**mem[p] += x;**== |

| ==**Sub(x)**== | ==**mem[p] -= x;**== |

| ==**Right(x)**== | ==**p+=x;**== |

| ==**Left(x)**== | ==**p-=x;**== |

| Out | putchar(mem[p]); |

| In | mem[p] = getchar(); |

| Open | while(mem[p]) { |

| Add | } |

| **Clear** | **mem[p] = 0;** |

- 執行結果

```

Executing Brainf*ck benchmark suite. Be patient.

progs/awib.b GOOD 47.5ms

progs/mandelbrot.b GOOD 1229.6ms

progs/hanoi.b GOOD 130.2ms

```

3個程式都降低很多

### 3. Copy loops

- 考慮 BrainF\*ck 程式碼的片段```[->+>+<<]```, 雖然仍有清空目前所在的 cell 但在迴圈中有對於其他 cell 的操作

- 新增的Copy()的操作, 但實際上可以把這個function改成較泛用的multiplication loops

| IR | C |

|:------ |:----------- |

| ==**Copy(x)**== |==**mem[p+x] += mem[p];**== |

### 4. Multiplication loops

- [Assembly Tutorial](https://www.tutorialspoint.com/assembly_programming/index.htm)

- 根據copy loops的想法, 對其他cell的```+```操作可以先統計起來

- ```_index```

最後check_loops回傳為紀錄需要執行multiplication loops的次數

- ```*index```

紀錄目前cell離原本cell的offset

- ```*mul```

在某個cell上, 執行```+```的次數

- ```*p_offset```

紀錄pointer ```p```在迴圈執行完後的offset

```clike=

int check_loops(char *p,int *index,int *mult, int *p_offset)

{

int count=0;

int res,offset = 0,_index = 0;

if (*(p+1) != '-') return -1;

p += 2;

count+=2;

while (*p != ']') {

if (*p == '[' || *p == '-' ||

*p == '.' || *p == ',')

return -1;

res = continuous_count(p);

if (*p == '>') offset += res;

else if (*p == '<') offset -= res;

else if (*p == '+') {

index[_index] = offset;

mult[_index] = res;

_index++;

}

p += res;

count+=res;

}

if (offset != 0) return -1;

*p_offset = count;

return _index;

}

```

- 以下圖表顯示新增的功能(用粗體表示)

| IR | C |

|:------ |:----------- |

| **Add(x)** | **mem[p] += x;** |

| **Sub(x)** | **mem[p] -= x;** |

| **Right(x)** | **p+=x;** |

| **Left(x)** | **p-=x;** |

| Out | putchar(mem[p]); |

| In | mem[p] = getchar(); |

| Open | while(mem[p]) { |

| Add | } |

| **Clear** | **mem[p] = 0;** |

| ==**Mul(x)**== |==**mem[p+x] += mem[p] * y;**== |

- 執行結果

```

Executing Brainf*ck benchmark suite. Be patient.

progs/awib.b GOOD 27.6ms

progs/mandelbrot.b GOOD 1527.1ms

progs/hanoi.b GOOD 59.4ms

```

### 5. Scan loops

`[<]` and `[>]` : move the pointer (left or right) until a zero cell is reached

- scan loop 會花很多時間在掃描龐大的記憶體

- C 的函式庫提供 memchr() 可以做最佳化, 平行比對8-bit 的字串

- 經由參考資料顯示 只有`[<]`,` [>]`較增進效能 其他如`[<<<<<<<<<] `沒有增進太多效能

- 以下圖表顯示可以新增的功能(用粗體表示)

| IR | C |

|:------ |:----------- |

| **Add(x)** | **mem[p] += x;** |

| **Sub(x)** | **mem[p] -= x;** |

| **Right(x)** | **p+=x;** |

| **Left(x)** | **p-=x;** |

| Out | putchar(mem[p]); |

| In | mem[p] = getchar(); |

| Open | while(mem[p]) { |

| Add | } |

| **Clear** | **mem[p] = 0;** |

| **Mul(x)** |**mem[p+x] += mem[p] * y;** |

| ==**ScanLeft**== |==**p -= (long)((void \*)(mem + p) - memrchr(mem, 0, p + 1));**== |

| ==**ScanRight**== |==**p += (long)(memchr(mem + p, 0, sizeof(mem)) - (void \*)(mem + p));**== |

### 6. Operation offsets

long sequences of non-loop operations and these sequences typically contain a fair number of < and >.

## Reference

- [Interpreter, Compiler, JIT from scratch by Jserv](http://wiki.csie.ncku.edu.tw/embedded/summer2015/jit-compiler.pdf)

Sign in with Wallet

Sign in with Wallet