# Magma Overview

[TOC]

## Introduction

Magma is an open-source software platform that gives network operators an open, flexible and extendable mobile core network solution. Magma enables better connectivity by:

* Allowing operators to offer cellular service without vendor lock-in with a modern, open source core network

* Enabling operators to manage their networks more efficiently with more automation, less downtime, better predictability, and more agility to add new services and applications

* Enabling federation between existing MNOs and new infrastructure providers for expanding rural infrastructure

* Allowing operators who are constrained with licensed spectrum to add capacity and reach by using Wi-Fi and CBRS

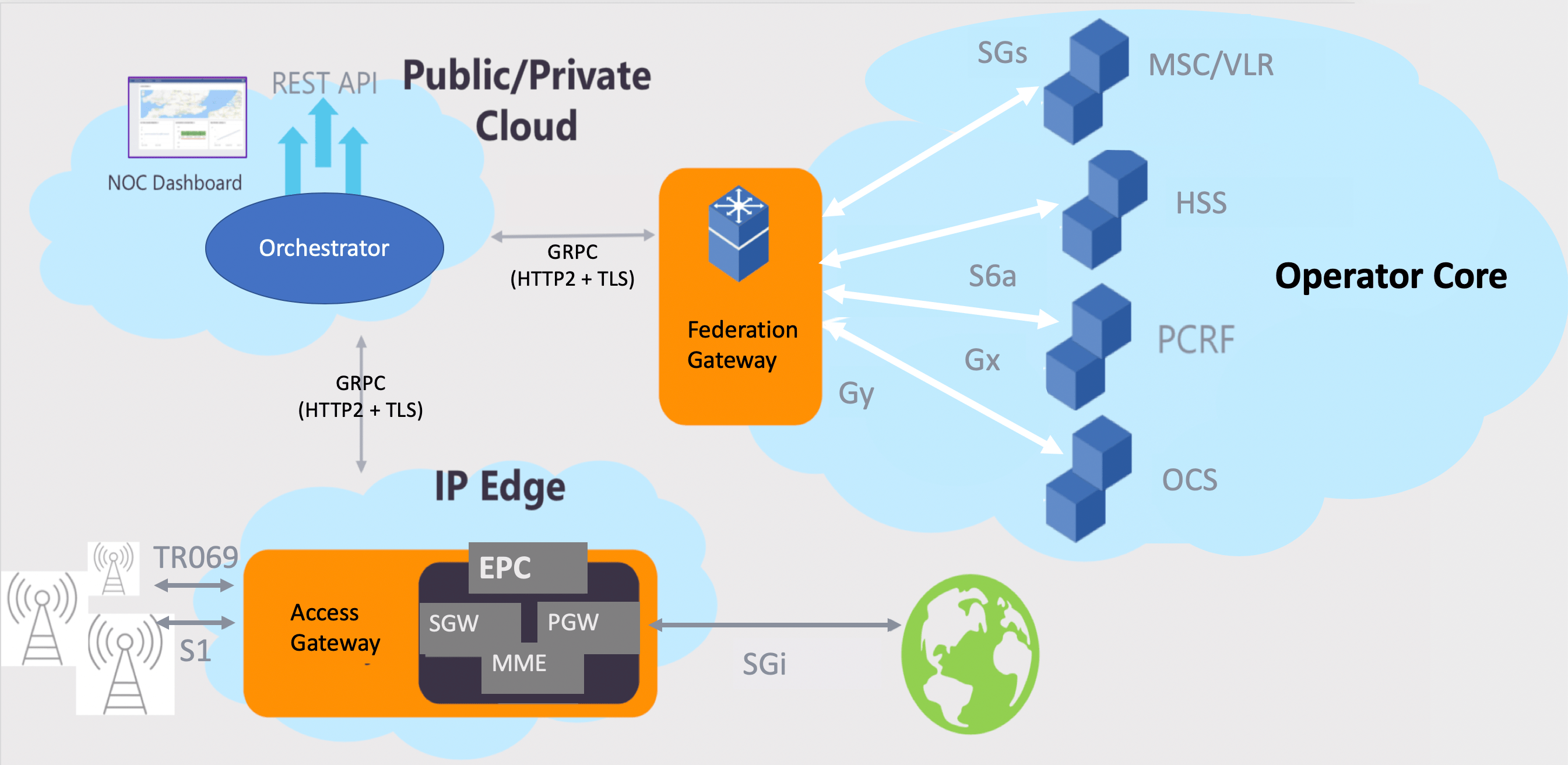

**Magma Architecture**

The figure shows the high-level Magma architecture. Magma is designed to be 3GPP generation and access network (cellular or Wi-Fi) agnostic. It can flexibly support a radio access network with minimal development and deployment effort.

Magma has three major components:

* **Access Gateway**: The Access Gateway (AGW) provides network services and policy enforcement. In an LTE network, the AGW implements an evolved packet core (EPC), and a combination of an AAA and a PGW. It works with existing, unmodified commercial radio hardware.

* **Orchestrator**: Orchestrator is a cloud service that provides a simple and consistent way to configure and monitor the wireless network securely. The Orchestrator can be hosted on a public or private cloud. The metrics acquired through the platform allow you to see the analytics and traffic flows of the wireless users through the Magma web UI.

* **Federation Gateway**: The Federation Gateway integrates the MNO core network with Magma by using standard 3GPP interfaces to existing MNO components. It acts as a proxy between the Magma AGW and the operator's network, so that core functions such as authentication, data plans, policy enforcement, and charging stay uniform between the existing MNO network and the expanded network with Magma.

## Access Gateway (AGW)

The main service within AGW is *magmad*, which orchestrates the lifecycle of all other AGW services. The only service *not* managed by magmad is the *sctpd* service (when the AGW is running in stateless mode).

The major services and components hosted within the AGW include

- OVS shapes the data plane

- *control_proxy* proxies control-plane traffic

- *enodebd* manages eNodeBs

- *health* checks and reports health metrics to Orchestrator

- *mme* encapsulates MME functionality and parts of SPGW control plane features in LTE EPC

- *mobilityd* manages subscriber mobility

- *pipelined* programs OVS

- *policydb* holds policy information

- *sessiond* coordinates session lifecycle

- *subscriberdb* holds subscriber information

Note: The *mme* service will be renamed soon to *accessd*, as its main purpose is to normalize the control signaling with the 4G/5G RAN nodes.

```mermaid

graph LR;

A[[S1AP]] --- AGW ---|control| Orc8r --- FeG --- HSS[(HSS)];

AGW ---|data| Z[[magma_trfserver]];

```

Together, these components help to facilitate and manage data both to and from the user.

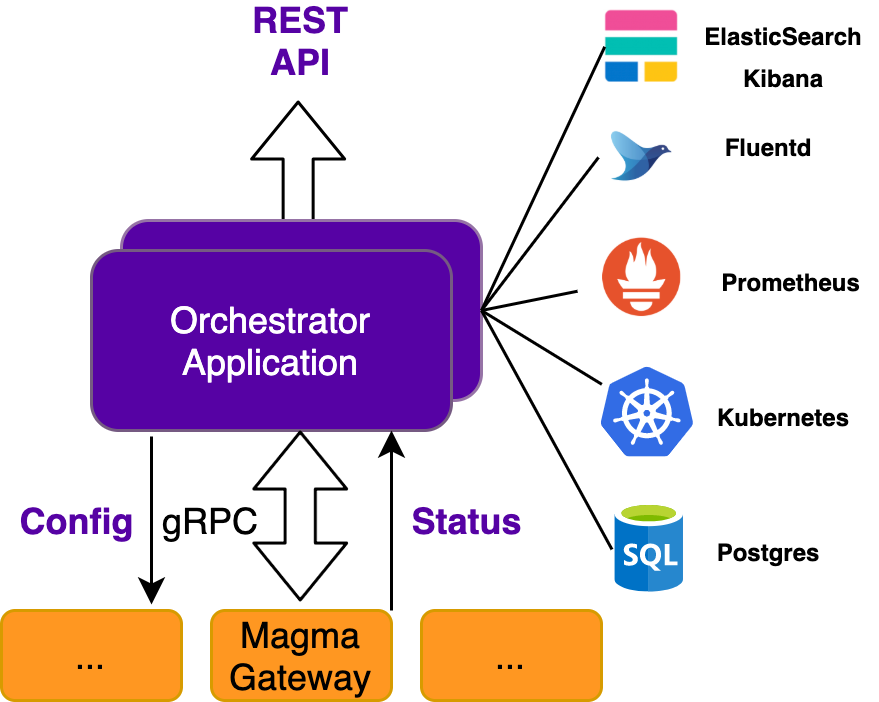

## Orchestrator (Orc8r)

Magma's Orchestrator is a centralized controller for a set of networks. Orchestrator handles the control plane for various types of gateways in Magma. Orchestrator functionality is composed of two primary components

- A standardized, vendor-agnostic [**northbound REST API**](https://app.swaggerhub.com/apis/MagmaCore/Magma/1.0.0) which exposes configuration and metrics for network devices

- **A southbound interface** which applies device configuration and reports device status

At a lower level, Orchestrator supports the following functionality

- Network entity configuration (networks, gateway, subscribers, policies, etc.)

- Metrics querying via Prometheus and Grafana

- Event and log aggregation via Fluentd, Elasticsearch, and Kibana

- Config streaming for gateways, subscribers, policies, etc.

- Device state reporting (metrics and status)

- Request relaying between access gateways and federated gateways
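As a rough illustration of how a client might address the northbound REST API, the sketch below builds a request URL and performs a GET with a client certificate, using only the Python standard library. The `magma/v1` prefix is taken from the tenants metrics URL mentioned later in this document; the `networks` resource, the host, the port, and the helper names are assumptions made for the example, not a definitive client.

```python
import ssl
import urllib.request

# Prefix seen in the tenants metrics URL elsewhere in this document.
API_PREFIX = "/magma/v1"


def build_api_url(host: str, port: int, resource: str) -> str:
    """Join host, port and resource into a northbound API URL (hypothetical helper)."""
    return f"https://{host}:{port}{API_PREFIX}/{resource.strip('/')}"


def fetch(url: str, cert_file: str, key_file: str, ca_file: str) -> bytes:
    """GET `url` while presenting a client certificate (mutual TLS).

    The certificate paths are placeholders supplied by the caller.
    """
    context = ssl.create_default_context(cafile=ca_file)
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    with urllib.request.urlopen(url, context=context) as response:
        return response.read()
```

For example, `build_api_url("localhost", 9443, "networks")` produces a URL that could be passed to `fetch` together with the admin operator certificate and key.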

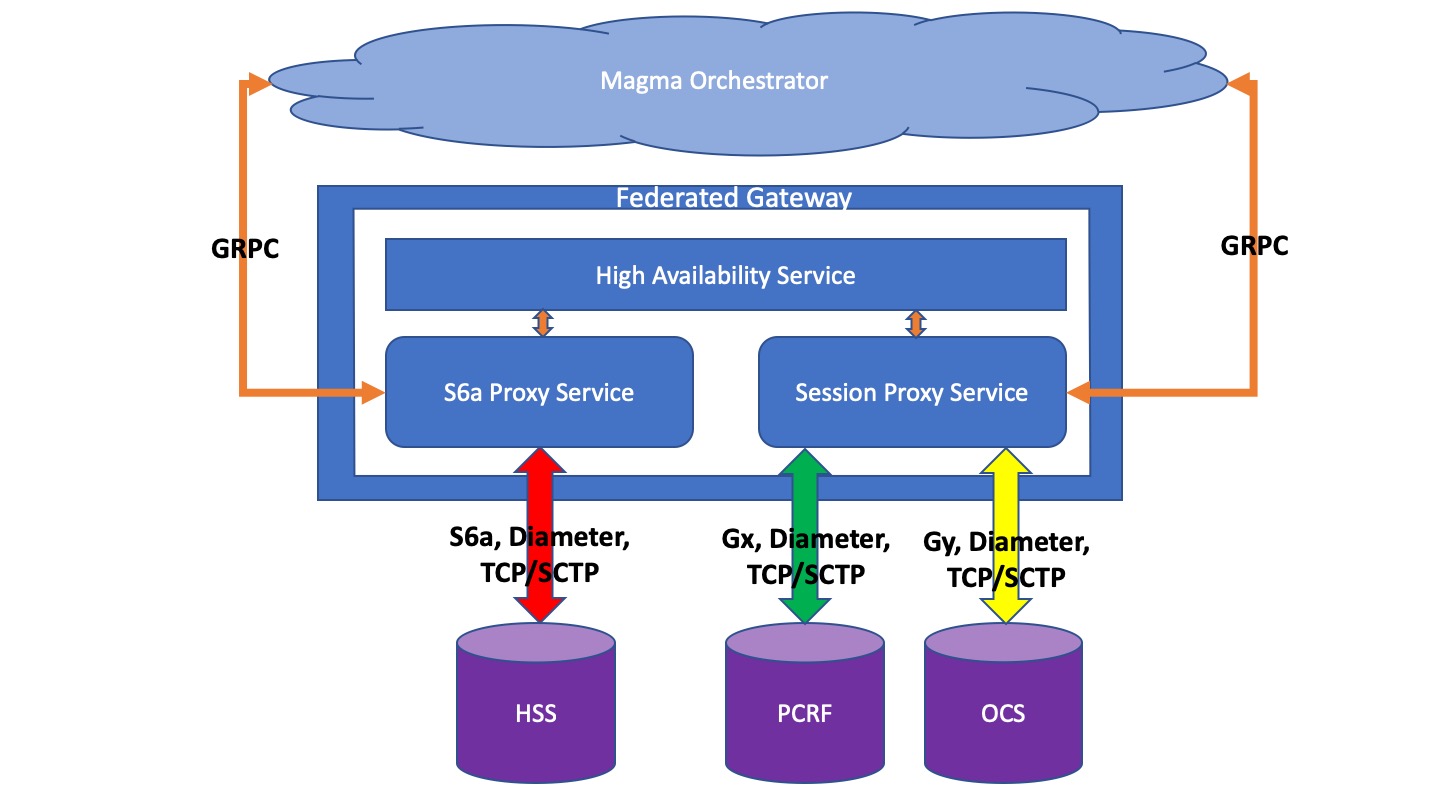

## Federated Gateway (FeG)

The federated gateway provides remote procedure call (gRPC) based interfaces to standard 3GPP components, such as HSS (S6a, SWx), OCS (Gy), and PCRF (Gx). The exposed RPC interface provides versioning and backward compatibility, security (HTTP/2 and TLS), and support for multiple programming languages. The remote procedures provide simple, extensible, multi-language interfaces based on gRPC, which allow developers to avoid dealing with the complexities of 3GPP protocols. Implementing these RPC interfaces allows networks running on Magma to integrate with traditional 3GPP core components.

The Federated Gateway supports the following features and functionalities:

1. Hosting centralized control plane interface towards HSS, PCRF, OCS and MSC/VLR on behalf of distributed AGW/EPCs.

2. Establishing Diameter connections with HSS, PCRF and OCS, either directly (1:1) or via a DRA.

3. Establishing SCTP/IP connection with MSC/VLR.

4. Interfacing with the AGW over gRPC by responding to remote calls from EPC components (MME and sessiond/PCEF), converting these remote calls to 3GPP-compliant messages, and then sending these messages to the appropriate core network components such as HSS, PCRF, OCS and MSC. Similarly, the FeG receives 3GPP-compliant messages from HSS, PCRF, OCS and MSC and converts them to the appropriate gRPC messages before sending them to the AGW.
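The interface-to-component pairing described in this section can be pictured as a small lookup table. The sketch below is only an illustration of that pairing (S6a and SWx towards the HSS, Gy towards the OCS, Gx towards the PCRF); the function name is hypothetical and this is not how the FeG itself is implemented.

```python
# Interface names and their target components, as listed in this section.
INTERFACE_TO_COMPONENT = {
    "S6a": "HSS",
    "SWx": "HSS",
    "Gy": "OCS",
    "Gx": "PCRF",
}


def target_component(interface: str) -> str:
    """Return the core component that terminates a given 3GPP interface."""
    try:
        return INTERFACE_TO_COMPONENT[interface]
    except KeyError:
        raise ValueError(f"unknown interface: {interface}") from None
```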

## Network Management System (NMS)

Magma NMS provides an enterprise-grade GUI for provisioning and operating Magma-based networks. It enables operators to create and manage:

- Organizations

- Networks

- Gateways and eNodeBs

- Subscribers

- Policies and APNs

It also aims to simplify operating Magma networks by providing:

- A dashboard of all access gateways and top-level status to determine overall health of infrastructure components at a glance

- KPIs and status of a specific access gateway to triage potential infrastructure issues that might need escalation

- A dashboard of all subscribers and their top-level status to determine the overall health of internet service at a glance

- KPIs and service status of an individual subscriber to triage issues reported by that subscriber

- Firing alerts displayed on the dashboard to escalate/remediate the issue appropriately

- Fault remediation procedures including the ability to remotely reboot gateways

- The ability to perform gateway software upgrades

- The ability to remotely troubleshoot through [call tracing features](../howtos/call_tracing)

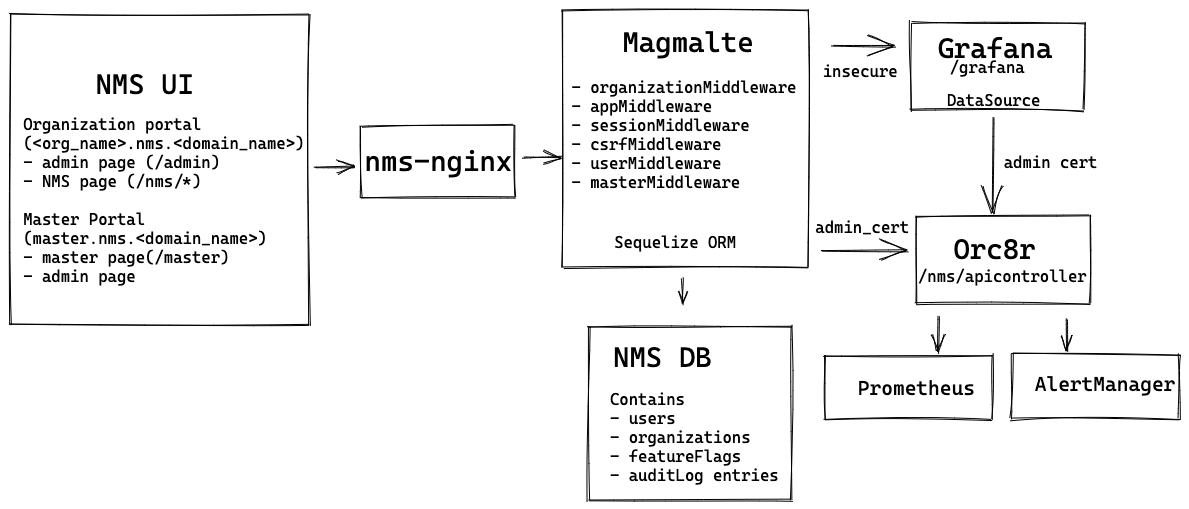

**Architecture Connection**

### NMS UI

NMS UI is a React app that uses hooks to manage its internal state. It contains React components that implement the NMS page, the admin page, and the host page. Most of its front-end components come from the Material UI framework.

#### Host page

Host page contains components for displaying organizations, features, metrics and users in the host portal.

#### NMS Page

NMS page contains components for displaying dashboard, equipment, networks, policies/APNs, call tracing, subscribers, metrics and alerts. It also contains a network selector button to switch between various networks.

#### Admin Page

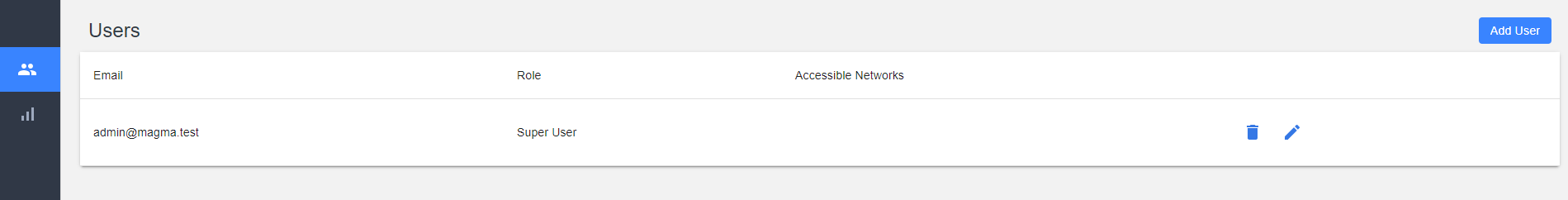

Admin page consists of UI components providing the ability to add networks and users to the respective organizations.

### Magmalte

Magmalte is a microservice built on the Express framework. It contains a set of application-level and router-level middlewares.

It uses the Sequelize ORM to connect to the NMS DB when servicing routes that involve DB interaction.

We will describe the middlewares used in the magmalte app below.

#### AuthenticationMiddleware

Magmalte uses Passport authentication middleware to authenticate incoming requests. Passport authentication within Magmalte currently supports:

- local strategy (the username and password are validated against the information stored in the DB)

- SAML strategy (for enabling enterprise SSO). To enable it, the operator has to configure the organization entrypoint, issuer name, and cert.

#### SessionMiddleware

Session middleware creates the session, sets the session cookie (use a secure cookie in production deployments), and attaches the session object to `req`. The session token (from the environment or a hardcoded value) is used to sign the session cookie. The session information is stored in the NMS DB through Sequelize. Only the user ID is serialized as part of the session information.
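To make the idea of signing a session cookie concrete, here is a minimal sketch using an HMAC over the session ID. It illustrates the general technique only and is not the exact cookie format used by the Express session middleware; the function names and the `id.signature` layout are assumptions for the example.

```python
import hashlib
import hmac
import secrets


def new_session_id() -> str:
    """Generate a random, URL-safe session identifier."""
    return secrets.token_urlsafe(24)


def _mac(session_id: str, secret: str) -> str:
    """HMAC-SHA256 of the session ID under the server-side secret."""
    return hmac.new(secret.encode(), session_id.encode(), hashlib.sha256).hexdigest()


def sign_session_id(session_id: str, secret: str) -> str:
    """Return the cookie value: the session ID followed by its signature."""
    return f"{session_id}.{_mac(session_id, secret)}"


def verify_cookie(cookie: str, secret: str):
    """Return the session ID if the signature matches, otherwise None."""
    session_id, sep, mac = cookie.rpartition(".")
    if not sep:
        return None
    expected = _mac(session_id, secret)
    # Constant-time comparison avoids leaking how many characters matched.
    if hmac.compare_digest(mac.encode(), expected.encode()):
        return session_id
    return None
```

A cookie that was modified by the client, or signed with a different secret, fails verification, so the server can trust the session ID it reads back.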

#### csrfMiddleware

This middleware protects against CSRF attacks. For background, see <https://github.com/pillarjs/understanding-csrf#csrf-tokens>. The Magmalte server includes a CSRF token in its responses to the client, and the client submits the token with each form (see `nms/app/common/axiosConfig.js`).
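The token exchange described above can be sketched in a few lines. This is a simplified illustration of the CSRF token pattern, assuming the token is kept in a per-session dictionary; the names are hypothetical and it does not reproduce Magmalte's middleware.

```python
import hmac
import secrets


def issue_csrf_token(session: dict) -> str:
    """Create a random token, remember it in the session, and return it to the client."""
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token


def is_valid_csrf(session: dict, submitted) -> bool:
    """Accept a request only if it echoes the token stored in the session."""
    expected = session.get("csrf_token")
    if not expected or not submitted:
        return False
    # Constant-time comparison of the stored and submitted tokens.
    return hmac.compare_digest(expected.encode(), submitted.encode())
```

A page served by another origin cannot read the token, so a forged request arrives without it and is rejected.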

#### appMiddleware

Sets generic app-level configuration: it expects request bodies to be JSON, limits the body size to 1 MB, and adds compression to the responses.

#### userMiddleware

Router middlewares to handle login routes for the user

#### hostOrgMiddleware

Router middleware to ensure that only requests from the “host” organization are handled by host routes.

#### Notes on Routes handling

#### apiControllerRouter

All Orc8r API calls are handled by apiControllerRouter. It acts as a proxy and makes calls to Orc8r using the admin certs present on the container. It has a networkID filter to ensure that the networkID in the request belongs to the organization making the request. On the response side, a similar decorator ensures that only networkIDs belonging to the organization are passed back. Additionally, an audit log decorator logs mutating requests to the audit log table in the NMS DB.
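The filtering and audit logging described above can be sketched as three small functions. This is an illustration under stated assumptions, not the router's actual code: the function names are hypothetical, and the audit entry only uses a few of the column names listed in the `AuditLogEntries` schema later in this document.

```python
# Methods treated as mutations, matching the POST/PUT/DELETE note in the schema section.
MUTATING_METHODS = {"POST", "PUT", "DELETE"}


def is_network_allowed(org_network_ids, requested_network_id) -> bool:
    """Request-side filter: the network must belong to the caller's organization."""
    return requested_network_id in set(org_network_ids)


def filter_response_networks(org_network_ids, response_network_ids) -> list:
    """Response-side filter: drop network IDs the organization does not own."""
    allowed = set(org_network_ids)
    return [network_id for network_id in response_network_ids if network_id in allowed]


def audit_entry(method, url, acting_user_id, organization, status_code):
    """Build an audit record for mutating requests; return None for read-only ones."""
    if method.upper() not in MUTATING_METHODS:
        return None
    return {
        "actingUserId": acting_user_id,
        "organization": organization,
        "mutationType": method.upper(),
        "url": url,
        "statusCode": str(status_code),
    }
```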

#### networkRouter

Network creation calls are handled by this router and the network is added to the organization and passed on to the apiController.

#### grafana router

Grafana router is used for displaying the Grafana component within an iframe in the UI. It handles all requests and proxies them to the underlying Grafana service. It attempts to synchronize the default Grafana state when the route is invoked. The default state includes syncing the tenants, user information, datasource and dashboards. The datasource URL is an Orc8r tenants URL (`magma/v1/tenants/<org_name>/metrics`). The NMS certs are also passed along with the datasource information so that Grafana can use them to query Orc8r and retrieve the relevant metrics.

The synchronized default state helps display a set of default dashboards to the users. The default dashboards include dashboards for gateways, subscribers, internal metrics, etc.

Finally, Grafana router uses the synced tenant information to ensure that only information for networks associated with a particular organization is retrieved.

### NMS DB

NMS DB is the underlying SQL store for the Magmalte service. It contains the following tables with their associated schemas:

- Users

```text

id

email character varying(255),

organization character varying(255),

password character varying(255),

role integer,

"networkIDs" json DEFAULT '[]'::json NOT NULL,

tabs json,

"createdAt" timestamp with time zone NOT NULL,

"updatedAt" timestamp with time zone NOT NULL

```

- Organization

```text

id integer NOT NULL,

name character varying(255),

tabs json DEFAULT '[]'::json NOT NULL,

"csvCharset" character varying(255),

"customDomains" json DEFAULT '[]'::json NOT NULL,

"networkIDs" json DEFAULT '[]'::json NOT NULL,

"ssoSelectedType" public."enum_Organizations_ssoSelectedType" DEFAULT 'none'::public."enum_Organizations_ssoSelectedType" NOT NULL,

"ssoCert" text DEFAULT ''::text NOT NULL,

"ssoEntrypoint" character varying(255) DEFAULT ''::character varying NOT NULL,

"ssoIssuer" character varying(255) DEFAULT ''::character varying NOT NULL,

"ssoOidcClientID" character varying(255) DEFAULT ''::character varying NOT NULL,

"ssoOidcClientSecret" character varying(255) DEFAULT ''::character varying NOT NULL,

"ssoOidcConfigurationURL" character varying(255) DEFAULT ''::character varying NOT NULL,

"createdAt" timestamp with time zone NOT NULL,

"updatedAt" timestamp with time zone NOT NULL

```

- FeatureFlags - features enabled for the NMS

```text

id integer NOT NULL,

"featureId" character varying(255) NOT NULL,

organization character varying(255) NOT NULL,

enabled boolean NOT NULL,

"createdAt" timestamp with time zone NOT NULL,

"updatedAt" timestamp with time zone NOT NULL

```

- AuditLogEntries - table containing the mutations (POST/PUT/DELETE) made on NMS

```text

id integer NOT NULL,

"actingUserId" integer NOT NULL,

organization character varying(255) NOT NULL,

"mutationType" character varying(255) NOT NULL,

"objectId" character varying(255) NOT NULL,

"objectDisplayName" character varying(255) NOT NULL,

"objectType" character varying(255) NOT NULL,

"mutationData" json NOT NULL,

url character varying(255) NOT NULL,

"ipAddress" character varying(255) NOT NULL,

status character varying(255) NOT NULL,

"statusCode" character varying(255) NOT NULL,

"createdAt" timestamp with time zone NOT NULL,

"updatedAt" timestamp with time zone NOT NULL

```

Additionally, the DB contains a session table that holds the current set of sessions.

```text

sid character varying(36) NOT NULL,

expires timestamp with time zone,

data text,

"createdAt" timestamp with time zone NOT NULL,

"updatedAt" timestamp with time zone NOT NULL

```
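As a hedged illustration of how one of these tables might be queried, the sketch below recreates the `FeatureFlags` columns in an in-memory SQLite database. SQLite stands in for the real NMS DB only so the example is self-contained; the column types are simplified, and the helper names and the `alerts` feature ID are hypothetical.

```python
import sqlite3


def open_feature_flag_db() -> sqlite3.Connection:
    """Create an in-memory stand-in for the FeatureFlags table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE FeatureFlags (
            id INTEGER PRIMARY KEY,
            featureId TEXT NOT NULL,
            organization TEXT NOT NULL,
            enabled INTEGER NOT NULL,
            createdAt TEXT NOT NULL,
            updatedAt TEXT NOT NULL
        )
        """
    )
    return conn


def set_flag(conn, organization: str, feature_id: str, enabled: bool) -> None:
    """Record a feature flag value for an organization."""
    conn.execute(
        "INSERT INTO FeatureFlags (featureId, organization, enabled, createdAt, updatedAt) "
        "VALUES (?, ?, ?, datetime('now'), datetime('now'))",
        (feature_id, organization, int(enabled)),
    )


def is_enabled(conn, organization: str, feature_id: str) -> bool:
    """Return the most recently recorded value, or False if the flag is unknown."""
    row = conn.execute(
        "SELECT enabled FROM FeatureFlags WHERE organization = ? AND featureId = ? "
        "ORDER BY id DESC LIMIT 1",
        (organization, feature_id),
    ).fetchone()
    return bool(row[0]) if row else False
```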

# Magma Hands-On

## System Design

**Magma Setup**

**System Specification**

All VMs are accessed remotely over SSH on port `17617` using a private key. In addition, all VMs run on top of an OpenStack private cloud.

| Feature | VM Orchestrator | VM AGW | VM srsRAN |
|---------------------------|-----------------------------|-----------------------------|-----------------------------|
| OS Used | Ubuntu 22.04 LTS | Ubuntu 22.04 LTS | Ubuntu 22.04 LTS |
| vCPU | 8 | 4 | 4 |
| RAM (GB) | 16 | 8 | 6 |
| Disk (GB) | 100 | 70 | 80 |
| Home user | ubuntu/magma | ubuntu | ubuntu |
| Number of NICs | 2 (ens3, ens4) | 2 (ens3, ens4) | 2 (ens3, ens4) |

**Network Specification**

| VM Name - Function | Interface | IP Address | MAC Address |
|-------|-----------|-------------|-------------------|
| Orchestrator - LB | ens3 | 10.0.1.23 | fa:16:3e:cf:1d:de |
| Orchestrator - OAM | ens4 | 10.0.2.180 | fa:16:3e:23:d2:c2 |
| AGW - Free | ens160 | 10.0.2.102 | fa:16:3e:02:01:01 |
| AGW - OAM | ens192 | 10.0.3.102 | fa:16:3e:02:02:02 |
| srsRAN-UE-1 | ens160 | 10.0.2.103 | fa:16:3e:03:01:01 |
| srsRAN-UE-2 | ens192 | 10.0.3.103 | fa:16:3e:03:02:02 |

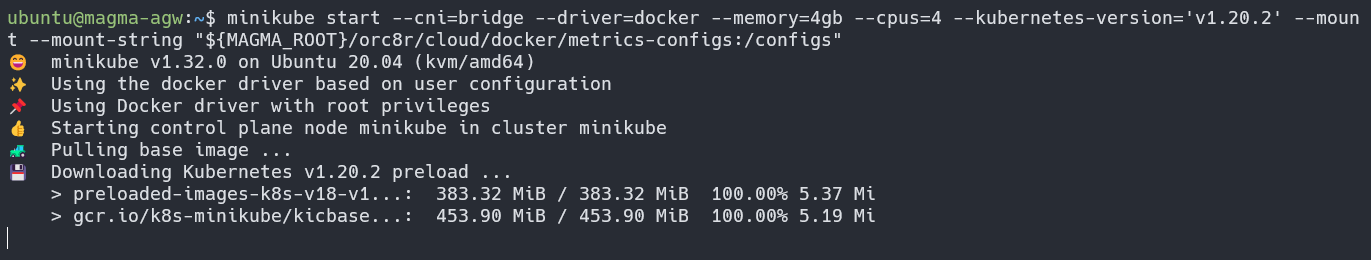
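To see at a glance which interfaces in the network specification can reach each other directly, the addresses can be grouped by subnet. The sketch below uses the IP addresses from the table; the `/24` prefix length is an assumption (the table does not state a netmask), and the VM labels are shortened for readability.

```python
import ipaddress

# Addresses copied from the network specification table.
INTERFACES = {
    ("Orchestrator", "ens3"): "10.0.1.23",
    ("Orchestrator", "ens4"): "10.0.2.180",
    ("AGW", "ens160"): "10.0.2.102",
    ("AGW", "ens192"): "10.0.3.102",
    ("srsRAN", "ens160"): "10.0.2.103",
    ("srsRAN", "ens192"): "10.0.3.103",
}


def group_by_subnet(interfaces, prefix_len: int = 24) -> dict:
    """Map each subnet to the interfaces whose address falls inside it.

    prefix_len=24 is an assumption; adjust it to the real netmask.
    """
    groups = {}
    for (vm, nic), address in interfaces.items():
        network = ipaddress.ip_network(f"{address}/{prefix_len}", strict=False)
        groups.setdefault(str(network), []).append(f"{vm}:{nic}")
    return groups
```

Under the `/24` assumption, the Orchestrator OAM interface, the first AGW interface, and the first srsRAN interface all land in `10.0.2.0/24`.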

## Orchestrator Setup

**Step-by-Step Orchestrator Installation**

> Installation using Ansible

1. Clone the `magma-galaxy` GitHub repository

```bash

git clone https://github.com/ShubhamTatvamasi/magma-galaxy.git

cd magma-galaxy/

```

2. Edit `hosts.yml` to set the host IP address, SSH user, and SSH port

```bash

vi hosts.yml

```

<details><summary>hosts.yml</summary>

```yaml
---
all:
  # Update your VM or bare-metal IP address
  hosts: 10.0.2.180
  vars:
    ansible_user: "ubuntu"
    ansible_port: "17617"
    orc8r_domain: "galaxy.shubhamtatvamasi.com"
    # deploy_on_aws: true
    # deploy_domain_proxy: true
    # magma_docker_registry: "shubhamtatvamasi"
    # magma_docker_tag: "666a3f3"
    # orc8r_helm_repo: "https://shubhamtatvamasi.github.io/magma-charts-04-09-2023"
```

</details>

3. Run `deploy-orc8r.sh` to start the deployment

```bash

sudo bash deploy-orc8r.sh

```

Accept the default answer for each prompt, except for the LoadBalancer IP.

4. Create an SSH key so that the `ubuntu` user can log in as the `magma` user without a password

```bash

ssh-keygen

# Generating public/private rsa key pair.

# Enter file in which to save the key (/root/.ssh/id_rsa):

# Enter passphrase (empty for no passphrase):

# Enter same passphrase again:

# Your identification has been saved in /root/.ssh/id_rsa.

# Your public key has been saved in /root/.ssh/id_rsa.pub.

# The key fingerprint is:

# SHA256:t0qr5YF1pg9GqE2MvwVkjzamiNYUIvLUsc/KX47znis root@kubernetes

# The key's randomart image is:

# +---[RSA 2048]----+

# | . |

# | . o |

# |o...o o |

# |oo. .B + |

# | ... % S + |

# | .oo X * = . |

# |....= + X . |

# |. .E@ B |

# | =*X.. |

# +----[SHA256]-----+

# Copy the public key to ubuntu authorized_keys

cat ~/.ssh/id_rsa.pub >> /home/ubuntu/.ssh/authorized_keys

# Copy the public key to magma authorized_keys

sudo su

cat /home/ubuntu/.ssh/id_rsa.pub >> /home/magma/.ssh/authorized_keys

```

Test whether you can access the `magma` user without a password from the `ubuntu` user.

```bash

ssh magma@10.0.2.180 -p 17617

```

5. Create an SSH key so that the `magma` user can log in as the `ubuntu` user without a password

```bash

ssh-keygen

# Generating public/private rsa key pair.

# Enter file in which to save the key (/root/.ssh/id_rsa):

# Enter passphrase (empty for no passphrase):

# Enter same passphrase again:

# Your identification has been saved in /root/.ssh/id_rsa.

# Your public key has been saved in /root/.ssh/id_rsa.pub.

# The key fingerprint is:

# SHA256:t0qr5YF1pg9GqE2MvwVkjzamiNYUIvLUsc/KX47znis root@kubernetes

# The key's randomart image is:

# +---[RSA 2048]----+

# | . |

# | . o |

# |o...o o |

# |oo. .B + |

# | ... % S + |

# | .oo X * = . |

# |....= + X . |

# |. .E@ B |

# | =*X.. |

# +----[SHA256]-----+

# Copy the public key to magma authorized_keys

cat ~/.ssh/id_rsa.pub >> /home/magma/.ssh/authorized_keys

# Copy the public key to ubuntu authorized_keys

sudo su

cat /home/magma/.ssh/id_rsa.pub >> /home/ubuntu/.ssh/authorized_keys

```

Test whether you can access the `ubuntu` user without a password from the `magma` user.

```bash

ssh ubuntu@10.0.2.180 -p 17617

```

6. Log in as the `magma` user and edit the RKE cluster configuration

```bash

ssh magma@10.0.2.180 -p 17617

vi magma-galaxy/rke/cluster.yml

```

<details><summary>cluster.yml</summary>

```yaml
# If you intended to deploy Kubernetes in an air-gapped environment,
# please consult the documentation on how to configure custom RKE images.
nodes:
- address: 10.0.2.180
  port: "17617"
  internal_address: ""
  role:
  - controlplane
  - worker
  - etcd
  hostname_override: ""
  user: magma
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: /home/magma/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
services:
  etcd:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    pod_security_configuration: ""
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: ['/var/openebs/local:/var/openebs/local']
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
network:
  plugin: calico
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []
system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.5.9
  alpine: rancher/rke-tools:v0.1.96
  nginx_proxy: rancher/rke-tools:v0.1.96
  cert_downloader: rancher/rke-tools:v0.1.96
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.96
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.22.28
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.22.28
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.22.28
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.6
  coredns: rancher/mirrored-coredns-coredns:1.10.1
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.6
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.22.28
  kubernetes: rancher/hyperkube:v1.27.8-rancher2
  flannel: rancher/mirrored-flannel-flannel:v0.21.4
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher8
  calico_node: rancher/mirrored-calico-node:v3.26.3
  calico_cni: rancher/calico-cni:v3.26.3-rancher1
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.26.3
  calico_ctl: rancher/mirrored-calico-ctl:v3.26.3
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.26.3
  canal_node: rancher/mirrored-calico-node:v3.26.3
  canal_cni: rancher/calico-cni:v3.26.3-rancher1
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.26.3
  canal_flannel: rancher/mirrored-flannel-flannel:v0.21.4
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.26.3
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.7
  ingress: rancher/nginx-ingress-controller:nginx-1.9.4-rancher1
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v20231011-8b53cabe0
  metrics_server: rancher/mirrored-metrics-server:v0.6.3
  windows_pod_infra_container: rancher/mirrored-pause:3.7
  aci_cni_deploy_container: noiro/cnideploy:6.0.3.2.81c2369
  aci_host_container: noiro/aci-containers-host:6.0.3.2.81c2369
  aci_opflex_container: noiro/opflex:6.0.3.2.81c2369
  aci_mcast_container: noiro/opflex:6.0.3.2.81c2369
  aci_ovs_container: noiro/openvswitch:6.0.3.2.81c2369
  aci_controller_container: noiro/aci-containers-controller:6.0.3.2.81c2369
  aci_gbp_server_container: ""
  aci_opflex_server_container: ""
ssh_key_path: /home/magma/magma-galaxy/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
enable_cri_dockerd: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: none
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
  default_ingress_class: null
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false
dns: null
```

</details>

7. After that, you **must** change the permissions of the Docker socket referenced by `docker_socket: /var/run/docker.sock`

```bash

sudo chmod 666 /var/run/docker.sock

```

8. Switch back to the `ubuntu` user and run `deploy-orc8r.sh` again

```bash

sudo bash deploy-orc8r.sh

```

Accept the default answer for each prompt, except for the LoadBalancer IP.

9. Edit `deploy-orc8r.sh` to match your environment

```bash

vi deploy-orc8r.sh

```

Run this modified script if you are running the deployment for the second time because the Ansible task that installs Docker or RKE failed in the previous step.

<details><summary>deploy-orc8r.sh</summary>

```bash
#!/usr/bin/env bash

set -e

# Check if the system is Linux
if [ $(uname) != "Linux" ]; then
  echo "This script is only for Linux"
  exit 1
fi

# Run as root user
if [ $(id -u) != 0 ]; then
  echo "Please run as root user"
  exit 1
fi

ORC8R_DOMAIN="magma.local"
NMS_ORGANIZATION_NAME="magma-test"
NMS_EMAIL_ID_AND_PASSWORD="admin"
ORC8R_IP=$(ip a s $(ip r | head -n 1 | awk '{print $5}') | awk '/inet/ {print $2}' | cut -d / -f 1 | head -n 1)
GITHUB_USERNAME="ShubhamTatvamasi"
MAGMA_ORC8R_REPO="magma-galaxy"
MAGMA_USER="magma"
MAGMA_PORT="17617"
HOSTS_FILE="hosts.yml"

# Take input from user
read -rp "Your Magma Orchestrator domain name: " -ei "${ORC8R_DOMAIN}" ORC8R_DOMAIN
read -rp "NMS organization(subdomain) name you want: " -ei "${NMS_ORGANIZATION_NAME}" NMS_ORGANIZATION_NAME
read -rp "Set your email ID for NMS: " -ei "${NMS_EMAIL_ID_AND_PASSWORD}" NMS_EMAIL_ID
read -rp "Set your password for NMS: " -ei "${NMS_EMAIL_ID_AND_PASSWORD}" NMS_PASSWORD
read -rp "Do you wish to install latest Orc8r build: " -ei "Yes" LATEST_ORC8R
read -rp "Set your LoadBalancer IP: " -ei "${ORC8R_IP}" ORC8R_IP

case ${LATEST_ORC8R} in
  [yY]*)
    ORC8R_VERSION="latest"
    ;;
  [nN]*)
    ORC8R_VERSION="stable"
    ;;
esac

# The commented-out commands below were already done on the first run.

# Add repos for installing yq and ansible
#add-apt-repository --yes ppa:rmescandon/yq
#add-apt-repository --yes ppa:ansible/ansible

# Install yq and ansible
#apt install yq ansible -y

# Create magma user and give sudo permissions
#useradd -m ${MAGMA_USER} -s /bin/bash -G sudo
#echo "${MAGMA_USER} ALL=(ALL) NOPASSWD:ALL" >> /etc/sudoers

# switch to magma user
su - ${MAGMA_USER} -c bash <<_
# Generate SSH key for magma user
#ssh-keygen -t rsa -f ~/.ssh/id_rsa -N ''
#cp ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys

# Clone Magma Galaxy repo
git clone https://github.com/${GITHUB_USERNAME}/${MAGMA_ORC8R_REPO} --depth 1
cd ~/${MAGMA_ORC8R_REPO}

# Deploy latest Orc8r build
if [ "${ORC8R_VERSION}" == "latest" ]; then
  sed "s/# magma_docker/magma_docker/g" -i ${HOSTS_FILE}
  sed "s/# orc8r_helm_repo/orc8r_helm_repo/" -i ${HOSTS_FILE}
fi

# export variables for yq
export ORC8R_IP=${ORC8R_IP}
export MAGMA_USER=${MAGMA_USER}
export MAGMA_PORT=${MAGMA_PORT}
export ORC8R_DOMAIN=${ORC8R_DOMAIN}
export NMS_ORGANIZATION_NAME=${NMS_ORGANIZATION_NAME}
export NMS_EMAIL_ID=${NMS_EMAIL_ID}
export NMS_PASSWORD=${NMS_PASSWORD}

# Update values to the config file
yq e '.all.hosts = env(ORC8R_IP)' -i ${HOSTS_FILE}
yq e '.all.vars.ansible_user = env(MAGMA_USER)' -i ${HOSTS_FILE}
yq e '.all.vars.ansible_port = env(MAGMA_PORT)' -i ${HOSTS_FILE}
yq e '.all.vars.orc8r_domain = env(ORC8R_DOMAIN)' -i ${HOSTS_FILE}
yq e '.all.vars.nms_org = env(NMS_ORGANIZATION_NAME)' -i ${HOSTS_FILE}
yq e '.all.vars.nms_id = env(NMS_EMAIL_ID)' -i ${HOSTS_FILE}
yq e '.all.vars.nms_pass = env(NMS_PASSWORD)' -i ${HOSTS_FILE}

# Deploy Magma Orchestrator
ansible-playbook deploy-orc8r.yml
_
```

</details>

10. Access the API to make sure that it is running

- Now make sure that the API and your certs are working

```bash

# Tab 1

kubectl -n magma port-forward $(kubectl -n magma get pods -l app.kubernetes.io/component=nginx-proxy -o jsonpath="{.items[0].metadata.name}") 9443:9443

```

```bash

# Tab 2

# MAGMA_ROOT is assumed to point at your Magma checkout
curl -k \
  --cert ${MAGMA_ROOT}/orc8r/cloud/helm/orc8r/charts/secrets/.secrets/certs/admin_operator.pem \
  --key ${MAGMA_ROOT}/orc8r/cloud/helm/orc8r/charts/secrets/.secrets/certs/admin_operator.key.pem \
  https://localhost:9443

# Expected output: "hello"

```

11. Access NMS in your browser

- Create an admin user

```bash

kubectl exec -it -n magma \
  $(kubectl get pod -n magma -l app.kubernetes.io/component=controller -o jsonpath="{.items[0].metadata.name}") -- \
  /var/opt/magma/bin/accessc add-existing -admin -cert /var/opt/magma/certs/admin_operator.pem admin_operator

```

- Port-forward nginx

```bash

kubectl -n magma port-forward svc/nginx-proxy 8443:443

```

Log in to NMS at `https://magma-test.localhost:8443` using the credentials `admin@magma.test` / `password1234`.

## Access Gateway Setup

**Step-by-Step AGW Installation**

> Setting up AGW on Docker Environment

1. Complete the pre-installation steps

Copy your `rootCA.pem` file from orc8r to the following location:

```bash

mkdir -p /var/opt/magma/certs

vim /var/opt/magma/certs/rootCA.pem

```

2. Run AGW installation

Download and run the AGW Docker install script:

```bash

wget https://github.com/magma/magma/raw/v1.8/lte/gateway/deploy/agw_install_docker.sh

bash agw_install_docker.sh

```

3. Configure AGW

Once you see the output `Reboot this machine to apply kernel settings`, reboot your AGW host.

- Create `control_proxy.yml` file with your orc8r details:

```bash

cat << EOF | sudo tee /var/opt/magma/configs/control_proxy.yml

cloud_address: controller.orc8r.magmacore.link

cloud_port: 443

bootstrap_address: bootstrapper-controller.orc8r.magmacore.link

bootstrap_port: 443

fluentd_address: fluentd.orc8r.magmacore.link

fluentd_port: 24224

rootca_cert: /var/opt/magma/certs/rootCA.pem

EOF

```

- Start your access gateway

```bash

cd /var/opt/magma/docker

sudo docker compose --compatibility up -d

```

- Get the hardware ID and challenge key, then add the AGW in your Orc8r:

```bash

docker exec magmad show_gateway_info.py

```

- Then restart your access gateway:

```bash

sudo docker compose --compatibility up -d --force-recreate

```
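The `control_proxy.yml` created above follows a regular pattern: the controller, bootstrapper, and Fluentd host names are all derived from one Orc8r domain. The sketch below renders the same file content from a domain name. The function name and its defaults are assumptions made for illustration; the keys, ports, and certificate path are the ones used in the heredoc above.

```python
def render_control_proxy(
    domain: str,
    cloud_port: int = 443,
    bootstrap_port: int = 443,
    fluentd_port: int = 24224,
    rootca_cert: str = "/var/opt/magma/certs/rootCA.pem",
) -> str:
    """Return control_proxy.yml content for the given Orc8r domain."""
    lines = [
        f"cloud_address: controller.{domain}",
        f"cloud_port: {cloud_port}",
        f"bootstrap_address: bootstrapper-controller.{domain}",
        f"bootstrap_port: {bootstrap_port}",
        f"fluentd_address: fluentd.{domain}",
        f"fluentd_port: {fluentd_port}",
        f"rootca_cert: {rootca_cert}",
    ]
    return "\n".join(lines) + "\n"
```

Calling `render_control_proxy("orc8r.magmacore.link")` yields the same seven settings as the example file, which makes it easy to generate the file for a different domain.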

## Connecting AGW to NMS

> IN PROGRESS

### Motivation

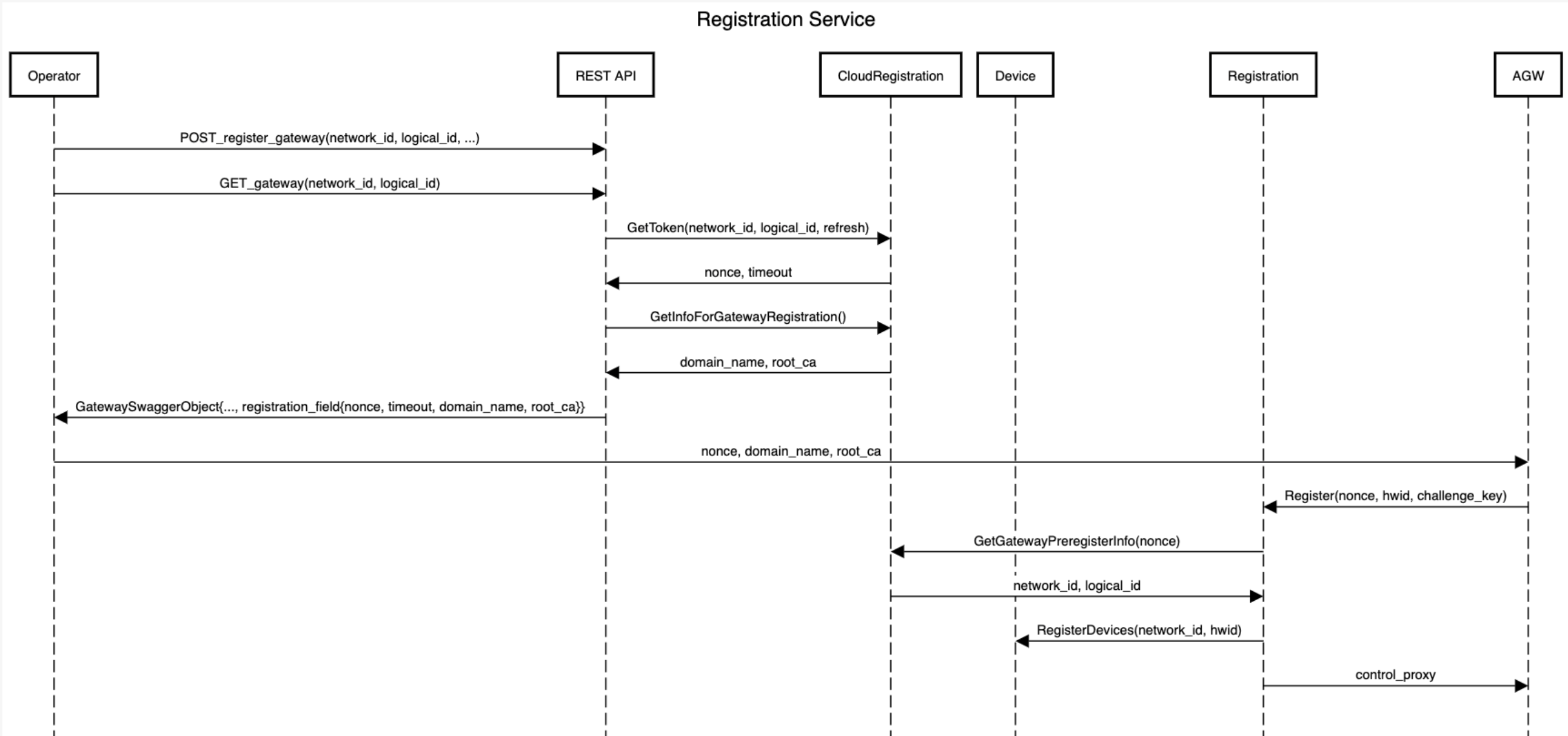

Previously, to register a gateway, the operator would have to gather the gateway information (both the hardware ID and the challenge key) through `show_gateway_info.py` script, then provision the gateway with the correct values of `control_proxy.yml` and `rootCA.pem`.

Then, the operator would have to register each gateway through the NMS UI and validate the gateway registration by running `checkin_cli.py`.

In efforts to simplify gateway registration, the new registration process only requires two steps: registering the gateway at Orc8r and then running `register.py` at the gateway.

### Solution Overview 1

The overview of gateway registration is as follows:

Note: The operator should set the per-tenant default `control_proxy.yml` through the API endpoint `/tenants/{tenant_id}/control_proxy` as a prerequisite.

The control proxy must have `\n` characters as line breaks. Here is a sample request body:

```json

{

"control_proxy": "nghttpx_config_location: /var/tmp/nghttpx.conf\n\nrootca_cert: /var/opt/magma/certs/rootCA.pem\ngateway_cert: /var/opt/magma/certs/gateway.crt\ngateway_key: /var/opt/magma/certs/gateway.key\nlocal_port: 8443\ncloud_address: controller.magma.test\ncloud_port: 7443\n\nbootstrap_address: bootstrapper-controller.magma.test\nbootstrap_port: 7444\nproxy_cloud_connections: True\nallow_http_proxy: True"

}

```
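Writing the `\n` escapes by hand is error-prone, so one option is to let a JSON encoder produce them from the multi-line file content. The sketch below shows that conversion with the Python standard library; the function name is hypothetical, and only the `control_proxy` field name comes from the sample request body above.

```python
import json


def control_proxy_request_body(control_proxy_yaml: str) -> str:
    """Wrap multi-line control_proxy.yml text in the JSON request body.

    json.dumps escapes real line breaks as the two characters backslash and n,
    which is the form the sample request body uses.
    """
    normalized = control_proxy_yaml.replace("\r\n", "\n").strip("\n")
    return json.dumps({"control_proxy": normalized})
```

Decoding the body again with `json.loads` returns the original multi-line text, so nothing is lost in the round trip.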

1. The operator registers a gateway partially (i.e., without its device field) at Orc8r through an API call. The operator receives a registration token, the `rootCA.pem`, and the Orc8r's domain name in the response body under the `registration_info` field. For example:

```json

{

"registration_info": {

"domain_name": "magma.test",

"registration_token": "reg_h9h2fhfUVuS9jZ8uVbhV3vC5AWX39I",

"root_ca": "-----BEGIN CERTIFICATE-----\nMIIDNTCCAh2gAwIBAgIUAX6gmuNG3v/vv7uZjL5sUKYflJ0wDQYJKoZIhvcNAQEL\nBQAwKTELMAkGA1UEBhMCVVMxGjAYBgNVBAMMEXJvb3RjYS5tYWdtYS50ZXN0MCAX\nDTIxMTAwMTIwMzYyOVoYDzMwMjEwMjAxMjAzNjI5WjApMQswCQYDVQQGEwJVUzEa\nMBgGA1UEAwwRcm9vdGNhLm1hZ21hLnRlc3QwggEiMA0GCSqGSIb3DQEBAQUAA4IB\nDwAwggEKAoIBAQDN6k/+7buO/KwgJgRjE/LM5wmNvMWpxDfKJpdpUH6DrjQkEpZB\n8E8Ts9qwR6RSTh8H/jL/qkoHpTbIdHZhOtayY/t/zreIClAytWyJSaJfGoRfXzsV\nyzjD7Bk79YrgAja9cAJcqy26gURQsB173opnlKTzMCfiirpY3gbiJEy74s0M6uII\njGvxx1uvXauFBO5mbbAPmxG4fFXTBGJMcxvHtdU8Vizf2YkZXqoXni0gJ0TJFK4O\nVeZe8EWuUXsD1iEbxz/H752I4yfQ2Djuj6emjRJlAeKnPsQWSsR4Qt3Po0R5YOmn\nEEsOmlfH6vOm3eiYrhxlIQ7uEFw760IDe0OLAgMBAAGjUzBRMB0GA1UdDgQWBBT6\nVQqTB+bVV7foz2xPo3sUfAqnhDAfBgNVHSMEGDAWgBT6VQqTB+bVV7foz2xPo3sU\nfAqnhDAPBgNVHRMBAf8EBTADAQH/MA0GCSqGSIb3DQEBCwUAA4IBAQASxJHc6JTk\n5iZJOBEXzl8iWqIO9K8z3y46Jtc9MA7DnYO5v6HvYE8WnFn/FRui/MLiOb1OAsVk\nJpNHRkJJMB1KxD5RkyfXTcIE+LSu/XUJQDc2F4RnZPYhPExK8tcmqHTDV78m+LHl\nswOIjhQVn9r6TncsfOhLs0YkqikHSJz1i4foJGFiOmM5R91KuOvwOG4qQ1Xw1J64\n7sHA4OElf/CIt0ul7xfAlzbLXOaPBb8z82dR5H28+3srGayPgauM9EGIHulm1J53\nM4uFtM9sA/X/EWMLF1T5ACDTjpD74yhxX98hFNlDuABacer/RN1UB/iTG7eMMhIO\nWLRlFB4QVm8w\n-----END CERTIFICATE-----\n"

}

}

```

The registration token expires every 30 minutes and automatically refreshes every time the operator fetches this unregistered gateway.
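The response fields can be pulled apart with the standard library. The following is a sketch only: the helper names `parse_registration_info` and `save_root_ca` are hypothetical, and the code assumes the response body has exactly the shape shown above.

```python
import json
from pathlib import Path

REQUIRED_FIELDS = {"domain_name", "registration_token", "root_ca"}


def parse_registration_info(response_body: str) -> dict:
    """Return the registration_info object from the response body shown above."""
    info = json.loads(response_body)["registration_info"]
    missing = REQUIRED_FIELDS - info.keys()
    if missing:
        raise ValueError(f"registration_info is missing fields: {sorted(missing)}")
    return info


def save_root_ca(info: dict, destination: Path) -> Path:
    """Write the PEM text to disk; the destination path is the caller's choice."""
    destination.write_text(info["root_ca"])
    return destination
```

Because the token refreshes whenever the unregistered gateway is fetched, it is safest to parse a fresh response right before running `register.py`.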

2. The operator runs the `register.py` script at the gateway with the registration token and its Orc8r's domain name.

```shell

MAGMA-VM [/home/vagrant]$ sudo /home/vagrant/build/python/bin/python3 ~/magma/orc8r/gateway/python/scripts/register.py [-h] [--ca-file CA_FILE] [--cloud-port CLOUD_PORT] [--no-control-proxy] DOMAIN_NAME REGISTRATION_TOKEN

```

The operator can optionally set the root CA file with the `--ca-file CA_FILE` flag or disable writing to the control proxy file with the `--no-control-proxy` flag.

`sudo` permission is necessary because the script needs write access to the file `/var/opt/magma/configs/control_proxy.yml` for configuring the gateway.

For example, in a testing environment with the `rootCA.pem` and `control_proxy.yml` configured, the operator could run

```shell

MAGMA-VM [/home/vagrant]$ sudo /home/vagrant/build/python/bin/python3 ~/magma/orc8r/gateway/python/scripts/register.py magma.test reg_t5S4zjhD0tXRTmkYKQoN91FmWnQSK2 --cloud-port 7444 --no-control-proxy

```
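When registration is scripted, the invocation above can be assembled as an argument list. This sketch uses only the flags from the usage line above. The interpreter and script paths are copied from the example and will differ on other gateways, and `build_register_command` is a hypothetical helper name.

```python
import os
import shlex

# Paths copied from the example above; adjust them for your gateway.
PYTHON_BIN = "/home/vagrant/build/python/bin/python3"
REGISTER_SCRIPT = os.path.expanduser("~/magma/orc8r/gateway/python/scripts/register.py")


def build_register_command(domain_name, registration_token,
                           ca_file=None, cloud_port=None, no_control_proxy=False):
    """Assemble the register.py invocation using only the flags in the usage line."""
    cmd = ["sudo", PYTHON_BIN, REGISTER_SCRIPT, domain_name, registration_token]
    if ca_file is not None:
        cmd += ["--ca-file", ca_file]
    if cloud_port is not None:
        cmd += ["--cloud-port", str(cloud_port)]
    if no_control_proxy:
        cmd.append("--no-control-proxy")
    return cmd


command = build_register_command(
    "magma.test", "reg_t5S4zjhD0tXRTmkYKQoN91FmWnQSK2",
    cloud_port=7444, no_control_proxy=True,
)
print(shlex.join(command))  # shell-quoted form, handy for logging
```

The list form can be passed directly to `subprocess.run(command, check=True)`, which avoids shell quoting problems with the token.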

Upon success, the script will print the gateway information that was registered and run `checkin_cli.py` automatically. Below is an example of the output of a successful register attempt.

```shell

> Registered gateway

Hardware ID

-----------

id: "aabf4fb9-0933-4039-95a8-b87ae7144d71"

Challenge Key

-----------

key_type: SOFTWARE_ECDSA_SHA256

key: "MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEQrZVdmuZpvciEXdznTErWUelOcgdBwPKQfOZDL7Wkl8ALSBtKvJWDPyhS6rkW9/xJdgPD4QK3Jqc4Eox5NT6SVYYuHWLv7b28493rwFvuC2+YurmfYj+LZh9VBVTvlwk"

Control Proxy

-----------

nghttpx_config_location: /var/tmp/nghttpx.conf

rootca_cert: /var/opt/magma/certs/rootCA.pem

gateway_cert: /var/opt/magma/certs/gateway.crt

gateway_key: /var/opt/magma/certs/gateway.key

local_port: 8443

cloud_address: controller.magma.test

cloud_port: 7443

bootstrap_address: bootstrapper-controller.magma.test

bootstrap_port: 7444

proxy_cloud_connections: True

allow_http_proxy: True

> Waiting 60.0 seconds for next bootstrap

> Running checkin_cli

1. -- Testing TCP connection to controller.magma.test:7443 --

2. -- Testing Certificate --

3. -- Testing SSL --

4. -- Creating direct cloud checkin --

5. -- Creating proxy cloud checkin --

Success!

```
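An automated run can check the captured output for the same markers a human would look for. This is a rough sketch that assumes the output keeps the layout shown above (a `> Registered gateway` line, later `> Running checkin_cli`, then `Success!`). The function name is hypothetical.

```python
def registration_succeeded(output: str) -> bool:
    """True when the output reports registration followed by a successful check-in."""
    lines = [line.strip() for line in output.splitlines()]
    try:
        registered = lines.index("> Registered gateway")
        checkin = lines.index("> Running checkin_cli", registered)
    except ValueError:
        # One of the two markers is missing, so the run did not complete.
        return False
    return "Success!" in lines[checkin:]
```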

### Solution Overview 2

The Network Management System (NMS) can be used as a UI to manage the AGW connection, and it is the easiest way to connect the elements. The steps below rely on the following assumptions:

- Assume that the Orchestrator `bootstrapper` service IP is `192.168.1.248`.

- Assume that the Orchestrator `controller` service IP is `192.168.1.248`.

- Assume that the `fluentd` service IP is `192.168.1.247`.

- Assume that the NMS service IP is `10.233.39.105`.

- Assume that the AGW IP is `192.168.1.88` and the user of VM is `magma`.

- Assume that you have already deployed the Orchestrator using Helm. See:

- [Deploy Magma Orchestrator using Helm](installation-magma/Deploy-Magma-Orchestrator-using-Helm)

In this case, set the following environment variable before continuing the procedure:

```bash

export MAGMA_ROOT=~/magma_v1.6

```

**Step-by-Step Integration**

1. Check the Certificate Validation

The next sections assume that you have already deployed an Orchestrator and have the certificates needed to connect the AGW. The following commands can help you verify these points.

```bash

# In the deployment machine:

cd $MAGMA_ROOT/orc8r/cloud/helm/orc8r/charts/secrets/.secrets/certs

ls -1

# admin_operator.key.pem

# admin_operator.pem

# admin_operator.pfx

# bootstrapper.key

# certifier.key

# certifier.pem

# controller.crt

# controller.csr

# controller.key

# rootCA.key

# rootCA.pem

# rootCA.srl

# vpn_ca.crt

# vpn_ca.key

```

The following steps require the `rootCA.pem` certificate. The steps below explain how to transfer it to the AGW VM.

2. Copy the Certificate

Copy the `rootCA.pem` certificate from the Orchestrator deployment to the AGW machine and put it in the `/var/opt/magma/certs/` folder. Use the `kubectl` command to get the certificate:

```bash

kubectl get secret/orc8r-secrets-certs -n magma -o "jsonpath={.data.rootCA\.pem}" | base64 --decode

# -----BEGIN CERTIFICATE-----

# MIIDTTCCAjWgAwIBAgIUF/aIL9e3VgoUTtyleH2/AeS6YpAwDQYJKoZIhvcNAQEL

# BQAwNjELMAkGA1UEBhMCVVMxJzAlBgNVBAMMHnJvb3RjYS5tYWdtYS5zdmMuY2x1

# c3Rlci5sb2NhbDAeFw0yMTA4MTcyMTIyNTZaFw0zMTA4MTUyMTIyNTZaMDYxCzAJ

# BgNVBAYTAlVTMScwJQYDVQQDDB5yb290Y2EubWFnbWEuc3ZjLmNsdXN0ZXIubG9j

# YWwwggEiMA0GCSqGSIb3DQEBAQUAA4IBDwAwggEKAoIBAQDPDlEquQfdrDI1IBxp

# jyHFLvyxJdzu1cUFGFZXo3XZbHWOUG68fHis+YPBIQUGlrqAKT48SM3xwYK2+lzp

# soka7SKlNkJbZ9ngiBFS/md8VWupmBnkSjMG6SjF54kNFIauNBJdhbcqcCMXbI0Q

# rqFOVDbgIV3J2+YghmC/DaDJf4m+hRR5RpO9yqFVjb8469d8i7vhRksR49lw0iVb

# sUIPGieg+6+PJyf9+n7ZKJKTttYU3V/lrm3hLD7pYgoSyIO7AduuW8HEtSXJfIYQ

# qxQ6Bhjez77+Lar61oqXaM9uI4LcQ5r5fuUVMX0ZGPZpSI/sqBjPQXEs9WqOPikk

# chOlAgMBAAGjUzBRMB0GA1UdDgQWBBTU4qYYNP7NmdEJqy2psJHabKsMfzAfBgNV

# HSMEGDAWgBTU4qYYNP7NmdEJqy2psJHabKsMfzAPBgNVHRMBAf8EBTADAQH/MA0G

# CSqGSIb3DQEBCwUAA4IBAQAdA2W1+kYR/NxF7QHnVy9aIDocTspswHw5pP8wAPsw

# Ahs/Mue3vo2uW2nFmD4FdAmvU8ktPzuCTMvk5mLG4TOk1tuGmvhdloQEmBQrC7RP

# jOuK7aLxj8xfeB7aCDWLik1O3Z0e5s09hv7kHUi2Km19lU9Lw68BbSuj+Sae8bB6

# 1wgkWQ/dw+ttS8SDNtHJ7y1d6sdbQpR33KW1pRoKjLNqpd8pa6qwT44SKO9Z0Fti

# H83Snc1zrxb+hnt3g3Zegy32wmRtNNtglSfrgP6xUp7V7eI4IQ69If40kFpN2Fep

# uO1Khv54UwVgdzHpdwJ8OIaXg2bCM1vu5CQnCQLKw/Xe

# -----END CERTIFICATE-----

```
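If the raw base64 value is fetched without piping it through `base64 --decode`, it can be decoded and sanity-checked in Python before it is written to the AGW. This sketch only checks the PEM markers and does not validate the certificate itself. `decode_secret_pem` is a hypothetical helper name.

```python
import base64


def decode_secret_pem(encoded: str) -> str:
    """Decode a base64 Kubernetes secret value and confirm it looks like a PEM cert."""
    pem = base64.b64decode(encoded.strip(), validate=True).decode("ascii")
    body = pem.strip()
    if not (body.startswith("-----BEGIN CERTIFICATE-----")
            and body.endswith("-----END CERTIFICATE-----")):
        raise ValueError("decoded secret is not a PEM certificate")
    return pem
```

A `ValueError` here usually means the wrong secret key was read or the value was truncated while copying.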

3. Configure the Certificate

On the AGW, place `rootCA.pem` in the `/var/opt/magma/certs/` folder and verify that it is present:

```bash

ls -1 /var/opt/magma/certs/

# gateway.crt

# gateway.key

# gw_challenge.key

# rootCA.pem <--- Certificate for auth to orc8r

```

> **NOTE:** You can also transfer the `rootCA.pem` certificate using `sftp` or `scp`:

> ```bash

> sudo sftp ubuntu@<secrets_ip>:$MAGMA_ROOT/orc8r/cloud/helm/orc8r/charts/secrets/.secrets/certs/rootCA.pem .

> ```

4. Modify `/etc/hosts` on the AGW Machine

Next, modify the `/etc/hosts` file on the AGW machine for DNS resolution. Be sure to use the IPs assigned by the cluster for each service of interest. In this case, the services are the ones listed in the assumptions at the beginning of this section:

```bash

sudo vi /etc/hosts

## Add the next lines

# 192.168.1.248 controller.magma.svc.cluster.local

# 192.168.1.248 bootstrapper-controller.magma.svc.cluster.local

# 192.168.1.247 fluentd.magma.svc.cluster.local

```
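Editing the file by hand can leave duplicate entries when the step is repeated. The sketch below works on the file contents as text and only adds hostnames that are not already present. It does not write to `/etc/hosts` itself, and `merge_hosts` is a hypothetical helper name. The IPs are the assumed values from this section.

```python
def merge_hosts(existing: str, entries: dict) -> str:
    """Append "ip hostname" lines for hostnames not already in the hosts text."""
    known = set()
    for line in existing.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, split on whitespace
        known.update(fields[1:])                # every name after the IP address
    additions = [f"{ip} {name}" for name, ip in entries.items() if name not in known]
    if not additions:
        return existing
    base = existing.rstrip("\n")
    prefix = base + "\n" if base else ""
    return prefix + "\n".join(additions) + "\n"


# Assumed service IPs from the list at the start of this section.
ORC8R_HOSTS = {
    "controller.magma.svc.cluster.local": "192.168.1.248",
    "bootstrapper-controller.magma.svc.cluster.local": "192.168.1.248",
    "fluentd.magma.svc.cluster.local": "192.168.1.247",
}
```

Calling `merge_hosts` a second time on its own output returns the text unchanged, so the step is safe to repeat.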

5. Validate Connectivity

Make sure that you have connectivity to the orchestrator services:

```bash

ping -c 4 controller.magma.svc.cluster.local

ping -c 4 bootstrapper-controller.magma.svc.cluster.local

ping -c 4 fluentd.magma.svc.cluster.local

```
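`ping` only confirms name resolution and ICMP reachability; it does not show whether the service ports accept connections. A complementary TCP check can be sketched as follows. The ports are the ones this guide configures in `control_proxy.yml`, and whether they are exposed on these addresses depends on your cluster. `can_connect` and `report` are hypothetical helper names.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if the name resolves and a TCP connection to host:port opens."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refusals, and timeouts
        return False


# Hostnames from /etc/hosts above; ports as configured in control_proxy.yml.
ORC8R_ENDPOINTS = [
    ("controller.magma.svc.cluster.local", 8443),
    ("bootstrapper-controller.magma.svc.cluster.local", 8444),
    ("fluentd.magma.svc.cluster.local", 24224),
]


def report(endpoints=ORC8R_ENDPOINTS) -> dict:
    """Map each (host, port) pair to True or False depending on reachability."""
    return {(host, port): can_connect(host, port) for host, port in endpoints}
```

Run `report()` on the AGW machine; any `False` entry points at a DNS, routing, or firewall problem for that service.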

6. Edit Proxy Configuration

By default, the `control_proxy.yml` file uses the wrong ports to talk to the orchestrator. Edit the ports as shown below:

```bash

sudo vi /etc/magma/control_proxy.yml

```

<details><summary>control_proxy.yml</summary>

```yaml

---

#

# Copyright (c) 2016-present, Facebook, Inc.

# All rights reserved.

#

# This source code is licensed under the BSD-style license found in the

# LICENSE file in the root directory of this source tree. An additional grant

# of patent rights can be found in the PATENTS file in the same directory.

# nghttpx config will be generated here and used

nghttpx_config_location: /var/tmp/nghttpx.conf

# Location for certs

rootca_cert: /var/opt/magma/certs/rootCA.pem

gateway_cert: /var/opt/magma/certs/gateway.crt

gateway_key: /var/opt/magma/certs/gateway.key

# Listening port of the proxy for local services. The port would be closed

# for the rest of the world.

local_port: 8443

# Cloud address for reaching out to the cloud.

cloud_address: controller.magma.svc.cluster.local

cloud_port: 8443

bootstrap_address: bootstrapper-controller.magma.svc.cluster.local

bootstrap_port: 8444

fluentd_address: fluentd.magma.svc.cluster.local

fluentd_port: 24224

# Option to use nghttpx for proxying. If disabled, the individual

# services would establish the TLS connections themselves.

proxy_cloud_connections: True

# Allows http_proxy usage if the environment variable is present

allow_http_proxy: True

```

</details>

Note that the ports for `controller.magma.svc.cluster.local`, `bootstrapper-controller.magma.svc.cluster.local` and `fluentd.magma.svc.cluster.local` are `8443`, `8444`, and `24224`, respectively.
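After editing, the three port values can be checked mechanically. The sketch below reads only the flat `key: value` lines of this particular file, so it avoids a YAML dependency; it is not a general YAML parser. The helper names are hypothetical.

```python
def read_flat_yaml(text: str) -> dict:
    """Parse the flat "key: value" lines of control_proxy.yml (no nesting, no lists)."""
    values = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments
        if not line or line == "---" or ":" not in line:
            continue
        key, value = line.split(":", 1)
        values[key.strip()] = value.strip()
    return values


# Port values this guide expects for the Helm-based Orchestrator deployment.
EXPECTED_PORTS = {"cloud_port": "8443", "bootstrap_port": "8444", "fluentd_port": "24224"}


def wrong_ports(text: str) -> dict:
    """Return {key: actual_value} for each port that differs from EXPECTED_PORTS."""
    config = read_flat_yaml(text)
    return {k: config.get(k) for k, v in EXPECTED_PORTS.items() if config.get(k) != v}
```

An empty result from `wrong_ports` means all three ports match; a value of `None` means the key is missing from the file.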

7. Generate a new key for the AGW

If you previously tried to connect the AGW, the orchestrator has saved the Hardware UUID and the Challenge Key in a local database, which prevents an AGW with the same combination from connecting. For this reason, we suggest generating a new key before performing the connection. On the AGW machine, execute the following commands:

```bash

sudo snowflake --force-new-key

cd && show_gateway_info.py # Verify the new key

# Example of output:

# Hardware ID

# -----------

# e828ecb7-64d4-4b7c-b163-11ff3f8abbb7

#

# Challenge key

# -------------

# MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAERR/0pjjOI/3VVFUJmphurMHhlgxyVHxkJ9QDovgLC99lImKOvUHzKEqlNwsqxmCyWyuyZnNpf8lOaWNmoclbGcXFwh1EeTlIUZmvW1rqj8iPO17AQRgpHJYN5F30eaHa

#

# Notes

# -----

# - Hardware ID is this gateway's unique identifier

# - Challenge key is this gateway's long-term keypair used for

# bootstrapping a secure connection to the cloud

```
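The two values are needed again when the gateway is added in the NMS, so it can help to extract them from the captured output instead of copying them by hand. This sketch assumes the layout shown above (a heading, a dashed underline, then the value on the next line) and tolerates a trailing colon on the heading. `parse_gateway_info` is a hypothetical helper name.

```python
def parse_gateway_info(output: str) -> dict:
    """Extract the hardware ID and challenge key from show_gateway_info.py output."""
    lines = [line.strip() for line in output.splitlines()]
    wanted = {"hardware id": "hardware_id", "challenge key": "challenge_key"}
    found = {}
    for i, line in enumerate(lines[:-2]):
        name = wanted.get(line.rstrip(":").lower())
        underline = lines[i + 1]
        # A heading only counts when the next line consists solely of dashes.
        if name and underline and set(underline) == {"-"}:
            found[name] = lines[i + 2]
    return found
```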

8. Now delete old AGW certificates and restart services

```bash

sudo rm /var/opt/magma/certs/gateway*

# Restart magma services

sudo service magma@* stop

sudo service magma@magmad restart

sudo service magma@* status

```

9. Verify that you have `200` responses in the HTTP/2 messages of the control_proxy service

> Execute this after completing the NMS Configuration below:

`sudo service magma@control_proxy status`

<details> <summary>Expected Output</summary>

```bash

sudo service magma@control_proxy status

# ● magma@control_proxy.service - Magma control_proxy service

# Loaded: loaded (/etc/systemd/system/magma@control_proxy.service; disabled; vendor preset: enabled)

# Active: active (running) since Mon 2021-05-10 21:38:37 UTC; 2 days ago

# Process: 22550 ExecStartPre=/usr/bin/env python3 /usr/local/bin/generate_nghttpx_config.py (code=exited, status=0/SUCCESS)

# Main PID: 22582 (nghttpx)

# Tasks: 2 (limit: 4915)

# Memory: 2.7M (limit: 300.0M)

# CGroup: /system.slice/system-magma.slice/magma@control_proxy.service

# ├─22582 nghttpx --conf /var/opt/magma/tmp/nghttpx.conf

# └─22594 nghttpx --conf /var/opt/magma/tmp/nghttpx.conf

# May 13 16:31:09 magma-dev control_proxy[22582]: 2021-05-13T16:31:09.069Z [127.0.0.1 -> metricsd-controller.magma.test,8443] "POST /magma.orc8r.MetricsController/Collect HTTP/2" 200 5bytes 0.030s

# May 13 16:31:09 magma-dev control_proxy[22582]: 2021-05-13T16:31:09.069Z [127.0.0.1 -> metricsd-controller.magma.test,8443] "POST /magma.orc8r.MetricsController/Collect HTTP/2" 200 5bytes 0.038s

# May 13 16:31:09 magma-dev control_proxy[22582]: 2021-05-13T16:31:09.069Z [127.0.0.1 -> metricsd-controller.magma.test,8443] "POST /magma.orc8r.MetricsController/Collect HTTP/2" 200 5bytes 0.038s

# May 13 16:31:09 magma-dev control_proxy[22582]: 2021-05-13T16:31:09.069Z [127.0.0.1 -> metricsd-controller.magma.test,8443] "POST /magma.orc8r.MetricsController/Collect HTTP/2" 200 5bytes 0.042s

# May 13 16:31:09 magma-dev control_proxy[22582]: 2021-05-13T16:31:09.069Z [127.0.0.1 -> metricsd-controller.magma.test,8443] "POST /magma.orc8r.MetricsController/Collect HTTP/2" 200 5bytes 0.043s

# May 13 16:31:20 magma-dev control_proxy[22582]: 2021-05-13T16:31:20.479Z [127.0.0.1 -> streamer-controller.magma.test,8443] "POST /magma.orc8r.Streamer/GetUpdates HTTP/2" 200 2054bytes 0.022s

# May 13 16:31:33 magma-dev control_proxy[22582]: 2021-05-13T16:31:33.682Z [127.0.0.1 -> streamer-controller.magma.test,8443] "POST /magma.orc8r.Streamer/GetUpdates HTTP/2" 200 287bytes 0.019s

# May 13 16:31:41 magma-dev control_proxy[22582]: 2021-05-13T16:31:41.716Z [127.0.0.1 -> streamer-controller.magma.test,8443] "POST /magma.orc8r.Streamer/GetUpdates HTTP/2" 200 67bytes 0.013s

# May 13 16:31:41 magma-dev control_proxy[22582]: 2021-05-13T16:31:41.716Z [127.0.0.1 -> streamer-controller.magma.test,8443] "POST /magma.orc8r.Streamer/GetUpdates HTTP/2" 200 31bytes 0.012s

# May 13 16:31:41 magma-dev control_proxy[22582]: 2021-05-13T16:31:41.716Z [127.0.0.1 -> streamer-controller.magma.test,8443] "POST /magma.orc8r.Streamer/GetUpdates HTTP/2" 200 7bytes 0.010s

```

</details>
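Scanning a long log by eye for non-`200` responses is tedious. The following sketch counts responses per request path and status code, assuming log lines in the format shown in the expected output above. The helper names are hypothetical.

```python
import re
from collections import Counter

# Matches the quoted request and the status code in lines such as:
#   "POST /magma.orc8r.Streamer/GetUpdates HTTP/2" 200 287bytes 0.019s
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/2" (?P<status>\d{3})\b')


def count_statuses(log_text: str) -> Counter:
    """Count HTTP/2 responses per (path, status) pair in control_proxy log lines."""
    return Counter(
        (m.group("path"), int(m.group("status")))
        for m in REQUEST_RE.finditer(log_text)
    )


def failed_requests(log_text: str) -> dict:
    """Return only the (path, status) pairs whose status is not 200."""
    return {key: n for key, n in count_statuses(log_text).items() if key[1] != 200}
```

The log text can come from `journalctl -u magma@control_proxy` or from the `status` output itself. An empty result from `failed_requests` matches the healthy state described in this step.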

### NMS Configuration

Once all AGW services are started, the AGW is able to connect to the orchestrator. There are two ways to perform this task:

1. Using the API that orchestrator provides.

2. Using the NMS interface.

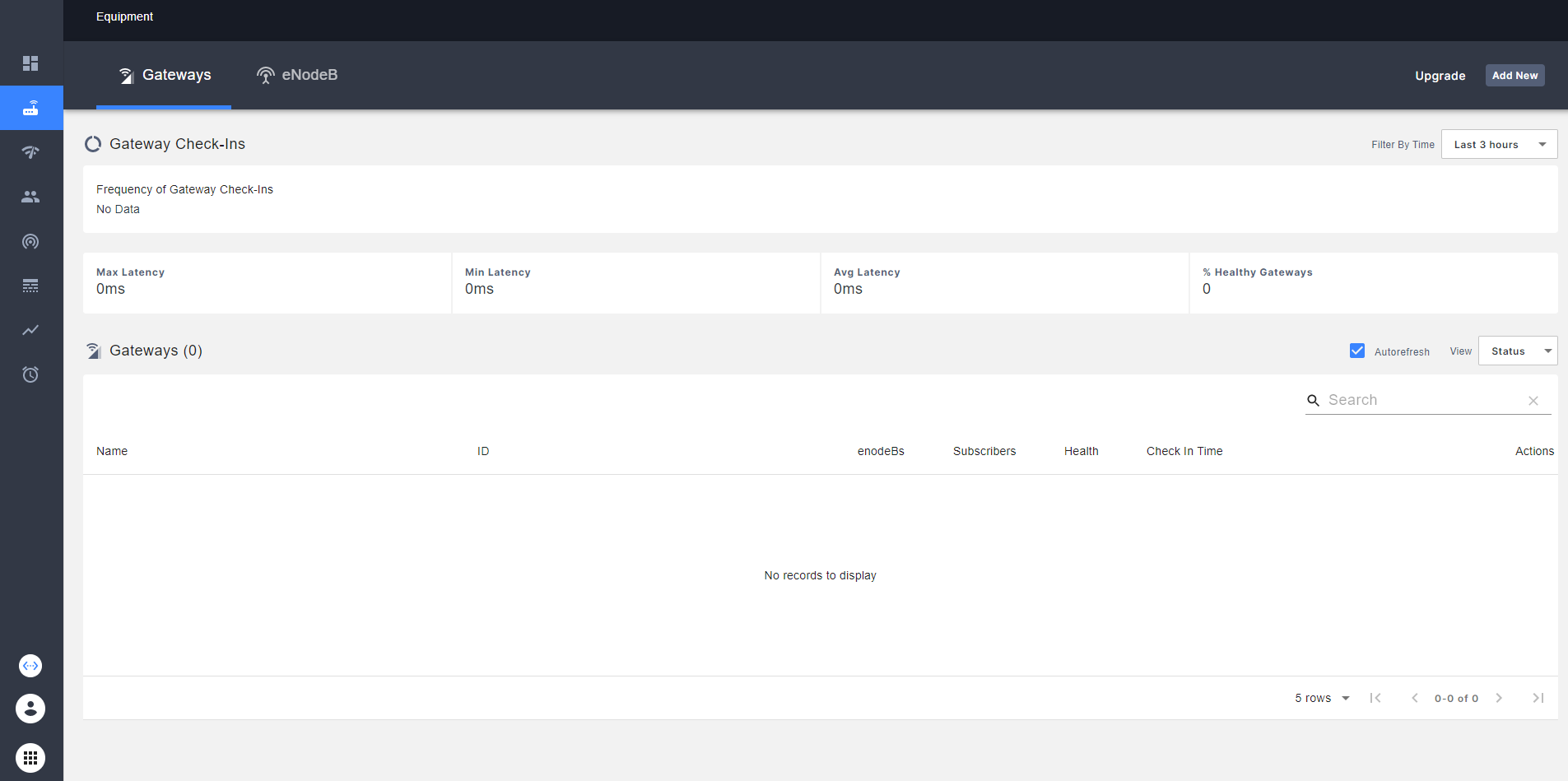

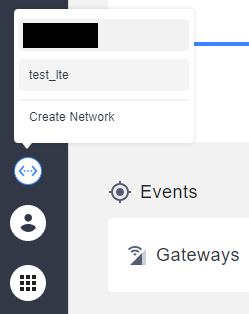

In this case, **the second option is considered**. First, open the NMS UI. You should see something like the following image:

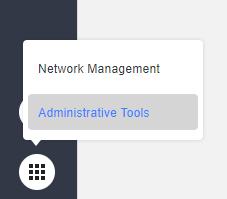

Once in the NMS, go to the _Administrative Tools_ option:

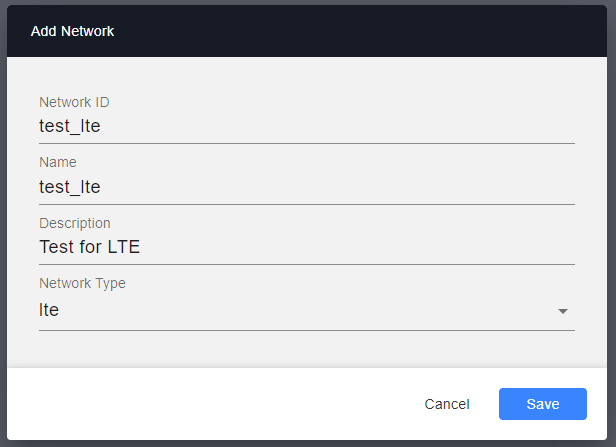

Next, go to the _Networks_ tab and add a new network.

> **NOTE:** You can have multiple AGWs on the same network.

With the network created, go to the _Network Management_ option and then select the network that you created in the previous step, as shown in the following figure:

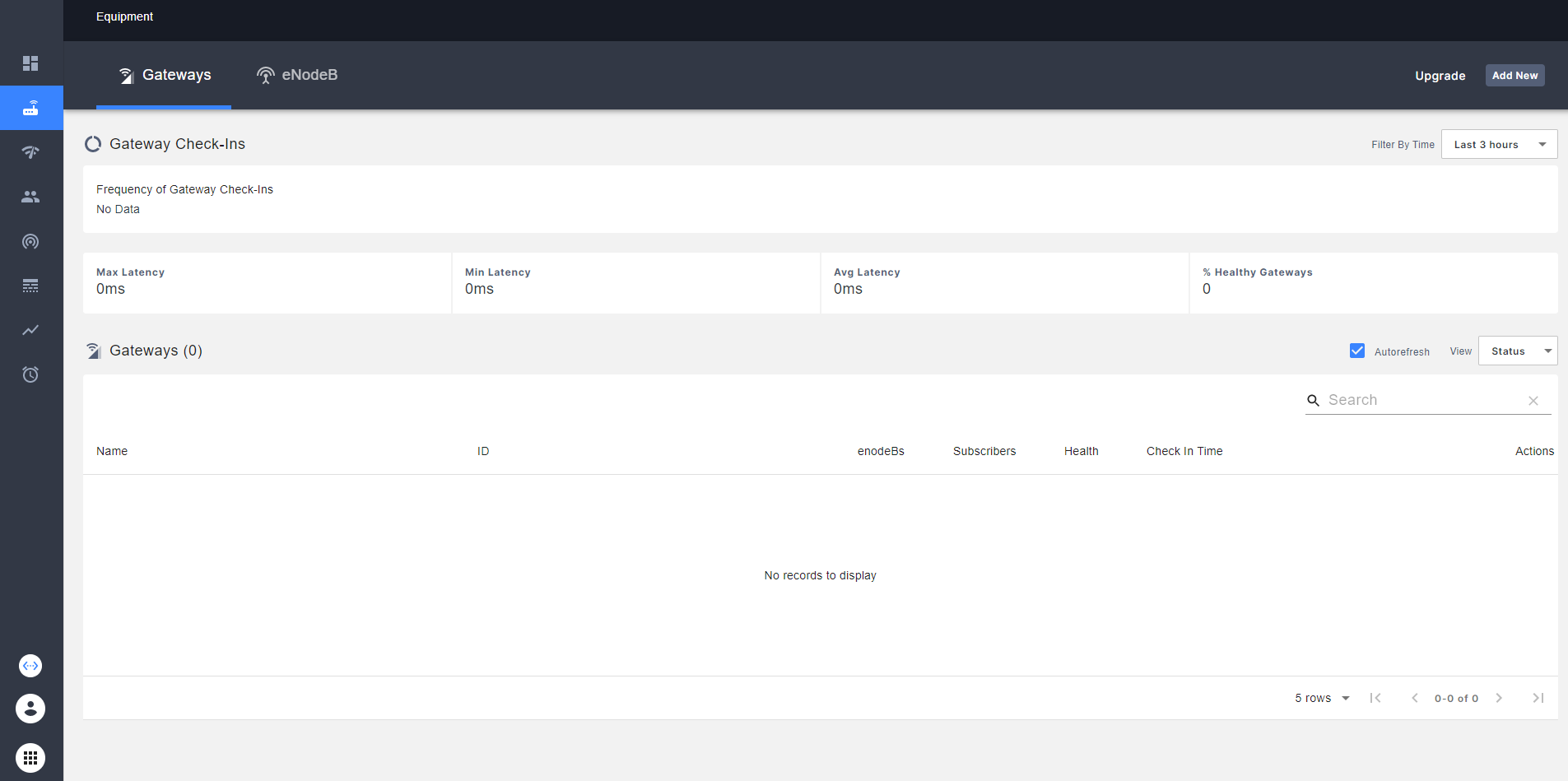

In the interface that opens, select the _Equipment_ tab. You should see all the AGWs that you have added to the current network, along with the health, latency, and other statistics of each AGW.

In the upper-right part of the UI, select the **Add New** option to add a new AGW.

You should see a new dialog that asks for some AGW information.

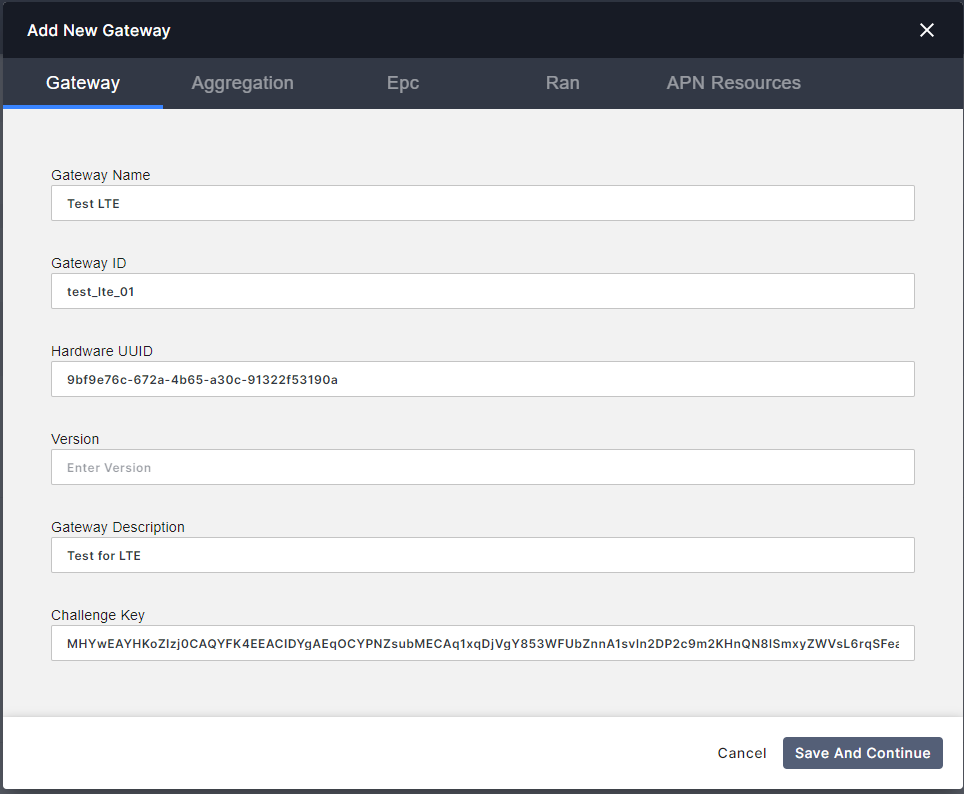

Fill in the fields as follows:

* **Gateway Name:** Any. We suggest _lte_everis_

* **Gateway ID:** Must be unique. We suggest IDs like _agw01_, _agw02_, etc.

* **Hardware UUID:** Go to the AGW VM and run `show_gateway_info.py`. An example of the output is shown below:

```bash

# In the AGW machine:

cd && show_gateway_info.py

# Hardware ID:

# ------------

# 9bf9e76c-672a-4b65-a30c-91322f53190a

#

# Challenge Key:

# -----------

# MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAEqOCYPNZsubMECAq1xqDjVgY853WFUbZnnA1svln2DP2c9m2KHnQN8lSmxyZWVsL6rqSFeaqRLnn/bq8dbSBFUNPT505OtBgsQnevDsmWoFGfOQtaAe8ga+ZujJzGbPU+

```

* **Version:** Leave it empty. The orchestrator should recognize the version once the AGW checks in successfully.

* **Gateway Description:** Any.

For the Aggregation tab, leave the defaults.

For the EPC tab:

* **Nat Enabled:** True.

* **IP Block:** Depends on the number of eNBs and users. Leave the default if there are few.

* **DNS Primary:** We suggest leaving it empty. It can be configured later.

* **DNS Secondary:** We suggest leaving it empty. It can be configured later.

> **NOTE:** If your AGW is connected to an operator's network, use the primary and secondary DNS servers that the operator suggests.

For the RAN tab, leave the defaults.
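Two of the fields above are easy to get wrong: the Gateway ID must be unique, and the Hardware UUID must be pasted without stray characters. A pre-check can be sketched as follows. This is only an illustration of the two rules stated above, not the validation that the NMS performs, and `form_problems` is a hypothetical helper name.

```python
import uuid


def form_problems(gateway_id: str, hardware_uuid: str, existing_ids=()) -> list:
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    if not gateway_id:
        problems.append("Gateway ID is empty")
    elif gateway_id in existing_ids:
        problems.append(f"Gateway ID {gateway_id!r} is already in use")
    try:
        # uuid.UUID raises ValueError when the text is not a well-formed UUID.
        uuid.UUID(hardware_uuid)
    except ValueError:
        problems.append(f"Hardware UUID {hardware_uuid!r} is not a valid UUID")
    return problems
```

Note that `uuid.UUID` also accepts a few alternative spellings (for example, without hyphens), so the check is looser than an exact format match.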

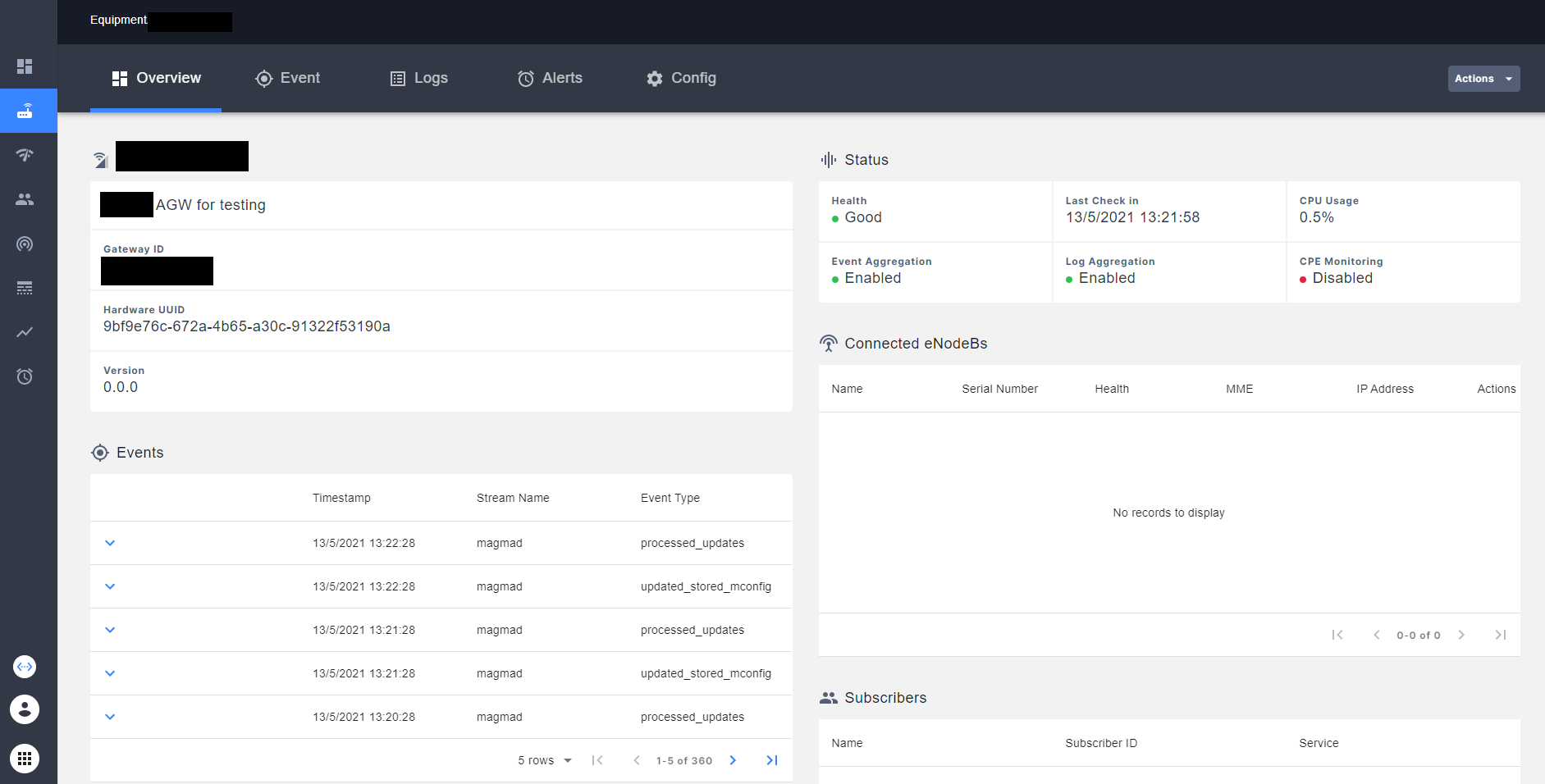

In the Equipment tab, you should now see the AGW reported with _Good Health_.

## End-to-End Setup

> IN PROGRESS USING srsRAN

### Introduction

srsRAN is a 4G and 5G software radio suite. Usually eNodeB and UE are used with actual RF front-ends for over-the-air transmissions. There are, however, a number of use-cases for which RF front-ends might not be needed or wanted. Those use-cases include (but are not limited to) the use of srsRAN for educational or research purposes, continuous integration and delivery (CI/CD) or development and debugging.

With srsRAN this can be achieved by replacing the radio link between eNodeB and UE with a mechanism that exchanges baseband IQ samples over an alternative transport. For this purpose, srsRAN provides a ZeroMQ-based RF driver that essentially acts as a transmit and receive pipe for exchanging IQ samples over TCP or IPC.

### ZeroMQ Installation

The first step is to install ZeroMQ and build srsRAN. On Ubuntu, the prerequisites, the ZeroMQ libraries, and srsRAN can be installed with:

```bash

# Install prerequisites

sudo apt-get -y install libzmq3-dev build-essential cmake libfftw3-dev libmbedtls-dev libboost-program-options-dev libconfig++-dev libsctp-dev libtool autoconf automake

# Install libzmq

git clone https://github.com/zeromq/libzmq.git ~/libzmq && cd ~/libzmq

./autogen.sh

./configure

make

sudo make install

sudo ldconfig

# Install czmq

git clone https://github.com/zeromq/czmq.git ~/czmq && cd ~/czmq

./autogen.sh

./configure

make

sudo make install

sudo ldconfig

# Compile srsRAN

git clone https://github.com/srsRAN/srsRAN.git ~/srsRAN && cd ~/srsRAN

mkdir build && cd build

cmake ../

make

```

Pay close attention to the cmake console output. Make sure that it contains the `Found libZEROMQ` line:

```

...

-- FINDING ZEROMQ.

-- Checking for module 'ZeroMQ'

-- No package 'ZeroMQ' found

-- Found libZEROMQ: /usr/local/include, /usr/local/lib/libzmq.so

...

```
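In a scripted build, the same check can be made on the captured cmake output. The sketch below assumes the `-- Found libZEROMQ:` line has the format shown above. `find_zeromq` is a hypothetical helper name.

```python
def find_zeromq(cmake_output: str):
    """Return (include_dir, library) from the "Found libZEROMQ" line, or None."""
    prefix = "-- Found libZEROMQ:"
    for line in cmake_output.splitlines():
        line = line.strip()
        if line.startswith(prefix):
            include_dir, _, library = line[len(prefix):].partition(",")
            return include_dir.strip(), library.strip()
    return None
```

A `None` result means srsRAN was configured without the ZMQ RF driver, and the `device_name = zmq` setting used later in this guide will not work.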

### Running the RAN

We will run each srsRAN application in a separate terminal instance. Before running any element, make sure that you are in the build folder and that the configuration files are in the `/etc/srsran/` folder.

```bash

sudo mkdir -p /etc/srsran/

sudo cp ~/srsRAN/srsenb/sib.conf.example /etc/srsran/sib.conf

sudo cp ~/srsRAN/srsenb/rr.conf.example /etc/srsran/rr.conf

sudo cp ~/srsRAN/srsenb/drb.conf.example /etc/srsran/drb.conf

cd ~/srsRAN/build/

```
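Before launching the eNB, it is worth confirming that the three files copied above are in place. A minimal sketch, with the hypothetical helper name `missing_configs`:

```python
from pathlib import Path

# The three files copied into /etc/srsran/ in the commands above.
REQUIRED_CONFIGS = ("sib.conf", "rr.conf", "drb.conf")


def missing_configs(config_dir="/etc/srsran") -> list:
    """Return the names of required config files absent from config_dir."""
    directory = Path(config_dir)
    return [name for name in REQUIRED_CONFIGS if not (directory / name).is_file()]
```

An empty list means all three files are present.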

**Step-by-Step eNB & UE Setup**

1. Running the eNB

Let’s now launch the eNodeB. **The eNB can be launched without root permissions**.

```bash

cd ~/srsRAN/build/

./srsenb/src/srsenb ~/ran_configurations/enb.conf

```

The `~/ran_configurations/enb.conf` file has the following content:

<p>

<details>

<summary>enb.conf</summary>

```conf

#####################################################################

# srsENB configuration file

#####################################################################

#####################################################################

# eNB configuration

#

# enb_id: 20-bit eNB identifier.

# mcc: Mobile Country Code

# mnc: Mobile Network Code

# mme_addr: IP address of MME for S1 connection

# gtp_bind_addr: Local IP address to bind for GTP connection

# gtp_advertise_addr: IP address of eNB to advertise for DL GTP-U Traffic

# s1c_bind_addr: Local IP address to bind for S1AP connection

# n_prb: Number of Physical Resource Blocks (6,15,25,50,75,100)

# tm: Transmission mode 1-4 (TM1 default)

# nof_ports: Number of Tx ports (1 port default, set to 2 for TM2/3/4)

#

#####################################################################

[enb]

enb_id = 0x19B

mcc = 901

mnc = 70

mme_addr = 192.168.1.243

gtp_bind_addr = 192.168.1.109

s1c_bind_addr = 192.168.1.109

#n_prb = 50

#tm = 4

#nof_ports = 2

#####################################################################

# eNB configuration files

#

# sib_config: SIB1, SIB2 and SIB3 configuration file

# note: when enabling mbms, use the sib.conf.mbsfn configuration file which includes SIB13

# rr_config: Radio Resources configuration file

# drb_config: DRB configuration file

#####################################################################

[enb_files]

sib_config = sib.conf

rr_config = rr.conf

drb_config = drb.conf

#####################################################################

# RF configuration

#

# dl_earfcn: EARFCN code for DL (only valid if a single cell is configured in rr.conf)

# tx_gain: Transmit gain (dB).

# rx_gain: Optional receive gain (dB). If disabled, AGC if enabled

#

# Optional parameters:

# dl_freq: Override DL frequency corresponding to dl_earfcn

# ul_freq: Override UL frequency corresponding to dl_earfcn (must be set if dl_freq is set)

# device_name: Device driver family.

# Supported options: "auto" (uses first found), "UHD", "bladeRF", "soapy" or "zmq".

# device_args: Arguments for the device driver. Options are "auto" or any string.

# Default for UHD: "recv_frame_size=9232,send_frame_size=9232"

# Default for bladeRF: ""

# time_adv_nsamples: Transmission time advance (in number of samples) to compensate for RF delay

# from antenna to timestamp insertion.

# Default "auto". B210 USRP: 100 samples, bladeRF: 27.

#####################################################################

[rf]

#dl_earfcn = 3350

#tx_gain = 80

#rx_gain = 40

#device_name = auto

# For best performance in 2x2 MIMO and >= 15 MHz use the following device_args settings:

# USRP B210: num_recv_frames=64,num_send_frames=64

# And for 75 PRBs, also append ",master_clock_rate=15.36e6" to the device args

# For best performance when BW<5 MHz (25 PRB), use the following device_args settings:

# USRP B210: send_frame_size=512,recv_frame_size=512

#device_args = auto

#time_adv_nsamples = auto

# Example for ZMQ-based operation with TCP transport for I/Q samples

device_name = zmq

device_args = fail_on_disconnect=true,tx_port=tcp://*:2000,rx_port=tcp://localhost:2001,id=enb,base_srate=23.04e6

#####################################################################

# Packet capture configuration

#

# MAC-layer packets are captured to file in the compact format decoded

# by Wireshark. For decoding, use the UDP dissector and the UDP

# heuristic dissection. Edit the preferences (Edit > Preferences >

# Protocols > DLT_USER) for DLT_USER to add an entry for DLT=149 with

# Protocol=udp. Further, enable the heuristic dissection in UDP under:

# Analyze > Enabled Protocols > MAC-LTE > mac_lte_udp and MAC-NR > mac_nr_udp

# For more information see: https://wiki.wireshark.org/MAC-LTE

# Configuring this Wireshark preferences is needed for decoding the MAC PCAP

# files as well as for the live network capture option.

#

# Please note that this setting will by default only capture MAC

# frames on dedicated channels, and not SIB. You have to build with

# WRITE_SIB_PCAP enabled in srsenb/src/stack/mac/mac.cc if you want

# SIB to be part of the MAC pcap file.

#

# S1AP Packets are captured to file in the compact format decoded by

# the Wireshark s1ap dissector and with DLT 150.

# To use the dissector, edit the preferences for DLT_USER to

# add an entry with DLT=150, Payload Protocol=s1ap.

#

# mac_enable: Enable MAC layer packet captures (true/false)

# mac_filename: File path to use for packet captures

# s1ap_enable: Enable or disable the PCAP.

# s1ap_filename: File name where to save the PCAP.

#

# mac_net_enable: Enable MAC layer packet captures sent over the network (true/false default: false)

# bind_ip: Bind IP address for MAC network trace (default: "0.0.0.0")

# bind_port: Bind port for MAC network trace (default: 5687)

# client_ip: Client IP address for MAC network trace (default "127.0.0.1")

# client_port: Client port for MAC network trace (default: 5847)

#####################################################################

[pcap]

enable = false

filename = /tmp/enb.pcap

s1ap_enable = false

s1ap_filename = /tmp/enb_s1ap.pcap

mac_net_enable = false

bind_ip = 0.0.0.0

bind_port = 5687

client_ip = 127.0.0.1

client_port = 5847

#####################################################################

# Log configuration

#

# Log levels can be set for individual layers. "all_level" sets log

# level for all layers unless otherwise configured.

# Format: e.g. phy_level = info

#

# In the same way, packet hex dumps can be limited for each level.

# "all_hex_limit" sets the hex limit for all layers unless otherwise

# configured.

# Format: e.g. phy_hex_limit = 32

#

# Logging layers: rf, phy, phy_lib, mac, rlc, pdcp, rrc, gtpu, s1ap, stack, all

# Logging levels: debug, info, warning, error, none

#

# filename: File path to use for log output. Can be set to stdout

# to print logs to standard output

# file_max_size: Maximum file size (in kilobytes). When passed, multiple files are created.

# If set to negative, a single log file will be created.

#####################################################################

[log]

all_level = warning

all_hex_limit = 32

filename = /tmp/enb.log

file_max_size = -1

[gui]

enable = false

#####################################################################

# Scheduler configuration options

#

# sched_policy: User MAC scheduling policy (E.g. time_rr, time_pf)

# max_aggr_level: Optional maximum aggregation level index (l=log2(L) can be 0, 1, 2 or 3)

# pdsch_mcs: Optional fixed PDSCH MCS (ignores reported CQIs if specified)

# pdsch_max_mcs: Optional PDSCH MCS limit

# pusch_mcs: Optional fixed PUSCH MCS (ignores reported CQIs if specified)

# pusch_max_mcs: Optional PUSCH MCS limit

# min_nof_ctrl_symbols: Minimum number of control symbols

# max_nof_ctrl_symbols: Maximum number of control symbols

#

#####################################################################

[scheduler]

#policy = time_pf

#policy_args = 2

#max_aggr_level = -1

#pdsch_mcs = -1

#pdsch_max_mcs = -1

#pusch_mcs = -1

#pusch_max_mcs = 16

#min_nof_ctrl_symbols = 1

#max_nof_ctrl_symbols = 3

#pucch_multiplex_enable = false

#####################################################################

# eMBMS configuration options

#

# enable: Enable MBMS transmission in the eNB

# m1u_multiaddr: Multicast address the M1-U socket will register to

# m1u_if_addr: Address of the interface the M1-U interface will listen for multicast packets.

# mcs: Modulation and Coding scheme for MBMS traffic.

#

#####################################################################

[embms]

#enable = false

#m1u_multiaddr = 239.255.0.1

#m1u_if_addr = 127.0.1.201

#mcs = 20

#####################################################################

# Channel emulator options:

# enable: Enable/Disable internal Downlink/Uplink channel emulator

#

# -- AWGN Generator

# awgn.enable: Enable/disable AWGN generator

# awgn.snr: Target SNR in dB

#

# -- Fading emulator

# fading.enable: Enable/disable fading simulator

# fading.model: Fading model + maximum doppler (E.g. none, epa5, eva70, etu300, etc)

#

# -- Delay Emulator delay(t) = delay_min + (delay_max - delay_min) * (1 + sin(2pi*t/period)) / 2

# Maximum speed [m/s]: (delay_max - delay_min) * pi * 300 / period

# delay.enable: Enable/disable delay simulator

# delay.period_s: Delay period in seconds.

# delay.init_time_s: Delay initial time in seconds.

# delay.maximum_us: Maximum delay in microseconds

# delay.minimum_us: Minimum delay in microseconds

#

# -- Radio-Link Failure (RLF) Emulator

# rlf.enable: Enable/disable RLF simulator

# rlf.t_on_ms: Time for On state of the channel (ms)

# rlf.t_off_ms: Time for Off state of the channel (ms)

#

# -- High Speed Train Doppler model simulator

# hst.enable: Enable/Disable HST simulator

# hst.period_s: HST simulation period in seconds

# hst.fd_hz: Doppler frequency in Hz

# hst.init_time_s: Initial time in seconds

#####################################################################

[channel.dl]

#enable = false

[channel.dl.awgn]

#enable = false

#snr = 30

[channel.dl.fading]

#enable = false

#model = none

[channel.dl.delay]

#enable = false

#period_s = 3600

#init_time_s = 0

#maximum_us = 100

#minimum_us = 10

[channel.dl.rlf]

#enable = false

#t_on_ms = 10000

#t_off_ms = 2000

[channel.dl.hst]

#enable = false

#period_s = 7.2

#fd_hz = 750.0

#init_time_s = 0.0

[channel.ul]

#enable = false

[channel.ul.awgn]

#enable = false

#n0 = -30

[channel.ul.fading]

#enable = false

#model = none

[channel.ul.delay]

#enable = false

#period_s = 3600

#init_time_s = 0

#maximum_us = 100

#minimum_us = 10

[channel.ul.rlf]

#enable = false

#t_on_ms = 10000

#t_off_ms = 2000

[channel.ul.hst]

#enable = false

#period_s = 7.2

#fd_hz = -750.0

#init_time_s = 0.0

#####################################################################

# Expert configuration options

#

# pusch_max_its: Maximum number of turbo decoder iterations (Default 4)

# pusch_8bit_decoder: Use 8-bit for LLR representation and turbo decoder trellis computation (Experimental)

# nof_phy_threads: Selects the number of PHY threads (maximum 4, minimum 1, default 3)

# metrics_period_secs: Sets the period at which metrics are requested from the eNB.

# metrics_csv_enable: Write eNB metrics to CSV file.

# metrics_csv_filename: File path to use for CSV metrics.

# tracing_enable: Write source code tracing information to a file.

# tracing_filename: File path to use for tracing information.

# tracing_buffcapacity: Maximum capacity in bytes the tracing framework can store.

# pregenerate_signals: Pregenerate uplink signals after attach. Improves CPU performance.

# tx_amplitude: Transmit amplitude factor (set 0-1 to reduce PAPR)

# rrc_inactivity_timer Inactivity timeout used to remove UE context from RRC (in milliseconds).

# max_prach_offset_us: Maximum allowed RACH offset (in us)

# nof_prealloc_ues: Number of UE memory resources to preallocate during eNB initialization for faster UE creation (Default 8)

# eea_pref_list: Ordered preference list for the selection of encryption algorithm (EEA) (default: EEA0, EEA2, EEA1).

# eia_pref_list: Ordered preference list for the selection of integrity algorithm (EIA) (default: EIA2, EIA1, EIA0).

#

#####################################################################

[expert]

#pusch_max_its = 8 # These are half iterations

#pusch_8bit_decoder = false

nof_phy_threads = 1

#metrics_period_secs = 1

#metrics_csv_enable = false

#metrics_csv_filename = /tmp/enb_metrics.csv

#report_json_enable = true

#report_json_filename = /tmp/enb_report.json

#alarms_log_enable = true

#alarms_filename = /tmp/enb_alarms.log

#tracing_enable = true

#tracing_filename = /tmp/enb_tracing.log

#tracing_buffcapacity = 1000000

#pregenerate_signals = false

#tx_amplitude = 0.6

#rrc_inactivity_timer = 30000

#max_nof_kos = 100

#max_prach_offset_us = 30

#nof_prealloc_ues = 8

#eea_pref_list = EEA0, EEA2, EEA1

#eia_pref_list = EIA2, EIA1, EIA0

```

</details>

</p>
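With the ZMQ driver, the eNB and the UE exchange samples over a pair of TCP ports, so the eNB's `tx_port` must be the UE's `rx_port` and vice versa. The sketch below checks this for the `device_args` strings used in this guide (the UE string is the one from `ue.conf` in the next step). The helper names are hypothetical.

```python
def parse_device_args(args: str) -> dict:
    """Split a device_args string such as "a=1,b=2" into a dictionary."""
    return dict(item.split("=", 1) for item in args.split(",") if item)


def zmq_port(endpoint: str) -> int:
    """Port number of an endpoint such as tcp://*:2000 or tcp://localhost:2001."""
    return int(endpoint.rsplit(":", 1)[1])


def ports_are_mirrored(enb_args: str, ue_args: str) -> bool:
    """True when each side transmits on the port the other side receives on."""
    enb, ue = parse_device_args(enb_args), parse_device_args(ue_args)
    return (zmq_port(enb["tx_port"]) == zmq_port(ue["rx_port"])
            and zmq_port(enb["rx_port"]) == zmq_port(ue["tx_port"]))


# device_args values as they appear in enb.conf and ue.conf in this guide.
ENB_ARGS = ("fail_on_disconnect=true,tx_port=tcp://*:2000,"
            "rx_port=tcp://localhost:2001,id=enb,base_srate=23.04e6")
UE_ARGS = "tx_port=tcp://*:2001,rx_port=tcp://localhost:2000,id=ue,base_srate=23.04e6"
```

If `ports_are_mirrored(ENB_ARGS, UE_ARGS)` is false after editing either file, the UE will not find the cell because no samples reach it.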

2. Running the UE

Lastly, we can launch the UE, this time **with root permissions**, which are needed to create the TUN device.

```bash

cd ~/srsRAN/build/

sudo ./srsue/src/srsue ~/ran_configurations/ue.conf

```

The `~/ran_configurations/ue.conf` file has the following content:

<p>

<details>

<summary>ue.conf</summary>

```conf

#####################################################################

# srsUE configuration file

#####################################################################

#####################################################################

# RF configuration

#

# freq_offset: Uplink and Downlink optional frequency offset (in Hz)

# tx_gain: Transmit gain (dB).

# rx_gain: Optional receive gain (dB). If disabled, AGC is enabled

#

# nof_antennas: Number of antennas per carrier (all carriers have the same number of antennas)

# device_name: Device driver family. Supported options: "auto" (uses first found), "UHD" or "bladeRF"

# device_args: Arguments for the device driver. Options are "auto" or any string.

# Default for UHD: "recv_frame_size=9232,send_frame_size=9232"

# Default for bladeRF: ""

# device_args_2: Arguments for the RF device driver 2.

# device_args_3: Arguments for the RF device driver 3.

# time_adv_nsamples: Transmission time advance (in number of samples) to compensate for RF delay

# from antenna to timestamp insertion.

# Default "auto". B210 USRP: 100 samples, bladeRF: 27.

# continuous_tx: Transmit samples continuously to the radio or on bursts (auto/yes/no).

# Default is auto (yes for UHD, no for rest)

#####################################################################

[rf]

# freq_offset = 0

tx_gain = 80

#rx_gain = 40

#nof_antennas = 1

# For best performance in 2x2 MIMO and >= 15 MHz use the following device_args settings:

# USRP B210: num_recv_frames=64,num_send_frames=64

# For best performance when BW<5 MHz (25 PRB), use the following device_args settings:

# USRP B210: send_frame_size=512,recv_frame_size=512

#device_args = auto

#time_adv_nsamples = auto

#continuous_tx = auto

# Example for ZMQ-based operation with TCP transport for I/Q samples

device_name = zmq

device_args = tx_port=tcp://*:2001,rx_port=tcp://localhost:2000,id=ue,base_srate=23.04e6

#####################################################################

# EUTRA RAT configuration

#

# dl_earfcn: Downlink EARFCN list.

#

# Optional parameters:

# dl_freq: Override DL frequency corresponding to dl_earfcn

# ul_freq: Override UL frequency corresponding to dl_earfcn

# nof_carriers: Number of carriers

#####################################################################

[rat.eutra]

#dl_earfcn = 3350

#nof_carriers = 1

#####################################################################

# NR RAT configuration

#

# Optional parameters:

# bands: List of supported NR bands separated by a comma (default 78)

# nof_carriers: Number of NR carriers (must be at least 1 for NR support)

#####################################################################

[rat.nr]

# bands = 78

# nof_carriers = 0

#####################################################################

# Packet capture configuration

#

# Packet capture is supported at the MAC, MAC_NR, and NAS layer.

# MAC-layer packets are captured to file in the compact format decoded

# by Wireshark. For decoding, use the UDP dissector and the UDP

# heuristic dissection. Edit the preferences (Edit > Preferences >

# Protocols > DLT_USER) for DLT_USER to add an entry for DLT=149 with

# Protocol=udp. Further, enable the heuristic dissection in UDP under:

# Analyze > Enabled Protocols > MAC-LTE > mac_lte_udp and MAC-NR > mac_nr_udp

# For more information see: https://wiki.wireshark.org/MAC-LTE

# Using the same filename for mac_filename and mac_nr_filename writes both

# MAC-LTE and MAC-NR to the same file allowing a better analysis.

# NAS-layer packets are dissected with DLT=148, and Protocol = nas-eps.

#

# enable: Enable packet captures of layers (mac/mac_nr/nas/none) multiple option list

# mac_filename: File path to use for MAC packet capture

# mac_nr_filename: File path to use for MAC NR packet capture

# nas_filename: File path to use for NAS packet capture

#####################################################################

[pcap]

#enable = none

#mac_filename = /tmp/ue_mac.pcap

#mac_nr_filename = /tmp/ue_mac_nr.pcap

#nas_filename = /tmp/ue_nas.pcap

#####################################################################

# Log configuration

#

# Log levels can be set for individual layers. "all_level" sets log

# level for all layers unless otherwise configured.

# Format: e.g. phy_level = info

#

# In the same way, packet hex dumps can be limited for each level.

# "all_hex_limit" sets the hex limit for all layers unless otherwise

# configured.

# Format: e.g. phy_hex_limit = 32

#

# Logging layers: rf, phy, mac, rlc, pdcp, rrc, nas, gw, usim, stack, all

# Logging levels: debug, info, warning, error, none

#

# filename: File path to use for log output. Can be set to stdout

# to print logs to standard output

# file_max_size: Maximum file size (in kilobytes). When passed, multiple files are created.

# If set to negative, a single log file will be created.

#####################################################################

[log]

all_level = error

phy_lib_level = none

all_hex_limit = 32

filename = /tmp/ue.log

file_max_size = -1

#####################################################################

# USIM configuration

#

# mode: USIM mode (soft/pcsc)

# algo: Authentication algorithm (xor/milenage)

# op/opc: 128-bit Operator Variant Algorithm Configuration Field (hex)

# - Specify either op or opc (only used in milenage)

# k: 128-bit subscriber key (hex)

# imsi: 15 digit International Mobile Subscriber Identity

# imei: 15 digit International Mobile Station Equipment Identity

# pin: PIN in case real SIM card is used

# reader: Specify card reader by its name as listed by 'pcsc_scan'. If empty, try all available readers.

#####################################################################

[usim]

#mode = soft

#algo = xor

opc = E734F8734007D6C5CE7A0508809E7E9C

k = 8BAF473F2F8FD09487CCCBD7097C6862

imsi = 901700100001111

imei = 353490069873319

#reader =

#pin = 1234

#####################################################################

# RRC configuration

#

# ue_category: Sets UE category (range 1-5). Default: 4

# release: UE Release (8 to 15)

# feature_group: Hex value of the featureGroupIndicators field in the

# UECapabilityInformation message. Default 0xe6041000

# mbms_service_id: MBMS service id for autostarting MBMS reception

# (default -1 means disabled)

# mbms_service_port: Port of the MBMS service

# nr_measurement_pci: NR PCI for the simulated NR measurement. Default: 500

# nr_short_sn_support: Announce PDCP short SN support. Default: true

#####################################################################

[rrc]

#ue_category = 4

#release = 15

#feature_group = 0xe6041000

#mbms_service_id = -1

#mbms_service_port = 4321

#####################################################################

# NAS configuration

#

# apn: Set Access Point Name (APN)

# apn_protocol: Set APN protocol (IPv4, IPv6 or IPv4v6)

# user: Username for CHAP authentication

# pass: Password for CHAP authentication

# force_imsi_attach: Whether to always perform an IMSI attach

# eia: List of integrity algorithms included in UE capabilities

# Supported: 1 - Snow3G, 2 - AES

# eea: List of ciphering algorithms included in UE capabilities

# Supported: 0 - NULL, 1 - Snow3G, 2 - AES

#####################################################################

[nas]

apn = idt.apn

apn_protocol = ipv4

#user = srsuser

#pass = srspass

#force_imsi_attach = false

#eia = 1,2

#eea = 0,1,2

#####################################################################

# GW configuration

#

# netns: Network namespace to create TUN device. Default: empty

# ip_devname: Name of the tun_srsue device. Default: tun_srsue

# ip_netmask: Netmask of the tun_srsue device. Default: 255.255.255.0

#####################################################################

[gw]

#netns =

ip_devname = tun_srsue

ip_netmask = 255.255.255.0

#####################################################################

# GUI configuration

#

# Simple GUI displaying PDSCH constellation and channel freq response.

# (Requires building with srsGUI)

# enable: Enable the graphical interface (true/false)

#####################################################################

[gui]

enable = false

#####################################################################

# Channel emulator options: