---

title: Virtual Productions Pt 2

description: We are researching how remote collaboration with 3D avatars saves time and democratizes content creation further with real-time animation capabilities using only consumer grade hardware.

image: https://xrdevlog.com/img/studios.jpg

robots: index, follow

lang: en

dir: ltr

breaks: true

disqus: xrdevlog

---

# Virtual Productions Part 2

> Link to part 1: https://xrdevlog.com/podcast.html

###### tags: `devlog` `vrchat` `unity` `webvr`

We're building a complex of real-time animation studios inside free multi-user virtual reality platforms. Part of our research is about how networked avatars for remote collaboration save time in the production process and further democratize content creation.

The bigger vision is to completely virtualize the physical soundstage itself and build a portal hub of these worlds relevant to productions (soundstages, galleries, avatar change rooms, studios, etc).

Adoption of this technology in bigger studios is limited because many people worry that the real-time process will automate them out of a job. [For indies](https://nofilmschool.com/2018/03/virtual-production-indie-filmmakers), however, there has never been a more opportune moment: the playing field is leveling at an accelerating rate.

> Overall, it's the application of real-time animation in a VR environment that has the most potentially disruptive effect on Hollywood, since it can be used to create a virtual studio to create TV shows, training videos, and so forth. [Source](https://www.inc.com/geoffrey-james/this-new-tech-will-spawn-billionaires-almost-nobody-sees-it-coming.html)

---

## Podcast Studio

Discussion around the "Virtual Economy" has been bubbling in various communities recently. VRChat recently posted a new role, [Virtual Economy Manager](https://vrchat.com/careers/virtual-economy), to lead the development of VRC's economy, currency, and marketplace. That's a pretty big deal for the industry, since VRChat is the largest social VR platform by the numbers. The [M3 group](https://github.com/M3-org/proposals) has also been discussing the XR economy and prototyping pieces along the way.

Because this topic interests so many people in different communities and has deep implications for the future of platforms, an M3 member took it upon themselves to [organize a time and place](https://github.com/M3-org/proposals/issues/6) to facilitate discussion in an engaging way so that it can be unpacked and shared.

You may notice that this is the same podcast studio from [part 1](https://i.imgur.com/AMcPA35.jpg) but with some changes to fit a panel style production:

---

### Pre-rehearsal

The day before rehearsal we gathered to bug test the studio, adjust cameras, finalize decorations, and get a feel for how everything will go.

---

### Rehearsal

The day before the film shoot, all but one of the panelists showed up to meet and greet, go over the topics and show format, and check out the studio. New VRChat users can usually expect to spend the first 20 minutes adjusting to the environment and figuring out the controls or any issues they may have.

This particular art on the wall is a tokenized piece by [Josie](https://josie.io) who ships AR updates to her pieces through the app [Artivive](https://artivive.com/).

During rehearsal we also planned to pick out avatars for all the participants, or at least learn how to in case some were still undecided.

---

### Film Day

After 2 weeks of planning it was time to film the actual event. An avatar of one of the participants, [Bai](https://twitter.com/bai0), was created using a 3D scan of himself, then accessorized with a hat and sunglasses. We launched a private invite-only link into the studio and waited to gather everyone.

We recorded about 100 minutes of deep discussion on virtual economy, productions, NFTs, and open source XR with the camera view and green screen of the panelists as well.

The producers chose to record, not stream, the event so that we could add a proper intro/outro to the production.

---

## Final Video

<iframe width="100%" height="400" src="https://www.youtube.com/embed/WAhPut6YKdE" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

Write-up and notes: https://m3-org.github.io/research/panel.html

---

## WebVR archives

Video was taken of the room and processed into a photogrammetry scan.

The glTF model of the studio was optimized and exported from Blender to [Vesta](https://vesta.janusvr.com) to build the WebVR archive. Building the scene in Janus took 5 minutes, after which the green screen video was placed behind the table.

From Janus we can export the static HTML to a WebVR-compatible version like JanusWeb or, most recently, A-Frame. With JanusWeb it took just a small modification to `blend_src` and `blend_dest` to make the green transparent. Afterwards, the results speak for themselves:

Demo link coming soon!
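The exact `blend_src` and `blend_dest` values we used aren't recorded here. For anyone unfamiliar with keying, here's a minimal Python sketch of the general idea of making green transparent. Note that it swaps in a simple per-pixel chroma key rather than the JanusWeb blend-mode approach, and the function names and the threshold of 60 are assumptions for illustration only.

```python
def chroma_key_alpha(r, g, b, threshold=60):
    """Return alpha 0 (transparent) when green clearly dominates, else 255 (opaque).

    r, g, b are 0-255 channel values. threshold is a hypothetical tuning value.
    """
    dominance = g - max(r, b)
    return 0 if dominance > threshold else 255


def key_pixels(pixels, threshold=60):
    """Turn (r, g, b) tuples into (r, g, b, a) tuples with the green keyed out."""
    return [(r, g, b, chroma_key_alpha(r, g, b, threshold)) for r, g, b in pixels]


# A pure green-screen pixel followed by a skin-tone pixel.
keyed = key_pixels([(0, 255, 0), (200, 180, 160)])
```

A hard cutoff like this tends to leave rough edges around hair and hands, so a real pipeline would likely want a soft falloff around the threshold.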

---

## Photo Studio

This studio built by [GM3](https://gm3.github.io/devportfolio/) features a sophisticated control panel to control lighting, colors, 9 cameras and a camera crane with object tracking.

**Update**: The core system of the Photo Studio is now open source on GitHub! https://github.com/gm3/virtualproduction-vrchat

The audio in the studio is set to non-positional so that everyone can hear each other from anywhere in the studio. This way we can capture high-quality audio from the world and coordinate with each other as well.
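To illustrate why non-positional audio matters on a large set, here's a rough Python sketch comparing the two modes. The linear falloff and the `near_m`/`far_m` distances are assumptions made up for the example; they aren't VRChat's actual audio settings.

```python
def voice_gain(distance_m, positional, near_m=1.0, far_m=25.0):
    """Return a 0.0-1.0 volume multiplier for a speaker distance_m metres away.

    near_m and far_m are hypothetical falloff distances, not platform values.
    """
    if not positional:
        return 1.0  # non-positional: full volume everywhere in the world
    if distance_m <= near_m:
        return 1.0
    if distance_m >= far_m:
        return 0.0  # positional: inaudible past the far distance
    # Linear fade between the near and far distances.
    return 1.0 - (distance_m - near_m) / (far_m - near_m)
```

With these assumed numbers, a camera operator 30 metres from the panel would hear nothing in positional mode but everything in non-positional mode.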

Pressing `F` in the world fullscreens the current camera view to desktop. From here footage is simply recorded/streamed using software like [OBS](https://obsproject.com/).

The camera crane has seating and a control panel onboard to operate with.

The screen shows the currently focused camera (one at a time). One can move the entire crane like a vehicle while everyone is sitting on it. There's also a button to turn the camera crane invisible if need be.

Those on the set also have a live preview of the focused camera so that they can get an idea of what the producers see.
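The "one focused camera at a time" behaviour can be pictured with a small Python sketch. This is only a conceptual model with hypothetical names (`CameraSwitcher`, `cam1` to `cam9`); the real studio is built in Unity, and this isn't GM3's implementation.

```python
class CameraSwitcher:
    """Tracks which one of several named cameras is currently focused."""

    def __init__(self, camera_names):
        self._names = list(camera_names)
        if not self._names:
            raise ValueError("at least one camera is required")
        self._index = 0  # the first camera starts focused

    @property
    def focused(self):
        return self._names[self._index]

    def focus(self, name):
        """Focus a camera by name; raises ValueError for an unknown name."""
        self._index = self._names.index(name)
        return self.focused

    def next(self):
        """Cycle to the following camera, wrapping around after the last one."""
        self._index = (self._index + 1) % len(self._names)
        return self.focused


# Nine cameras with hypothetical labels cam1..cam9.
switcher = CameraSwitcher([f"cam{i}" for i in range(1, 10)])
```

Because there's a single focused camera, the control panel screen, the on-set preview, and the fullscreen desktop view can all show the same feed.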

---


## Set Design

> Set dressing is a term that comes from theater and film and entails decorating a particular set with curtains and furniture, filling shelves and generally making it look real and lived-in. In the world of animated movies and video games, you must accomplish the same tasks with your virtual sets. [Source](https://www.pluralsight.com/blog/film-games/dress-importance-set-dressing)

### VRChat

Objects given colliders in Unity can also be set to grabbable. In the examples below you can see a person in VR decorating the set.

World link: https://vrchat.com/home/launch?worldId=wrld_b8dc1a6a-4b46-4b06-a3ed-7b0394060db5&instanceId=96079

The main issue, however, is that the changes are not persistent. If a user logs off, crashes, or visits another world, then the set and all its changes will be reset.

### JanusVR

There are many big advantages to using JanusVR for set design and decorating.

- The clients and networking servers are completely [open source](https://github.com/janusvr)

- Drag and drop models, images, audio from 2D web or file system

- Real-time and collaborative with voice and text chat communication built in

- Export scene as HTML/JSON with support for Janus and A-Frame markup

- Persistence of content and version control with services like Etherpad and GitHub

Janus brings the social layer closer to the development environment in a way where there is little to no distinction. To compare, **it is as if VRChat and Unity3D were one and the same**.

Here's a sped up version of 3 people set dressing a virtual store using low-poly assets.

The results can then be saved as HTML and imported into a number of other development environments such as Unity or Blender for further work using [Janus Tools](https://github.com/janusvr/janus-tools). Not only does Janus accelerate the workflow with avatar embodied collaboration, it's much more fun to develop this way too! The fun factor combined with a no-code approach is powerful for onboarding.

---

## Street Interviews

Creator Kaban-Chan has allowed a newscaster avatar with a shoulder mounted camera and microphone to be cloned. We're using it to capture street-style interviews with human users dressed as avatars.

Even though the camera is a prop, it has a psychological effect on users, who compose themselves differently in front of it.

Also, the effect of having an avatar (mask) gives the person being interviewed more freedom to be honest in their responses.

---

## Workflows

Read this intro guide to virtual production workflows and terminology: **https://www.foundry.com/trends/vr-ar-mr/virtual-production-workflows**

### Pitchvis

Pitchvis is used to visualize a sequence or idea from a script prior to production. Similar to a rough draft or trailer of a previs, the purpose is to easily communicate your vision to help get your project funded or greenlit by investors or production companies.

During this process writers and directors turn their imaginations inside out to provide imagery, stylistic choices, mood, and core story concepts of the movie or game. Sometimes there's no budget for a trailer so producers might mash together clips from other sources:

> [Rip-O-Matic](http://www.emergingscreenwritersurvivalguide.com/whats-rip-o-matic-need-one/): A very rough rendition of a proposed ad composed of images and sounds borrowed (ripped-off) from other commercials or broadcast materials. [Source](https://www.oxfordreference.com/view/10.1093/oi/authority.20110803100422257)

Pitchvis benefits from a hacker's mindset to transfer the vision from imagination to the viewer while on a shoestring budget. Here are some methods we're exploring to quickly prototype:

#### Environments

There are millions of environments from video games to consider and over 50,000 user created virtual worlds that are live in VRChat that a camera crew can visit and explore.

[Meshroom](https://github.com/alicevision/meshroom) is open source photogrammetry software for 3D scanning physical and virtual locations. All it takes is 1-2 minutes of video, dumped to frames, to generate a high quality model.

See tutorials: https://sketchfab.com/blogs/community/tutorial-meshroom-for-beginners/
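For the "dump the frames" step, one possible approach is to let `ffmpeg` sample the video at a fixed rate. The Python sketch below only builds the command; using `ffmpeg` at all, the 2 frames per second rate, and the file names are assumptions for illustration rather than the exact steps we followed.

```python
import shlex


def ffmpeg_frame_dump_args(video_path, out_dir, fps=2):
    """Build an ffmpeg command that saves `fps` JPEG frames per second of video.

    out_dir must already exist; ffmpeg will not create it.
    """
    pattern = f"{out_dir}/frame_%05d.jpg"
    return [
        "ffmpeg",
        "-i", video_path,     # input video of the location
        "-vf", f"fps={fps}",  # sample a fixed number of frames per second
        "-qscale:v", "2",     # high JPEG quality helps feature matching
        pattern,
    ]


def estimated_frame_count(duration_seconds, fps=2):
    """Rough number of frames the dump will produce."""
    return int(duration_seconds * fps)


# Hypothetical file names; print the command so it can be pasted into a shell.
command = shlex.join(ffmpeg_frame_dump_args("studio_walkthrough.mp4", "frames"))
print(command)
```

At the assumed 2 frames per second, a 90 second walkthrough gives about 180 images to import into Meshroom. The command can also be run directly with `subprocess.run`.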

Other affordable scanning equipment:

- Asus Zenfone AR (buy used only)

- Structure Sensor https://structure.io/structure-sensor/mark-ii (pre-order)

- Matterport https://matterport.com/

After the mesh export of a location, we can stylize using style transfer to match the mood.

<iframe width="100%" height="400" src="https://www.youtube.com/embed/j6NBrDjgN9k" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

#### Avatars

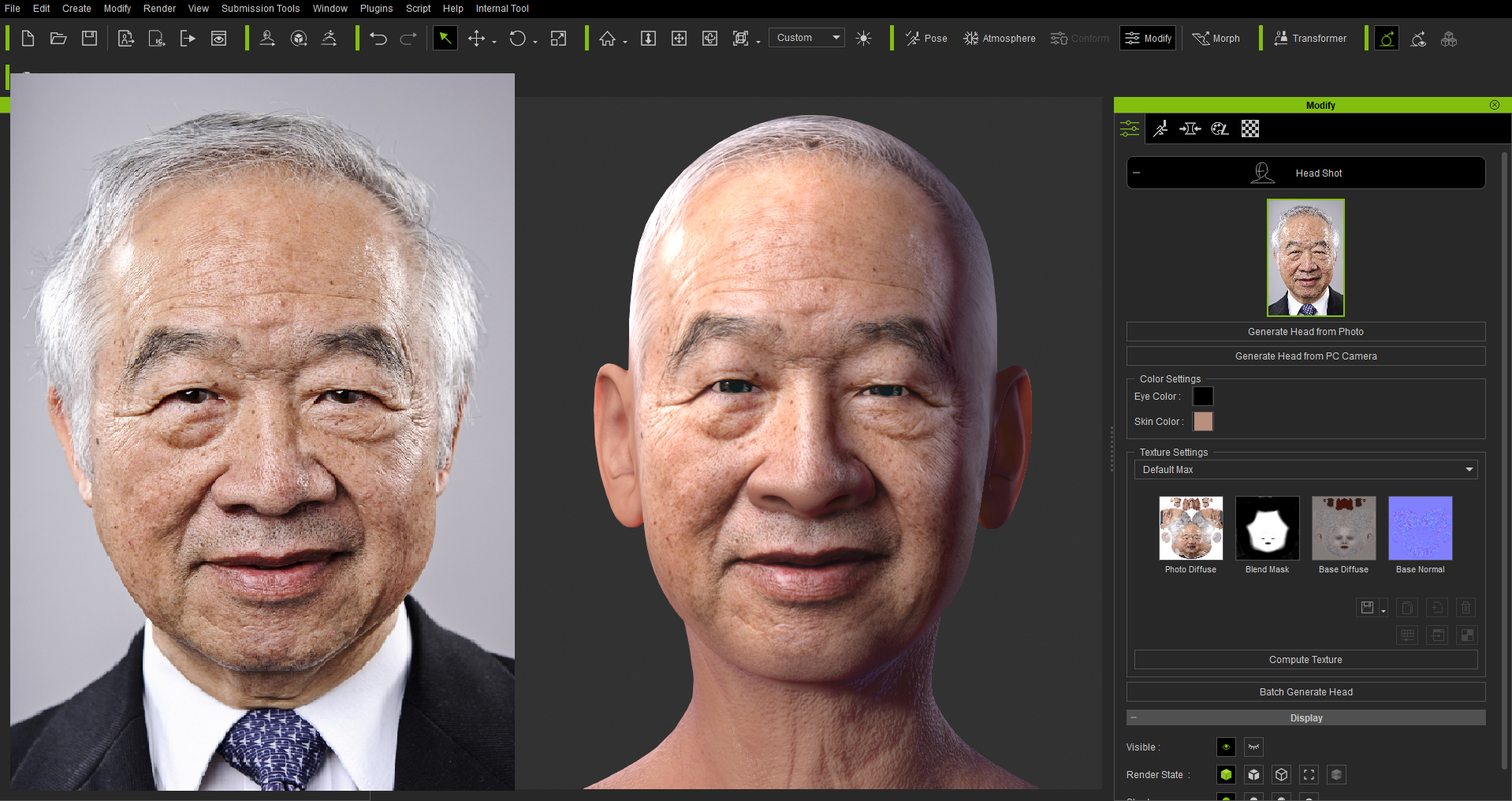

3D face reconstruction with [**vrn**](https://github.com/AaronJackson/vrn) (open source MIT) or [Headshot](https://blog.reallusion.com/2019/07/29/reallusion-unveils-headshot-ai-iclone-unreal-live-link-and-new-digital-human-shader-at-siggraph-2019/) (exports full-body model) is useful when creating an avatar that carries the likeness of your actors to import into [VRChat](https://vrchat.com).

Headshot is due to be released in Q4 2019 and will be bundled with [Character Creator 3](https://www.reallusion.com/store/product.html?l=1&p=cc).

AR face filters like [Face-Swap](https://github.com/deepfakes/faceswap) (GPL licensed) and [Lens Studio](https://lensstudio.snapchat.com/) can also be useful when you have clips you want to use but need to transfer the actor's face onto them to keep consistency with the trailer.

<iframe width="100%" height="400" src="https://www.youtube.com/embed/r1jng79a5xc" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

### Animation

Our current animation studio is based in [VRChat](https://vrchat.com/developer-faq) which has support for full body avatars with lip sync, eye tracking/blinking, and complete range of motion. These are human actors:

[Everybody Dance Now](https://carolineec.github.io/everybody_dance_now/) is a deep learning approach to motion transfer. Comes with no code.

---

### Previs

Previs is like producing a movie before actually making the movie so that the director and everyone else involved in a production can come to an agreement on what they'd like to do.

<iframe width="100%" height="400" src="https://www.youtube.com/embed/CHdzXECbH4w" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

See: http://thethirdfloorinc.com/what-we-do/film-tv/virtual-production/simulcam/#previs

> "Previs is getting closer and closer to final-level quality every year with the new advancements in the technology and the software." [Source](https://cinefex.com/blog/how-real-time-technology-is-making-better-movies/)

### Techvis

Hand in hand with previs is techvis – the process of technically planning out how to achieve various shots in production.

---

## Conclusion

The industry is now approaching a tipping point where real-time technology can produce final pixel quality during previs and allow digital producers to rapidly iterate VFX instantly rather than wait for hours or days for a render.

See: https://cinefex.com/blog/how-real-time-technology-is-making-better-movies/

> The way I see it, these tools bring the freedom we enjoy in game development to the world of filmmaking. They give directors and cinematographers a way to have more control, iterate on their creative vision, and nail every possible aspect of their shot. Creators can experiment easily and really discover the best version out of trying many alternatives. Without a real-time 3D platform, there is no way to do this. [Source](https://blog.siggraph.org/2019/03/an-irresistible-way-to-make-films-using-real-time-technology-for-book-of-the-dead.html/)

Many of the top virtual production tools available to consumers, such as [Cine Tracer](https://store.steampowered.com/app/904960/Cine_Tracer/), do not yet support multiplayer or VR mode. The cost of IK trackers and VR gear has come down drastically in recent times: $1200 will get you a full-body tracking system in your living room. Combining these with virtual camera systems, we have discovered effective workflows that can streamline previs by collaborating with remote actors in real time on virtual sets.

Framestore’s chief creative officer Tim Webber excitedly calls virtual production “an opportunity to redesign the whole process of filmmaking.” Once final pixel quality can be achieved in real time, the effects on filmmaking are sure to be quite revolutionary.

> “Our quality of previs has evolved over the years so much to the point where some directors now are like, ‘Wow, this is looking like a final piece of production,’” observed Brad Alexander, previs supervisor at Halon. “It’s getting there; we’re starting to hit a bleeding edge of the quality matching to a final of an animated feature.” [Source](https://cinefex.com/blog/how-real-time-technology-is-making-better-movies/)

It's predicted that in the next 10 years Hollywood will become decentralized as the next generation of directors will have the tools to create without a geo-located industry. The social layer to virtual productions will have a compounding effect on the future of storytelling and content creation. I'm interested in how this technology will continue to evolve as the convergence of film, gaming, and web continues to shape and democratize the playing field.

---

### Links

- https://www.unrealengine.com/en-US/programs/virtual-production

- https://www.unrealengine.com/en-US/blog/virtual-production-all-the-way-fast-creative-filmmaking-with-mr-factory

- https://unity.com/solutions/film-animation-cinematics

- https://cinefex.com/blog/how-real-time-technology-is-making-better-movies/

- https://www.inc.com/geoffrey-james/this-new-tech-will-spawn-billionaires-almost-nobody-sees-it-coming.html

- https://www.reallusion.com/iclone/

- https://janusvr.github.io/guide/#/

- https://medium.com/@alfredos/beyond-green-screens-sharing-a-design-vision-for-showing-people-inside-ar-vr-creations-92b9eb4dc33e

- https://hackmd.io/@xr/webvrchat

#### To-do List

- [x] Film the panel

- [x] Export podcast studio to glTF 2.0

- [x] Compose green screen video in WebVR space

- [ ] Record street interviews

- [ ] Edit panel video