# 當LLM的觸角延伸到表格資料,有機會一統江湖嗎?

表格資料(Tabular data)是生活中常見的資料類型,在金融、醫療、製造業領域都很常見,相信也是許多人踏入機器學習領域的第一份資料。十幾年來,得益於半導體製程的進步,深度學習蓬勃發展,在電腦視覺、自然語言處理的領域不斷突破;然而對於表格資料,當今的深度學習模型仍難以勝過XGBoost、LightGBM這類梯度提升樹(Gradient-boosted trees)進行集成(Ensemble)後的模型,在[Kaggle](https://www.kaggle.com)或[T-Brain](https://tbrain.trendmicro.com.tw)這類的競賽平台,常常可以發現這個現象,這點競賽常客應該格外地有感觸。

## TabLLM

隨著大型語言模型(Large Language Model, LLM)在各領域取得的成功,資料科學家也開始思考,我們能不能將LLM背後驚人的知識量,應用在表格資料的任務上呢?「TabLLM: Few-shot Classification of Tabular Data with Large Language Models」實現了這個想法,並在表格資料的分類任務上取得有趣的成果。

### 整體架構

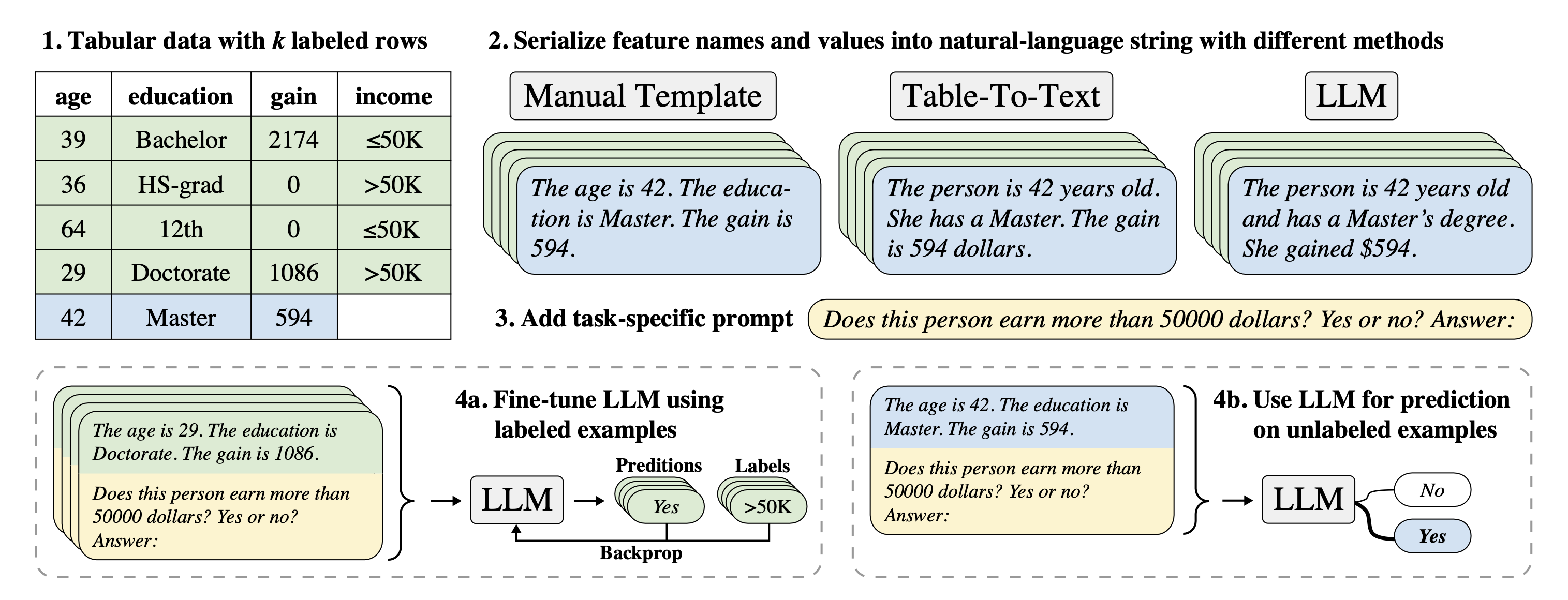

▲ 圖1:TabLLM整體架構圖,圖取自Hegselmann et al. (2023)。

圖1描述了TabLLM的幾個重要步驟,大致上是先將表格資料先分為有標籤和無標籤,對於*k*個有標籤的樣本,先將其特徵值部分轉換為類似於自然語言的描述,再搭配特定格式的提示詞(Prompt)輸入LLM來進行微調(Fine-tune),最後就能以微調後的LLM來針對無標籤資料進行預測。

### 不同的Serialization方法

Serialization(國教院中譯為串列化)是指將表格資料轉為近似於自然語言的處理過程,也是TabLLM探討的重點。TabLLM進行了一項有趣的實驗,他們使用了以下9種不同的Serialization來測試對結果的影響:

1. List Template: 直接條列特徵項目及其值,且保持固定特徵順序

2. Text Template: 用接近口語的方式逐項說明特徵及其值,如圖1裡面「Manual Template」下方的文字

3. Text T0: 將特徵項目、特徵值配對,再使用T0並搭配提示詞「“Write this information as a sentence:”」來得到自然語言的描述

4. Text GPT-3: 與Text T0類似,但因為GPT-3可直接將條列好的特徵轉換為自然語言的描述,因此只需要輔以提示詞“Rewrite all list items in the input as a natural text.”就能得到自然語言輸出

5. Table-To-Text: 使用已針對Table-To-Text任務微調後的語言模型進行轉換

除了上述5種,還基於以上的方法做了進階的嘗試:

6. List Only Values: 刪去特徵名稱,目的是觀察特徵名稱是否影響了結果

7. List Permuted Names: 擾亂特徵名稱,可以觀察特徵名稱與值之間的關係是否重要

8. List Permuted Values: 擾亂特徵值,觀察模型是否使用了數值型特徵的細顆粒度(Fine-grained)資訊

9. List Short: 將List Template限制在最多只能使用10個特徵,超過的部分直接捨去,這一項的目的觀察受限的資訊量是否會影響模型的判斷

提示詞部分,TabLLM採用如圖1所示的方式來結合Serialization後的字串,然而針對不同資料集,會因資料特性以及為了符合語言模型的輸入限制,而有一些細微的差異。

### 資料集與語言模型

TabLLM總共進行了兩個階段的分類任務,對每一個資料集的分類結果計算[AUC](https://developers.google.com/machine-learning/crash-course/classification/roc-and-auc?hl=zh-tw)。

* 第一階段:對[Bank](https://archive.ics.uci.edu/dataset/222/bank+marketing#)、[Diabetes](https://www.kaggle.com/datasets/uciml/pima-indians-diabetes-database)以及其他總共9個公開資料集進行分類任務,其中也有兩個來自[Kaggle](https://www.kaggle.com)平台,皆為二類別或多類別

* 第二階段:進行二類別分類,共有End of Life、Surgical Procedure以及Likelihood of Hospitalization,這些資料由美國的某壽險公司提供,並經去識別化處理

TabLLM使用[Hugging Face](https://huggingface.co)上的[T0](https://huggingface.co/bigscience/T0) (`bigscience/T0pp`),並以[T-few](https://arxiv.org/abs/2205.05638)進行微調。T0擁有110億參數,token限制為1,024,在原文中研究團隊也嘗試以[T0_3B]((https://huggingface.co/bigscience/T0_3B)) (`bigscience/T0_3B`)作為語言模型來進行實驗,結果顯示效能相較於T0略為降低。

## 實驗結果

TabLLM以兩個圖表說明重要的實驗結果,我們從以下幾個面向來切入:

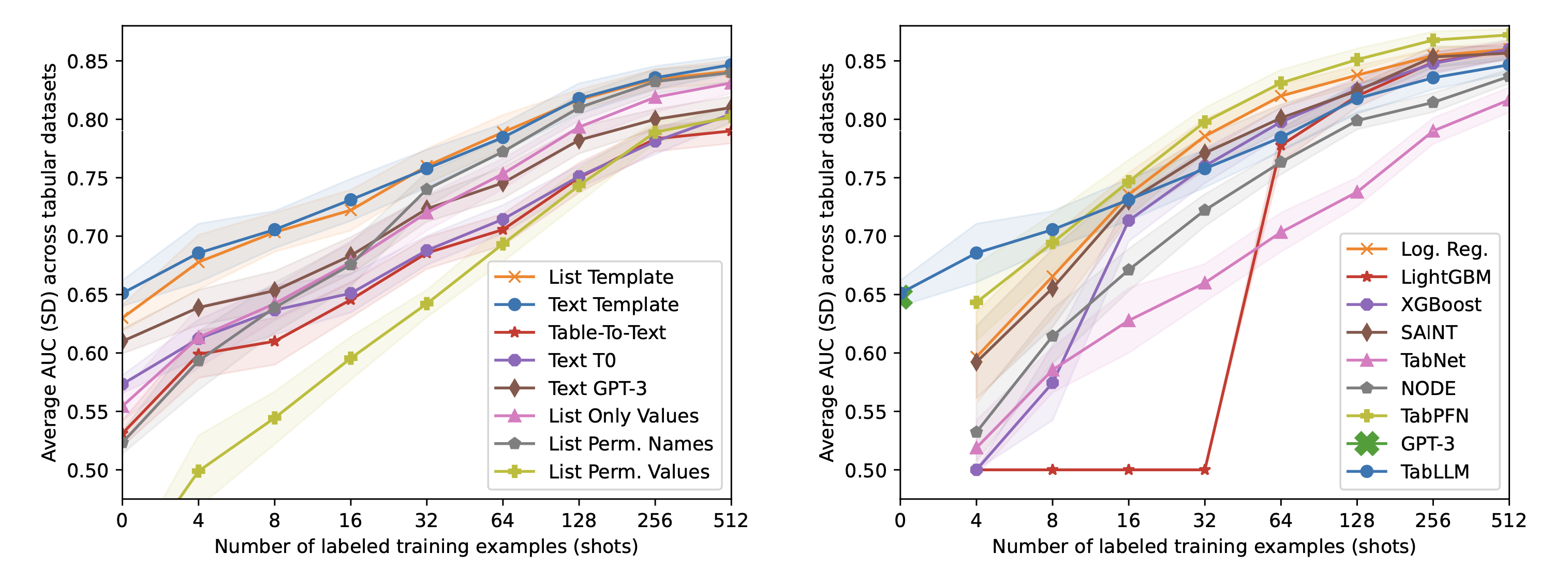

▲ 圖2:左圖是TabLLM在9個資料集的實驗結果;右圖則是與不同模型的效能比較,橫軸皆為使用的資料筆數,縱軸為AUC平均值,並以網底顯示標準差。圖取自Hegselmann et al. (2023)。

### Serialization對分類結果的影響

透過圖2我們發現,當樣本數約略在0到16時,Text(以接近口語的方式逐項描述特徵)比List(直接將特徵條列)還要好一點,但隨著樣本數繼續增加,變得幾乎沒有差距。當使用語言模型,搭配適當的提示詞進行Serialization,AUC大致上與模型參數量有相同趨勢,在3個使用的模型中,參數量分別為:GPT-3有1,750億,T0有110億以及BLOOM table-to-text有5.6億。有趣的是,語言模型似乎對某些樣本產生了幻覺,例如在[Car](https://archive.ics.uci.edu/dataset/19/car+evaluation)資料集裡,GPT-3擅自替某一筆樣本加上了"this car is a good choice"的描述,對同一筆樣本,T0則是在開頭直接說了"The refrigerator has three doors and is very cheap"。這些多餘或甚至不相干的資訊,可能多少影響了分類結果,而我們也的確發現,用語言模型進行Serialization的AUC不如直接使用Text或List。

### 對特徵動手腳後,LLM會被影響嗎?

如果我們刻意擾亂特徵項目與值之間的關聯,語言模型還能正確判斷嗎?為了測試語言模型是否確實讀懂特徵描述,作者設計兩種擾亂的方法,一是List Permuted Names,二是

List Permuted Values,前者調換了特徵名稱,後者則擾亂了特徵值。圖2顯示如果我們將名稱調換,和原先的List比起來,在16個樣本數以下的實驗,AUC都有明顯的下降,而當特徵值被擾亂,我們就看到更大的下滑幅度。這個現象透露了比起名稱,模型更在乎特徵的值。

### 和其他模型的比較

圖2右側展示了TabLLM與其他模型在分類任務的AUC比較,TabLLM部分是以Text作為Serialization方法。在zero-shot的測試中,T0能夠與多出十餘倍參數量的GPT-3並駕齊驅,而跟LightGBM、XGBoost比起來,TabLLM只有在樣本數低於64時較好,在樣本數4或8時則有明顯差距。比較意外的是,羅吉斯迴歸只輸給以Transformer為基礎的[TabPFN](https://github.com/automl/TabPFN),同時在16個樣本數以上的測試完勝TabLLM。

TabLLM憑藉大型語言模型擁有的先備知識,在部分資料集以few-shot甚至是zero-shot超越目前對表格資料較為擅長的模型;然而當樣本數持續增加,XGBoost以及LightGBM依然能夠超越TabLLM。考量到光是不同Serialization就造成明顯的效能差異,如果再針對提示詞進行調整,的確有機會再提升效能,但如果又將微調的運算成本、硬體需求納入考量,我認為LightGBM或XGBoost這類較「輕量」的模型仍會是首選。

### LLM的先備知識是否運用在判斷樣本上?

在[Income](https://www.kaggle.com/datasets/wenruliu/adult-income-dataset)資料集的分類結果,TabLLM於zero-shot得到了0.84的AUC,且XGBoost一直到使用所有樣本進行訓練時才超越TabLLM,因此作者試著比較TabLLM以及羅吉斯迴歸的特徵權重,結果如表1。兩模型大致上對特徵權重有類似的排序,在TabLLM排在前5的特徵(可以看到多為教育程度特徵),全出現在羅吉斯迴歸的前7名中,然而羅吉斯迴歸對教育程度的排序似乎比較合理一點。此外,Income有幾項特徵與金錢有關,由於T0是在2021年訓練,而Income建立於1994年,如果沒有針對這27年間的通貨膨脹數據進行調整,所得到的AUC僅有0.80。

以上幾項實驗,TabLLM的特徵權重是以原始特徵作為輸入、預測結果作為標籤,訓練出一個羅吉斯迴歸模型,接著以該模型的特徵權重代表TabLLM。

▼ 表1:TabLLM以及羅吉斯迴歸模型的特徵權重,僅列出排名前五以及末五名。取自Hegselmann et al. (2023)。

接著是健康醫療類型的資料集End of Life (EoL),這個資料集的目的是預測樣本的死亡率,每個樣本都有記載疾病史與基本資料作為特徵。將TabLLM的特徵權重和[相對風險(Relative risk)](https://wd.vghtpe.gov.tw/fdc/Fpage.action?muid=7565&fid=7919)對照後,如表2,發現TabLLM排名前5的特徵,其相對風險值皆偏高(以罹患疾病為例,相對風險的95%信賴區間如果都高於1,表示暴露組罹患疾病的風險顯著高於非暴露組;若都低於1則相反),大致上有權重愈高的特徵相對風險也愈高的趨勢。留意表中的性別特徵,無論是TabLLM與相對風險的數值,都一致認為性別對結果有不同的影響。

▼ 表2:TabLLM的特徵權重與Relative risk (RR)。取自Hegselmann et al. (2023)。

## 結論

TabLLM成功運用語言模型具備的領域知識,降低了所需要的訓練資料量,甚至達到zero-shot;然而,在模型訓練與測試資料的年代相差過大時,確實會對降低模型的zero-shot效能,未來在實作上需要留意。不同Serialization方式的測試結果,說明了輸入文字的些微變化,會對語言模型的輸出有一定程度的影響,然而實驗結果也顯示當訓練資料數量上升,彼此間的差異就逐漸降低。另一方面,大型語言模型可能因為訓練資料而帶有些微偏見或刻板印象,當被用來判斷較為敏感的資料,勢必得謹慎的處理。從TabLLM我們看到大型語言模型的知識,也能被用在表格資料上,隨著語言模型能力日漸提升,可以期待未來的發展。

## 參考資料

Hegselmann, S., Buendia, A., Lang, H., Agrawal, M., Jiang, X., & Sontag, D. (2023, April). Tabllm: Few-shot classification of tabular data with large language models. In International Conference on Artificial Intelligence and Statistics (pp. 5549-5581). PMLR.

Chen, K. Y., Chiang, P. H., Chou, H. R., Chen, T. W., & Chang, T. H. (2023). Trompt: Towards a Better Deep Neural Network for Tabular Data. arXiv preprint arXiv:2305.18446.

Sign in with Wallet

Sign in with Wallet