<!--

Docs for making Markdown slide deck on HackMD using Revealjs

https://hackmd.io/s/how-to-create-slide-deck

https://revealjs.com

-->

#### :yin_yang: zen3geo: Guiding :earth_asia: data on its path to enlightenment

<small> [Pangeo Machine Learning working group presentation <br> Monday 7 Nov 2022, 17:00-17:15 (UTC)](https://discourse.pangeo.io/t/monday-november-07-2022-machine-learning-working-group-presentation-zen3geo-guiding-earth-observation-data-on-its-path-to-enlightenment-by-wei-ji-leong/2883) </small>

_by **[Wei Ji Leong](https://github.com/weiji14)**_

<!-- Put the link to this slide here so people can follow -->

<small> P.S. Slides are at https://hackmd.io/@weiji14/2022zen3geo</small>

----

### Why **not** zen3geo

> [Yet another GeoML library](https://github.com/weiji14/zen3geo/discussions/70)

> It thinks [spatial is special](https://web.archive.org/web/20221013135644/https://www.linkedin.com/pulse/what-special-spatial-willy-simons)

> No data visualization tools

----

### Why zen3geo

> [Worse is better](https://www.jwz.org/doc/worse-is-better.html)

> [Simple is better than complex](https://peps.python.org/pep-0020/#the-zen-of-python)

> [Let each part do one thing and do it well](https://en.wikipedia.org/wiki/Unix_philosophy#Do_One_Thing_and_Do_It_Well)

---

### Design patterns

| | :yin_yang: [zen3geo](https://github.com/weiji14/zen3geo/tree/v0.5.0) | [torchgeo](https://github.com/microsoft/torchgeo/tree/v0.3.1) | [eo-learn](https://github.com/sentinel-hub/eo-learn/tree/v1.3.0) | [raster-vision](https://github.com/azavea/raster-vision/tree/v0.13.1) |
|--|--:|--:|--:|--:|
| [Design](https://www.brandons.me/blog/libraries-not-frameworks) | [**Lightweight** library](https://github.com/weiji14/zen3geo/blob/v0.5.0/pyproject.toml#L22-L26) | [Heavyweight library](https://github.com/microsoft/torchgeo/blob/v0.3.1/setup.cfg#L27-L66) | [Library](https://github.com/sentinel-hub/eo-learn/tree/v1.2.1#installation) / [Framework](https://github.com/sentinel-hub/eo-learn/blob/v1.2.1/setup.py#L41-L50) | [Framework](https://github.com/azavea/raster-vision/blob/master/requirements.txt#L1-L7) |
| [Paradigm](https://en.wikipedia.org/wiki/Composition_over_inheritance) | **Composition** | Inheritance | Inheritance | Chained-inheritance |
| Data model | **xarray** & geopandas | GeoDataset (numpy & shapely) | EOPatch (numpy & geopandas) | DatasetConfig (numpy & geopandas) |

<small>Relation of GeoML libraries - https://github.com/weiji14/zen3geo/discussions/70</small>

----

### Minimal core, optional extras

| `pip install ...` | Dependencies |
|:-------------------------------|---------------|
| `zen3geo` | rioxarray, torchdata |
| `zen3geo[raster]` | ... + xbatcher |
| `zen3geo[spatial]` | ... + datashader, spatialpandas |
| `zen3geo[stac]` | ... + pystac, pystac-client, stackstac |
| `zen3geo[vector]` | ... + pyogrio[geopandas] |

<small>[_Write libraries, not frameworks_](https://www.brandons.me/blog/libraries-not-frameworks)</small>

----

### Composition over Inheritance

Chaining or 'Pipe'-ing a series of operations, rather than subclassing

<small>E.g. RioXarrayReader - Given a source list of GeoTIFFs, read them into an xarray.DataArray one by one</small>

```python=
from typing import Iterator

import rioxarray
from torchdata.datapipes.iter import IterDataPipe


class RioXarrayReaderIterDataPipe(IterDataPipe):
    def __init__(self, source_datapipe, **kwargs) -> None:
        self.source_datapipe: IterDataPipe[str] = source_datapipe
        self.kwargs = kwargs

    def __iter__(self) -> Iterator:
        # Lazily open each GeoTIFF as an xarray.DataArray, one at a time
        for filename in self.source_datapipe:
            yield rioxarray.open_rasterio(filename=filename, **self.kwargs)
```

<small>I/O readers, custom processors, joiners, chippers, batchers, etc. More at https://zen3geo.rtfd.io/en/v0.5.0/api.html</small>

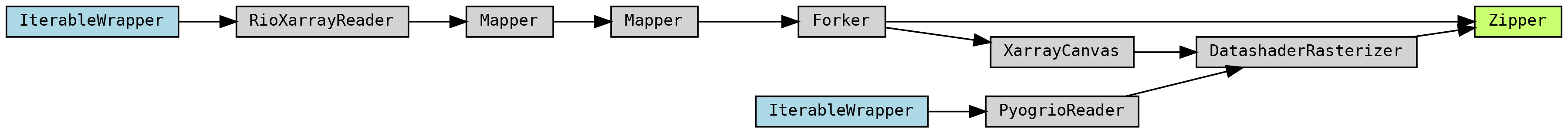

[](https://zen3geo.readthedocs.io/en/v0.5.0/vector-segmentation-masks.html#combine-and-conquer)
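
<small>The chaining idea can be sketched in plain Python. The `Pipe` class below is a hypothetical stand-in, not torchdata's `IterDataPipe`; it only illustrates how each step wraps the previous one instead of subclassing it.</small>

```python
from typing import Callable, Iterable, Iterator


class Pipe:
    """Hypothetical stand-in for a DataPipe: wraps any iterable of items."""

    def __init__(self, source: Iterable) -> None:
        self.source = source

    def __iter__(self) -> Iterator:
        return iter(self.source)

    def map(self, fn: Callable) -> "Pipe":
        # Wrap this pipe in a new one that applies fn lazily to each item
        return Pipe(fn(item) for item in self)

    def batch(self, batch_size: int) -> "Pipe":
        # Wrap this pipe in a new one that groups items into lists
        def _batches() -> Iterator[list]:
            batch = []
            for item in self:
                batch.append(item)
                if len(batch) == batch_size:
                    yield batch
                    batch = []
            if batch:  # keep the final, possibly shorter, batch
                yield batch

        return Pipe(_batches())


# Compose behaviour by chaining steps instead of subclassing a base dataset
pipe = Pipe(["a.tif", "b.tif", "c.tif"]).map(str.upper).batch(batch_size=2)
result = list(pipe)
```

<small>Each method returns a new `Pipe`, so steps can be added, removed or reordered without touching a class hierarchy.</small>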

----

### Xarray data model

Labelled multi-dimensional data arrays!

```
<xarray.DataArray (band: 1, y: 2743, x: 3538)>
[9701196 values with dtype=uint16]
Coordinates:
  * x            (x) float64 2.478e+05 2.479e+05 ... 5.307e+05 5.308e+05
  * y            (y) float64 3.146e+05 3.145e+05 ... 9.532e+04 9.524e+04
  * band         (band) int64 1
    spatial_ref  int64 0
Attributes:
    TIFFTAG_DATETIME:          2019:12:16 07:41:53
    TIFFTAG_IMAGEDESCRIPTION:  Sentinel-1A IW GRD HR L1
    TIFFTAG_SOFTWARE:          Sentinel-1 IPF 003.10
```

<small>Store multiple bands/variables, time indexes, and metadata!</small>

---

### The features you've been waiting for

| | [zen3geo](https://github.com/weiji14/zen3geo/tree/v0.5.0) | [torchgeo](https://github.com/microsoft/torchgeo/tree/v0.3.1) | [eo-learn](https://github.com/sentinel-hub/eo-learn/tree/v1.3.0) | [raster-vision](https://github.com/azavea/raster-vision/tree/v0.13.1) |
|--|--:|--:|--:|--:|
| Spatiotemporal Asset Catalogs (STAC) | [Yes](https://github.com/weiji14/zen3geo/discussions/48) | [No^](https://github.com/microsoft/torchgeo/issues/403) | No | [Yes?](https://github.com/azavea/raster-vision/pull/1243) |
| BYO custom function | [Easy](https://zen3geo.readthedocs.io/en/v0.5.0/vector-segmentation-masks.html#transform-and-visualize-raster-data) | [Hard](https://torchgeo.readthedocs.io/en/v0.3.1/tutorials/transforms.html) | [Hard](https://eo-learn.readthedocs.io/en/latest/examples/core/CoreOverview.html#EOTask) | [Hard](https://docs.rastervision.io/en/0.13/pipelines.html#rastertransformer) |

----

### Cloud-native geospatial with [STAC](https://stacspec.org)

Standards-based spatiotemporal metadata!

<small>From querying STAC APIs to stacking STAC Items,

stream data directly from cloud to compute!</small>

```mermaid
graph LR
    subgraph STAC DataPipeLine
        A["IterableWrapper (list[dict])"] --> B
        B["PySTACAPISearcher (list[pystac_client.ItemSearch])"] --> C
        C["StackstacStacker (list[xarray.DataArray])"]
    end
```

<small>More info at https://github.com/weiji14/zen3geo/discussions/48</small>

----

### Transforms as functions, not classes

```python
import numpy as np
import xarray as xr


def linear_to_decibel(dataarray: xr.DataArray) -> xr.DataArray:
    # Mask out areas with 0 so that np.log10 is not undefined
    da_linear = dataarray.where(cond=dataarray != 0)
    da_decibel = 10 * np.log10(da_linear)
    return da_decibel


# dp is an existing DataPipe that yields xr.DataArray objects
dp_decibel = dp.map(fn=linear_to_decibel)
```

<small>Do conversions in original data structure (`xarray`)

[vs](https://torchgeo.readthedocs.io/en/v0.3.1/tutorials/transforms.html)

on tensors with no labels (`torch`) via subclassing</small>

```python
import torch
from torch import nn


class LinearToDecibel(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, tensor: torch.Tensor) -> torch.Tensor:
        # Replace zeros with NaN so that torch.log10 is not undefined
        tensor_linear = tensor.where(tensor != 0, other=torch.FloatTensor([torch.nan]))
        tensor_decibel = 10 * torch.log10(input=tensor_linear)
        return tensor_decibel


dataset_decibel = DatasetClass(..., transforms=LinearToDecibel())
```
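
<small>As a quick check of the arithmetic, here is a scalar sketch of the same conversion using only the standard library. It stands in for the `xarray` version and is not part of zen3geo; the input values are made up for illustration.</small>

```python
import math


def linear_to_decibel(value: float) -> float:
    """Convert a linear value to decibels; 0 maps to NaN."""
    if value == 0:
        return math.nan  # log10(0) is undefined, so mask it out
    return 10 * math.log10(value)


# Illustrative linear values, including a zero that should be masked
linear = [0.0, 1.0, 10.0, 100.0]
decibel = list(map(linear_to_decibel, linear))
```

<small>A plain function can be passed straight to `map`, with no class or `forward` method needed.</small>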

---

### Features you never thought you needed

| | [zen3geo](https://github.com/weiji14/zen3geo/tree/v0.5.0) | [torchgeo](https://github.com/microsoft/torchgeo/tree/v0.3.1) | [eo-learn](https://github.com/sentinel-hub/eo-learn/tree/v1.3.0) | [raster-vision](https://github.com/azavea/raster-vision/tree/v0.13.1) |
|--|--:|--:|--:|--:|
| Multi-CRS without reprojection | [Yes](https://zen3geo.readthedocs.io/en/v0.5.0/chipping.html#pool-chips-into-mini-batches) | [No](https://github.com/microsoft/torchgeo/issues/278) | No? | No? |
| Multi-dimensional (beyond 2D+bands) | [Yes](https://zen3geo.readthedocs.io/en/v0.5.0/stacking.html), via xarray | No | No | No |

----

### Multiple coordinate reference systems

*without reprojecting!*

[E.g. if you have many satellite scenes spanning several UTM zones](https://zen3geo.readthedocs.io/en/v0.5.0/chipping.html#pool-chips-into-mini-batches)

```python
import torchdata
import zen3geo  # importing zen3geo makes its DataPipe methods available

# Pass in list of Sentinel-2 scenes from different UTM zones
urls = ["S2_52SFB.tif", "S2_53SNU.tif", "S2_54TWN.tif", ...]
dp = torchdata.datapipes.iter.IterableWrapper(iterable=urls)

# Read into xr.DataArray and slice into 32x32 chips
dp_rioxarray = dp.read_from_rioxarray()
dp_xbatcher = dp_rioxarray.slice_with_xbatcher(input_dims={"y": 32, "x": 32})

# Create batches of 10 chips each and shuffle the order
dp_batch = dp_xbatcher.batch(batch_size=10)
dp_shuffle = dp_batch.shuffle()
...
```

<small>Enabled by torchdata's data-agnostic Batcher iterable-style DataPipe</small>
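
<small>Why this works without reprojecting can be sketched in plain Python: a batch is just a list, so items with different CRS can sit side by side. The `batch` helper and the chip dictionaries below are hypothetical stand-ins, not torchdata's Batcher.</small>

```python
from itertools import islice
from typing import Iterable, Iterator


def batch(items: Iterable, batch_size: int) -> Iterator[list]:
    """Group items into lists of batch_size without inspecting them."""
    iterator = iter(items)
    while chunk := list(islice(iterator, batch_size)):
        yield chunk


# Hypothetical chips, each tagged with the EPSG code of its own UTM zone
chips = [
    {"name": "chip0", "crs": "EPSG:32652"},
    {"name": "chip1", "crs": "EPSG:32653"},
    {"name": "chip2", "crs": "EPSG:32654"},
    {"name": "chip3", "crs": "EPSG:32652"},
]
batches = list(batch(chips, batch_size=2))
crs_per_batch = [[chip["crs"] for chip in b] for b in batches]
```

<small>Each batch keeps its chips in their native CRS; nothing is resampled onto a common grid.</small>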

----

### Multiple dimensions

[*stack co-located data*](https://zen3geo.readthedocs.io/en/v0.5.0/stacking.html)

E.g. [time-series data](https://zen3geo.readthedocs.io/en/v0.5.0/stacking.html#sentinel-1-polsar-time-series) or multivariate climate/ocean model outputs

```
<xarray.Dataset>
Dimensions:  (time: 15, x: 491, y: 579)
Coordinates:
  * time     (time) datetime64[ns] 2022-01-30T1...
  * x        (x) float64 6.039e+05 ... 6.186e+05
  * y        (y) float64 1.624e+04 ... -1.095e+03
Data variables:
    vh       (time, y, x) float16 dask.array<chunksize=(1, 579, 491), meta=np.ndarray>
    vv       (time, y, x) float16 dask.array<chunksize=(1, 579, 491), meta=np.ndarray>
    dem      (y, x) float16 dask.array<chunksize=(579, 491), meta=np.ndarray>
Attributes:
    spec:        RasterSpec(epsg=32647, bounds=(603870, -1110, 618600, 16260)...
    crs:         epsg:32647
    transform:   | 30.00, 0.00, 603870.00|\n| 0.00,-30.00, 16260.00|\n| 0.00,...
    resolution:  30
```

<small>Enabled by xarray's rich data structure</small>

---

### Beyond zen3geo v0.5.0

| | [zen3geo](https://github.com/weiji14/zen3geo/tree/v0.5.0) | [torchgeo](https://github.com/microsoft/torchgeo/tree/v0.3.1) | [eo-learn](https://github.com/sentinel-hub/eo-learn/tree/v1.3.0) | [raster-vision](https://github.com/azavea/raster-vision/tree/v0.13.1) |
|--|--|--|--|--|
| Multi-resolution | DIY, [Yes^](https://github.com/xarray-contrib/xbatcher/issues/93) via [datatree](https://github.com/TomNicholas/datatree) | [No](https://github.com/microsoft/torchgeo/issues/74) | [Yes](https://github.com/sentinel-hub/eo-learn/blob/v1.2.1/geometry/eolearn/geometry/superpixel.py) | No |
| ML Library coupling | PyTorch or [None^](https://github.com/pytorch/data/issues/293) | PyTorch | None | PyTorch, TensorFlow (DIY) |

----

### Multiple spatiotemporal resolutions

*10m, 20m, 60m, ...*

- Handle in xbatcher via datatree - https://github.com/xarray-contrib/xbatcher/issues/93
- Allows for:
  - Super-resolution tasks
  - Multimodal sensors (Optical/SAR/Gravity/etc)

----

### From `PyTorch` to `None`

Once `torchdata` no longer depends on `pytorch`,

see https://github.com/pytorch/data/issues/293

<small>Dropping the `torch` dependency makes room for other ML libraries, e.g. cuML, TensorFlow, etc.</small>

> [Perfection is achieved,

> not when there is nothing more to add,

> but when there is nothing left to take away](https://www.goodreads.com/quotes/19905-perfection-is-achieved-not-when-there-is-nothing-more-to)

---

### Unleash your :earth_asia: data

- Install - https://pypi.org/project/zen3geo

- Discuss - https://github.com/weiji14/zen3geo/discussions

- Contribute - https://zen3geo.readthedocs.io/en/v0.5.0/CONTRIBUTING.html

> 水涨船高,泥多佛大

> <small>Rising water lifts all boats, more clay makes for a bigger statue</small>
