# Philippe Bouchet - Portfolio

---

## Presentations

- Google DSC Deep Learning workshop

## Main projects:

- MICCAI BraTS

- MICCAI iSeg

- CNN Classifier

- DeepSea: C Deep learning framework

- U-Net++ paper implementation

- GPU accelerated Mandelbrot

## Miscellaneous projects:

- Chess engine

- Web server

- Rubik’s cube engine

- Data science project

- Bash clone

- Raytracer

- Find clone

- Make clone

---

# End of year project

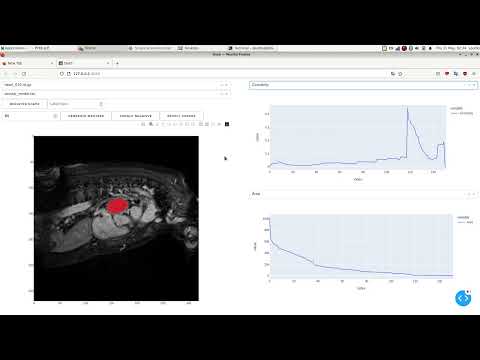

[Here is a demo](https://www.youtube.com/watch?v=bK6VoZeMIDU) of my end of year project in which I demonstrate the applications of mathematical morphology in medical image segmentation using my web UI POC:

[](https://www.youtube.com/watch?v=bK6VoZeMIDU)

# Presentations

### Google DSC Deep Learning workshop:

- **Introductory course**: I organized an introductory course to initiate Sorbonne and Paris-Saclay students to the field of deep learning. The video to my presentation can be found [here](https://www.youtube.com/watch?v=5OR9c6IZFnQ&t=15s).

- **Workshop**: In order to allow the students to understand the theoretical concepts seen in the presentation, I prepared a task to solve in a [Jupyter notebook](https://github.com/GDSC-EPITA/gdsc-deep_learning_intro-epita/blob/master/GDSC_DL_notebook.ipynb). The task is image classification on the cifar10 dataset, using TensorFlow. In this notebook, we go over:

- Design of a CNN, including:

- Optimizers (*SGD*)

- Loss functions (*Categorical crossentropy*)

- Pre-processing methods for datasets (*normalization, one-hot encoding*)

- Understanding the different metrics during training (*loss, accuracy*)

# Main projects:

### MICCAI BraTS:

- **MICCAI BraTS**: We published a paper describing our research to the MICCAI organization (*[An Efficient Cascade of U-Net-like Convolutional Neural Networks devoted to Brain Tumor Segmentation **P. Bouchet et al.**](https://www.lrde.epita.fr/dload/papers/boutry.22.miccai.pdf)*). This is the result of our AI network’s segmentation on a slice of an MRI scan:

In the top row, we can see a brain scan with four different MRI modalities (FLAIR, T1, T2, T1ce). And in the bottom row, we can see the networks prediction’s of where the brain tumor, and its sub-regions are located. From left to right, we see:

- The network’s segmentation of the whole tumor, occupying the largest surface area.

- Next we can see the tumor core, a smaller sub-region of the tumor.

- Then we have the extended tumor, which is the part of the tumor that practitioners and doctors have trouble detecting.

- Finally, we have the superposition of all three sub-regions.

Some problems were encountered during the project, one main one being RAM saturation. Storing hundreds of gigabytes of data on RAM to train the network quickly saturated the memory at our disposal, and another solution was need. Using Tensorflow's data API, we implemented an efficient data pipeline. The data was now loaded in batches, eliminating the risk of saturation.

Our network achieved an accuracy of 93%. This project was carried out in Python using, Numpy, Tensorflow, Keras, and Nibabel.

### MICCAI iSeg:

- [**MICCAI iSeg**: ](https://colab.research.google.com/drive/1a7nWvIO2qDZ1GyjIlmiSaTZV_ZLi39Ei?usp=sharing)A project I undertook that introduced me to the world of medical segmentation. The goal of this project was to create a CNN on a small dataset capable of segmentating gray matter and white matter from an MRI scan of the brain, with T1 and T2 modalities. The network was written in Python, using Numpy, Tensorflow, and Keras.

Here are the results of my network's prediction, compared to the input image's ground truth.

Here is a visual representation of the network's segmentation of a patient's brain MRI. We can see:

- The brain in light blue

- Gray matter in dark blue

- White matter in yellow

Some issues were encountered during the network's training, as the dataset is *incredibly* small (only 10 patients!), we only have a limited amount of patients to train the network with. This being the case, the network can easily stagnate, and fall into a *plateau* during gradient descent. In order to combat this, I decided to implement data augmentation in order to provide the network with an enriched dataset, thus avoiding the plateau during gradient descent.

A quick reminder of the gradient descent and plateaus:

The point of the gradient descent is to find a local minimum within the cost function, in order to tune the network's parameters.

Mathematically explained, we have $∇C = 0$, with $C$ as the cost function.

When reaching a plateau, the network ceases to learn, and remains stuck on the plateau. This is what we see in this example, with the SGD algorithm (in red). The red ball gets stuck at the saddle point, and no progress is made towards the local or global minimum. By providing more data to the network, we decrease the chances of getting stuck on a plateau, and allow the red ball to progress towards a local or global minimum.

### CNN classifier:

- [**Classifier**: ](https://www.kaggle.com/code/spurdo/navires-libre-philippe-bouchet-victor-litoux/notebook) The goal of the project was to create an accurate CNN model capable of correctly classifying different types of boats, while being limited to a small, and low quality dataset. The project was carried out using Python, Numpy, Tensorflow, Keras and Pandas. This task was achieved by using image processing concepts in order to enhance the dataset. Moreover, we performed data analysis on the dataset, here is a histogram representing the amount of images for each boat type:

We notice that the dataset is not balanced at all. *Class weights* were used to balance out the data and avoid the network being biased towards a certain type of boat.

Once the network was trained, we evaluated its performance on the testing dataset. Here is a confusion matrix. On the y axis of the matrix, we have the *expected* categorical outcome, and on the x axis, we have the actual *predicted* outcome.

The network struggles with correctly detecting *destroyers*, with a high false positive rate among this categories. This can be explained due to the fact that images of the *destroyer* are much more prevalent (*cf. histogram above*), thus biasing the network. The effects of this bias have been mitigated thanks to the use of the class weights mentioned earlier.

### DeepSea:

- [**DeepSea**: ](https://github.com/sudomane/ocr) A Deep Learning framework completely implemented from scratch in the C programming language, using only the C standard library. The framework's network implements a Multilayer Perceptron model, or a Fully Connected network. It was designed with mini-batch gradient descent, as it allows the gradient to converge quickly, yet carefully towards the local, or global minimum of the cost function. The framework also comes with an MNIST dataset API, written from scratch.

An OCR was implemented with the framework, and was tested with the MNIST hand written digits dataset on a small network with the following parameters:

- **784** input neurons

- **2** hidden layers

- **16** hidden neurons each

- **10** output neurons

- **100** epochs

- Learning rate of **0.1**

- Batch size of **64**

- **4096** training images (*out of 60000*)

The MNIST dataset consisted of:

- **60000** Training images and labels

- **10000** Testing images and labels

The OCR achieved an accuracy of 82%, while only being trained on 6.82% of the total training dataset, and tested with the entirety of the testing dataset. Here are the results, as well as the prediction values of an image picked at random:

This project allowed me to understand the vital mathematical aspect behind neural networks, providing me with a solid foundation in machine learning. The reason as to why I decided to implement a Deep Learning framework from scratch in C is because I wanted to give myself a greater challenge, as implementing it in Python, or a high level language seemed to be too easy. Moreover, by implementing it in C, I have the possibility to delve in to hyper-optimization, via CPU multithreading, or even GPU computing in order to speed up the network.

### U-Net++:

- [**L-Sized U-Net++ algorithm**: ](https://github.com/sudomane/unetpp) Designed and implemented an algorithm capable of creating an efficient $L$-sized U-Net++ network. The implementation is based on the paper by *Z. Zhou et al*. ([*Link to the paper*](https://arxiv.org/pdf/1807.10165.pdf)). The creation of this algorithm was a necessity, as it was vital to our success for the MICCAI BraTS challenge. We selected the U-Net++ model for its power and precision. The network was written in Python, using Numpy, Tensorflow and Keras.

Here is a representation of a U-Net++ network of size $L=4$.

### Mandelbrot:

- **Parallelized Mandelbrot algorithm**: Implemented and parallelized the Mandelbrot algorithm. The parallelization was carried out in CUDA using C++, with a clean and efficient usage of the GPU. Colors were applied using the escape time algorithm, by applying a certain color depending on the "depth" of the pixel. To apply the colors, it was necessary to first compute the image's cumulative histogram. Using a parallelized mapping pattern, we could therefore massively apply a map operation on each pixel of the image, associatin the correct color to the corresponding pixel.

Using low-level GPU optimizations, such as shared memory to minimize memory access latency, and properly distributing the workload among the threads, it took approximately 3m42s to generate this image of dimension 19200 x 10800 with 1000000 iterations, thus providing the image with a great amount of detail. You can see for yourself by right clicking on this image, and clicking "*Open Image in New Tab*"!

---

# Miscellaneous

### Chess engine:

- [**Chess**: ](https://gitlab.com/42hd/chess) Chess engine and PGN parser written in C++.

### Web server:

- [**Spider web server**: ](https://gitlab.com/42hd/sws) Webserver written in C++.

### Rubik's cube engine:

- [**Librubik**: ](https://gitlab.com/spurdo/librubik) Rubik's cube engine written in C++.

### Data science project:

- [**Delta project**: ](https://github.com/sudomane/delta/tree/main/pbmc_accidents_routiers)Classification of vehicular accidents in France, written in Python using: Pandas, Plotly and Dash.

### Bash clone:

- **42sh**: POSIX compliant Bash clone written in C.

### Raytracer:

- [**RayTracer**:](https://gitlab.com/spurdo/raytracing-philippe.bouchet) Added multithreading, reflections, and Anti Alisasing to an existing raytracer written in C.

### Find clone:

- [**Myfind**: ](https://gitlab.com/spurdo/myfind)Reimplementation of Bash's "find" command written in C.

### Make clone:

- [**Minimake**: ](https://gitlab.com/spurdo/minimake)Reimplementation of Bash's "make" command written in C.

***Note: EPITA has not allowed the publication of some projects to a public repository. (MICCAI BraTS, Mandelbrot and 42sh)***