# Best Practices for Data Ingestion

Many of the enterprise's mission-critical engines, including business intelligence, predictive analytics, data science, and machine learning, are powered by data. Like any fuel, data must be plentiful, accessible, and clean in order to be completely useful.

The [stages](https://www.ibm.com/cloud/learn/elt) of extract (taking the data from its current place), transform (cleaning and normalizing the data), and load are typically included in the data ingestion process, which prepares data for analysis (placing the data in a database where it can be analyzed). Extract and load are normally simple for businesses, but transform is often a challenge. As a result, if no data has been ingested for processing, an analytical engine may lie idle.

## What is Data Ingestion?

Data ingestion is described as the procedure of gathering data from several sources and sending it to a destination where it may be deposited and examined. The destination can generally be a database, data warehouse, document storage system, or data mart. However, there are a variety of sources available, including spreadsheets, web data extractions (also known as web scrapings), internal programmes, and SaaS data.

[Source](https://www.striim.com/blog/what-is-data-ingestion-and-why-this-technology-matters/)

Business data is frequently stored in several sources and formats. For instance, Salesforce.com keeps sales data whereas [relational DBMSs](https://www.geeksforgeeks.org/relational-model-in-dbms/) store product information. Since this data comes from many sources, it needs to be cleaned and transformed into a format that can be easily examined for decision-making using a simple data intake tool. If this is not done, you'll be stuck with pieces that can't be stitched together.

## Best Pracitces for Data Ingestion

Here are some recommendations for data ingesting best practices to take into account:

### Plan Ahead

Data ingestion's dirty little secret is that the gathering and cleaning stages of the [data lifecycle management](https://lakefs.io/what-is-data-lifecycle-management/) process allegedly consumes 60 to 80 percent of the time allotted for every analytics project. It is our assumption that data scientists spend the majority of their time running algorithms, analyzing the outcomes, and then improving their algorithms for the upcoming run. This is no doubt the exciting part of the job, but data scientists actually spend most of their time attempting to organize the data so they can start their analytical work. This aspect of the work expands as big data's size continues to increase.

Many businesses start [data analytics](https://www.aristotle.com/blog/2022/04/data-analytics-best-practices/) initiatives without realizing this, and when the data intake process takes longer than expected, they are shocked or unhappy. While the data ingestion attempt fails, other teams have created analytical engines that rely on the existence of clean imported data and are left waiting impatiently.

There isn't a magic solution that will make these problems go away. Prepare for them by anticipating them. For instance, you can allocate extra time for data intake, put more staff on it, hire outside help, or postpone starting to create the analytical engine until the project's data ingestion portion is well under way.

### Automate the Process

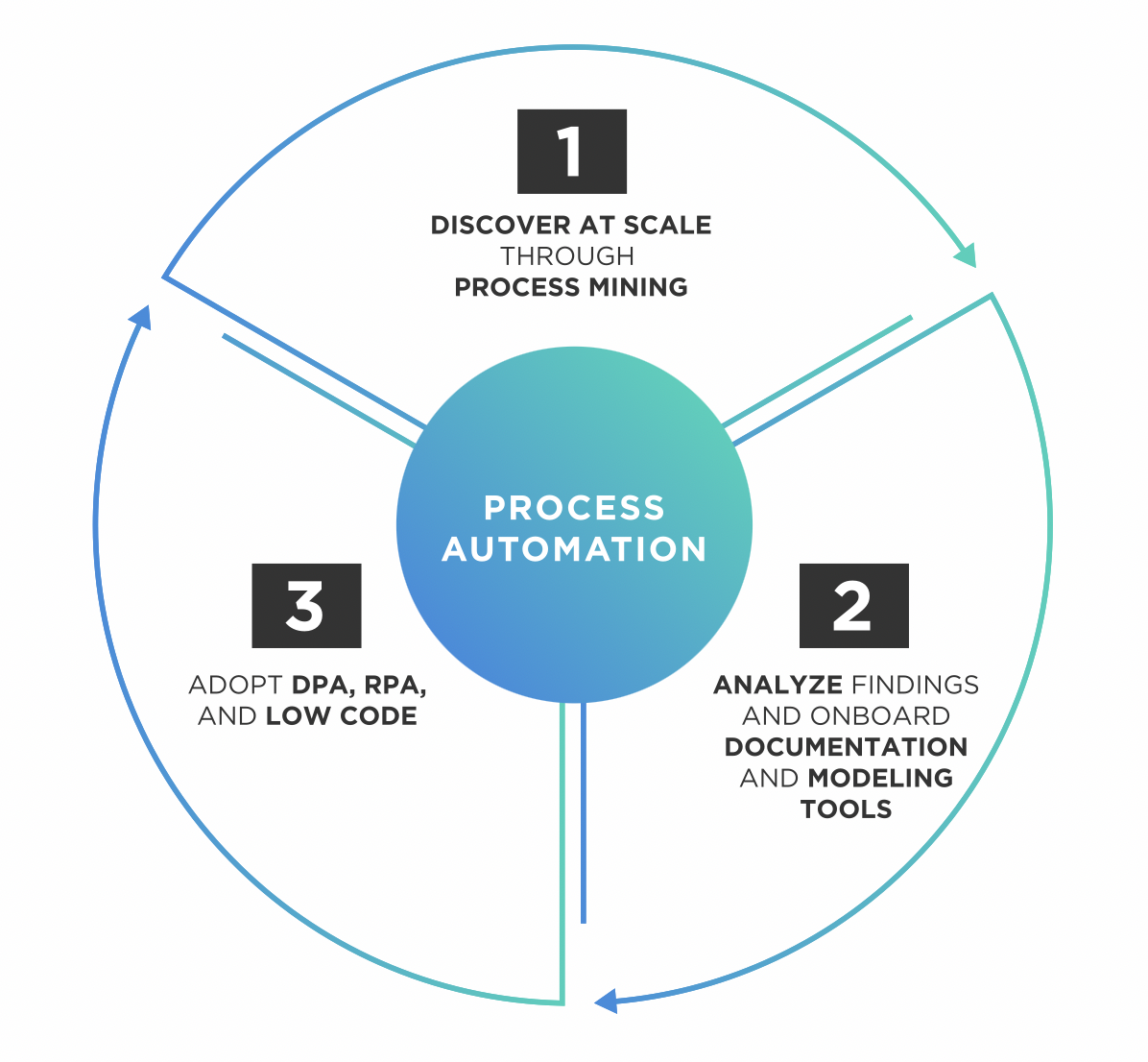

[Source](https://www.tibco.com/reference-center/what-is-process-automation)

Data ingestion could be done manually in the good old days, when data was tiny and only existed in a few dozen tables at most. A programmer was assigned to each local data source to determine how it should be translated into the global schema after a human developed a global schema. In their preferred scripting languages, individual programmers created mapping and cleaning procedures, then executed them as necessary.

The amount and diversity of data available now makes manual curation impossible. Wherever feasible, you must create technologies that automate the ingestion process. For instance, a user should specify a table's metadata, such as its schema or rules concerning the minimum and maximum acceptable values, in a definition language rather than by hand.

By itself, this kind of automation can lighten the load of data input. But because of the enormous number of tables involved, it frequently does not remove the ingestion bottleneck. It is preferable to fill up thousands of spreadsheets rather than thousands of ingestion scripts when thousands of tables need to be ingested. It still isn't a scalable or doable task, though.

### Artificial Intelligence

In order to automatically infer information about data being ingested and essentially reduce the need for manual work, a range of technologies have been created that use machine learning and statistical algorithms. The following are a few processes that can be automated:

- From the local tables mapped to it, infer the global schema.

- Determine which global table a local table should be swallowed into.

- Find other words for data normalization. For instance, the words "inches" and the abbreviations "in." and "in," as well as a straight double-quotation mark (") are all interchangeable.

- Using fuzzy matching, find duplicate records. The same individual goes by the names "Moshin Krat" and "M. Krat," for instance.

These systems rely on people to supply training data and to handle ambiguous situations when the algorithm cannot.

## Summary

By this point, you should be aware of what data intake is and how to use it effectively. Data intake solutions can also aid in enhancing corporate intelligence and business decision-making. It makes it simpler to combine data from many sources and gives you the flexibility to work with different data types and formats.

Additionally, a successful data input procedure may extract useful insights from data in a simple and orderly manner. Automation, self-service data intake, and problem-prediction techniques may improve your process and make it more frictionless, quick, dynamic, and error-free.