# Graph Neural Networks and Maximum Independent Sets

written by [@marc_lelarge](https://twitter.com/marc_lelarge)

Some thoughts about [Cracking nuts with a sledgehammer: when modern graph neural networks do worse than classical greedy algorithms](https://arxiv.org/abs/2206.13211) by Maria Chiara Angelini, Federico Ricci-Tersenghi

## Summary of the paper

Focusing on the problem of [Maximum Independent Sets](https://en.wikipedia.org/wiki/Independent_set_%28graph_theory%29) (MIS) on $d$-regular random graphs, this paper shows that a very simple greedy algorithm performs much better than GNN studied in Ref. [4] [Combinatorial Optimization with Physics-Inspired Graph Neural Networks](https://arxiv.org/abs/2107.01188) by Martin J. A. Schuetz, J. Kyle Brubaker, Helmut G. Katzgraber

The authors conclude ($n$ is the number of vertices in the graph):

> "Obviously, a good optimization algorithm should run in a time polynomial (better if linear) in $n$, and the quality of solutions found should be better than simple existing algorithms and should not degrade increasing $n$. In our opinion, at present, optimizers based on neural networks (like the one presented in Ref. [4]) do not satisfy the above requirements and are not able to compete with simple standard algorithms to solve hard optimization problems. We believe it is of primary importance to bring forward the research in this direction to understand whether neural networks can become competitive in this fundamental task or whether there is any deeper reason for their failure."

It turns out there are known reasons explaining the failure of GNN for MIS.

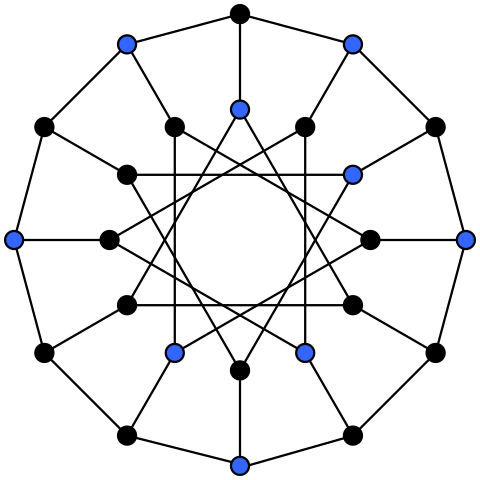

## Message passing GNN and regular graphs

Ref. [4] uses a graph convolutional network (GCN) belonging to the class of Message passing GNN (MGNN). It is well-known that MGNN cannot distinguish any two $d$-regular graphs (results for [equivariant](https://dataflowr.github.io/website/modules/graph3/) GNNs can be found in [Expressive Power of Invariant and Equivariant Graph Neural Networks](https://arxiv.org/abs/2006.15646) Prop. 3). Hence any MGNN will output the same answer for the 2D grid and any other 4-regular graph. This is bad: clearly on large 2D grids, you can build independent sets with density $0.5$ but the best rigorous upper-bound for the density of MIS in large $4$-regular random graphs is $0.41120<0.5$ (see Table 1 in [Replica bounds by combinatorial interpolation for diluted spin systems](https://arxiv.org/abs/1708.02457)). As a direct consequence, **any equivariant MGNN will be bad for the MIS problem on the grid**!

This limitation of equivariant GNNs is only valid if no feature is given in addition with the graph $G$ but Ref. [4] takes care to add random features on the nodes (see p. 5):

>"Because the nodes do not carry any inherent features, in our setup the node embeddings $h^0_v$ are initialized randomly."

Each node has a random embedding as input, like an identifier and hence, the MGNN will be more powerful as it can use this information to distinguish neighbors... But even in this case, **it is not clear if a MGNN can emulate the simple degree-based algorithm (DGA)?**

## Message passing GNN as a local algorithm

In the theoretical computer science / math literature, there is a notion of local algorithm. Here is the definition taken from [Local algorithms for independent sets are half-optimal](https://arxiv.org/abs/1402.0485):

> "The input to the algorithm is a graph $G$. The algorithm decorates $G$ by putting i.i.d. labels on the vertices. The output is $(f (i(v));v∈G)$ where $f$ depends on the isomorphism class $i(v)$ of the labelled, rooted $r$-neighbourhood of $v$ for some fixed $r$. The process $(f (i(v));v∈G)$ generated by the local algorithm will be called a factor of i.i.d."

There is a clear analogy with MGNN: the i.i.d. labels correspond to the initial node embedding and the mapping $f$ is the learned mapping of the MGNN where $r$ is the number of layers. There is a small difference as typically, the output of a MGNN is a real number associated to each node and this output needs to be transformed into a MIS.

For large $d$, the density of the largest independent sets in random $d$-regular graphs is of order $2log(d)/d$ while local algorithms have only produced independent sets with density of order $log(d)/d$. The main result of [Local algorithms for independent sets are half-optimal](https://arxiv.org/abs/1402.0485) is:

> "we prove that for any $ε>0$, local algorithms cannot find independent sets in random $d$-regular graphs of density larger than $(1+ε)(log(d))/d$ if $d$ is sufficiently large. In practice, iterative search algorithms that use local moves at each step fail to find independent sets with density exceeding the critical threshold of $log(d)/d$ in random $d$-regular graphs."

This result does not contradict what we saw above as it is only valid for large values of $d$. Indeed for $d=4$, we already saw that the best rigorous upper-bound for the density of MIS is $0.41120$ and a local algorithm devised in [Local algorithms, regular graphs of large girth, and random regular graphs](https://arxiv.org/abs/1308.0266) is able to find MIS with density $0.39213$ (see Table 1 in [Replica bounds by combinatorial interpolation for diluted spin systems](https://arxiv.org/abs/1708.02457) for other values of $d$) so we see that the approximation ration is larger than $0.39213/0.41120 = 0.953...$ which is much larger than $1/2$. Looking at results in [Monte Carlo algorithms are very effective in finding the largest independent set in sparse random graphs](https://arxiv.org/abs/1904.02231) suggests that even for larger values of $d$, the performance of simple algorithms (like DGA) is still much higher than $1/2$: for $d=20$, the approximation ratio is $0.77...$ and for $d=100$ it is $0.66...$ In this paper, Maria Chiara Angelini, Federico Ricci-Tersenghi give Monte-Carlo based algorithms with even better performances.

## Some final remarks

MIS on $d$-regular random graphs is a nice benchmark because there are already a lot of results in the literature (in math, computer science or physics). It also means that any new algorithm will have fierce competitors in place. MGNN being a special case of local algorithm, it is still surprising that their performances are much lower than simple DGA as shown by Maria Chiara Angelini and Federico Ricci-Tersenghi. Understanding if this gap can be filled is certainly an interesting question.

Another possible interesting question: there are non-local GNN like FGNN which are known to be more expressive (see [Expressive Power of Invariant and Equivariant Graph Neural Networks](https://arxiv.org/abs/2006.15646)). It would be interesting to see their performances but these architectures cannot scale to large graphs as they do not take advantage of the sparsity of the graphs.

A last point: the discussion above concentrates on the MIS on regular random graphs when the number of vertices tend to infinity. This setting is natural: in theoretical computer science, running time of algorithms is expressed as a function of $n$ for large $n$ and in statistical physics, large random regular graphs are seen as a kind of mean-field limit (see [Bethe lattice](https://en.wikipedia.org/wiki/Bethe_lattice)). As said above, it is not clear that GNN will improve SOTA in such a setting but this is definitely not the end the story! There might be practical settings, where solving the MIS is interesting on graphs very different from random regular graphs, graphs of possible medium sizes with recurring patterns, with noise (like missing edges)... and in such a setting GNN could have a better chance to shine. [If You're in a Dogfight, Become a Cat!](http://cup.columbia.edu/book/if-youre-in-a-dogfight-become-a-cat/9780231174824)

###### tags: `public` `reading` `GNN` `MIS`