# [Linux Kernel Copy On Write - Process](https://hackmd.io/@linD026/Linux-kernel-COW-content)

contributed by < [`linD026`](https://github.com/linD026) >

###### tags: `Linux kernel COW` , `linux2021`

> <font size = 2>All source code quoted in this article is from Linux Kernel 5.10, and the architecture-specific code is mainly for arm.

> [Previous article: Linux Kernel Copy On Write - Memory Region](https://hackmd.io/@linD026/Linux-kernel-COW-memory-region)</font>

---

## Page Fault

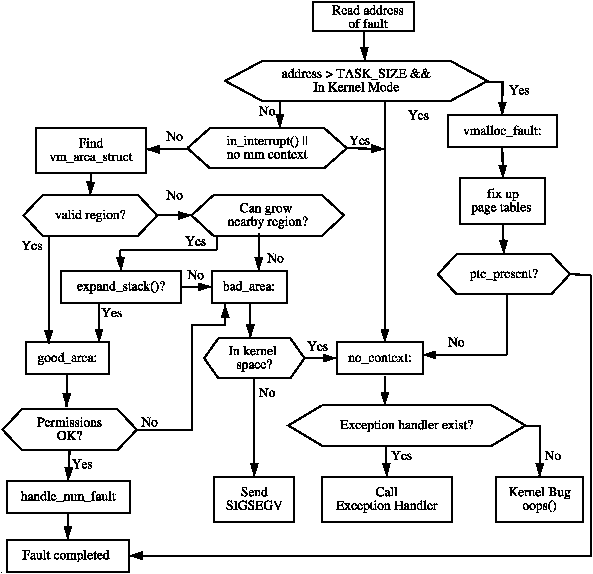

There are two situations where a bad reference may occur.

The first is where a process passes an invalid pointer to the kernel through a system call. The kernel must be able to trap this safely, because the only check made initially is that the address is below PAGE_OFFSET.

The second is where the kernel uses copy_from_user() or copy_to_user() to read or write data from userspace.

> The assembler function startup_32() is responsible for enabling the paging unit in arch/i386/kernel/head.S. While all normal kernel code in vmlinuz is compiled with the base address at PAGE_OFFSET + 1MiB, the kernel is actually loaded beginning at the first megabyte (0x00100000) of memory. The first megabyte is used by some devices for communication with the BIOS and is skipped. The bootstrap code in this file treats 1MiB as its base address by subtracting __PAGE_OFFSET from any address until the paging unit is enabled so before the paging unit is enabled, a page table mapping has to be established which translates the 8MiB of physical memory to the virtual address PAGE_OFFSET.

At compile time, the linker creates an exception table in the `__ex_table` section of the kernel code segment, which starts at `__start___ex_table` and ends at `__stop___ex_table`. Each entry is of type `exception_table_entry`, a pair consisting of an execution point and a fixup routine. When an exception occurs that the page fault handler cannot manage, it calls `search_exception_table()` to see whether a fixup routine has been provided for an error at the faulting instruction. If module support is compiled in, each module's exception table is also searched.
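The lookup described above can be sketched in user space. This is a simplified model, not the kernel's code: `struct ex_entry` and `find_fixup()` are hypothetical names, and the entries hold absolute addresses, whereas a real kernel may store relative offsets. It assumes the table is sorted by faulting-instruction address so that a binary search applies.

```c
#include <stddef.h>

/* Hypothetical stand-in for exception_table_entry: a pair of
 * (address of an instruction that may fault, address of its fixup). */
struct ex_entry {
    unsigned long insn;
    unsigned long fixup;
};

/* Binary search over a table sorted by insn.
 * Returns the fixup address, or 0 when no entry matches addr. */
unsigned long find_fixup(const struct ex_entry *table, size_t n,
                         unsigned long addr)
{
    size_t lo = 0, hi = n;

    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;

        if (table[mid].insn == addr)
            return table[mid].fixup;
        if (table[mid].insn < addr)
            lo = mid + 1;
        else
            hi = mid;
    }
    return 0;
}
```

A return value of 0 corresponds to the case where no fixup exists for the faulting instruction, so the fault cannot be recovered by this path.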

> [/include/linux/mm_types.h](https://elixir.bootlin.com/linux/v5.10.35/source/include/linux/mm_types.h#L686)

```cpp
/**
 * typedef vm_fault_t - Return type for page fault handlers.
 *
 * Page fault handlers return a bitmask of %VM_FAULT values.
 */
typedef __bitwise unsigned int vm_fault_t;

/**
 * enum vm_fault_reason - Page fault handlers return a bitmask of
 * these values to tell the core VM what happened when handling the
 * fault. Used to decide whether a process gets delivered SIGBUS or
 * just gets major/minor fault counters bumped up.
 *
 * @VM_FAULT_OOM:            Out Of Memory
 * @VM_FAULT_SIGBUS:         Bad access
 * @VM_FAULT_MAJOR:          Page read from storage
 * @VM_FAULT_WRITE:          Special case for get_user_pages
 * @VM_FAULT_HWPOISON:       Hit poisoned small page
 * @VM_FAULT_HWPOISON_LARGE: Hit poisoned large page. Index encoded
 *                           in upper bits
 * @VM_FAULT_SIGSEGV:        segmentation fault
 * @VM_FAULT_NOPAGE:         ->fault installed the pte, not return page
 * @VM_FAULT_LOCKED:         ->fault locked the returned page
 * @VM_FAULT_RETRY:          ->fault blocked, must retry
 * @VM_FAULT_FALLBACK:       huge page fault failed, fall back to small
 * @VM_FAULT_DONE_COW:       ->fault has fully handled COW
 * @VM_FAULT_NEEDDSYNC:      ->fault did not modify page tables and needs
 *                           fsync() to complete (for synchronous page faults
 *                           in DAX)
 * @VM_FAULT_HINDEX_MASK:    mask HINDEX value
 *
 */
enum vm_fault_reason {
    VM_FAULT_OOM            = (__force vm_fault_t)0x000001,
    VM_FAULT_SIGBUS         = (__force vm_fault_t)0x000002,
    VM_FAULT_MAJOR          = (__force vm_fault_t)0x000004,
    VM_FAULT_WRITE          = (__force vm_fault_t)0x000008,
    VM_FAULT_HWPOISON       = (__force vm_fault_t)0x000010,
    VM_FAULT_HWPOISON_LARGE = (__force vm_fault_t)0x000020,
    VM_FAULT_SIGSEGV        = (__force vm_fault_t)0x000040,
    VM_FAULT_NOPAGE         = (__force vm_fault_t)0x000100,
    VM_FAULT_LOCKED         = (__force vm_fault_t)0x000200,
    VM_FAULT_RETRY          = (__force vm_fault_t)0x000400,
    VM_FAULT_FALLBACK       = (__force vm_fault_t)0x000800,
    VM_FAULT_DONE_COW       = (__force vm_fault_t)0x001000,
    VM_FAULT_NEEDDSYNC      = (__force vm_fault_t)0x002000,
    VM_FAULT_HINDEX_MASK    = (__force vm_fault_t)0x0f0000,
};
```
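Because the return value is a bitmask, a caller tests individual bits rather than comparing for equality. The following is a minimal user-space sketch of that idea. The constants are copied from the enum above as plain unsigned integers, while `enum fault_outcome`, `classify_fault()` and the order of the checks are assumptions made for illustration and are not taken from any architecture's fault handler.

```c
/* Values copied from enum vm_fault_reason; the __bitwise/__force
 * annotations are dropped in this user-space model. */
#define VM_FAULT_OOM     0x000001u
#define VM_FAULT_SIGBUS  0x000002u
#define VM_FAULT_MAJOR   0x000004u
#define VM_FAULT_SIGSEGV 0x000040u

/* Hypothetical summary of what the caller would do next. */
enum fault_outcome {
    OUTCOME_OOM,      /* out-of-memory handling */
    OUTCOME_SIGBUS,   /* deliver SIGBUS */
    OUTCOME_SIGSEGV,  /* deliver SIGSEGV */
    OUTCOME_MAJOR,    /* success, bump the major fault counter */
    OUTCOME_MINOR     /* success, bump the minor fault counter */
};

/* Error bits are checked first; only then is the fault counted. */
enum fault_outcome classify_fault(unsigned int ret)
{
    if (ret & VM_FAULT_OOM)
        return OUTCOME_OOM;
    if (ret & VM_FAULT_SIGBUS)
        return OUTCOME_SIGBUS;
    if (ret & VM_FAULT_SIGSEGV)
        return OUTCOME_SIGSEGV;
    if (ret & VM_FAULT_MAJOR)
        return OUTCOME_MAJOR;
    return OUTCOME_MINOR;
}
```

Several bits can be set at once, which is why the checks use `&` and why the error bits take priority in this sketch.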

* [/mm/memory.c](https://elixir.bootlin.com/linux/v5.10.39/source/mm/memory.c#L4109)

```cpp
/*
 * We enter with non-exclusive mmap_lock (to exclude vma changes,
 * but allow concurrent faults).
 * The mmap_lock may have been released depending on flags and our
 * return value.  See filemap_fault() and __lock_page_or_retry().
 * If mmap_lock is released, vma may become invalid (for example
 * by other thread calling munmap()).
 */
static vm_fault_t do_fault(struct vm_fault *vmf)
{
    struct vm_area_struct *vma = vmf->vma;
    struct mm_struct *vm_mm = vma->vm_mm;
    vm_fault_t ret;

    /*
     * The VMA was not fully populated on mmap() or missing VM_DONTEXPAND
     */
    if (!vma->vm_ops->fault) {
        /*
         * If we find a migration pmd entry or a none pmd entry, which
         * should never happen, return SIGBUS
         */
        if (unlikely(!pmd_present(*vmf->pmd)))
            ret = VM_FAULT_SIGBUS;
        else {
            vmf->pte = pte_offset_map_lock(vmf->vma->vm_mm,
                                           vmf->pmd,
                                           vmf->address,
                                           &vmf->ptl);
            /*
             * Make sure this is not a temporary clearing of pte
             * by holding ptl and checking again. A R/M/W update
             * of pte involves: take ptl, clearing the pte so that
             * we don't have concurrent modification by hardware
             * followed by an update.
             */
            if (unlikely(pte_none(*vmf->pte)))
                ret = VM_FAULT_SIGBUS;
            else
                ret = VM_FAULT_NOPAGE;

            pte_unmap_unlock(vmf->pte, vmf->ptl);
        }
    } else if (!(vmf->flags & FAULT_FLAG_WRITE))
        ret = do_read_fault(vmf);
    else if (!(vma->vm_flags & VM_SHARED))
        ret = do_cow_fault(vmf);
    else
        ret = do_shared_fault(vmf);

    /* preallocated pagetable is unused: free it */
    if (vmf->prealloc_pte) {
        pte_free(vm_mm, vmf->prealloc_pte);
        vmf->prealloc_pte = NULL;
    }
    return ret;
}
```
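Stripped of locking and page-table details, `do_fault()` is a three-way dispatch on whether the fault is a write and whether the mapping is shared. The sketch below models only that decision in user space; `enum fault_path` and `pick_fault_path()` are hypothetical names, and the three booleans stand in for `vma->vm_ops->fault`, `FAULT_FLAG_WRITE` and `VM_SHARED`.

```c
#include <stdbool.h>

/* Which branch of do_fault() would be taken (illustrative only). */
enum fault_path {
    PATH_NO_FAULT_OP,  /* vma->vm_ops->fault is NULL */
    PATH_READ,         /* do_read_fault() */
    PATH_COW,          /* do_cow_fault(): write to a private mapping */
    PATH_SHARED        /* do_shared_fault(): write to a shared mapping */
};

enum fault_path pick_fault_path(bool has_fault_op, bool is_write,
                                bool vm_shared)
{
    if (!has_fault_op)
        return PATH_NO_FAULT_OP;
    if (!is_write)
        return PATH_READ;
    if (!vm_shared)
        return PATH_COW;
    return PATH_SHARED;
}
```

The COW path is therefore reached only for a write fault on a private (non-`VM_SHARED`) mapping that has a `fault` operation.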

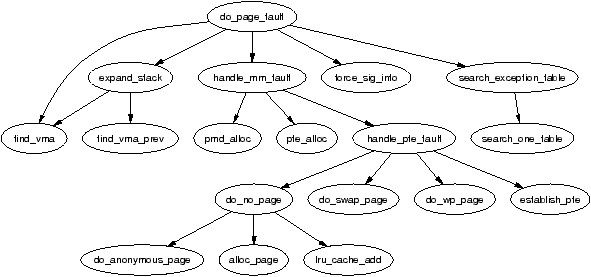

[arch/arm/mm/fault.c](https://elixir.bootlin.com/linux/v5.10.39/source/arch/arm/mm/fault.c#L203)

> Linux kernel 2.6 - x86


---

## fork

[man page fork](https://man7.org/linux/man-pages/man2/fork.2.html)

[copy_nonpresent_pte - copy one vm_area from one task to the other.](https://elixir.bootlin.com/linux/v5.10.46/source/mm/memory.c#L698)

```cpp
/*
 * This routine handles present pages, when users try to write
 * to a shared page. It is done by copying the page to a new address
 * and decrementing the shared-page counter for the old page.
 *
 * Note that this routine assumes that the protection checks have been
 * done by the caller (the low-level page fault routine in most cases).
 * Thus we can safely just mark it writable once we've done any necessary
 * COW.
 *
 * We also mark the page dirty at this point even though the page will
 * change only once the write actually happens. This avoids a few races,
 * and potentially makes it more efficient.
 *
 * We enter with non-exclusive mmap_lock (to exclude vma changes,
 * but allow concurrent faults), with pte both mapped and locked.
 * We return with mmap_lock still held, but pte unmapped and unlocked.
 */
```

[`do_wp_page` ( break COW )](https://elixir.bootlin.com/linux/v5.10.46/source/mm/memory.c#L3083)

In Gorman's book, the call graph for this function is shown in Figure 4.17. The function handles the case where a user tries to write to a private page shared among processes, as happens after fork(). In short, a new page is allocated, the contents of the old page are copied into it, and the shared count of the old page is decremented.

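```cpp
#include <stdio.h>
#include <sys/types.h>
#include <unistd.h>

int main(void) {
    /* Print the PID of the parent process. */
    printf("%d\n", (int)getpid());
    /* Flush before fork() so the child does not inherit buffered output. */
    fflush(stdout);
    fork();
    return 0;
}
```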

```cpp
...
8) | handle_mm_fault() {
8) 0.147 us | mem_cgroup_from_task();
8) 0.193 us | __count_memcg_events();
8) | __handle_mm_fault() {
8) 0.152 us | pmd_devmap_trans_unstable();
8) 0.205 us | _raw_spin_lock();
8) | do_wp_page() {
8) 0.152 us | vm_normal_page();
8) | reuse_swap_page() {
8) 0.151 us | page_trans_huge_mapcount();
8) 0.483 us | }
8) 0.147 us | unlock_page();
8) | wp_page_copy() {
8) | alloc_pages_vma() {
8) 0.147 us | __get_vma_policy();
8) | get_vma_policy.part.0() {
8) 0.148 us | get_task_policy.part.0();
8) 0.456 us | }
8) 0.152 us | policy_nodemask();
8) 0.146 us | policy_node();
8) | __alloc_pages_nodemask() {
8) | _cond_resched() {
8) 0.148 us | rcu_all_qs();
8) 0.463 us | }
8) 0.149 us | should_fail_alloc_page();
8) | get_page_from_freelist() {
8) 0.202 us | __inc_numa_state();
8) 0.146 us | __inc_numa_state();
8) | prep_new_page() {
8) 0.152 us | kernel_poison_pages();
8) 0.171 us | page_poisoning_enabled();
8) 0.149 us | page_poisoning_enabled();
8) 1.299 us | }
8) 2.307 us | }
8) 3.526 us | }
8) 5.322 us | }
8) | mem_cgroup_charge() {
8) 0.168 us | get_mem_cgroup_from_mm();
8) 0.179 us | try_charge();
8) | mem_cgroup_charge_statistics.isra.0() {
8) 0.157 us | __count_memcg_events();
8) 0.450 us | }
8) 0.149 us | memcg_check_events();
8) 1.687 us | }
8) | cgroup_throttle_swaprate() {
8) 0.147 us | kthread_blkcg();
8) 0.522 us | }
8) | _cond_resched() {
8) 0.142 us | rcu_all_qs();
8) 0.430 us | }
8) 0.155 us | _raw_spin_lock();
8) | ptep_clear_flush() {
8) | flush_tlb_mm_range() {
8) | flush_tlb_func_common.constprop.0() {
8) 0.374 us | native_flush_tlb_one_user();
8) 0.673 us | }
8) 1.129 us | }
8) 1.478 us | }
8) | page_add_new_anon_rmap() {
8) | __mod_lruvec_state() {
8) 0.156 us | __mod_node_page_state();
8) 0.156 us | __mod_memcg_state();
8) 0.804 us | }
8) 0.145 us | __page_set_anon_rmap();
8) 1.407 us | }
8) | lru_cache_add_active_or_unevictable() {
8) 0.160 us | lru_cache_add();
8) 0.444 us | }
8) | page_remove_rmap() {
9) # 1431.344 us | }
8) 0.147 us | lock_page_memcg();
8) | unlock_page_memcg() {
8) 0.146 us | __unlock_page_memcg();
8) 0.434 us | }
9) 0.369 us | sched_idle_set_state();
8) 1.047 us | }
8) + 14.156 us | }
9) # 1433.709 us | }
8) + 15.735 us | }
9) # 1434.562 us | }
8) + 16.787 us | }
9) # 1434.981 us | }
8) + 17.746 us | }
...
```
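The visible effect of the `do_wp_page()` path can be checked from user space: after `fork()`, a write in the child must not change what the parent sees. The sketch below assumes a POSIX system. `cow_isolation_demo()` is a hypothetical helper, and the 4096-byte buffer size is an arbitrary choice rather than a queried page size.

```c
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns 1 if the child's write left the parent's data untouched,
 * 0 if the parent observed the child's write, -1 on error. */
int cow_isolation_demo(void)
{
    char *buf = malloc(4096);
    int status, isolated;
    pid_t pid;

    if (!buf)
        return -1;
    memset(buf, 'P', 4096);      /* parent populates the memory */

    pid = fork();
    if (pid < 0) {
        free(buf);
        return -1;
    }
    if (pid == 0) {
        /* Write to memory inherited from the parent; the kernel is
         * expected to give the child a private copy of the page. */
        buf[0] = 'C';
        _exit(buf[0] == 'C' ? 0 : 1);
    }

    if (waitpid(pid, &status, 0) != pid ||
        !WIFEXITED(status) || WEXITSTATUS(status) != 0) {
        free(buf);
        return -1;
    }
    isolated = (buf[0] == 'P');  /* parent still sees its own data */
    free(buf);
    return isolated;
}
```

Calling `cow_isolation_demo()` from a small `main()` and tracing the child with the function graph tracer should show a write-protect fault similar to the one above, although the exact call chain depends on the kernel version and configuration.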

---

## Function Trace

### events

```cpp
// cost
tlb:tlb_flush

// read-only
exceptions:page_fault_kernel
exceptions:page_fault_user
```

### function

```cpp
/* VA page table */
// insert_pages
vm_insert_pages
// free page
free_pages

////////////////////////////////////

/* COW */
// -> handle_pte_fault -> do_wp_page
(_)handle_mm_fault
// break cow
do_wp_page
// do_fault -> do_cow_fault
do_fault

anon_vma_clone
anon_vma_fork
```

---

## Copy On Write

The PTE is marked read-only, but the VMA is marked writable. The page fault handler notices the difference:

- Must mean COW

- Make a duplicate of physical page

- Update PTEs, flush TLB entry

- do_wp_page

* 4.6.1 Handling a Page Fault

The second option is if the page is being written to. If the PTE is write protected, then do_wp_page() is called as the page is a Copy-On-Write (COW) page. A COW page is one which is shared between multiple processes (usually a parent and child) until a write occurs, after which a private copy is made for the writing process. A COW page is recognised because the VMA for the region is marked writable even though the individual PTE is not. If it is not a COW page, the page is simply marked dirty as it has been written to.

[4.6.4 Copy On Write (COW) Pages](https://www.kernel.org/doc/gorman/html/understand/understand007.html)

During fork, the PTEs of the two processes are made read-only so that when a write occurs there will be a page fault. Linux recognises a COW page because even though the PTE is write protected, the controlling VMA shows the region is writable. It uses the function do_wp_page() to handle it by making a copy of the page and assigning it to the writing process. If necessary, a new swap slot will be reserved for the page. With this method, only the page table entries have to be copied during a fork.

[The /proc Filesystem](https://www.kernel.org/doc/html/latest/filesystems/proc.html)

* [`handle_pte_fault`](https://elixir.bootlin.com/linux/v5.10.56/source/mm/memory.c#L4391): the PTE is not writable and the fault carries FAULT_FLAG_WRITE

```cpp
if (vmf->flags & FAULT_FLAG_WRITE) {
    if (!pte_write(entry))
        return do_wp_page(vmf);
    entry = pte_mkdirty(entry);
}
```
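The excerpt above reduces to a small decision on two inputs. The following user-space model mirrors it; `enum pte_action` and `write_fault_action()` are hypothetical names, and the two booleans stand in for `vmf->flags & FAULT_FLAG_WRITE` and `pte_write(entry)`.

```c
#include <stdbool.h>

/* What the excerpt does for a present PTE (illustrative only). */
enum pte_action {
    ACT_NOTHING,   /* not a write fault: this branch is skipped */
    ACT_WP_FAULT,  /* write to a write-protected PTE: do_wp_page() */
    ACT_MKDIRTY    /* write to a writable PTE: pte_mkdirty() */
};

enum pte_action write_fault_action(bool fault_flag_write, bool pte_writable)
{
    if (fault_flag_write) {
        if (!pte_writable)
            return ACT_WP_FAULT;
        return ACT_MKDIRTY;
    }
    return ACT_NOTHING;
}
```

Note that this model covers only the PTE check shown in the excerpt. Whether the VMA permits the write is decided elsewhere and is not represented here.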

#### TODO: find where the VMA is checked as writable (trace a write)

#### Guess: VMA writable => get the PTE => write through the PTE => fault (PTE not writable)

* [bootlin.com - /mm/memory.c - do_wp_page](https://elixir.bootlin.com/linux/v4.6/source/mm/memory.c#L2345)

---

https://elixir.bootlin.com/linux/v4.6/source/include/linux/mm.h#L728

https://elixir.bootlin.com/linux/latest/source/include/linux/rmap.h#L29

[`do_fault` -> `do_cow_fault`](https://blog.csdn.net/u012489236/article/details/112643986)

[`do_translation_fault`](https://elixir.bootlin.com/linux/v5.10.46/source/arch/arm/mm/fault.c#L391)

[`do_page_fault`](https://elixir.bootlin.com/linux/v5.10.46/source/arch/arm/mm/fault.c#L240)

[`__do_page_fault`](https://elixir.bootlin.com/linux/v5.10.46/source/arch/arm/mm/fault.c#L204)

[`handle_mm_fault`](https://elixir.bootlin.com/linux/v5.10.46/source/mm/memory.c#L4598)

[`__handle_mm_fault`](https://elixir.bootlin.com/linux/v5.10.46/source/mm/memory.c#L4442)

[`handle_pte_fault`](https://elixir.bootlin.com/linux/v5.10.46/source/mm/memory.c#L4349)

[`do_wp_page` ( break COW )](https://elixir.bootlin.com/linux/v5.10.46/source/mm/memory.c#L3083)

---

## COW problem

[lwn.net](https://lwn.net/Articles/849638/)

[commit - 17839856fd588f4ab6b789f482ed3ffd7c403e1f](https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/commit/?id=17839856fd588f4ab6b789f482ed3ffd7c403e1f)

[patch](https://patchwork.kernel.org/project/linux-mm/patch/20200808223802.11451-1-peterx@redhat.com/#23539473)