# Image filter

Contributed by < [Eric Lin](https://github.com/ericlinsechs) >

#### Source code: https://github.com/ericlinsechs/filter

## Aim

* Explain how to implement these image filters in C:
  * Grayscale Conversion
  * Horizontal Reflection
  * Image Blurring
  * Edge Detection

## Background

**Images** are made up of tiny dots called **pixels**, each of which can have a different color. The simplest black-and-white images use 1 bit per pixel, where 0 represents black and 1 represents white. Color images need more bits per pixel: formats such as BMP, JPEG, and PNG that support "24-bit color" use **24 bits per pixel**. In a 24-bit BMP, 8 bits represent the amount of red, 8 bits the amount of green, and 8 bits the amount of blue in a pixel's color. This combination of red, green, and blue is known as **RGB color**.

> For more information, refer to [bitmaps](https://cs50.harvard.edu/x/2023/psets/4/filter/more/#bitmaps).

## Image Filtering

Filtering an image means modifying the pixels of an original image to produce a particular effect in the resulting image.

## Features

The following filters are included in this project:

* Grayscale Conversion

* Horizontal Reflection

* Image Blurring

* Edge Detection

## Code implementation

### Structure

This structure describes a color consisting of relative intensities of red, green, and blue.

```c
// BYTE is an unsigned 8-bit type (in CS50's bmp.h: typedef uint8_t BYTE;)
typedef struct
{
    BYTE rgbtBlue;
    BYTE rgbtGreen;
    BYTE rgbtRed;
} __attribute__((__packed__))
RGBTRIPLE;
```
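As a rough illustration of the layout, the sketch below assumes that `BYTE` is `uint8_t` (as in CS50's `bmp.h`) and that the compiler supports the `__packed__` attribute (GCC or Clang). The helpers `pixel_from_bytes` and `row_bytes` are hypothetical names introduced only for this example; they show that one pixel occupies exactly 3 bytes, stored in blue, green, red order.

```c
#include <stddef.h>
#include <stdint.h>

typedef uint8_t BYTE; /* assumption: same definition as CS50's bmp.h */

typedef struct
{
    BYTE rgbtBlue;
    BYTE rgbtGreen;
    BYTE rgbtRed;
} __attribute__((__packed__))
RGBTRIPLE;

/* Hypothetical helper: read three consecutive bytes (B, G, R) as one pixel */
RGBTRIPLE pixel_from_bytes(const BYTE bytes[3])
{
    RGBTRIPLE p = {
        .rgbtBlue = bytes[0], .rgbtGreen = bytes[1], .rgbtRed = bytes[2]};
    return p;
}

/* Hypothetical helper: bytes used by one row of pixels, ignoring any
 * padding that the BMP file format adds at the end of a row */
size_t row_bytes(int width)
{
    return sizeof(RGBTRIPLE) * (size_t) width;
}
```

With these definitions, `sizeof(RGBTRIPLE)` is 3, so a row of 4 pixels takes 12 bytes of pixel data.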

### Grayscale Conversion

To convert an image to grayscale (what is commonly called "black-and-white"), the red, green, and blue values of each pixel must be made equal, so that every pixel becomes a shade of gray.

To determine the grayscale value for a pixel, we take the average of its red, green, and blue values. This helps maintain the overall brightness or darkness of the image.

```c
#include <math.h> // round

// Convert image to grayscale
void grayscale(int height, int width, RGBTRIPLE image[height][width])
{
    // Replace every pixel with a gray pixel of the same average intensity
    for (int i = 0; i < height; i++) {
        for (int j = 0; j < width; j++) {
            // Calculate the average intensity value for the pixel
            int sum = image[i][j].rgbtRed + image[i][j].rgbtGreen +
                      image[i][j].rgbtBlue;
            int ave = round((float) sum / 3);
            RGBTRIPLE gray_scale = {
                .rgbtRed = ave, .rgbtGreen = ave, .rgbtBlue = ave};
            image[i][j] = gray_scale;
        }
    }
    return;
}
```
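The rounding step matters because plain integer division truncates. The sketch below is only an illustration: `gray_level` and `gray_level_truncated` are hypothetical helpers, `BYTE` is assumed to be `uint8_t`, and the rounded version uses an integer shortcut (adding 1 before dividing by 3) in place of `round`, which gives the same result for non-negative sums.

```c
#include <stdint.h>

typedef uint8_t BYTE; /* assumption: same 8-bit type the listing above uses */

/* Hypothetical helper: average of three channels, rounded to nearest.
 * (sum + 1) / 3 equals round(sum / 3.0) for any non-negative sum. */
BYTE gray_level(BYTE red, BYTE green, BYTE blue)
{
    int sum = red + green + blue;
    return (BYTE) ((sum + 1) / 3);
}

/* Truncating variant, shown only for comparison */
BYTE gray_level_truncated(BYTE red, BYTE green, BYTE blue)
{
    int sum = red + green + blue;
    return (BYTE) (sum / 3);
}
```

For a pixel with red 27, green 28, and blue 28 the exact average is about 27.67, so the rounded helper yields 28 while the truncating one yields 27, making the image slightly darker than intended.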

### Horizontal Reflection

The "reflect" filter mirrors the original image, creating a result as if you placed the original in front of a mirror. In this filter, pixels on the left side of the image move to the right, and vice versa.

The reflection effect is achieved by rearranging the placement of the existing pixels rather than introducing new ones.

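```c
// Exchange two pixels (this helper is not shown in the original listing;
// a minimal version is assumed here)
void swap(RGBTRIPLE *a, RGBTRIPLE *b)
{
    RGBTRIPLE tmp = *a;
    *a = *b;
    *b = tmp;
}

// Reflect image horizontally
void reflect(int height, int width, RGBTRIPLE image[height][width])
{
    // For odd widths the middle column stays where it is
    int mid = width / 2;
    for (int i = 0; i < height; i++) {
        for (int j = 0; j < mid; j++)
            // Swap pixels on the left with pixels on the right
            swap(&image[i][j], &image[i][width - 1 - j]);
    }
    return;
}
```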
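The same idea can be seen on a single row. The following sketch is a simplified illustration, not part of the project: `reflect_row` is a hypothetical helper that walks two indices toward each other and stops when they meet, and `BYTE` is assumed to be `uint8_t`.

```c
#include <stdint.h>

typedef uint8_t BYTE; /* assumption: same 8-bit type the listing above uses */

typedef struct
{
    BYTE rgbtBlue;
    BYTE rgbtGreen;
    BYTE rgbtRed;
} __attribute__((__packed__))
RGBTRIPLE;

/* Hypothetical helper: mirror one row of pixels in place */
void reflect_row(int width, RGBTRIPLE row[width])
{
    for (int left = 0, right = width - 1; left < right; left++, right--) {
        RGBTRIPLE tmp = row[left];
        row[left] = row[right];
        row[right] = tmp;
    }
}
```

Because the loop ends as soon as `left` reaches `right`, the middle pixel of an odd-width row is never touched, and no pixel is swapped twice.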

### Image Blurring

To achieve a blurred or softened effect in an image, one method is the "box blur." This technique involves taking each pixel and, for each color value, assigning it a new value by averaging the color values of neighboring pixels.

In the context of a 3x3 box around each pixel, the new value of a pixel is the average of the color values of all pixels within 1 row and column of the original pixel.

> For more information, refer to [blur](https://cs50.harvard.edu/x/2023/psets/4/filter/more/#blur).

```c
#include <math.h>   // round
#include <string.h> // memcpy

// Blur image
void blur(int height, int width, RGBTRIPLE image[height][width])
{
    // Write results to a copy so that already-blurred pixels
    // do not influence their neighbors
    RGBTRIPLE tmp_img[height][width];

    for (int i = 0; i < height; i++) {
        for (int j = 0; j < width; j++) {
            int sum_r = 0;
            int sum_g = 0;
            int sum_b = 0;
            unsigned int cnt = 0;

            // Iterate through the neighboring pixels for the blur operation,
            // skipping positions that fall outside the image
            for (int x = i - 1; x <= (i + 1); x++) {
                if (x < 0 || x >= height)
                    continue;
                for (int y = j - 1; y <= (j + 1); y++) {
                    if (y < 0 || y >= width)
                        continue;
                    sum_r += image[x][y].rgbtRed;
                    sum_g += image[x][y].rgbtGreen;
                    sum_b += image[x][y].rgbtBlue;
                    cnt++;
                }
            }

            // Calculate the average RGB values
            tmp_img[i][j].rgbtRed = round((float) sum_r / cnt);
            tmp_img[i][j].rgbtGreen = round((float) sum_g / cnt);
            tmp_img[i][j].rgbtBlue = round((float) sum_b / cnt);
        }
    }

    memcpy(image, tmp_img, sizeof(RGBTRIPLE) * height * width);
    return;
}
```
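The `cnt` variable above is what keeps the average correct at the borders, where part of the 3x3 box lies outside the image. As a small illustration, the hypothetical helper `neighbor_count` below counts how many pixels of the box are actually inside the image, using the same bounds checks as the blur loop.

```c
/* Hypothetical helper: number of pixels in the 3x3 box centred on
 * (row, col) that lie inside a height x width image */
int neighbor_count(int height, int width, int row, int col)
{
    int count = 0;
    for (int r = row - 1; r <= row + 1; r++) {
        if (r < 0 || r >= height)
            continue;
        for (int c = col - 1; c <= col + 1; c++) {
            if (c < 0 || c >= width)
                continue;
            count++;
        }
    }
    return count;
}
```

In a 4x4 image, a corner pixel averages over 4 pixels, a pixel on an edge over 6, and an interior pixel over all 9. Dividing by a fixed 9 instead would darken the borders of the blurred image.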

### Edge Detection

#### The Sobel operator

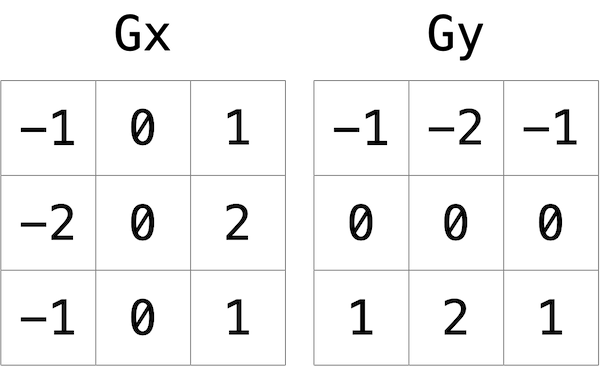

The [Sobel operator](https://en.wikipedia.org/wiki/Sobel_operator) detects edges by modifying each pixel based on the 3x3 grid of pixels that surrounds it. Unlike simple averaging, the Sobel operator calculates a weighted sum of the surrounding pixels. It computes two sums, one for detecting edges in the x-direction and one for the y-direction, each using its own kernel.

> source: [cs50:filter](https://cs50.harvard.edu/x/2023/psets/4/filter/more)

The kernels are designed to emphasize differences in color between neighboring pixels. For instance, in the Gx kernel, pixels to the right are multiplied by a positive number and those to the left by a negative number. A large difference between the two sides therefore produces a large sum, indicating a potential object boundary. The Gy kernel applies the same principle to the pixels above and below.

To generate Gx and Gy values for each color channel of a pixel, each original color value in the 3x3 box is multiplied by the corresponding kernel value and the products are summed, as in the [convolution operation](https://en.wikipedia.org/wiki/Kernel_(image_processing)#Convolution).

> source: [wikipedia: Convolution](https://en.wikipedia.org/wiki/Kernel_(image_processing)#Convolution)
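To make the weighted sum concrete, here is a minimal single-channel sketch. The names `SOBEL_GX`, `SOBEL_GY`, and `apply_kernel` are hypothetical and exist only for this example; the kernel values are the standard Sobel kernels. Like the project code, it multiplies each patch value by the kernel value at the same position without flipping the kernel.

```c
/* Standard Sobel kernels (hypothetical names for this example) */
static const int SOBEL_GX[3][3] = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}};
static const int SOBEL_GY[3][3] = {{-1, -2, -1}, {0, 0, 0}, {1, 2, 1}};

/* Hypothetical helper: weighted sum of a 3x3 patch of one color channel,
 * pairing each patch value with the kernel value at the same position */
int apply_kernel(const int patch[3][3], const int kernel[3][3])
{
    int sum = 0;
    for (int r = 0; r < 3; r++) {
        for (int c = 0; c < 3; c++)
            sum += patch[r][c] * kernel[r][c];
    }
    return sum;
}
```

For a patch whose left and middle columns are 0 and whose right column is 255 (a vertical edge), `apply_kernel` with `SOBEL_GX` gives 255 * (1 + 2 + 1) = 1020, while `SOBEL_GY` gives 0 because the top and bottom rows cancel. A patch of uniform color gives 0 for both kernels.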

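The Sobel filter combines Gx and Gy into a final value by calculating the square root of Gx^2 + Gy^2. Because each color channel is stored in 8 bits, the result is rounded to the nearest integer and capped at 255.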

> source: [wikipedia: Sobel_operator](https://en.wikipedia.org/wiki/Sobel_operator)
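Written as a formula, with the rounding and the cap at 255 that the `combine` function below applies, the value stored for each color channel is:

$$
G = \min\left(255,\ \operatorname{round}\left(\sqrt{G_x^2 + G_y^2}\right)\right)
$$

Two illustrative cases, with values chosen only for the arithmetic: for $G_x = 30$ and $G_y = 40$, $\sqrt{900 + 1600} = 50$, so $G = 50$. For $G_x = 1020$ and $G_y = 0$, the square root is $1020$, which exceeds the 8-bit range, so $G = 255$.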

```c
#include <math.h>   // round, sqrt
#include <stdlib.h> // malloc, free
#include <string.h> // memcpy

// Perform convolution at a given pixel position.
// Returns a heap-allocated {red, green, blue} array that the caller
// must free, or NULL if the allocation fails.
int *convolution(const int height,
                 const int width,
                 const int row,
                 const int col,
                 const int kernel[3][3],
                 RGBTRIPLE image[height][width])
{
    int *sum = malloc(sizeof(int) * 3);
    if (!sum)
        return NULL;

    // Initialize the sum values
    sum[0] = 0;  // Red
    sum[1] = 0;  // Green
    sum[2] = 0;  // Blue

    // Iterate through the kernel and accumulate the sum;
    // positions outside the image contribute nothing
    for (int x = row - 1, kx = 0; kx <= 2 && x < height; x++, kx++) {
        if (x < 0)
            continue;
        for (int y = col - 1, ky = 0; ky <= 2 && y < width; y++, ky++) {
            if (y < 0)
                continue;
            // Perform the convolution for each color channel
            sum[0] += image[x][y].rgbtRed * kernel[kx][ky];
            sum[1] += image[x][y].rgbtGreen * kernel[kx][ky];
            sum[2] += image[x][y].rgbtBlue * kernel[kx][ky];
        }
    }
    return sum;
}

// Combine gx and gy to obtain a gradient value
BYTE combine(int gx, int gy)
{
    unsigned long gradient = (unsigned long) round(sqrt(gx * gx + gy * gy));

    // Ensure the gradient value is within the BYTE range
    if (gradient > 0xff)
        gradient = 0xff;
    return (BYTE) gradient;
}

void edges(int height, int width, RGBTRIPLE image[height][width])
{
    // Define Sobel kernels
    const int mx[3][3] = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}};
    const int my[3][3] = {{-1, -2, -1}, {0, 0, 0}, {1, 2, 1}};

    // Write results to a copy so every pixel is computed from the original
    RGBTRIPLE tmp_img[height][width];

    for (int i = 0; i < height; i++) {
        for (int j = 0; j < width; j++) {
            int *gx = convolution(height, width, i, j, mx, image);
            int *gy = convolution(height, width, i, j, my, image);
            if (!gx || !gy) {
                // Allocation failed: leave the image unchanged
                free(gx);
                free(gy);
                return;
            }
            tmp_img[i][j].rgbtRed = combine(gx[0], gy[0]);
            tmp_img[i][j].rgbtGreen = combine(gx[1], gy[1]);
            tmp_img[i][j].rgbtBlue = combine(gx[2], gy[2]);
            free(gx);
            free(gy);
        }
    }

    memcpy(image, tmp_img, sizeof(RGBTRIPLE) * height * width);
    return;
}
```