---

tags: research

---

# [Experiment] Prompt Design

- 筆記目標:

- 設計多個 SA 相關任務資料集的 prompts

- 不需要將 prompt 設計到爐火純青,足夠 inference、有所本,且 prompt 內的解釋夠清晰即可。

- 對於並非 Few-class Classification 的任務,如何創造 prompt? 例如 aspect category classification。

- 對於對話式的 ABSA,如何給 In-Context Examples (挑選,排序,輸入輸出格式)較佳?

- Task Coverage

- IE: Information Extraction

- ABSA: Aspect-Based Sentiment Analysis

- 只專注讀 Prompt 設計的地方

---

## To Do List

- n-way k-shot few-shot learning

- dialogue

---

## arXiv

- 2023.05.17 Keyword Hits

- keywords: `dialogue opinion mining`

- https://arxiv.org/pdf/1905.02947.pdf

- 2023.05.15 Keyword Hits

- keywords: `in-context opinion mining`

- [Opinion Mining from YouTube Captions Using ChatGPT: A Case Study of Street Interviews Polling the 2023 Turkish Elections](https://arxiv.org/abs/2304.03434)

---

## Opinion Mining from YouTube Captions Using ChatGPT: A Case Study of Street Interviews Polling the 2023 Turkish Elections

- Tugrulcan Elmas, ilker Giil (Indiana University of Bloomington)

- 2023 四月放上 ArXiv,還不知道有沒有投去哪裡或有沒有上

- 2023 Turkish elections poll/interview 影片,使用 Youtube auto-gen captions 來做 Ground-Truth 標記(325 interviews fom 10 videos published by 3 channels)。

- 2 Annotation tasks:

- For 1 citizen:

1. Preferred candidate (3 candidates + other)

2. Motivation (13 classes)

- Ground-Truth 標記:人工標記+ low-agreement 時用 GPT-4

-

- Related Works 寫了

1. Opinion Mining, Sentiment Analysis

2. Using LLMs for data annotation

3. Elections Polling using Social Media Data

- 0-shot inference: ChatGPT was given the Turkish version of the following prompt (i.e., annotation question).

- Overall Results

We show the channel-wise and combined results in Table 1 and concept-wise results in Table 2. On 325 respondents, we report 0.97 precision and recall for candidate prediction and 0.70 for concept classification,if the captions are processed, i.e., the speakers are providedto ChatGPT. This means that ChatGPT may reliably detectpeople’s stances and the frames they mention from YouTubedata.

## InstructABSA

- T*k*-Instruct

- 不太嚴謹。沒有舉出 noaspectterm 的 in-context examples。

- 他的 Positive, Negative, Neutral Examples 是完全 in terms of sentiment。

- 他沒有提出他的 prompt 中的 in-context examples 是怎麼從 training set 中揀選出來的(why these 6 instead of others?)。他的 in-context examples 也是固定的,不是像 Jiachang Li et al 的那篇是動態挑選的。

- ATE

-

- ATSC

-

- Joint Task: ATE + ATSC

-

## What Makes Good In-Context Examples for GPT-3?

- [paper](https://aclanthology.org/2022.deelio-1.10/)

- Jiachang Liu et al, 2021 (Microsoft Dynamics 365 AI, Internship)

- <font color=blue>主要是研究 Prompt 中 Demonstrations (In-context examples) 的選擇、排列順序等如何增進 LLM 的 few-shot 表現。</font>

- (paper claims) 第一個研究 the sensitivity of GPT-3’s few-shot capabilities with respect to the selec-tion of in-context example

- Table 1 shows that the results of GPT tends to fluctutate significantly with different in-context examples chosen.

- 2.3 kNN Augmented IC Examples Selection

- 非 iterative,直接 encode 然後排序。

- 使用 sentence encoders: 第一類是 1-time pretrained 的 BERT, RoBERTa, XLNet,第二類是 twice-pretrained (eg. BERT + STS fine-tuned) 的 BERT, RoBERTa, XLNet。

- 計算量昂貴,新的 test sample $x_{test}$進來後要重新計算一次整個 train set $D_T$ 和 $x_{test}$ 的相似度。

-

- 使用一個額外的 retrieval module,「是可以訓練的」

- 有在 SA 上面做實驗,實驗設定有點 transfer learning 感,是SST-2 trainset 裏拿出 IC examples,然後從 IMDB 資料集拿出 test sample

## (GAS) Towards Generative Aspect-Based Sentiment Analysis

- Wnxuan Zhang et al, 2021(Alibaba Group)

- **Prefix Prompt (No instruction-following):**

-

- (自然語言形式)restaurant general <- (本來的標注模式)RESTAURANT#GENERAL

- <font color=blue>訓練形式:full finetune,在 finetune 時讓模型學習到有哪些 Aspect Category 可以選擇。沒有在 Prefix Prompt 內加入 Category 選項,因此不適合做 no gradient-update 的 zero-shot/few-shot learning。</font>

- 採用模型: pre-trained T5 model

- Prediction Normalization 後處理「預測的正規化」

- the predictions of a generation model may exhibit morphology shift from theground-truths,e.g., fromsingletopluralnouns.

- 實驗結果:Eval Metrics: Triplet/Pair F1 Metrics (span 範圍全對,所有 elements 都要對)

-

## Cross-Task Generalization via Natural Language Crowdsourcing Instructions

- [paper](https://aclanthology.org/2022.acl-long.244.pdf)

- 先不讀,因為 prompt design 看起來內容會跟 Yizhong Wang et al. 那篇重疊。

## (T*k*-Instruct) Super-NaturalInstructions: Generalization via Declarative Instructions on 1600+ NLP Tasks

- [paper](https://arxiv.org/abs/2204.07705)

- Yizhong Wang et al., 2022 (Allen AI)

-

- Instruction Schema,參考來源:Mishra et al.(2022b), though it is simplified.

- Mishra 2022b: Swaroop Mishra, Daniel Khashabi, Chitta Baral, and Hannaneh Hajishirzi. 2022b.Cross-Task Generalization via Natural Language Crowdsourcing Instructions. In Annual Meeting of the Association forComputational Linguistics(ACL).

- **Definition** defines a given task in natural language. This is a complete definition of how aninput text (e.g., a sentence or a document) is expected to be mapped to an output text.

- **Positive Examples** are samples of inputs and

theircorrectoutputs, along with a short explanation for each.

- **Negative Examples** are samples of inputsand their *incorrect/invalid outputs*, along witha short explanation for each. 很酷,範例給的 output 是(故意是)錯的。

## Large Language Models are Few-Shot Clinical Information Extractors

[paper (EMNLP, 2022)](https://arxiv.org/abs/2205.12689)

- 作者背景:[(Monica Agrawal)](https://people.csail.mit.edu/magrawal/) recently completed my PhD at MIT CSAIL, advised by David Sontag in the Clinical Machine Learning group. Standford CS Bachelor and Master.

- Terminology:

把答案從 LLM 的回答中後處理回來的過程/執行的程式碼:resolve/resolver

- Introduce 3 new annotated datasets for benchmarking few-shot clinical IE tasks.

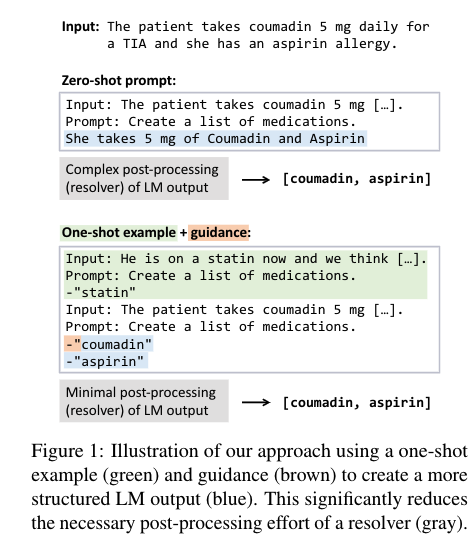

- <font color=blue> 以方便後處理為導向的 prompt design,稱作Guided Prompt Design: easy-to-structure output and resolvers </font>

- Method 3.1 Predicting Structured Outputs with LLMs 介紹為何 easy-to-structure prompt 是重要的(resolvers 複雜程度變高)

- Section 6, 7 介紹 Coref Resolution 和 Medication Extraction 兩個任務的 prompt 設計

- Section 7: Medication Extraction,例子 see Appendix C.4

- Token-level and phrase-level,兩種都很像 BIOS tagging(類似直接叫 model output 出 BIOS tagging 的格式),然後有分 one-shot example 和 zero-shot。

-