In my last diary [entry](https://www.openstreetmap.org/user/ZeLonewolf/diary/401697), I described how I hosted the [tile.ourmap.us](https://tile.ourmap.us/) planet vector tileserver for the [OSM Americana](https://github.com/ZeLonewolf/openstreetmap-americana) project using Amazon Web Services (AWS). That approach works, but it costs more than necessary and gets expensive if you want the tiles to update continuously!

While I was at [State of the Map US](https://2023.stateofthemap.us/) in Richmond, VA this summer, I ran into [Brandon Liu](https://twitter.com/bdon), the creator of [protomaps](https://protomaps.com/) and, more importantly, the [PMTiles](https://github.com/protomaps/PMTiles) file format. PMTiles has several advantages over [mbtiles](https://wiki.openstreetmap.org/wiki/MBTiles) that allow us to create an ultra-low-cost setup. He shared the key elements of this recipe with me, and I highly recommend his guides for building and hosting tile servers.

With this setup, I am able to run [tile.ourmap.us](https://tile.ourmap.us/) for $1.61 per month, with full-planet updates every 9 hours.

## Eliminating things that cost money

The first thing that costs money is running a cloud rendering server. I used to spin up a very hefty server with at least 96 GB of RAM and 64 CPUs, which could render a planet in about half an hour. However, thanks to improvements in [planetiler](https://github.com/onthegomap/planetiler), we can now run planet builds on hardware with less RAM (provided there is enough free disk space), at the cost of longer build times.

I happened to have a Dell Inspiron 5593 laptop lying around that I wasn't using, because it had a [hardware defect](https://www.dell.com/community/en/conversations/inspiron/inspiron-5593-keyboard-issues-n-b-arrow-space-bar/647f94ddf4ccf8a8de74389f) where several keys on the keyboard stopped working, even after a keyboard replacement. It had decent specs: a quad-core, 8-thread processor (Intel(R) Core(TM) i7-1065G7), 64 GB of RAM, and a 500 GB SSD. Rather than let it continue to collect dust, I plugged in a keyboard and installed Ubuntu so it could be my new render server.

I did have to add one piece of hardware to complete the setup: a [USB-to-gigabit ethernet adapter](https://www.amazon.com/dp/B09GRL3VCN), which I bought on Amazon for $13. The built-in wired ethernet jack was limited to 10/100 (about 11 MiB/s), and the built-in wifi proved to be unstable at high speeds. The USB ports on this laptop model are USB 2.0, so the adapter is limited to 480 Mbps rather than the full gigabit, but that's still fast enough to upload a planet file in less than 20 minutes rather than over an hour. This one addition brought the 11-hour build loop down to a 9-hour build loop.
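As a rough check on those upload times, here is a back-of-the-envelope Python sketch. It assumes a 70 GB archive (the planet file size used later in this post) and the nominal link rate with no protocol overhead, so real uploads will be somewhat slower; the `efficiency` parameter is a hypothetical knob for that:

```python
def upload_minutes(file_gb: float, link_mbps: float, efficiency: float = 1.0) -> float:
    """Minutes to push file_gb gigabytes (10**9 bytes) over a link_mbps megabit/s link.

    efficiency is an assumed fraction of the nominal rate actually achieved
    (1.0 = theoretical best case; real USB 2.0 / ethernet throughput is lower).
    """
    bits = file_gb * 1e9 * 8
    seconds = bits / (link_mbps * 1e6 * efficiency)
    return seconds / 60


for label, mbps in [("10/100 ethernet", 100), ("USB 2.0 adapter", 480), ("full gigabit", 1000)]:
    print(f"{label:>16}: {upload_minutes(70, mbps):6.1f} min")
```

Under these assumptions it works out to roughly 93 minutes at 100 Mbps and about 19.4 minutes at 480 Mbps, consistent with the figures above.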

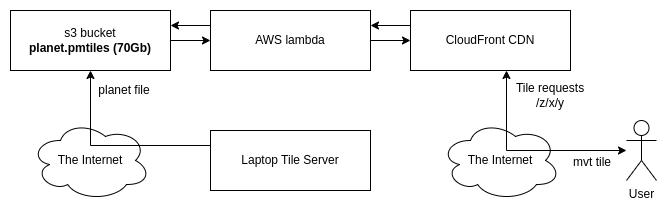

The next two things that cost money are the computer that runs the tileserver (an EC2 t4g.micro instance) and an EFS network file share that stores the planet file. We can eliminate both costs by switching to [PMTiles](https://github.com/protomaps/PMTiles), which planetiler now supports as an output format. The advantage of PMTiles over mbtiles is that a PMTiles file is a raw, indexed archive of tiles, while an mbtiles file is an SQLite database. That means individual tiles can be retrieved from the archive with [HTTP range requests](https://developer.mozilla.org/en-US/docs/Web/HTTP/Range_requests), a job simple enough for so-called *serverless functions*, rather than requiring a tile server such as [TileServerGL](http://tileserver.org/).
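To make the range-request idea concrete, here is a minimal Python sketch that asks a server for only the first 127 bytes of an archive (the fixed-size header in PMTiles version 3) and decodes the section offsets from it. The field layout follows my reading of the PMTiles v3 specification; `PMTilesHeader`, `parse_header`, and `fetch_header` are illustrative names, not part of any PMTiles library:

```python
import struct
import urllib.request
from typing import NamedTuple

HEADER_LEN = 127  # PMTiles v3 headers are a fixed 127 bytes


class PMTilesHeader(NamedTuple):
    root_dir_offset: int
    root_dir_length: int
    metadata_offset: int
    metadata_length: int
    leaf_dirs_offset: int
    leaf_dirs_length: int
    tile_data_offset: int
    tile_data_length: int
    min_zoom: int
    max_zoom: int


def parse_header(buf: bytes) -> PMTilesHeader:
    """Decode the byte offsets/lengths of each section from a v3 header."""
    if len(buf) < HEADER_LEN or buf[:7] != b"PMTiles" or buf[7] != 3:
        raise ValueError("not a PMTiles v3 archive")
    # Eight little-endian uint64 offset/length pairs start right after magic + version.
    (root_off, root_len, meta_off, meta_len,
     leaf_off, leaf_len, data_off, data_len) = struct.unpack_from("<8Q", buf, 8)
    return PMTilesHeader(root_off, root_len, meta_off, meta_len,
                         leaf_off, leaf_len, data_off, data_len,
                         min_zoom=buf[100], max_zoom=buf[101])


def fetch_header(url: str) -> PMTilesHeader:
    # Ask the server (e.g. s3) for bytes 0..126 only; HTTP ranges are inclusive.
    req = urllib.request.Request(url, headers={"Range": f"bytes=0-{HEADER_LEN - 1}"})
    with urllib.request.urlopen(req) as resp:
        return parse_header(resp.read())
```

Every later lookup works the same way: compute a byte range from these offsets and send another `Range` request, so no database ever has to run.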

A "serverless function" is simply a bit of code that runs on cloud infrastructure without a machine dedicated to it, which makes it significantly cheaper: the cloud provider can pack your function onto shared hardware alongside other customers' functions. The AWS serverless offering is called [AWS Lambda](https://aws.amazon.com/lambda/), and it can read files stored in AWS's [Simple Storage Service (s3)](https://aws.amazon.com/s3/), which supports HTTP range requests. In this case, the function takes z/x/y tile requests, performs a few lookups in the PMTiles file to determine where the tile is located, and retrieves the tile's bytes from that location, all without the overhead of a database layer.
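The "few lookups" can be sketched roughly as follows. PMTiles identifies each tile by a single ID (the count of all tiles at lower zooms, plus a Hilbert-curve index within the zoom), searches a directory sorted by that ID for the entry covering it, and turns the entry into a byte range. This is an illustrative Python sketch, not Brandon's lambda code: it assumes the directory has already been fetched and decoded into `Entry` tuples (real PMTiles directories are compressed and varint-encoded), and it stops at a leaf-directory pointer instead of following it:

```python
from bisect import bisect_right
from typing import NamedTuple, Optional


class Entry(NamedTuple):
    tile_id: int     # first tile ID covered by this entry
    offset: int      # byte offset relative to the tile-data (or leaf-directory) section
    length: int      # number of bytes
    run_length: int  # consecutive tile IDs sharing these bytes; 0 means "leaf directory"


def zxy_to_tile_id(z: int, x: int, y: int) -> int:
    """Tiles in zooms 0..z-1, plus the Hilbert-curve index of (x, y) at zoom z."""
    base = sum(4 ** i for i in range(z))  # 1 + 4 + 16 + ...
    n = 1 << z
    d = 0
    s = n // 2
    while s > 0:
        rx = 1 if x & s else 0
        ry = 1 if y & s else 0
        d += s * s * ((3 * rx) ^ ry)
        # Rotate/flip the quadrant so the curve stays continuous.
        if ry == 0:
            if rx == 1:
                x, y = n - 1 - x, n - 1 - y
            x, y = y, x
        s //= 2
    return base + d


def find_entry(entries: list[Entry], tile_id: int) -> Optional[Entry]:
    """Binary-search a directory sorted by tile_id for the entry covering tile_id."""
    i = bisect_right([e.tile_id for e in entries], tile_id) - 1
    if i < 0:
        return None
    e = entries[i]
    if e.run_length == 0:
        return e  # leaf-directory pointer: the caller must fetch and search it
    if tile_id < e.tile_id + e.run_length:
        return e
    return None  # tile ID falls in a gap: no such tile in the archive


def range_header(tile_data_offset: int, e: Entry) -> str:
    start = tile_data_offset + e.offset
    return f"bytes={start}-{start + e.length - 1}"  # HTTP ranges are inclusive
```

In a real function, the resulting `Range` header goes into a GET on the s3 object, and the response body is the tile itself.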

This setup is advantageous because an AWS lambda costs a fraction of what an EC2 node does, and s3 costs a fraction of what EFS does. We can also put the CloudFront Content Delivery Network (CDN) in front of the lambda, which cuts costs further by caching tiles so that the function is invoked less often.

Here's the monthly cost breakdown for the two approaches:

**EC2 + EFS architecture:**

* EC2 t4g.micro: $6.13 / month (this cost can be further reduced by up to 40% by purchasing a reserved instance)

* EFS storage: 30¢ per GB-month × 70 GB = $21.00 / month

**Lambda + s3 + CloudFront architecture:**

* Lambda: **FREE** for the first 1 million requests per month

* s3 storage: 2.3¢ per GB-month × 70 GB = $1.61 / month

* CloudFront: **FREE** for the first 1TB of outbound bandwidth

If you exceed these "free tier" limits, you will start to incur costs. However, the EC2+EFS architecture has the same issue, even if you put a CDN in front of it. In practice, this has so far reduced my tile server hosting costs to near zero.
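The arithmetic behind the two lists is simple enough to write down. This sketch uses only the prices quoted above and assumes traffic stays inside the Lambda and CloudFront free tiers, so those services are counted as zero:

```python
# Monthly prices quoted above, in USD.
EC2_T4G_MICRO = 6.13
EFS_PER_GB_MONTH = 0.30
S3_PER_GB_MONTH = 0.023


def monthly_cost_ec2_efs(archive_gb: float) -> float:
    # Always-on instance plus network file storage for the archive.
    return round(EC2_T4G_MICRO + EFS_PER_GB_MONTH * archive_gb, 2)


def monthly_cost_lambda_s3(archive_gb: float) -> float:
    # Assumes Lambda requests and CloudFront bandwidth stay within the free tier.
    return round(S3_PER_GB_MONTH * archive_gb, 2)


print(monthly_cost_ec2_efs(70))    # 27.13
print(monthly_cost_lambda_s3(70))  # 1.61
```

The storage price dominates both totals, which is why moving the archive from EFS to s3 matters more than dropping the EC2 instance.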

Uploads to s3 are also free (up to a limit that we won't exceed). Data transfer between lambda and an s3 bucket in the same region is free, as is transfer from s3 to CloudFront, so you'll incur no data transfer charges between these services. Finally, PUT operations on s3 buckets are atomic, so the file is cleanly swapped out when you upload a new one.

## The technical setup

The setup is rather simple:

1. The 70 GB planet file is hosted in an s3 bucket

2. A lambda converts /z/x/y HTTP requests to HTTP range requests on the s3 bucket

3. The CloudFront distribution serves tiles over HTTPS and caches responses

4. The laptop build server uploads directly to the s3 bucket

This setup is essentially what Brandon describes in his [Protomaps on AWS](https://protomaps.com/docs/cdn/aws) guide, and I was able to use his lambda code with no modification.
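Brandon's lambda handles request routing for you, but for illustration, the first thing any such function has to do is turn the request path into tile coordinates before it can look anything up. Here is a hypothetical Python sketch; the `/<name>/<z>/<x>/<y>.mvt` URL shape, the `<name>.pmtiles` object key, and the default maximum zoom of 14 are all assumptions, not a description of his code:

```python
import re
from typing import NamedTuple, Optional

# Hypothetical URL shape: /<archive name>/<z>/<x>/<y>.mvt, e.g. /planet/14/4823/6160.mvt
TILE_PATH = re.compile(r"^/(?P<name>[\w-]+)/(?P<z>\d+)/(?P<x>\d+)/(?P<y>\d+)\.mvt$")


class TileRequest(NamedTuple):
    name: str
    z: int
    x: int
    y: int


def parse_tile_path(path: str, max_zoom: int = 14) -> Optional[TileRequest]:
    """Return tile coordinates for a valid path, or None (the caller answers 404)."""
    m = TILE_PATH.match(path)
    if not m:
        return None
    z, x, y = int(m["z"]), int(m["x"]), int(m["y"])
    # Reject coordinates that cannot exist at this zoom (2**z tiles per axis).
    if z > max_zoom or x >= 1 << z or y >= 1 << z:
        return None
    return TileRequest(m["name"], z, x, y)


def object_key(req: TileRequest) -> str:
    # The whole tileset is one s3 object; the tile is a byte range inside it.
    return f"{req.name}.pmtiles"
```

Rejecting impossible coordinates up front means malformed requests never cost an s3 read.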

It's important that the planet pmtiles file in your s3 bucket isn't directly downloadable; otherwise, someone might download the entire 70 GB file and leave you with the bill for the bandwidth!

For the laptop build server, I've published my [build scripts](https://github.com/ZeLonewolf/planetiler-scripts/tree/main/local) on GitHub. First, I downloaded a copy of the [planet](https://planet.openstreetmap.org/) using BitTorrent. Then, each time the build runs, the script does the following:

1. Updates an [RSS feed](https://tile.ourmap.us/rss.xml) to indicate that a build has started, and links a [seashells.io](https://seashells.io/) console that shows the live build in action

2. Updates the `planet.osm.pbf` file to the most recent hourly diff using [pyosmium-up-to-date](https://docs.osmcode.org/pyosmium/latest/tools_uptodate.html)

3. Renders the planet in pmtiles format using planetiler

4. Uploads the generated `planet.pmtiles` to s3

5. Invalidates the CDN cache so users start seeing the new tiles

6. Deletes the local `planet.pmtiles` file

7. Updates the RSS feed to indicate that the build has completed, and reports how long the build took.

Note that the cache invalidation step is optional. You can instead set a cache timeout and simply let users receive cached tiles until the CDN's time to live (TTL) expires.
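The core of the build loop above could be orchestrated along these lines. This is a hypothetical Python sketch, not the published build scripts linked above: the bucket name, distribution ID, jar name, and Java heap size are placeholders, the planetiler invocation assumes its `--osm-path` and `--output` options, and the RSS updates are reduced to `print` calls. Invalidation is a parameter, since it's optional:

```python
import os
import subprocess
import time

PBF = "planet.osm.pbf"
PMTILES = "planet.pmtiles"
BUCKET = "my-tile-bucket"          # hypothetical bucket name
DISTRIBUTION_ID = "E123EXAMPLE"    # hypothetical CloudFront distribution ID
PLANETILER_JAR = "planetiler.jar"  # hypothetical jar; Americana uses its own profile


def build_steps(invalidate: bool = True) -> list[list[str]]:
    steps = [
        # Apply the latest hourly diffs to the local planet file (updated in place).
        ["pyosmium-up-to-date", PBF],
        # Render the planet to a single PMTiles archive; heap size is an assumption.
        ["java", "-Xmx32g", "-jar", PLANETILER_JAR,
         f"--osm-path={PBF}", f"--output={PMTILES}"],
        # Upload to s3, replacing the previous object.
        ["aws", "s3", "cp", PMTILES, f"s3://{BUCKET}/{PMTILES}"],
    ]
    if invalidate:
        # Optional: flush CloudFront so clients see new tiles before the TTL expires.
        steps.append(["aws", "cloudfront", "create-invalidation",
                      "--distribution-id", DISTRIBUTION_ID, "--paths", "/*"])
    return steps


def run_build(invalidate: bool = True, dry_run: bool = False) -> float:
    start = time.monotonic()
    print("build started")  # stand-in for the "build started" RSS update
    for argv in build_steps(invalidate):
        print("+", " ".join(argv))
        if not dry_run:
            # check=True aborts on any non-zero exit; some tools use non-zero
            # codes for non-fatal outcomes, so check their documentation.
            subprocess.run(argv, check=True)
    if not dry_run and os.path.exists(PMTILES):
        os.remove(PMTILES)  # free the disk space before the next run
    elapsed = time.monotonic() - start
    print(f"build finished in {elapsed / 3600:.1f} h")  # stand-in for the "completed" RSS update
    return elapsed
```

Calling `run_build(dry_run=True)` only prints the commands, which is a handy way to check the sequence before pointing it at a real bucket.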

Since I'm producing an RSS feed, I can hook it up to a Slack channel, which I've done in the [OSM US Slack](https://slack.openstreetmap.us/), at the channel [#ourmap-tile-render](https://osmus.slack.com/archives/C05NXBE936H). This allows me to easily check in on the status of my build server when I'm away from home and can't log into the laptop on my home network.
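Generating such a feed needs nothing beyond the Python standard library. Here is a minimal RSS 2.0 sketch; the channel title and description and the `(title, description, timestamp)` tuples for build events are hypothetical, chosen just to show the structure a feed reader expects:

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def rss_feed(items: list[tuple[str, str, datetime]],
             link: str = "https://tile.ourmap.us/") -> str:
    """Build a minimal RSS 2.0 document from (title, description, timestamp) tuples."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = "Tile render status"  # hypothetical
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = "Planet build start/finish notices"
    for title, description, when in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = title
        ET.SubElement(item, "description").text = description
        # RSS dates use the RFC 822 style that email.utils.format_datetime produces.
        ET.SubElement(item, "pubDate").text = format_datetime(when)
    return ET.tostring(rss, encoding="unicode")


started = datetime(2023, 9, 1, 12, 0, tzinfo=timezone.utc)
print(rss_feed([("Build started", "Rendering planet", started)]))
```

Each build would append an item when it starts and another when it finishes, then rewrite the file that the feed URL serves.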

## Full automation

Since we're using hourly diffs, it makes sense to kick off a build just after each hourly diff is published. Hourly diffs are published at [2 minutes past the hour](https://wiki.openstreetmap.org/wiki/Planet.osm/diffs). However, since the build takes longer than an hour, we'll need to implement a lock file to make sure only one build runs at a time. We'll also wait a minute after the hourly diff publish time to make sure that the file is available.

Therefore, the full-automation setup looks like this:

1. A cron job deletes the lock file (if it exists) at system startup

2. Every hour, at 3 minutes past the hour, start a build if there's no lock file

3. Create a lock file

4. Run the build

5. Delete the lock file
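Here is a hypothetical Python sketch of the lock handling, with matching crontab entries shown as comments (the lock path and script path are placeholders). Creating the file with `O_CREAT | O_EXCL` makes the check and the creation one atomic step, so two runs can't both decide the lock is free:

```python
import os

LOCK_PATH = "/tmp/planet-build.lock"  # hypothetical location

# Hypothetical crontab entries matching the steps above:
#   @reboot   rm -f /tmp/planet-build.lock
#   3 * * * * /usr/bin/python3 /path/to/build_script.py


def try_lock(path: str = LOCK_PATH) -> bool:
    """Atomically create the lock file; return False if another build holds it."""
    try:
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as f:
        f.write(str(os.getpid()))  # record which process owns the lock
    return True


def run_locked(build, path: str = LOCK_PATH) -> bool:
    """Run build() only if no other build is running; return whether it ran."""
    if not try_lock(path):
        return False  # the previous build is still going; skip this hour
    try:
        build()
    finally:
        os.remove(path)  # release the lock even if the build fails
    return True
```

Unlike the steps listed above, this sketch also releases the lock when the build fails, so one bad run doesn't block every later one. The `@reboot` cleanup is still needed, because a `finally` block can't run if the laptop loses power mid-build.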

Voilà! We now have a continuously-updating vector planet tile server, hobbyist-style.