Note 07 : Data Structures - [Homepage](http://gddh.github.io/)

============

# Stack

Intuitively understand this as a real life stack of objects, let's say dirty laundry. Assuming you stack your dirty laundry in a laundry basket, you will keep putting your clothes on top of each other. When you take it to wash, the clothes that you put in most recently will be on top. It will also be the first thing that you put into the washing machine.

## Infix to Postfix

### Problem Parameter

**Infix notation:** operand1 op operand2

```

ex1: 4 + 2

ex2: 13 * ( 43 + 4 ) - 2 / 3

```

**Postfix notation:** operand1 operand2 op

```

ex1: 4 2 +

ex2: 13 43 4 + * 2 3 / -

```

How can I convert from **Infix** to **Postfix**?

Iterate through the input string. Given a valid input, we would have spaces, operators, and operands. When we see an operand, use the stack to hold the operators.

# Queue

## Implementation Tips

- When do I resize?

- initial idea: Use the front and the back pointers and find relation between them for resize.

- This doesn't work b/c the front and back pointers are assigned the index of the value at the front and back. It could work if you wanted to resize when you are full, but it is possible that when the current insertion results in a full, you don't want to resize.

- Intuitively: Resize when the queue is full.

- A variable that keeps track of the number of elements in our queue can be used to determine whether or not the queue before the current insertion is already full.

## Sliding Window Problem

We can solve the sliding window problem by adding a bit to the general implementation of a queue. Let's define the problem parameter first.

### Problem Parameter

Taken from Leetcode

Given an array nums, there is a sliding window of size k which is moving from the very left of the array to the very right. You can only see the k numbers in the window. Each time the sliding window moves right by one position.

For example,

Given nums = ``[1,3,-1,-3,5,3,6,7]``, and k = 3.

```

Window position Max

--------------- -----

[1 3 -1] -3 5 3 6 7 3

1 [3 -1 -3] 5 3 6 7 3

1 3 [-1 -3 5] 3 6 7 5

1 3 -1 [-3 5 3] 6 7 5

1 3 -1 -3 [5 3 6] 7 6

1 3 -1 -3 5 [3 6 7] 7

```

Therefore, return the max sliding window as ```[3,3,5,5,6,7]```.

**Note:** You may assume k is always valid, ie: 1 ≤ k ≤ input array's size for non-empty array.

### Solution Discussion

My initial solution was to go through the array and for each subset of size k, find the maximum by iterating through the subset. This takes O(kN) time because for each element, we will need to iterate through the subset to find the max.

We improve our runtime by using a [deque](http://interactivepython.org/runestone/static/pythonds/BasicDS/WhatIsaDeque.html) (double-ended queue).

The deque will hold the subset that we are considering.

**How it Works**

- The solution size is the given array size - k + 1

- Iterate through the initial array.

1. Fill the deque up to size k - 1.

2. Initialize a trailing pointer

3. At each of the following elements (we update index and trailing pointers)

1. While the current element is greater than the back of the array

- dequeue from the back of the array.

2. Enqueue the current element

3. If trailing pointer == front of the arr

- dequeue from the front.

**Why it Works**

Observe:

- Each element will be enqueued into the array.

- We will remove an element from the deque:

- if the trailing pointer (the left part of the window) is pointing to it because it isn't in the next window.

- if the current element that we are considering is bigger than the elements in the queue because in the current subset, we've already found a bigger element, so we should get rid of all the smaller ones

- By doing this, the deque acts as a dynamic subset that keeps track of possible maximums.

Because we are iterating through the elements only once, the runtime is O(N)

# Heaps / Priority Queues

## Motivation

- *Problem:* Given a dynamic collection of elements, process the elements starting from the largest/smallest item. Dynamic implies that elements will be added and removed.

- Essentially, be able to quickly:

- get the smallest/largest item in our collection of elements

- add to the collection

- remove from the collection

- *Solution:* Priority Queue (PQs)

- Data Structure for PQs: Binary Heaps

### Basic definitions:

**Priority Queue**. A Max Priority Queue (or PQ for short) is an ADT that supports at least the insert and delete-max operations. A MinPQ supports insert and delete-min.

**Heaps**. A max (min) heap is an array representation of a binary tree such that every node is larger (smaller) than all of its children. This definition naturally applies recursively, i.e. a heap of height 5 is composed of two heaps of height 4 plus a parent.

### Basic Concept ( [CS 61B/Prof Hug, ](https://sp18.datastructur.es/)[Algorithms 4th ed.](https://algs4.cs.princeton.edu/24pq/))

There are two types of heaps: Min heap and max heap. We will consider a min heap (logic is symmetrical for max heap)

**Min Heap** - a *balanced* binary tree (not binary search tree) where each node must hold smaller values than the value of the node children. This means that the root will hold the smallest value of all the nodes.

- A Balanced binary tree is a binary tree that is "completely bushy". Each level is completely filled, except the last level - which is filled from left to right.

## How Min Heap Works

Here is a short [animation](https://docs.google.com/presentation/d/1VEd2Pm_3OuvkC1M8T5XAhsBTQFxVHs386L79hktkDRg/pub?start=false&loop=false&delayms=3000&slide=id.g11ecaeaf56_0_0) from Hug's lecture.

### Insert

1. Add the element to the right most position in the last row or the first position of a new row of the binary tree to keep the balanced property.

2. "Swim"/promote the element up to its rightful place.

- Compare the element to its parent. If the element is smaller, swap the element with its parent.

- Repeat until no smaller parent exists or the element is at root.

### Remove Min

1. Keep value of root as the min value.

2. Swap the root with the last element in the tree.

3. "Sink" the element down to its rightful place

- Compare the element with the smaller of the left and right child. If element is bigger, swap the element with child.

- Repeat until it is at the bottom level or is smaller than child.

4. Return min value

## Implementation Details

By understanding the relationship between the parent and the child, we will see that arrays offer a convenient approach for heaps.

Consider the following min heap and see if you can find the parent index given the child index.

Given the parent index, the child index is:

parent(i) = (i - 1) / 2

We can make the implementation easier by using just a little bit more space. If we keep the 0th index empty, we can make the math for the general relationship even easier.

parent(i) = i / 2

left_child(i) = i * 2

right_child(i) = i * 2 + 1

## Runtime Analysis

Some comparison - same as 61B Hug lecture

<table>

<tr>

<th></th>

<th>Ordered Array</th>

<th>Bushy BST</th>

<th>Hash Table</th>

<th>HEAP</th>

</tr>

<tr>

<td>Add</td>

<td>Θ(n)</td>

<td>Θ(logn)</td>

<td>Θ(1)</td>

<td>Θ(logn)</td>

</tr>

<tr>

<td>getSmallest</td>

<td>Θ(1)</td>

<td>Θ(logn)</td>

<td>Θ(n)</td>

<td>Θ(1)</td>

</tr>

<tr>

<td>Remove Smallest</td>

<td>Θ(n)</td>

<td>Θ(logn)</td>

<td>Θ(n)</td>

<td>Θ(logn)</td>

</tr>

</table>

## Applications

From geeks for geeks

1) Heap Sort: Heap Sort uses Binary Heap to sort an array in O(nLogn) time.

2) Priority Queue: Priority queues can be efficiently implemented using Binary Heap because it supports insert(), delete() and extractmax(), decreaseKey() operations in O(logn) time. Binomoial Heap and Fibonacci Heap are variations of Binary Heap. These variations perform union also efficiently.

3) Graph Algorithms: The priority queues are especially used in Graph Algorithms like Dijkstra’s Shortest Path and Prim’s Minimum Spanning Tree.

4) Many problems can be efficiently solved using Heaps. See following for example.

a) K’th Largest Element in an array.

b) Sort an almost sorted array.

c) Merge K Sorted Arrays.

# Binary Search Tree

## Basic Definitions

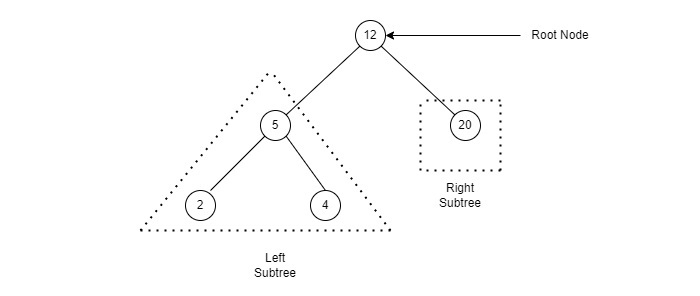

Binary Search Tree - Rooted binary trees where each node has 0, 1, or 2 children and is organized in a sorted order.

**Sorted Order**

The following principles follow from the nature of the sorted order:

1. For any elements a, b: a < b or b < a

2. For any elements a, b, c: if a < b and b < c, then a < c

3. Following from the above two principles, no two elements can have the same value.

The sorted nature allows us to search the tree for elements.

**Tree**

A set of vertices with edges, where there exists only one path between any pair of vertices.

**Rooted**

A rooted tree means that every node with the exception of the "root" of the tree has one parent. The "root node" has no parents. In this way,

**Binary**

Every node can have 0, 1, or 2 children.

**Example**

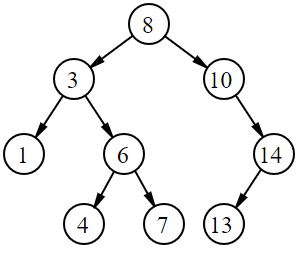

Notice how any node will be smaller than what is on the right of it and will be larger than what is on the left of it.

**Completeness**

Every level is full with the possible exception of the last level, which will be filled from left to right

**Fullness**

Every node has either 0 or 2 children.

**Height**

The height of the tree is the number of edges away from the tree. This means if there is only a root, height is 0. The completeness example above has a height of 3.

## Implementation Details

[My implementation of BST.](https://github.com/gddh/practice/tree/master/data_structures/bst)

### Basic Structure

A binary tree is made up of nodes, so we use a struct to represent the nodes:

```

typedef struct s_node

{

int data;

struct s_node *left;

struct s_node *right;

} t_node;

```

Each node will hold:

1. the data (in this case ```int```)

2. pointer to the left child

3. pointer to the right child

Just as in linked list, it would be easier if we had a constructor to create nodes. ```t_node *create_node(int data)```

### Recursive Format

**Arms Length Recursion**

When writing the insert function, your initial instinct may be to write code that is similar to:

```

t_node *insert(t_node *node, int data)

{

if (node->left == NULL)

node->left = create_node(data);

if (node->right == NULL)

node->right = create_node(data);

}

```

DON'T DO IT!!! Professor Hug called this arms length recursion because you stop the recursion when you are arms length away from the base case. It is a bad practice for these [reasons](https://www.quora.com/What-is-arms-length-recursion?utm_medium=organic&utm_source=google_rich_qa&utm_campaign=google_rich_qa)

Allow the recursion to go all the way through. Rather than checking the children, allow the recursion to go to the children. Then check the current node. The following part has an example of a "better" format.

**Setting the Children**

A verbose insert function.

```

t_node *insert(t_node *node, int data)

{

if (!node)

return create_node(data);

if (node->data < data)

{

node->right = insert(node->right, data); <---- Set right child to return val of recur. call

return (node); <--- return the current node

}

if (node->data > data)

{

node->left = insert(node->left, data); <---- Set left child to return val of recur. call

return (node); <--- return the current node

}

else //node->data == data

return (node); <--- return the current node

}

```

**Notice**

1. We set the child to the return value of the recursive call.

2. We return the current node.

This ensures the integrity of the tree as we make modifications to the tree. For example, let's say ```function_A``` uses this recursive structure, then when I do the following:```t->right = function_A(t->right, data1);``` I know that ```function_A``` will return ```t->right``` to me after ```function_A``` has made its modifications. We will revisit this when we discuss the ```delete``` function.

### Insert

We can use this function to write ```insert.``` The ```insert``` function will take a node and the data to insert as its parameters. The function will return the node.

If the data is already in the tree, the ```insert``` function will simply return that node.

When inserting a data, we start at the root node. We compare the data with the root node.

- If the data is smaller than the node's data, the data belongs on the left side of the node on the tree. So we will recurse onto the left child of the node. The returned value will be set to the left child.

- If the data is larger than the node, then the data belongs on the right side of the node on the tree. So we will recurse onto the right child of the node. The returned value will be set to the right child.

- If the data is equal to the node's data, then we will simply return the node because by definition, BSTs cannot have two nodes with the same information.

- If we've recursed to a ```NULL``` node, then we've hit the end of the tree. Create a node and return the node.

- **Note:** this newly returned node will be set to either the left child or the right child of the parent node.

### Delete

When deleting from a BST there are three possible cases.

1. The node that we are deleting has no child. In this case, we recurse to the node, free the node and then return NULL.

2. The node that we are deleting has one child. In this case, we recurse to the node, free the node, return the node's child. (You will probably need a temp node).

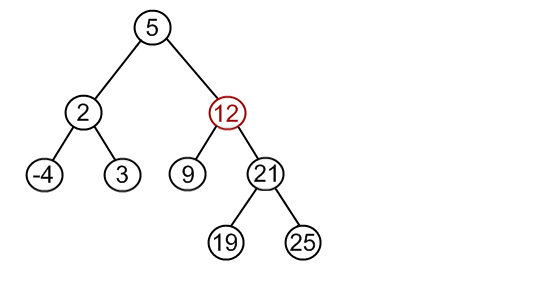

3. The node that we are deleting has two children. In this case, we need to find the closest substitute. Let's say we have the following tree and the node we are deleting is 12:

- The closest substitute will either be the left child's biggest node (9) or the right child's smallest node (19). (for us, let's choose the right child's smallest node).

- The subustitute (19) will effectively take the place of node we are deleting (12). However, if we were to just substitute, we are left with two 19s.

- This means that we must recursively delete the right child's smallest node after we've moved it up.

- This is one example of the helpful nature of the recursive structure because we can set the return value of deleting the right child's smallest node from the right child's subtree.

- We can speed things up by writing a ```deleteSmallest``` function.

Picture from usfca.cs

Here is an [animation](https://www.cs.usfca.edu/~galles/visualization/BST.html) you can play with to develop a better understanding of BSTs.

## Tree Traversal

Picture from tutorialspoint

### Inorder Traversal.

Inorder traversal for above bst: 10 14 19 27 31 35 42

**Algorithm:**

```

process my left progeny

process myself

process my right progeny

```

**NOTE:** Easily reverse the sort by swapping the first and third step in the algorithm

- Equivalent to reading the tree from left to right.

- Returns the information in sorted order.

### Postorder Traversal.

Postorder traversal for above bst: 10 19 14 31 42 35 27

**Algorithm:**

```

process my left descendants

process my right descendants

process myself.

```

Postorder traversal is used:

- for freeing the entire tree.

- to get reverse polish notation/post fix notation for expressions

### Preorder Traversal.

Preorder traversal for above bst: 27 14 10 19 35 31 42

**Algorithm**

```

process myself

process my left descendants

process my right descendants

```

Preorder traversal is used:

- For making a copy of the tree.

- To get polish notation/pre fix notation for expressions

**Picture Copied for your Convenience**

Picture from tutorialspoint

### Levelorder Traversal.

Levelorder traversal for the above bst: 27 14 35 10 19 31 42

**Implementation 1: Using Functions**

```

void print_level(t_node *node, int level)

{

if (level == 0)

printf("%d ");

print_level(node->left, level - 1);

print_level(node-right, level - 1);

}

void levelorder(t_node *root)

{

for (int i = 0; i <= height(root); i++)

print_level(root, level);

}

```

As you've probably realized this implementation of level order is not the most efficient implementation as there is alot of retraversal.

**Implementation 2: BFS with a queue**

https://www.cs.cmu.edu/~adamchik/15-121/lectures/Trees/code/BST.java