# Typography Research Collection

[[hackmd]](https://hackmd.io/s/B1BZCZpPX)[[github]](https://github.com/IShengFang/TypographyResearchCollection)[[github pages]](https://ishengfang.github.io/TypographyResearchCollection/)

Typography sits at the intersection of technology and the liberal arts.

This page collects research in computer graphics, computer vision, and machine learning related to typography.

If you discover relevant new work, please feel free to contact the main maintainer, [I-Sheng Fang](https://ishengfang.github.io/). 🙂

## Contents

- [Large Language Model](#large-language-model)

- [Vision Language Model](#vision-language-model)

- [Visual Text Generation and Editing](#visual-text-generation-and-editing)

- [Font Style Transfer and Glyph Generation](#font-stye-transfer-and-glyph-generation)

  - [NN Approach](#nn-approach)

  - [Other Approach](#other-approach)

- [Structure Learning](#struture-learning)

- [Dataset](#dataset)

- [Other Application](#other-application)

## Large Language Model

### 2025

- **Font-Agent: Enhancing Font Understanding with Large Language Models**

- Yingxin Lai, Cuijie Xu, Haitian Shi, Guoqing Yang, Xiaoning Li, Zhiming Luo, Shaozi Li

- CVPR 2025

- [[CVF]](https://openaccess.thecvf.com/content/CVPR2025/html/Lai_Font-Agent_Enhancing_Font_Understanding_with_Large_Language_Models_CVPR_2025_paper.html)

## Vision Language Model

- **FontCLIP: A Semantic Typography Visual-Language Model for Multilingual Font Applications**

- Yuki Tatsukawa, I-Chao Shen, Anran Qi, Yuki Koyama, Takeo Igarashi, Ariel Shamir

- Eurographics 2024

- [[project page]](https://yukistavailable.github.io/fontclip.github.io/)[[paper]](https://jdily.github.io/resource/fontclip/fontCLIP_paper_open.pdf)[[arxiv]](https://arxiv.org/abs/2403.06453)[[code]](https://github.com/yukistavailable/FontCLIP)

## Visual Text Generation and Editing

### 2025

- **TextMaster: A Unified Framework for Realistic Text Editing via Glyph-Style Dual-Control**

- Zhenyu Yan, Jian Wang, Aoqiang Wang, Yuhan Li, Wenxiang Shang, Ran Lin

- ICCV 2025

- [[Paper]](https://arxiv.org/abs/2410.09879)

- **FonTS: Text Rendering with Typography and Style Controls**

- Wenda Shi, Yiren Song, Dengming Zhang, Jiaming Liu, Xingxing Zou

- ICCV 2025

- [[Paper]](https://arxiv.org/abs/2412.00136)[[Project Page]](https://wendashi.github.io/FonTS-Page/)[[Code]](https://github.com/ArtmeScienceLab/FonTS)[[Model]](https://huggingface.co/SSS/FonTS-SCA)[[Dataset]](https://huggingface.co/datasets/SSS/SC-artext)

- **FontAnimate: High Quality Few-shot Font Generation via Animating Font Transfer Process**

- Bin Fu, Zixuan Wang, Kainan Yan, Shitian Zhao, Qi Qin, Jie Wen, Junjun He, Peng Gao

- ICCV 2025

- **OracleFusion: Assisting the Decipherment of Oracle Bone Script with Structurally Constrained Semantic Typography**

- Caoshuo Li, Zengmao Ding, Xiaobin Hu, Bang Li, Donghao Luo, AndyPian Wu, Chaoyang Wang, Chengjie Wang, Taisong Jin, SevenShu, Yunsheng Wu, Yongge Liu, Rongrong Ji

- ICCV 2025

- [[Paper]](https://arxiv.org/abs/2506.21101)[[Code]](https://github.com/lcs0215/OracleFusion)

- **Dynamic Typography: Bringing Text to Life via Video Diffusion Prior**

- Zichen Liu*, Yihao Meng*, Hao Ouyang, Yue Yu, Bolin Zhao, Daniel Cohen-Or, Huamin Qu

- ICCV 2025

- [[Paper]](https://arxiv.org/abs/2404.11614)[[Project Page]](https://animate-your-word.github.io/demo/)[[Code]](https://github.com/zliucz/animate-your-word)

- **FLUX-Text: A Simple and Advanced Diffusion Transformer Baseline for Scene Text Editing**

- Rui Lan*, Yancheng Bai*, Xu Duan, Mingxing Li, Dongyang Jin, Ryan Xu, Lei Sun, Xiangxiang Chu

- [[Paper]](https://arxiv.org/abs/2505.03329)[[Project Page]](https://amap-ml.github.io/FLUX-text/)[[Model]](https://huggingface.co/GD-ML/FLUX-Text)

- **GlyphMastero: A Glyph Encoder for High-Fidelity Scene Text Editing**

- Tong Wang, Ting Liu, Xiaochao Qu, Chengjing Wu, Luoqi Liu, Xiaolin Hu

- CVPR 2025

- [[Paper]](https://arxiv.org/abs/2505.04915)

### 2024

- **Glyph-ByT5-v2: A Strong Aesthetic Baseline for Accurate Multilingual Visual Text Rendering**

- Zeyu Liu, Weicong Liang, Yiming Zhao, Bohan Chen, Lin Liang, Lijuan Wang, Ji Li, Yuhui Yuan

- [[Paper]](https://arxiv.org/abs/2406.10208)[[Project Page]](https://glyph-byt5-v2.github.io/)

- **Glyph-ByT5: A Customized Text Encoder for Accurate Visual Text Rendering**

- Zeyu Liu, Weicong Liang, Zhanhao Liang, Chong Luo, Ji Li, Gao Huang, Yuhui Yuan

- [[Paper]](https://arxiv.org/abs/2403.09622)[[Project Page]](https://glyph-byt5.github.io/)

- **GlyphDraw2: Automatic Generation of Complex Glyph Posters with Diffusion Models and Large Language Models**

- Jian Ma, Yonglin Deng, Chen Chen, Nanyang Du, Haonan Lu, Zhenyu Yang

- [[Paper]](https://arxiv.org/abs/2407.02252)[[Code]](https://github.com/OPPO-Mente-Lab/GlyphDraw2)

- **MATIC: Multilingual Accurate Textual Image Customization via Joint Generative Artificial Intelligence**

- Chiao-Hsin Wu, I-Wei Lai

- PacificVis 2025 Short Paper (Visnote) Track

- **AnyText: Multilingual Visual Text Generation And Editing**

- Yuxiang Tuo, Wangmeng Xiang, Jun-Yan He, Yifeng Geng, Xuansong Xie

- ICLR 2024

- [[paper]](https://arxiv.org/abs/2311.03054)[[code]](https://github.com/tyxsspa/AnyText?tab=readme-ov-file)[[demo]](https://modelscope.cn/studios/damo/studio_anytext/summary)

### 2023

- **GlyphControl: Glyph Conditional Control for Visual Text Generation**

- Yukang Yang, Dongnan Gui, Yuhui Yuan, Weicong Liang, Haisong Ding, Han Hu, Kai Chen

- NeurIPS 2023

- [[paper]](https://arxiv.org/pdf/2305.18259.pdf)[[code]](https://github.com/AIGText/GlyphControl-release)

- **DiffUTE: Universal Text Editing Diffusion Model**

- Haoxing Chen, Zhuoer Xu, Zhangxuan Gu*, Jun Lan, Xing Zheng, Yaohui Li, Changhua Meng, Huijia Zhu, Weiqiang Wang

- *Corresponding author

- NeurIPS 2023

- [[paper]](https://arxiv.org/abs/2305.10825)[[code]](https://github.com/chenhaoxing/DiffUTE/tree/main)

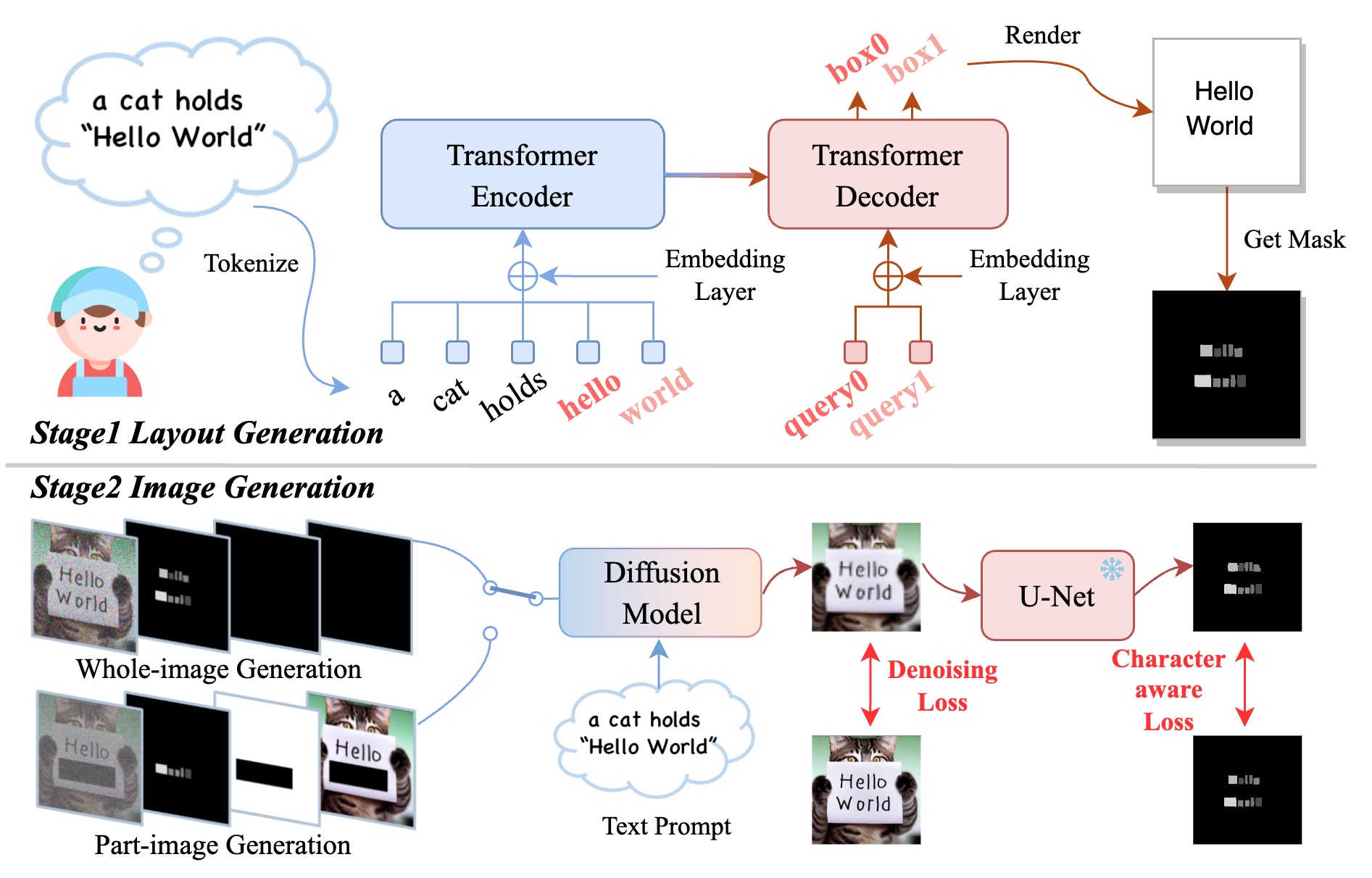

- **TextDiffuser: Diffusion Models as Text Painters**

- Jingye Chen*, Yupan Huang*, Tengchao Lv, Lei Cui, Qifeng Chen, Furu Wei

- *Equal Contribution

- NeurIPS 2023

- [[project page]](https://jingyechen.github.io/textdiffuser/)[[paper]](https://arxiv.org/abs/2305.10855)[[code]](https://github.com/microsoft/unilm/tree/master/textdiffuser)[[demo]](https://huggingface.co/spaces/JingyeChen22/TextDiffuser)[[colab]](https://colab.research.google.com/drive/115Qw0l5dhjlTtrbywMWRwhz9IxKE4_Dg?usp=sharing)

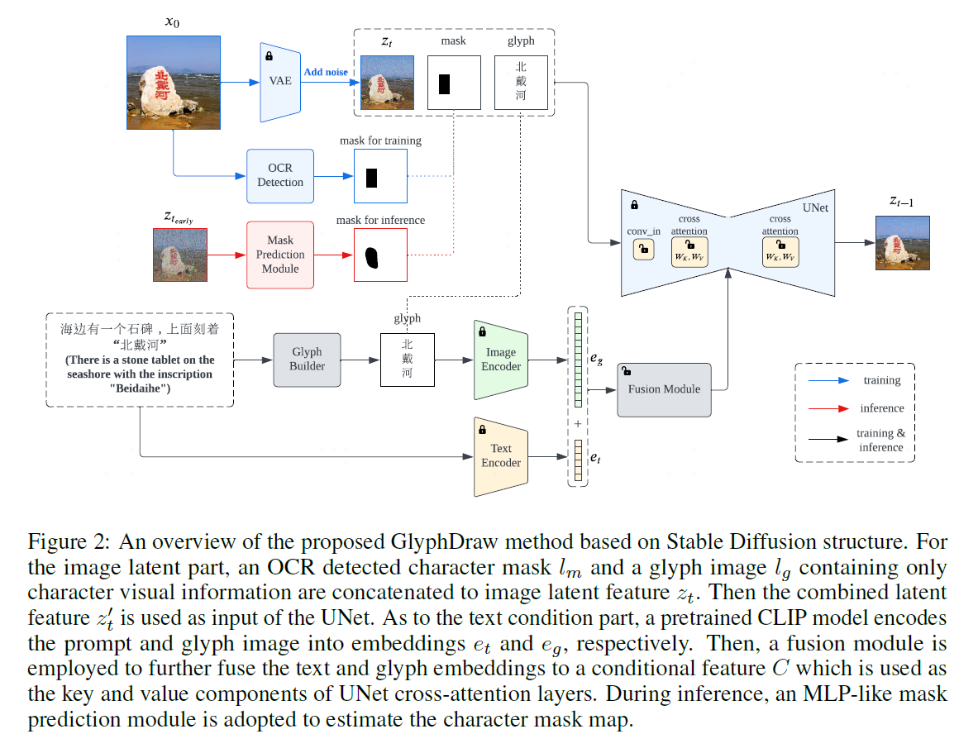

- **GlyphDraw: Learning to Draw Chinese Characters in Image Synthesis Models Coherently**

- Jian Ma, Mingjun Zhao, Chen Chen, Ruichen Wang, Di Niu, Haonan Lu, Xiaodong Lin

- arXiv 2023

- [[project page]](https://1073521013.github.io/glyph-draw.github.io/)[[paper]](https://arxiv.org/abs/2303.17870)[[code]](https://github.com/1073521013/GlyphDraw)

- **DeepFloyd IF**

- Inspired by "Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding" (aka Imagen), NeurIPS 2022

- [[code]](https://github.com/deep-floyd/IF?tab=readme-ov-file)

- **Character-Aware Models Improve Visual Text Rendering**

- arXiv

- Rosanne Liu*, Dan Garrette*, Chitwan Saharia, William Chan, Adam Roberts, Sharan Narang, Irina Blok, RJ Mical, Mohammad Norouzi, Noah Constant*

- *Equal contribution

- [[paper]](https://arxiv.org/pdf/2212.10562.pdf)

- **OCR-VQGAN: Taming Text-within-Image Generation**

- Juan A. Rodríguez, David Vázquez, Issam Laradji, Marco Pedersoli, Pau Rodríguez

- WACV 2023

- [[paper(CVF)]](https://openaccess.thecvf.com/content/WACV2023/papers/Rodriguez_OCR-VQGAN_Taming_Text-Within-Image_Generation_WACV_2023_paper.pdf)[[paper(arxiv)]](https://arxiv.org/abs/2210.11248)[[code]](https://github.com/joanrod/ocr-vqgan)

<a id="font-stye-transfer-and-glyph-generation"></a>

## Font Style Transfer and Glyph Generation

### NN Approach

#### 2025

- **Zero-Shot Styled Text Image Generation, but Make It Autoregressive**

- Vittorio Pippi*, Fabio Quattrini*, Silvia Cascianelli, Alessio Tonioni, Rita Cucchiara

- CVPR 2025

- [[arxiv]](https://arxiv.org/abs/2503.17074)[[CVF]](https://openaccess.thecvf.com/content/CVPR2025/html/Pippi_Zero-Shot_Styled_Text_Image_Generation_but_Make_It_Autoregressive_CVPR_2025_paper.html)

#### 2024

- **TypeDance: Creating Semantic Typographic Logos from Image through Personalized Generation**

- Shishi Xiao, Liangwei Wang, Xiaojuan Ma, Wei Zeng

- CHI 2024

- [[arxiv]](https://arxiv.org/abs/2401.11094) [[code]](https://github.com/SerendipitysX/TypeDance)

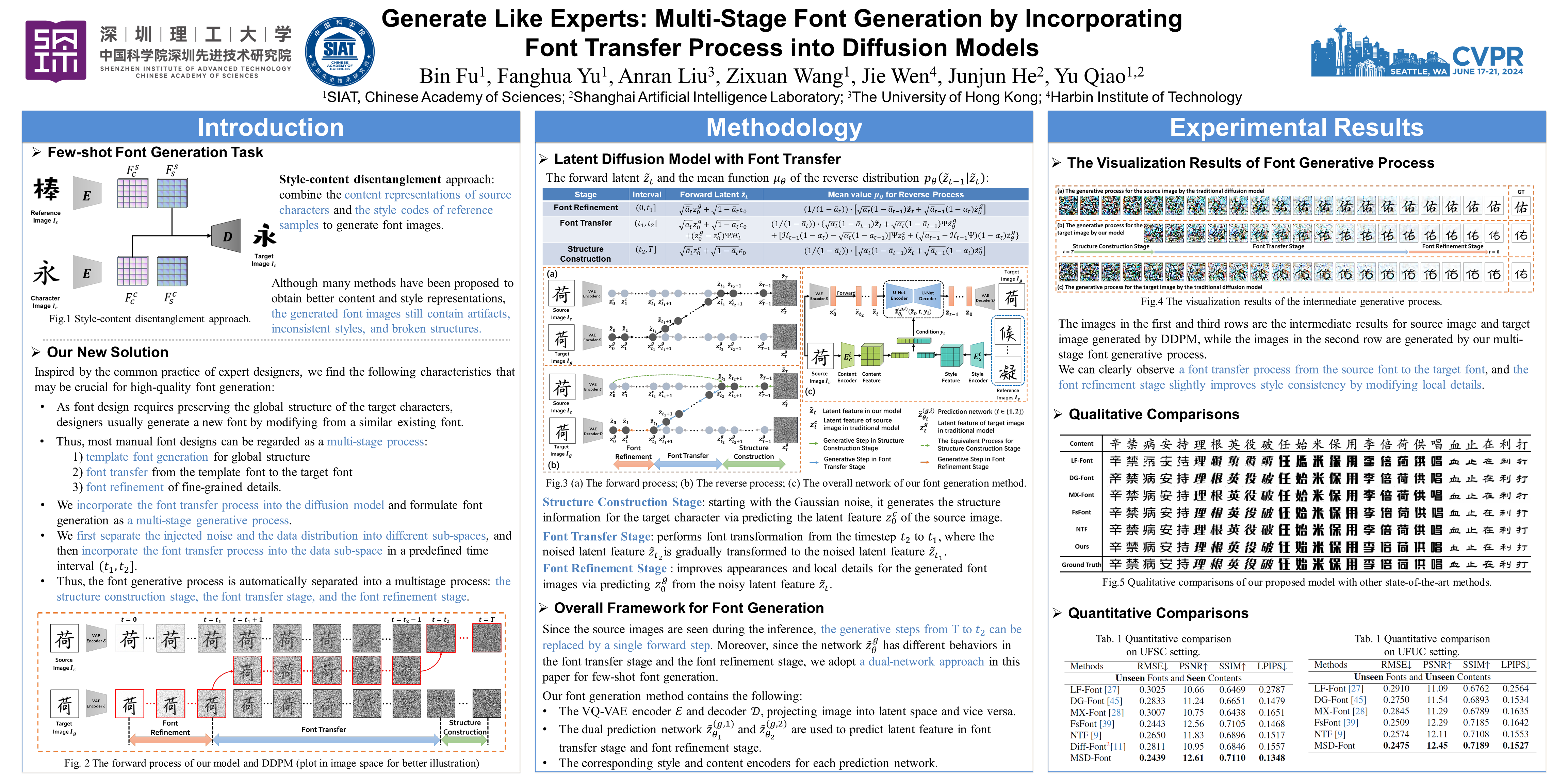

- **Generate Like Experts: Multi-Stage Font Generation by Incorporating Font Transfer Process into Diffusion Models**

- Bin Fu, Fanghua Yu, Anran Liu, Zixuan Wang, Jie Wen, Junjun He, Yu Qiao

- [CVPR 2024](https://cvpr.thecvf.com/virtual/2024/poster/30809)

- [[CVF]](https://openaccess.thecvf.com/content/CVPR2024/html/Fu_Generate_Like_Experts_Multi-Stage_Font_Generation_by_Incorporating_Font_Transfer_CVPR_2024_paper.html)[[code]](https://github.com/fubinfb/MSD-Font)

- **VecFusion: Vector Font Generation with Diffusion**

- Vikas Thamizharasan, Difan Liu, Shantanu Agarwal, Matthew Fisher, Michael Gharbi, Oliver Wang, Alec Jacobson, Evangelos Kalogerakis

- [CVPR 2024](https://cvpr.thecvf.com/virtual/2024/poster/30338)

- [[arxiv]](https://arxiv.org/abs/2312.10540) [[project page]](https://vikastmz.github.io/VecFusion/)

- **FontDiffuser: One-Shot Font Generation via Denoising Diffusion with Multi-Scale Content Aggregation and Style Contrastive Learning**

- Zhenhua Yang, Dezhi Peng, Yuxin Kong, Yuyi Zhang, Cong Yao, Lianwen Jin

- AAAI 2024

- [[project page]](https://yeungchenwa.github.io/fontdiffuser-homepage/)[[arxiv]](https://arxiv.org/abs/2312.12142)[[code]](https://github.com/yeungchenwa/FontDiffuser)[[demo]](https://huggingface.co/spaces/yeungchenwa/FontDiffuser-Gradio)

#### 2023

- **DS-Fusion: Artistic Typography via Discriminated and Stylized Diffusion**

- Maham Tanveer, Yizhi Wang, Ali Mahdavi-Amiri, Hao Zhang

- ICCV 2023

- [[project page]](https://ds-fusion.github.io/)[[paper]](https://arxiv.org/abs/2303.09604)[[code]](https://github.com/tmaham/DS-Fusion) [[demo]](https://huggingface.co/spaces/tmaham/DS-Fusion-Express)

- **Word-As-Image for Semantic Typography**

- Shir Iluz*, Yael Vinker*, Amir Hertz, Daniel Berio, Daniel Cohen-Or, Ariel Shamir

- *Denotes equal contribution

- SIGGRAPH 2023 - Honorable Mention Award

- [[project page]](https://wordasimage.github.io/Word-As-Image-Page/)[[paper]](https://arxiv.org/abs/2303.01818)[[code]](https://github.com/WordAsImage/Word-As-Image)[[demo]](https://huggingface.co/spaces/SemanticTypography/Word-As-Image)

- **DeepVecFont-v2: Exploiting Transformers to Synthesize Vector Fonts with Higher Quality**

- Yuqing Wang, Yizhi Wang, Longhui Yu, Yuesheng Zhu, Zhouhui Lian

- CVPR 2023

- [[paper(CVPR)]](https://openaccess.thecvf.com/content/CVPR2023/papers/Wang_DeepVecFont-v2_Exploiting_Transformers_To_Synthesize_Vector_Fonts_With_Higher_Quality_CVPR_2023_paper.pdf)[[arxiv]](https://arxiv.org/abs/2303.14585)

#### 2022

- **StrokeStyles: Stroke-based Segmentation and Stylization of Fonts**

- Daniel Berio, Frederic Fol Leymarie, Paul Asente, Jose Echevarria

- ACM Transactions on Graphics, Volume 41, Issue 3, Article No. 28, pp. 1–21

- [[paper]](https://dl.acm.org/doi/10.1145/3505246)

#### 2021

- **DeepVecFont: Synthesizing High-quality Vector Fonts via Dual-modality Learning**

- Yizhi Wang, Zhouhui Lian

- SIGGRAPH Asia 2021

- [[project page]](https://yizhiwang96.github.io/deepvecfont_homepage/)[[code]](https://github.com/yizhiwang96/deepvecfont)[[paper]](https://arxiv.org/abs/2110.06688)

- **DG-Font: Deformable Generative Networks for Unsupervised Font Generation**

- Yangchen Xie, Xinyuan Chen, Li Sun, Yue Lu

- [[paper(CVPR)]](https://openaccess.thecvf.com/content/CVPR2021/html/Xie_DG-Font_Deformable_Generative_Networks_for_Unsupervised_Font_Generation_CVPR_2021_paper.html)[[paper(arxiv)]](https://arxiv.org/abs/2104.03064)[[code]](https://github.com/ecnuycxie/DG-Font)

- CVPR 2021

- **Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Experts**

- Song Park, Sanghyuk Chun, Junbum Cha, Bado Lee, Hyunjung Shim

- [[paper]](https://arxiv.org/abs/2104.00887)[[code]](https://github.com/clovaai/mxfont)

- ICCV 2021

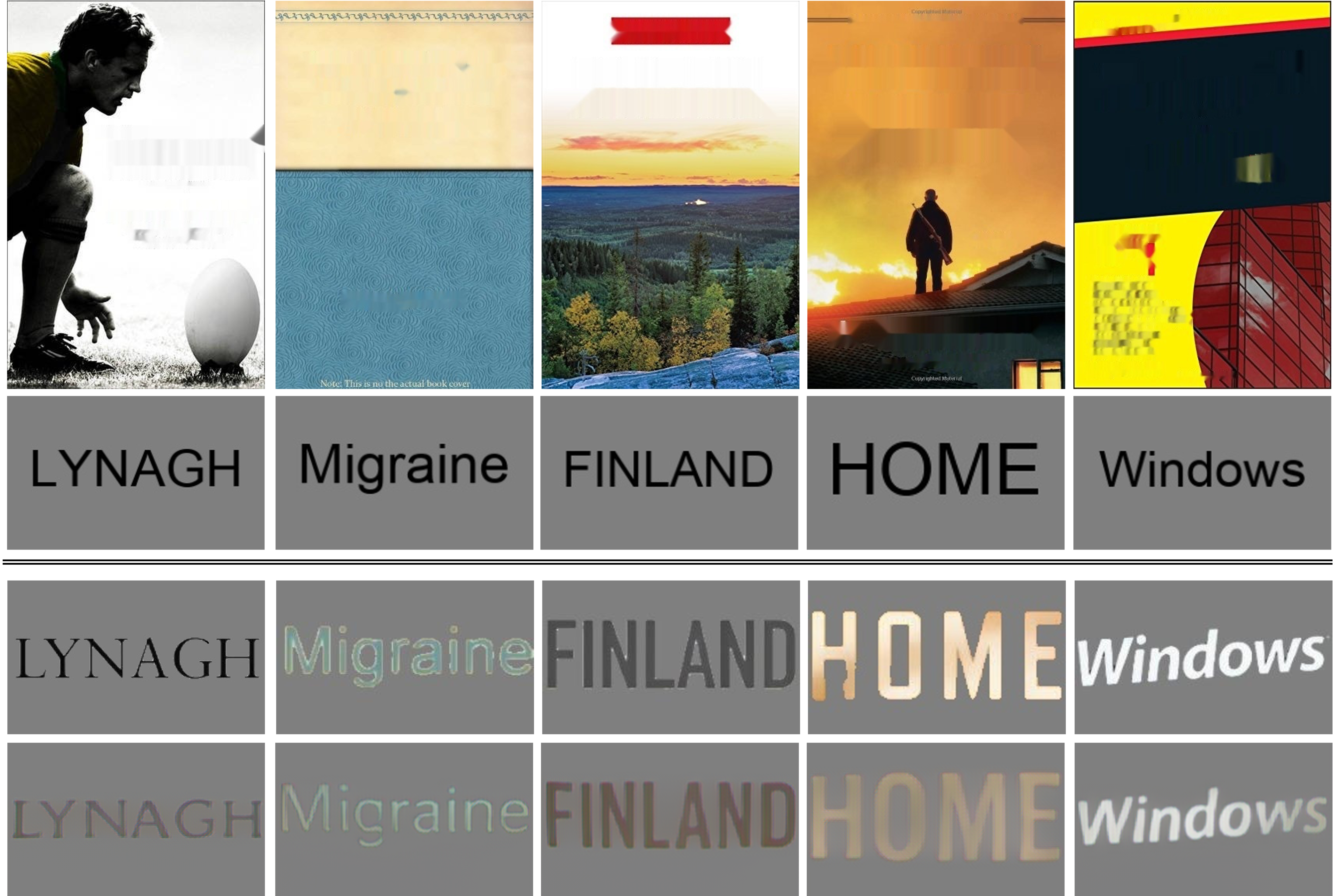

- **Font Style that Fits an Image -- Font Generation Based on Image Context**

- [[paper]](https://arxiv.org/abs/2105.08879)[[code]](https://github.com/Taylister/FontFits)

- Taiga Miyazono, Brian Kenji Iwana, Daichi Haraguchi, and Seiichi Uchida

- arXiv 2021

- **Few-shot Font Generation with Localized Style Representations and Factorization**

- Song Park*, Sanghyuk Chun*, Junbum Cha, Bado Lee, Hyunjung Shim

- *: equal contribution

- [[project page]](https://cvml.yonsei.ac.kr/projects/few-shot-font-generation) [[paper]](https://arxiv.org/abs/2009.11042)[[code]](https://github.com/clovaai/lffont)

- AAAI 2021

- **Handwritten Chinese Font Generation with Collaborative Stroke Refinement**

- [[paper]](https://arxiv.org/abs/1904.13268)[[WACV2021]](https://openaccess.thecvf.com/content/WACV2021/html/Wen_Handwritten_Chinese_Font_Generation_With_Collaborative_Stroke_Refinement_WACV_2021_paper.html)[[code(dead)]](https://github.com/s024/fontml-research)

- Chuan Wen, Jie Chang, Ya Zhang, Siheng Chen, Yanfeng Wang, Mei Han, Qi Tian

- WACV 2021

#### 2020

- **RD-GAN: Few/Zero-Shot Chinese Character Style Transfer via Radical Decomposition and Rendering**

- Yaoxiong Huang, Mengchao He, Lianwen Jin, Yongpan Wang

- [[paper(ECVA)]](https://www.ecva.net/papers/eccv_2020/papers_ECCV/html/4880_ECCV_2020_paper.php)

- ECCV 2020

- **Few-shot Compositional Font Generation with Dual Memory**

- Junbum Cha, Sanghyuk Chun, Gayoung Lee, Bado Lee, Seonghyeon Kim, Hwalsuk Lee

- [[paper]](https://arxiv.org/abs/2005.10510) [[code]](https://github.com/clovaai/dmfont)

- ECCV 2020

- **CalliGAN: Style and Structure-aware Chinese Calligraphy Character Generator**

- [[paper]](https://arxiv.org/abs/2005.12500)[[code]](https://github.com/JeanWU/CalliGAN)

- Shan-Jean Wu, Chih-Yuan Yang and Jane Yung-jen Hsu

- AI for Content Creation Workshop CVPR 2020.

#### 2019

- **A Learned Representation for Scalable Vector Graphics**

- [[paper]](https://arxiv.org/abs/1904.02632)

- Raphael Gontijo Lopes, David Ha, Douglas Eck, Jonathon Shlens

- ICCV 2019

- ICLR workshop 2019

- **Large-scale Tag-based Font Retrieval with Generative Feature Learning**

- [[paper]](https://arxiv.org/abs/1909.02072)[[page]](https://www.cs.rochester.edu/u/tchen45/font/font.html)

- Tianlang Chen, Zhaowen Wang, Ning Xu, Hailin Jin, Jiebo Luo

- ICCV 2019

- **DynTypo: Example-based Dynamic Text Effects Transfer**

- [[paper]](http://www.icst.pku.edu.cn/zlian/docs/2019-04/20190418093151169110.pdf)

- Yifang Men, Zhouhui Lian, Yingmin Tang, Jianguo Xiao

- CVPR 2019

- <iframe width="560" height="315" src="https://www.youtube.com/embed/FkFQ6bV1s-o" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

- **Typography with Decor: Intelligent Text Style Transfer**

- Wenjing Wang, Jiaying Liu, Shuai Yang, and Zongming Guo

- CVPR 2019

- [[project page]](https://daooshee.github.io/Typography2019/) [[paper]](https://openaccess.thecvf.com/content_CVPR_2019/papers/Wang_Typography_With_Decor_Intelligent_Text_Style_Transfer_CVPR_2019_paper.pdf) [[code]](https://github.com/daooshee/Typography-with-Decor)

- **DeepGlyph**

- [[website]](https://deepglyph.app/)

- <iframe width="560" height="315" src="https://www.youtube.com/embed/T70k-0qgccs" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

- **TET-GAN: Text Effects Transfer via Stylization and Destylization**

- [[paper]](https://www.aaai.org/ojs/index.php/AAAI/article/view/3919)

- Shuai Yang, Jiaying Liu, Wenjing Wang, Zongming Guo

- AAAI 2019

- **SCFont: Structure-Guided Chinese Font Generation via Deep Stacked Networks**

- [[paper]](https://aaai.org/ojs/index.php/AAAI/article/view/4294)

- Yue Jiang, Zhouhui Lian*, Yingmin Tang, Jianguo Xiao

- AAAI 2019

#### 2018

- **Coconditional Autoencoding Adversarial Networks for Chinese Font Feature Learning**

- [[paper]](https://arxiv.org/abs/1812.04451)

- arXiv 2018

- Zhizhan Zheng, Feiyun Zhang

- **Separating Style and Content for Generalized Style Transfer**

- [[paper]](http://openaccess.thecvf.com/content_cvpr_2018/html/Zhang_Separating_Style_and_CVPR_2018_paper.html)[[code]](https://github.com/zhyxun/Separating-Style-and-Content-for-Generalized-Style-Transfer)

- Yexun Zhang, Ya Zhang, Wenbin Cai

- CVPR 2018

- Networks

- results

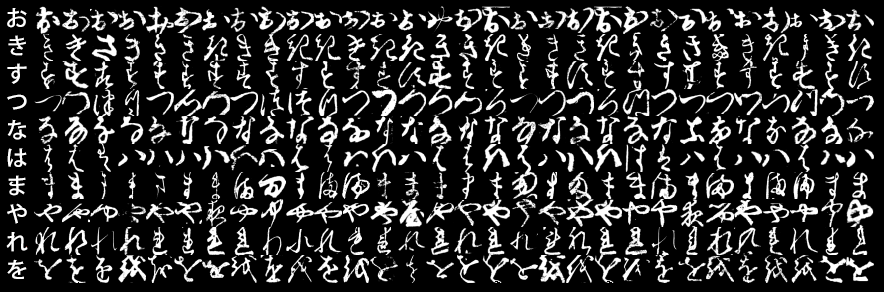

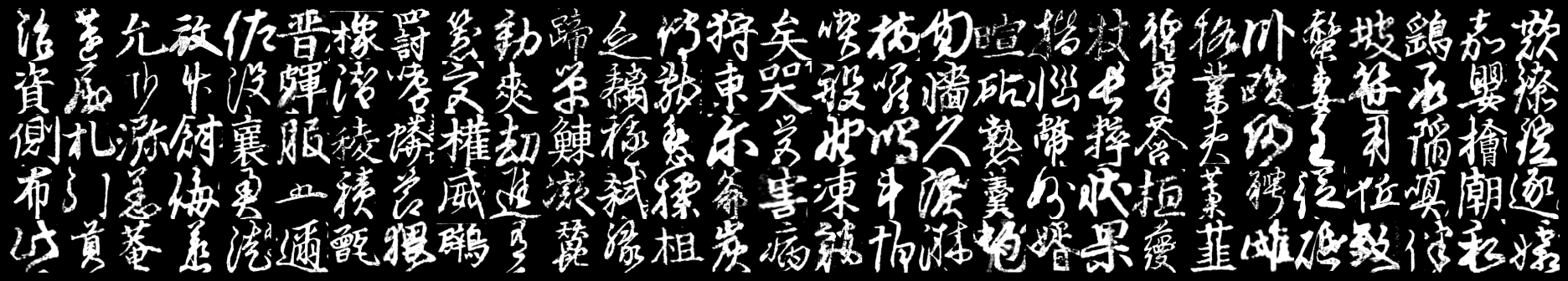

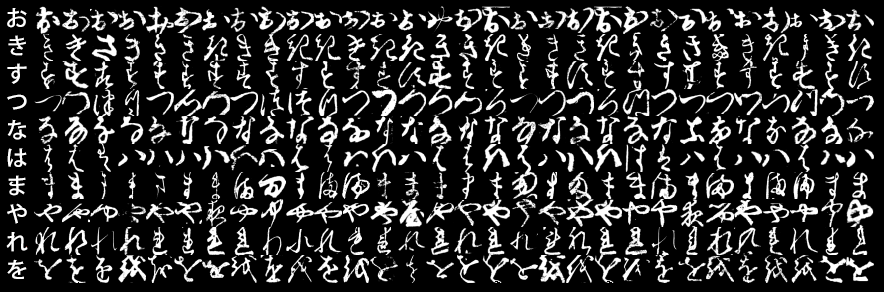

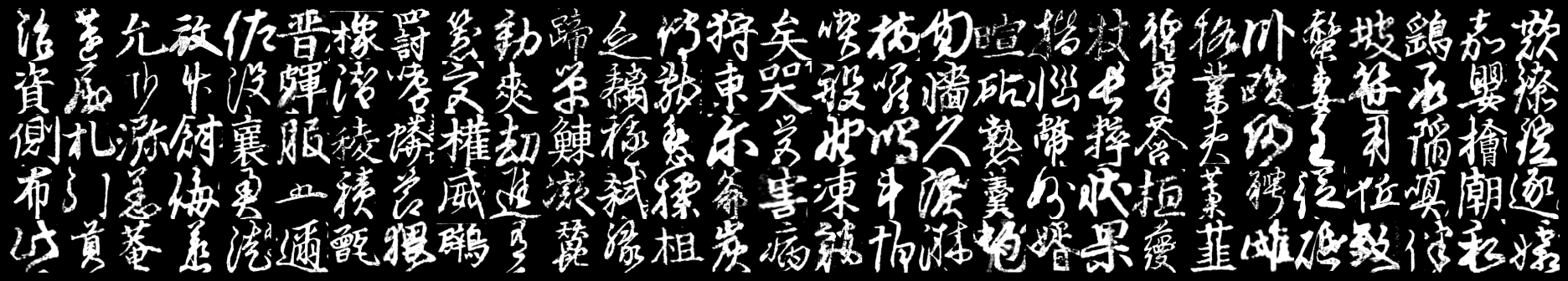

- **Deep Learning for Classical Japanese Literature**

- [[paper]](https://arxiv.org/abs/1812.01718)[[GitHub]](https://github.com/rois-codh/kmnist)

- Tarin Clanuwat, Mikel Bober-Irizar, Asanobu Kitamoto, Alex Lamb, Kazuaki Yamamoto, David Ha

- NeurIPS 2018

- Kuzushiji-MNIST, Kuzushiji-49 and Kuzushiji-Kanji

- Kuzushiji-MNIST

- Kuzushiji-Kanji

- **Multi-Content GAN for Few-Shot Font Style Transfer**

- [[code]](https://github.com/azadis/MC-GAN)[[paper]](https://arxiv.org/abs/1712.00516)[[blog]](https://bair.berkeley.edu/blog/2018/03/13/mcgan/)

- Samaneh Azadi, Matthew Fisher, Vladimir Kim, Zhaowen Wang, Eli Shechtman, Trevor Darrell

- CVPR 2018

- **Learning to Write Stylized Chinese Characters by Reading a Handful of Examples**

- [[paper]](https://www.ijcai.org/proceedings/2018/0128.pdf)

- Danyang Sun∗, Tongzheng Ren∗, Chongxuan Li, Hang Su†, Jun Zhu†

- IJCAI 2018

- SA-VAE

#### 2017

- **DCFont: An End-To-End Deep Chinese Font Generation System**

- [[paper]](http://www.icst.pku.edu.cn/zlian/docs/20181024110234919639.pdf)

- Juncheng Liu, Zhouhui Lian, Jianguo Xiao

- SIGGRAPH Asia 2017

- **zi2zi: Master Chinese Calligraphy with Conditional Adversarial Networks**

- [[blog]](https://kaonashi-tyc.github.io/2017/04/06/zi2zi.html) [[code(TensorFlow)]](https://github.com/kaonashi-tyc/zi2zi) [[code(pytorch)(xuan-li)]](https://github.com/xuan-li/zi2zi-pytorch) [[code(pytorch)( EuphoriaYan)]](https://github.com/EuphoriaYan/zi2zi-pytorch)

- Yuchen Tian

- 2017

#### 2016

- **Rewrite: Neural Style Transfer For Chinese Fonts**

- [[code]](https://github.com/kaonashi-tyc/Rewrite)

- Yuchen Tian

- 2016

- **Automatic generation of large-scale handwriting fonts via style learning**

- [[paper]](https://dl.acm.org/citation.cfm?id=3005371)

- Zhouhui Lian, Bo Zhao, and Jianguo Xiao

- SIGGRAPH Asia 2016

- **A Book from the Sky 天书: Exploring the Latent Space of Chinese Handwriting**

- [[blog]](http://genekogan.com/works/a-book-from-the-sky/)

- [Gene Kogan](http://genekogan.com/)

#### 1993

- **Letter Spirit: An Emergent Model of the Perception and Creation of Alphabetic Style**

- [[paper]](https://pdfs.semanticscholar.org/e03a/21a4dfaa34b3d43ab967a01d7d9f6f7adccf.pdf)

- Douglas Hofstadter, Gary McGraw

- 1993

### Other Approach

- **Automatic Generation of Typographic Font from a Small Font Subset**

- [[paper]](https://arxiv.org/abs/1701.05703)

- Tomo Miyazaki, Tatsunori Tsuchiya, Yoshihiro Sugaya, Shinichiro Omachi, Masakazu Iwamura, Seiichi Uchida, Koichi Kise

- 2017

- **Awesome Typography: Statistics-Based Text Effects Transfer**

- [[paper]](https://arxiv.org/pdf/1611.09026.pdf)[[code]](https://github.com/williamyang1991/Text-Effects-Transfer)

- Shuai Yang, Jiaying Liu, Zhouhui Lian and Zongming Guo

- CVPR 2017

- **FlexyFont: Learning Transferring Rules for Flexible Typeface Synthesis**

- [[paper]](https://doi.org/10.1111/cgf.12763)

- H. Q. Phan, H. Fu, and A. B. Chan

- 2015 Computer Graphics Forum

- **Automatic shape morphing for Chinese characters**

- [[paper]](https://dl.acm.org/citation.cfm?id=2407748)

- Zhouhui Lian and Jianguo Xiao

- SIGGRAPH Asia 2012

- **Easy generation of personal Chinese handwritten fonts**

- [[paper]](https://ieeexplore.ieee.org/document/6011892/)

- Baoyao Zhou, Weihong Wang, and Zhanghui Chen

- ICME 2011 (IEEE International Conference on Multimedia and Expo)

<a id="struture-learning"></a>

## Structure Learning

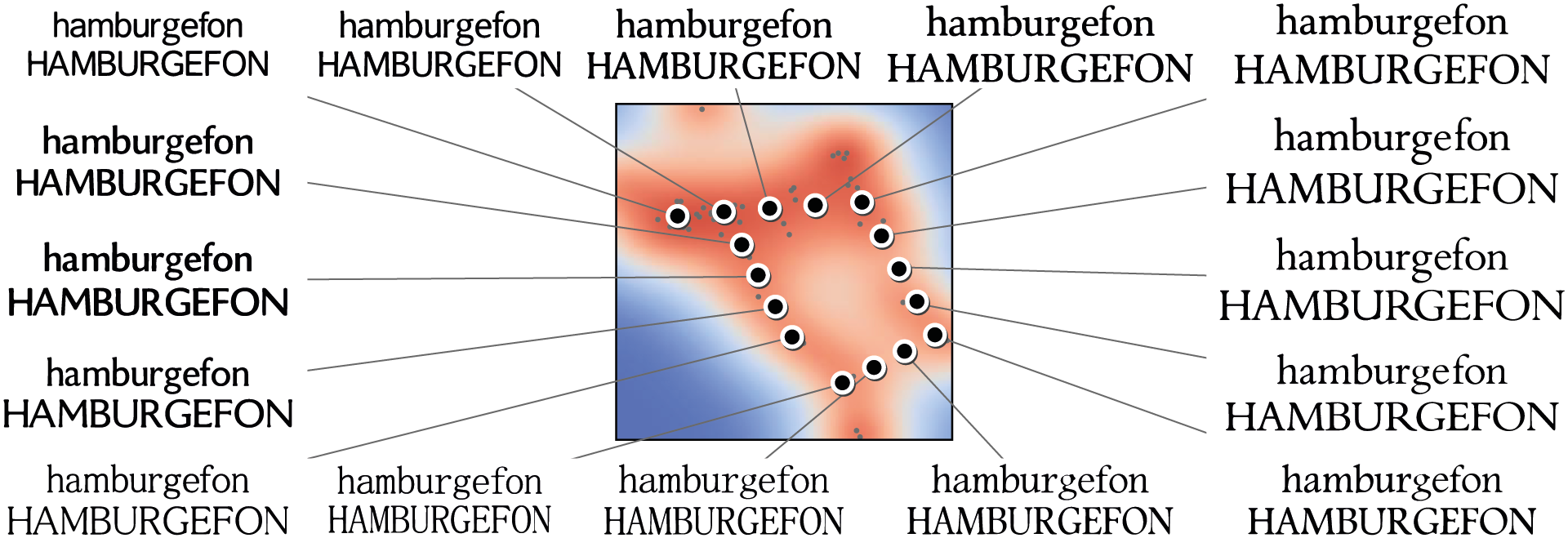

- **Deep Factorization of Style and Structure in Fonts**

- [[paper]](https://arxiv.org/abs/1910.00748)

- Nikita Srivatsan, Jonathan T. Barron, Dan Klein, Taylor Berg-Kirkpatrick

- EMNLP 2019

- **Analyzing 50k fonts using deep neural networks**

- [[blog]](https://erikbern.com/2016/01/21/analyzing-50k-fonts-using-deep-neural-networks.html)

- [Erik Bernhardsson](https://erikbern.com/about.html)

- 2016

- **Learning a Manifold of Fonts**

- [[paper]](http://vecg.cs.ucl.ac.uk/Projects/projects_fonts/papers/siggraph14_learning_fonts.pdf)[[project(demo)]](http://vecg.cs.ucl.ac.uk/Projects/projects_fonts/projects_fonts.html)

- Neill D.F. Campbell and Jan Kautz

- SIGGRAPH 2014

## Dataset

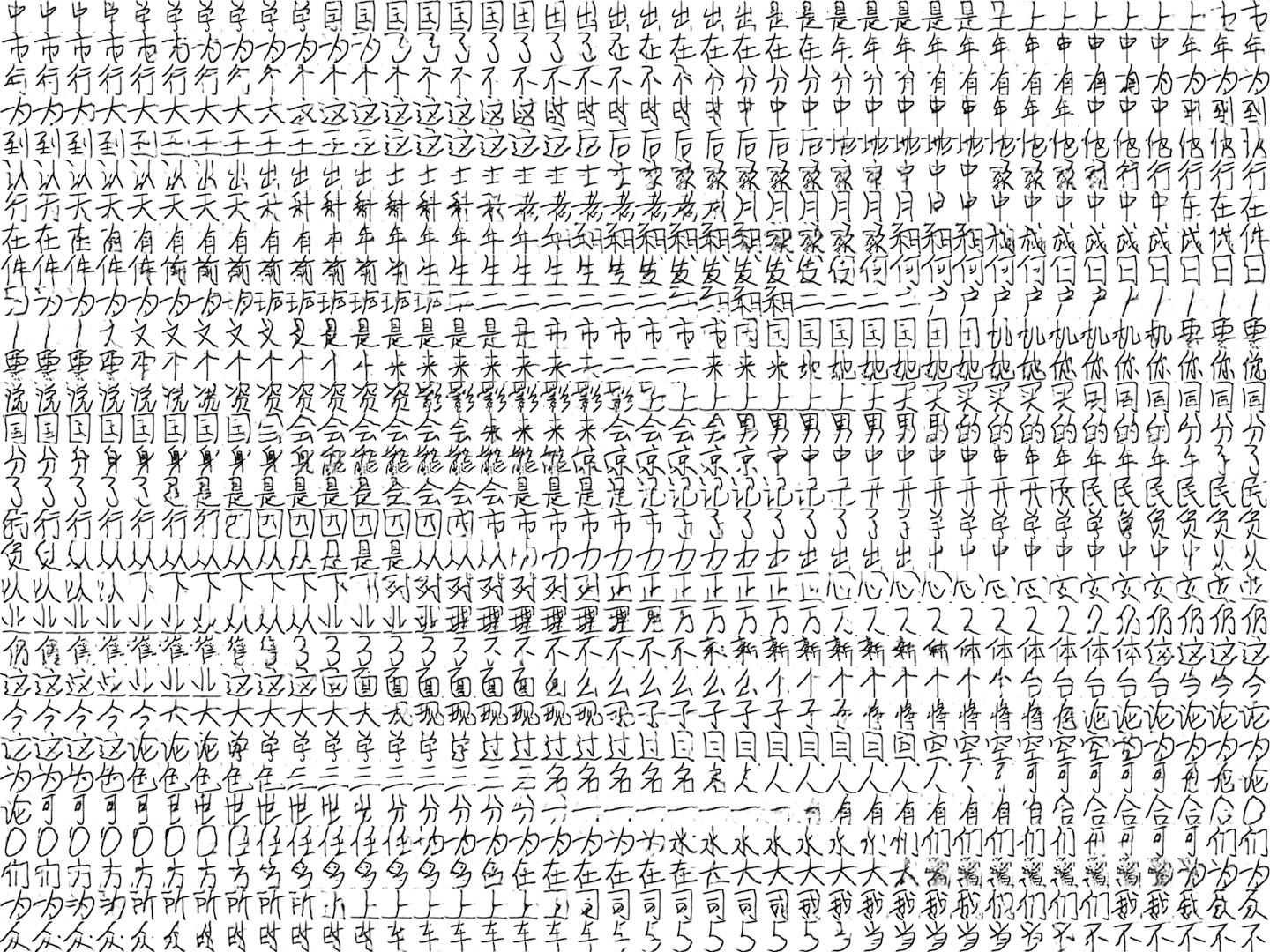

- **Chinese Handwriting Recognition Competition 2013**

- [[paper]](http://blog.sciencenet.cn/home.php?mod=attachment&id=48833)[[download]](http://www.nlpr.ia.ac.cn/databases/handwriting/Download.html)

- **Deep Learning for Classical Japanese Literature**

- [[paper]](https://arxiv.org/abs/1812.01718)[[GitHub]](https://github.com/rois-codh/kmnist)

- Tarin Clanuwat, Mikel Bober-Irizar, Asanobu Kitamoto, Alex Lamb, Kazuaki Yamamoto, David Ha

- NeurIPS 2018

- Kuzushiji-MNIST, Kuzushiji-49 and Kuzushiji-Kanji

- Kuzushiji-MNIST

- Kuzushiji-Kanji

## Other Application

- **FontCode: Embedding Information in Text Documents Using Glyph Perturbation**

- [[paper]](http://www.cs.columbia.edu/cg/fontcode/fontcode.pdf)[[project page]](http://www.cs.columbia.edu/cg/fontcode/)

- Chang Xiao, Cheng Zhang, Changxi Zheng

- SIGGRAPH 2018

- <iframe width="560" height="315" src="https://www.youtube.com/embed/dejrBf9jW24" frameborder="0" allow="autoplay; encrypted-media" allowfullscreen></iframe>
