# [kubernetes] Kubernetes Study Notes (Part 3)

###### tags `kubernetes`

#### 9. Cluster monitoring with k8s + Prometheus + Grafana

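- Prometheus components and architecture

  - Prometheus Server: scrapes metrics, stores them as time series, and exposes a query interface
  - Client Library: client-side instrumentation libraries
  - Push Gateway: short-term storage for metrics, mainly used by short-lived jobs
  - Exporters: collect metrics from existing third-party services and expose them as metrics endpoints
  - Alertmanager: alerting
  - Web UI: a simple web console

- Monitoring exporters

  - Node exporter -> monitors Linux hosts
  - MySQL server exporter -> monitors MySQL
  - cAdvisor -> monitors Docker
  - JMX exporter -> monitors Java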

```shell
# Official list of exporters:
# https://prometheus.io/docs/instrumenting/exporters/
# Grafana dashboard templates:
# https://grafana.com/grafana/dashboards/

# Download and unpack the YAML manifests
wget http://oss.linuxtxc.com/k8s-prometheus-grafana.zip
unzip k8s-prometheus-grafana.zip
cd k8s-prometheus-grafana/

# Replace the StorageClass name with your own
sed -i 's/managed-nfs-storage/nfs-storageclass/g' ./prometheus/*.yaml
sed -i 's/managed-nfs-storage/nfs-storageclass/g' ./grafana/*.yaml
sed -i 's/managed-nfs-storage/nfs-storageclass/g' ./*.yaml

# Create the namespace
kubectl create ns ops

# Create the resources
kubectl apply -f prometheus/
kubectl apply -f grafana/
kubectl apply -f kube-state-metrics.yaml

# Alternative: run Prometheus and Grafana with Docker
# Prometheus
#docker run -d --name=prometheus -p 9090:9090 prom/prometheus
# Grafana
#docker run -d --name=grafana -p 3000:3000 grafana/grafana
```

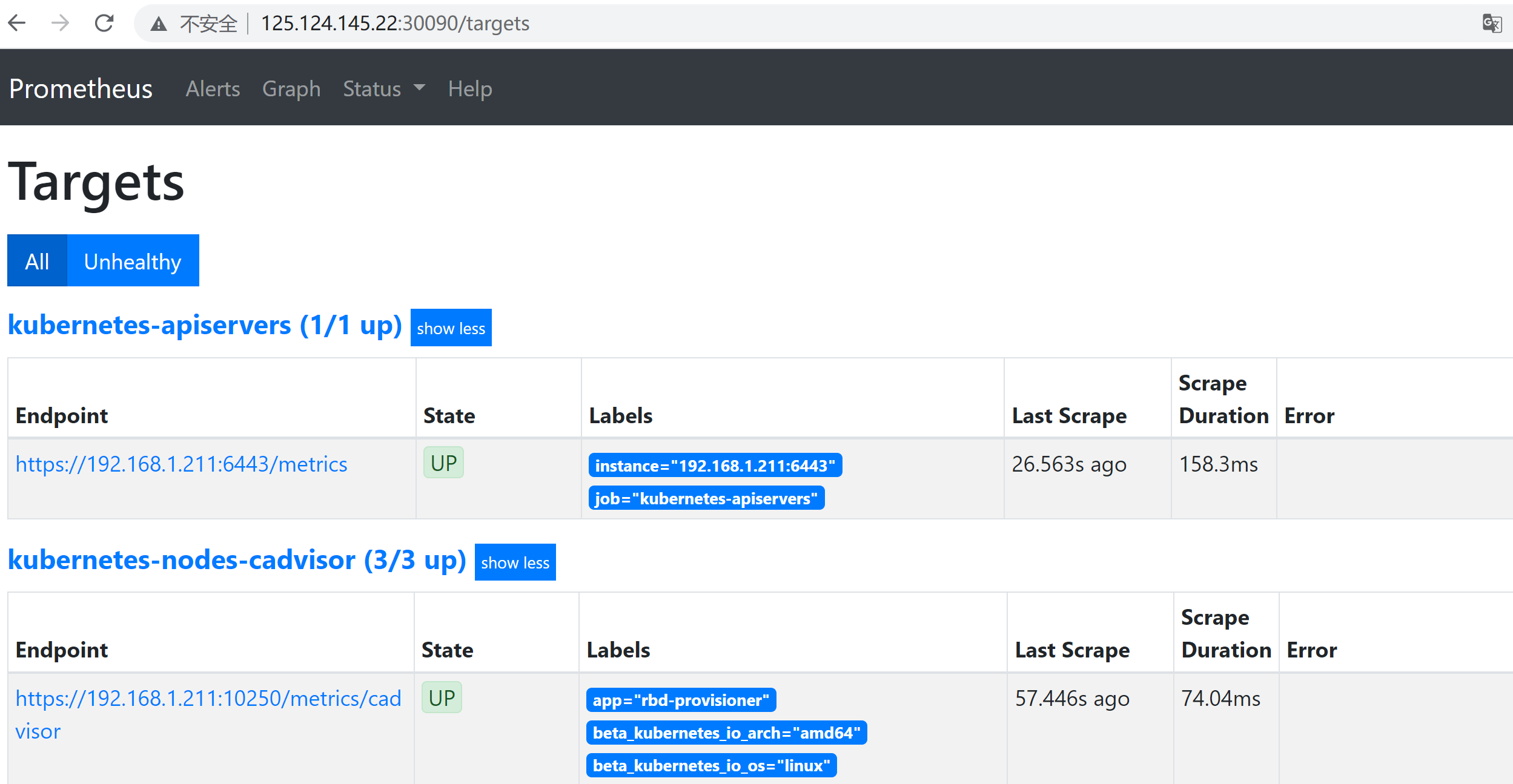

- Open Prometheus (http://125.124.145.22:30090/targets)

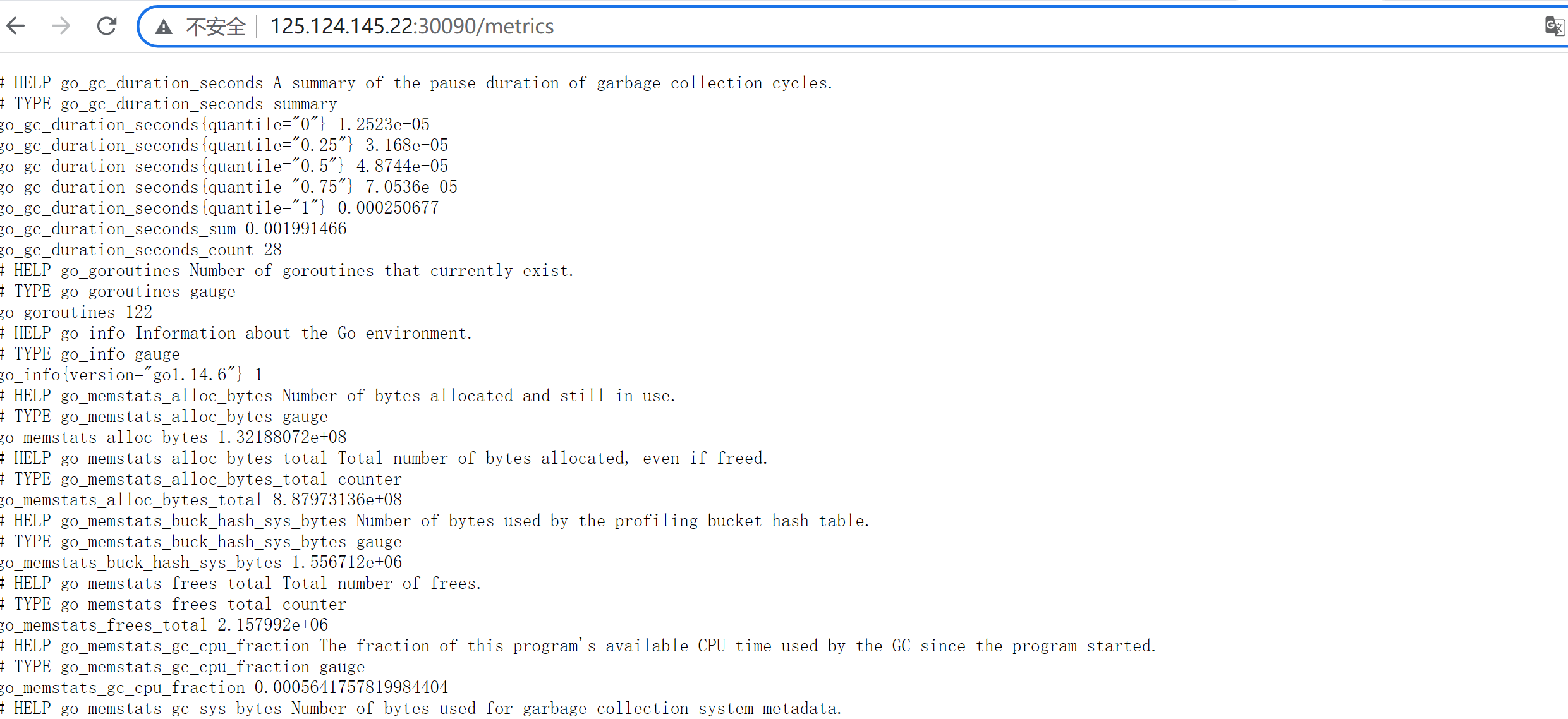

- Open the node-exporter metrics (http://125.124.145.22:30090/metrics)

- Open Grafana (http://125.124.145.22:30030/login); the default username and password are both admin

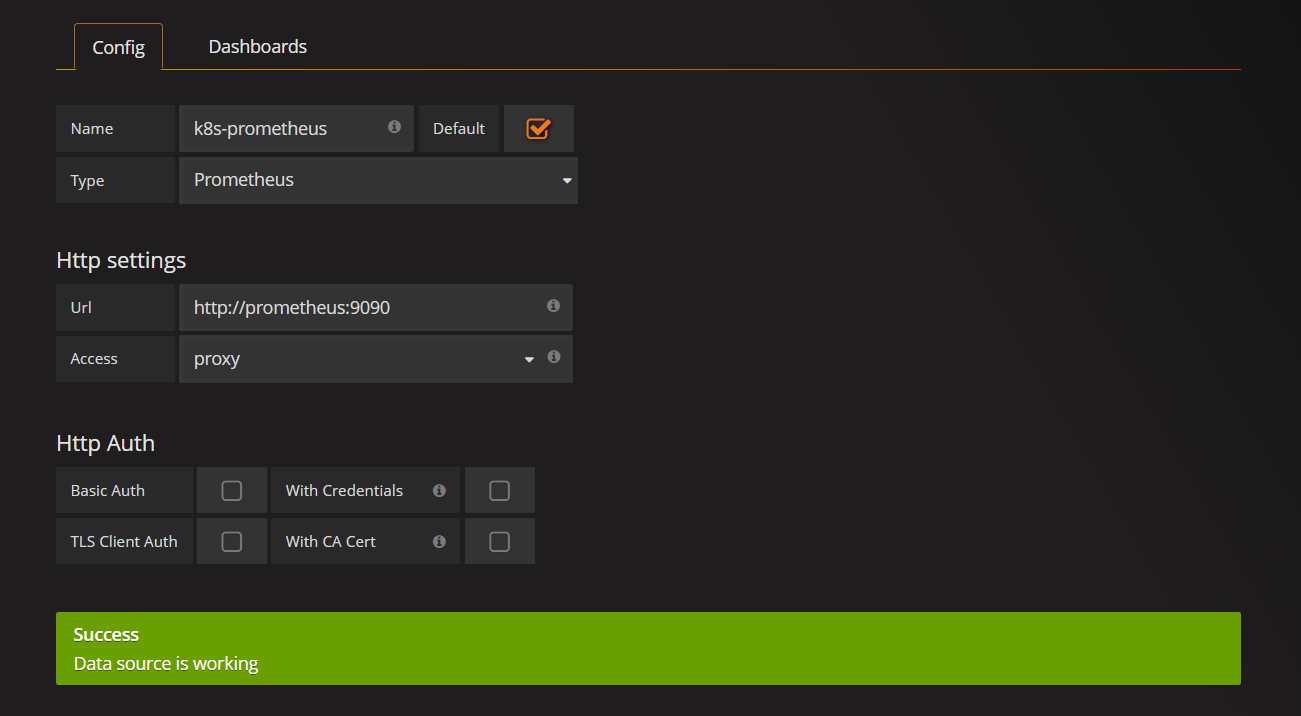

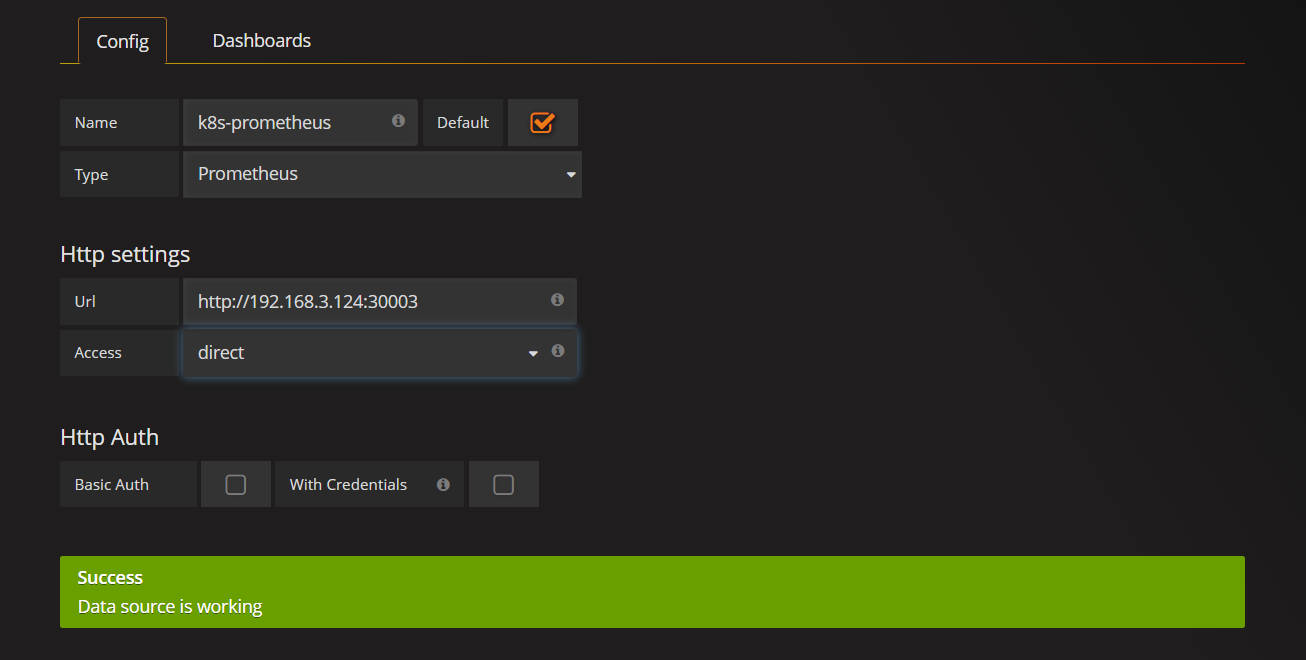

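- Add a data source. The setup below uses proxy mode (recommended), which is only reachable from inside the k8s cluster

- Direct access mode can also be used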

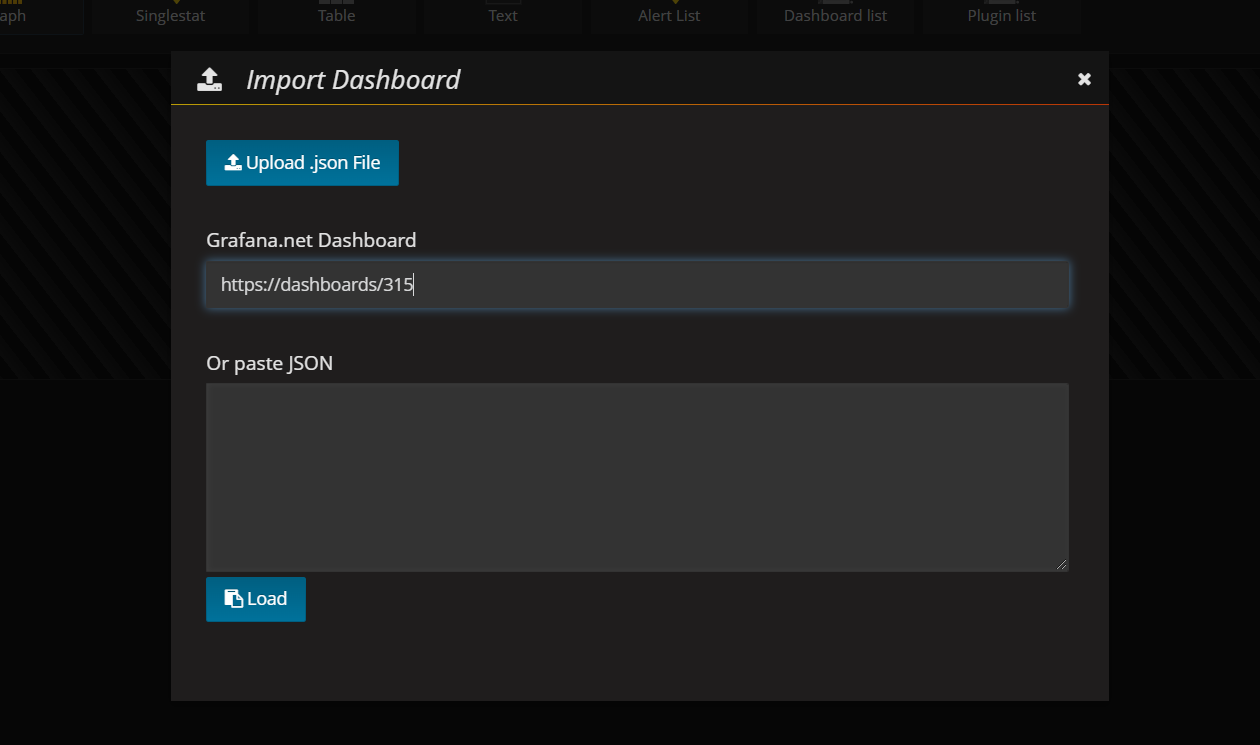

- Import a dashboard: Dashboards -> Import. You can import online by entering a template ID such as 315 (9276 works for node_exporter), or import a downloaded JSON template file locally

```shell

ls k8s-prometheus-grafana/dashboard

---

jnaK8S工作节点监控-2021.json jnaK8S集群资源监控-2021.json jnaK8S资源对象状态监控-2021.json

```

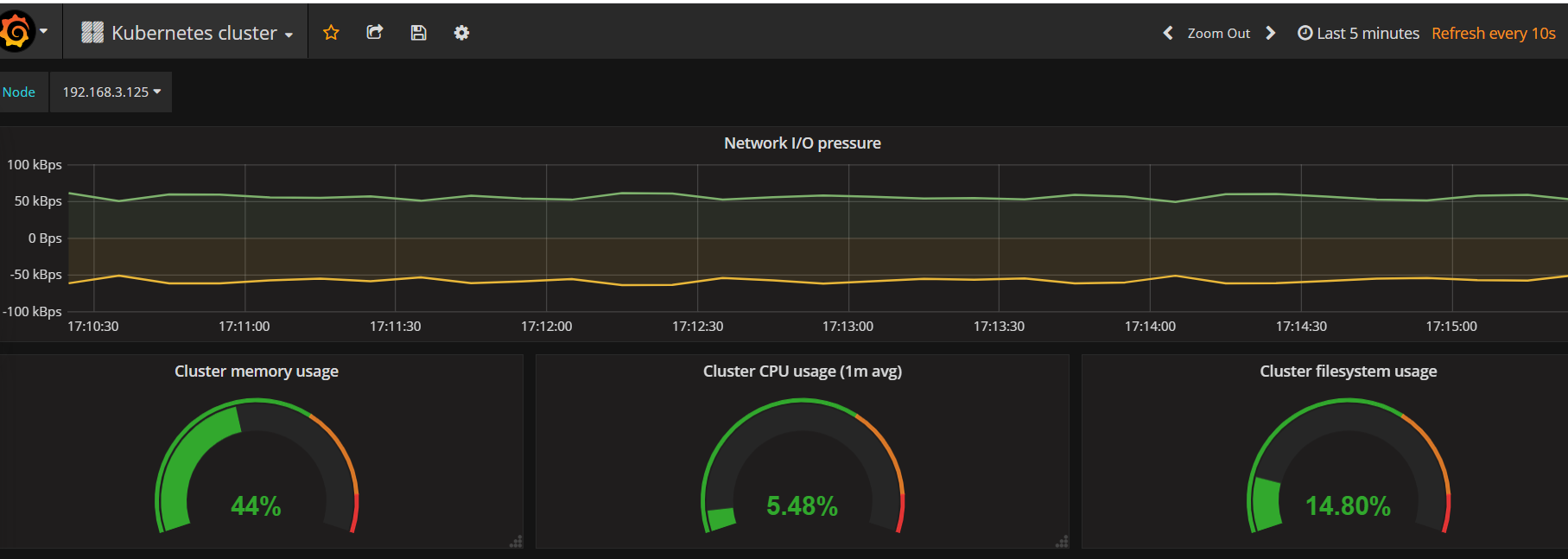

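- Check the result

- Basic Prometheus usage: querying data

  - Supports conditional queries and operators, and ships with many built-in functions for querying monitoring data along different dimensions

- Data model

  - Prometheus stores all data as time series;
  - Values with the same metric name and the same set of labels belong to the same time series;
  - Every time series is uniquely identified by its metric name and a set of key-value pairs (called labels);

- Metric format

  - `<metric name>{<label name>=<label value>, ...}`

- Monitoring approach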

| What to monitor | Implementation | Examples |
| ----------- | ------------------ | -------------------------- |
| Pod performance | cAdvisor | CPU, memory, network, etc. |
| Node performance | node-exporter | CPU, memory, network, etc. |
| K8s resource objects | kube-state-metrics | Pod, Deployment, Service, etc. |

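- Built-in kubelet metrics endpoint: https://127.0.0.1:10250/metrics

- Built-in kubelet cAdvisor metrics endpoint: https://127.0.0.1:10250/metrics/cadvisor

- Service discovery based on k8s

  - Not every Pod is monitored; a Pod that should be scraped has to declare this to Prometheus with an annotation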

```yaml
metadata:
  annotations:
    prometheus.io/scrape: "true"
```
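The same annotation can also be added to a Pod that is already running. The helper below is only a sketch: the function name `mark_for_scrape` and the `KUBECTL` override are made up for illustration, and it assumes the Prometheus scrape configuration in this bundle honours `prometheus.io/scrape` as described above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: opt a running Pod in to Prometheus scraping by adding
# the prometheus.io/scrape annotation.
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the command.
KUBECTL=${KUBECTL:-kubectl}

mark_for_scrape() {
  local namespace=$1 pod=$2
  # --overwrite lets the call succeed when the annotation already exists.
  "$KUBECTL" annotate pod "$pod" -n "$namespace" \
    prometheus.io/scrape=true --overwrite
}
```

For example, `mark_for_scrape default web-0`. For Pods managed by a Deployment, the annotation belongs in the Pod template instead, otherwise it is lost when the Pod is recreated.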

- Prometheus alerting

```
cd k8s-prometheus-grafana/dashboard
vim alertmanager-configmap.yaml
```

- The configuration file looks like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-config
  namespace: ops
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 5m
      smtp_smarthost: 'smtp.163.com:25'        # SMTP server of the mail provider
      smtp_from: 'linuxtxc@163.com'            # address that sends the alerts
      smtp_auth_username: 'linuxtxc@163.com'
      smtp_auth_password: 'QCVUMVDBEIZTBBQW'   # mailbox authorization code
    route:
      group_interval: 1m
      group_wait: 10s
      receiver: default-receiver
      repeat_interval: 1m
    receivers:
    - name: default-receiver
      email_configs:
      - to: "413848871@qq.com"                 # recipient address
```

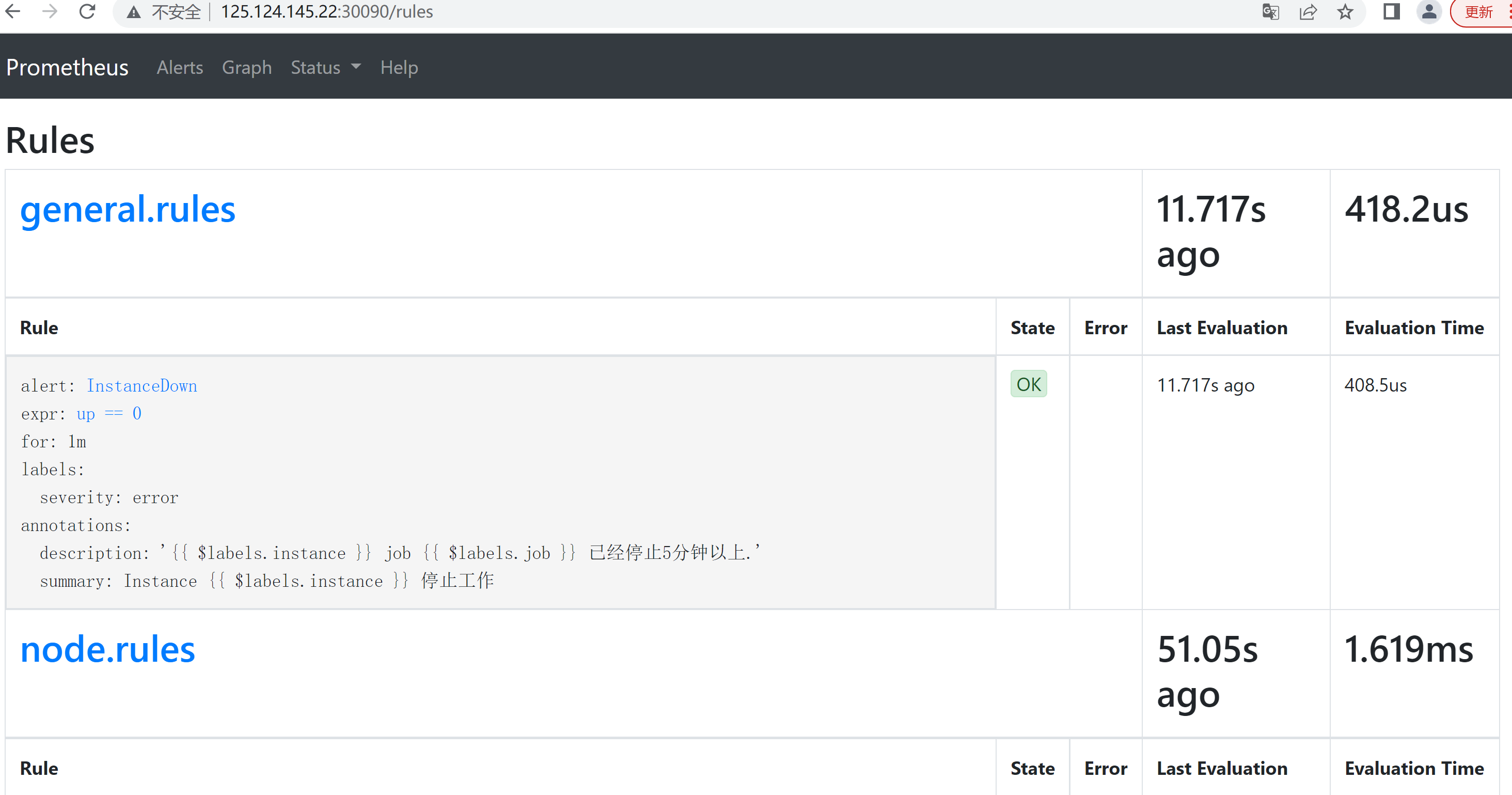

- Alert rules

```
kubectl apply -f k8s-prometheus-grafana/prometheus/prometheus-rules.yaml

# Configure DingTalk alerts
# - Create a DingTalk group -> Group Settings -> Smart Group Assistant -> Add Robot -> Custom -> Add
# - Copy the Webhook URL into dingding-webhook-deployment.yaml, then apply that file
kubectl apply -f alertmanager-dingding-configmap.yaml
```
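Before wiring Alertmanager to DingTalk it can help to send a test message to the robot by hand. The sketch below assumes the custom robot accepts the usual text payload (`msgtype: text`); the function names and the `CURL` override are illustrative, and the webhook URL is whatever the robot settings page shows. If the robot has a keyword or signature security setting, the message must satisfy it.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for testing a DingTalk robot webhook by hand.
CURL=${CURL:-curl}

# Build the JSON body for a single-line text message.
dingtalk_payload() {
  local msg=$1
  msg=${msg//\\/\\\\}   # escape backslashes first
  msg=${msg//\"/\\\"}   # then escape double quotes
  printf '{"msgtype":"text","text":{"content":"%s"}}\n' "$msg"
}

# POST the message to the webhook URL copied from the robot settings.
send_dingtalk() {
  local webhook=$1 msg=$2
  "$CURL" -sS -X POST -H 'Content-Type: application/json' \
    -d "$(dingtalk_payload "$msg")" "$webhook"
}
```

Usage: `send_dingtalk "<webhook URL>" "alert test from k8s"`. Only quotes and backslashes are escaped here, so keep the message on one line.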

----

#### 10. Deploying k8s cluster management platforms

##### 10.1. Installing Kuboard

```shell
# Download
#wget http://oss.linuxtxc.com/deploy/yaml/kuboard-v3.yaml
wget https://addons.kuboard.cn/kuboard/kuboard-v3.yaml
kubectl apply -f kuboard-v3.yaml
```

Accessing Kuboard

- Open `http://172.16.8.124:30080` in a browser

- Enter the initial username and password and log in

  - Username: `admin`
  - Password: `Kuboard123`

##### 10.2. Installing KubeSphere

```shell

#wget https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

#wget https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml

wget http://oss.linuxtxc.com/deploy/yaml/kubesphere/kubesphere-installer.yaml

wget http://oss.linuxtxc.com/deploy/yaml/kubesphere/cluster-configuration.yaml

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

# Follow the installation progress

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

```

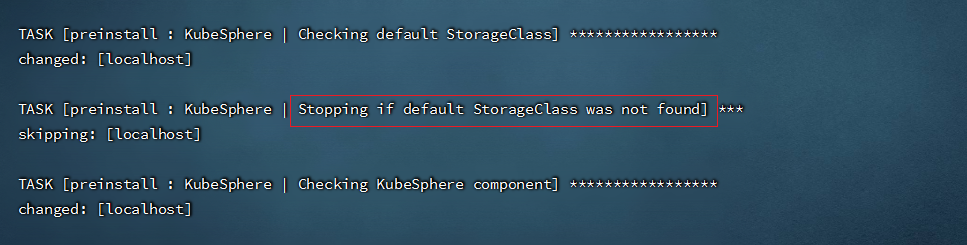

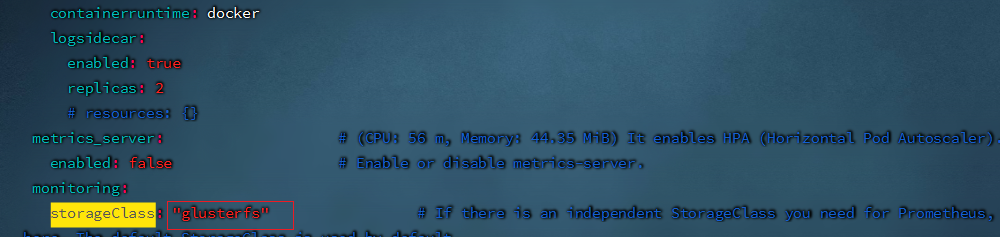

- Fix:

```shell
# storageClass is empty by default in the YAML file and must be set in two places; my StorageClass is "glusterfs"
vim cluster-configuration.yaml
```

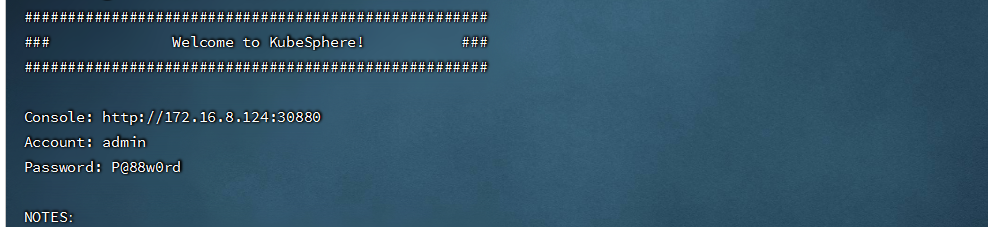

```shell

# Check the installation again (a success message like the one below appears when it finishes)

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

```

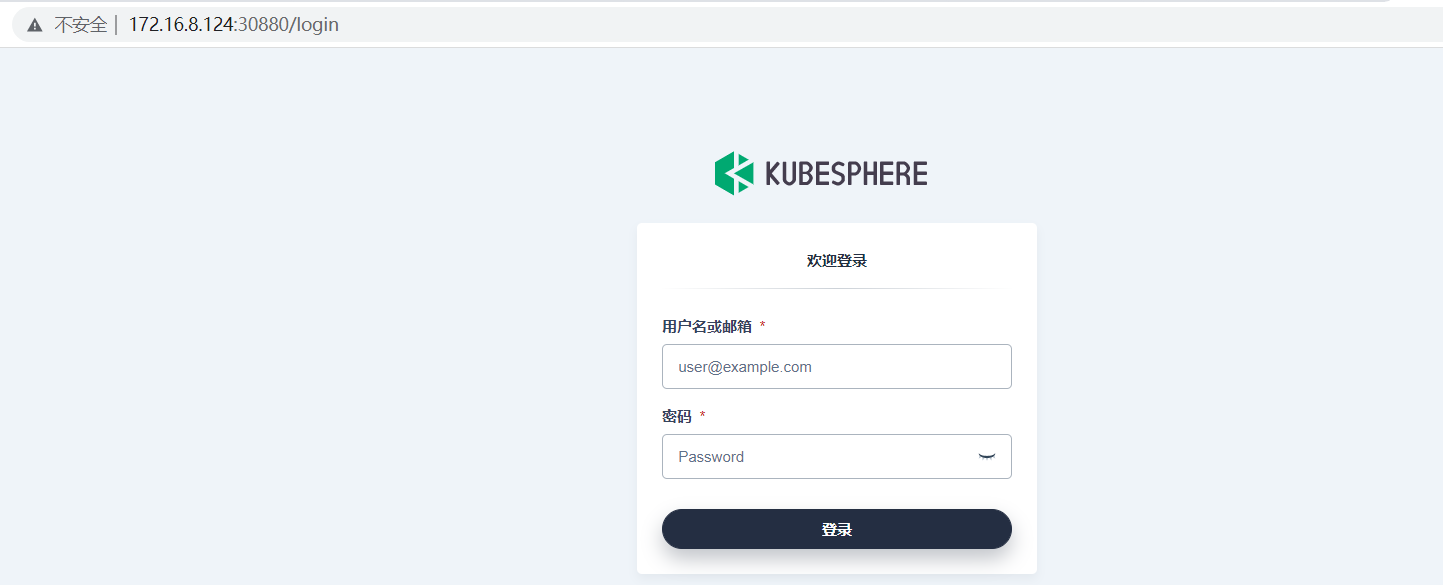

- Open the console: http://172.16.8.124:30880/

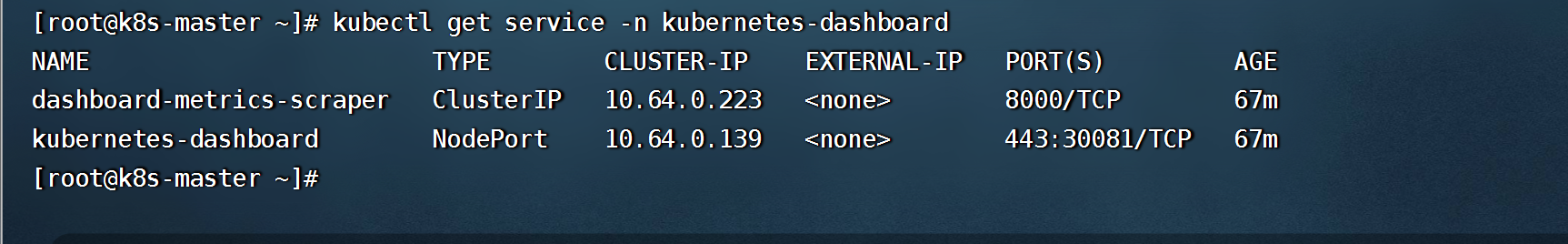

##### 10.3. Deploying the Kubernetes Dashboard

```shell
wget http://oss.linuxtxc.com/deploy/yaml/kubernetes-dashboard.yaml
kubectl apply -f kubernetes-dashboard.yaml
# Look up the NodePort used for access
kubectl get service -n kubernetes-dashboard
```

- https://nodeIP:30081

```shell
# Create the service account
kubectl create serviceaccount dashboard-admin -n kube-system
# Grant it permissions
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
# Retrieve the token
kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
```
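The `describe secrets` approach relies on the token Secret that older clusters create automatically for each ServiceAccount. From Kubernetes 1.24 on that Secret is no longer generated, and a short-lived token can be requested with `kubectl create token` instead. A minimal sketch, with the helper name `dashboard_token` and the `KUBECTL` override being illustrative:

```shell
#!/usr/bin/env bash
# Hypothetical helper for clusters running Kubernetes 1.24 or newer:
# request a token for the dashboard-admin ServiceAccount created above.
KUBECTL=${KUBECTL:-kubectl}

dashboard_token() {
  local sa=${1:-dashboard-admin} ns=${2:-kube-system}
  "$KUBECTL" create token "$sa" -n "$ns"
}
```

Calling `dashboard_token` prints a token that can be pasted into the Dashboard login page. On the 1.23 cluster used in these notes the original command still applies.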

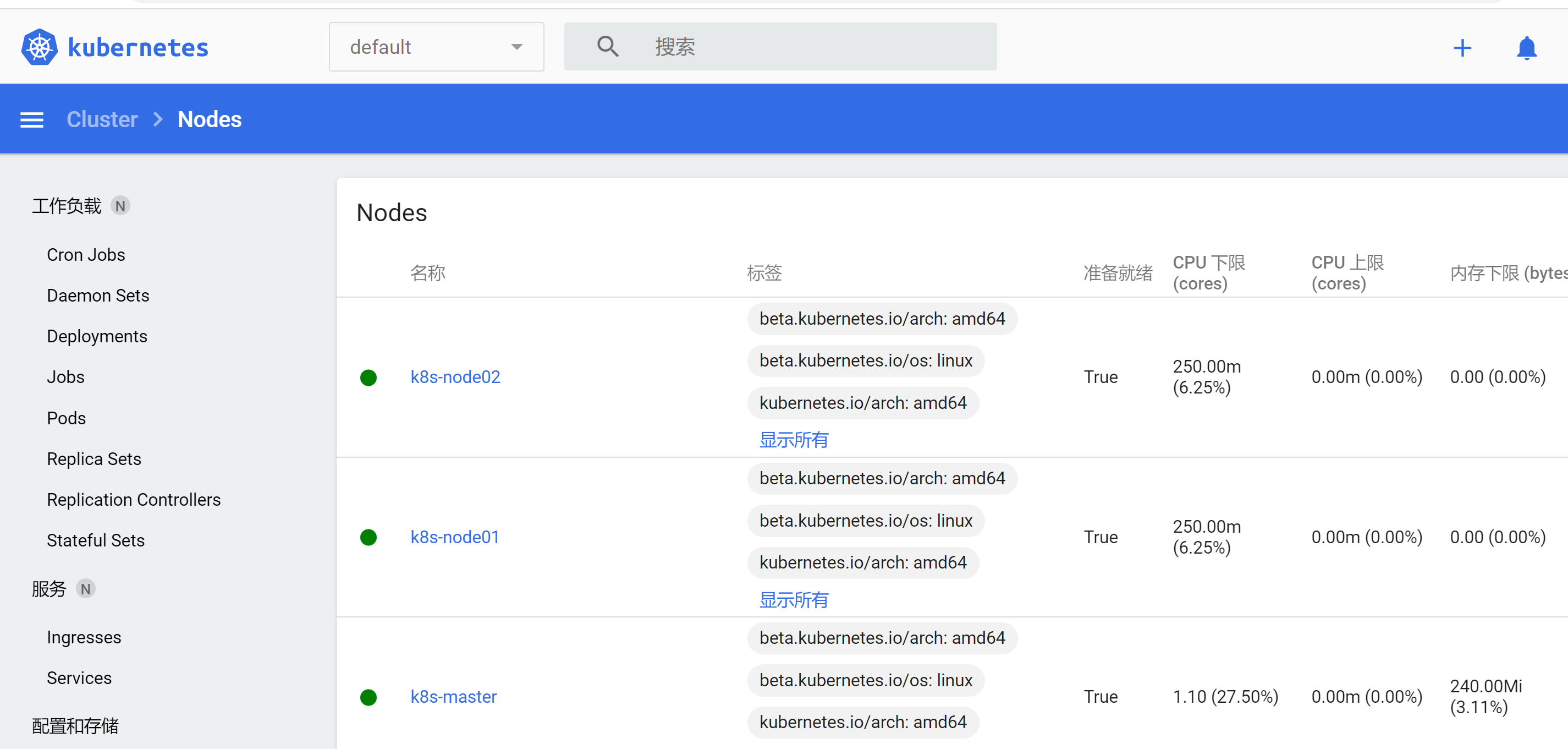

- After entering the token

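#### 11. Helm package management

- Helm is a package manager for Kubernetes, comparable to yum/apt-get on Linux; it makes it easy to deploy pre-packaged YAML files to Kubernetes

- Helm has three key concepts:

  - Helm: a command-line client, used mainly to create, package, publish, and manage Kubernetes application charts.
  - Chart: the application description, a collection of files describing related k8s resources
  - Release: a deployed instance of a chart. Running a chart with Helm produces a release and creates the real running resource objects in k8s

- Common chart files

  - charts/ (optional): a directory holding the charts this chart depends on
  - Chart.yaml (required): basic information such as author and version
  - templates/ (required): the YAML templates the application needs
  - requirements.yaml (optional): declares dependencies, like charts/. This is the Helm 2 layout; Helm 3 charts declare dependencies in Chart.yaml instead.
  - values.yaml (required): default configuration values, for example variables referenced by the templates.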
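To make the layout concrete, the sketch below builds a bare-bones chart skeleton by hand. It is illustrative only (`helm create` generates a fuller version); the function name `scaffold_chart` and the file contents are assumptions, not part of the original notes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a minimal Helm 3 chart skeleton at the given path.
scaffold_chart() {
  local dir=$1 name
  name=$(basename "$dir")
  mkdir -p "$dir/templates" "$dir/charts"
  # Chart.yaml carries the chart metadata; apiVersion v2 marks a Helm 3 chart.
  cat > "$dir/Chart.yaml" <<EOF
apiVersion: v2
name: $name
description: A demo chart
version: 0.1.0
EOF
  # values.yaml holds the defaults that templates read through .Values
  cat > "$dir/values.yaml" <<EOF
replicas: 1
EOF
}
```

For example, `scaffold_chart ./mychart` produces `mychart/Chart.yaml`, `mychart/values.yaml`, and empty `templates/` and `charts/` directories.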

##### 11.1. Installing Helm

```shell
# GitHub releases page
#https://github.com/helm/helm/releases
wget https://get.helm.sh/helm-v3.5.4-linux-amd64.tar.gz
tar -xf helm-v3.5.4-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/
# Check the version
helm version
```

##### 11.2. Common Helm commands

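```shell
create      create a chart with the given name
dependency  manage a chart's dependencies
get         download extended information about a named release; subcommands: all, hooks, manifest, notes, values
history     fetch release history
install     install a chart
list        list releases
package     package a chart directory into a chart archive
pull        download a chart from a remote repository and, with --untar, unpack it locally  #helm pull stable/mysql --untar
repo        add, list, remove, update, and index chart repositories; subcommands: add, index, list, remove, update
rollback    roll a release back to a previous revision
search      search for charts by keyword; subcommands: hub, repo
show        show chart details; subcommands: all, chart, readme, values
status      display the status of the named release
template    render templates locally
uninstall   uninstall a release
upgrade     upgrade a release
version     print the Helm client version
```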

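##### 11.3. Building your own chart

```
# Create the chart
helm create mychart
# This creates a directory under the current directory
cd /root/mychart
```

- Edit the template files (the bundled templates can be deleted)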

```yaml
# cd /root/mychart/templates
# vim deployment.yaml   (references values from values.yaml)
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: {{ .Values.labels.app }}
  name: nginx-deployment
spec:
  replicas: {{ .Values.replicas }}
  selector:
    matchLabels:
      app: {{ .Values.matchLabels.app }}
  template:
    metadata:
      labels:
        app: {{ .Values.labels.app }}
    spec:
      containers:
      - image: {{ .Values.containers.image }}
        name: alpine-nginx
        imagePullPolicy: {{ .Values.containers.imagePullPolicy }}
      volumes:
      - name: data
        nfs:
          server: 192.168.1.211          # NFS server address
          path: {{ .Values.nfs.path }}   # shared directory path
```

- Edit values.yaml (custom values; note: entries in this file do not need a leading "-")

```yaml
nfs:
  server: 192.168.1.211   # NFS server address
  path: /opt/data/nfs/nginx
volumeMounts:
  mountPath: /opt/apps/nginx/conf/vhosts
metadata:
  labels:
    app: web-deployment
  name: web-deployment
matchLabels:
  app: web-deployment
containers:
  image: alpine-nginx:1.19.6
  imagePullPolicy: IfNotPresent
labels:
  app: web-deployment
# service port
service:
  port: 80
  targetport: 80
  type: NodePort
  name: nginx-service
# service label selector
selector:
  app: web-deployment
```

- Deploy the service with the self-built chart

```shell
helm install web-nginx /root/mychart
```

- Override values

```shell
# --description adds a description, which helps when rolling back
helm install web-nginx /root/mychart --set service.port=8080 --set service.targetport=80 --set labels.app=tomcat --description="nginx-1.17.3"
# --values / -f overrides values from a YAML file (same format as values.yaml)
helm install web-nginx /root/mychart -f service-values.yaml
# --dry-run prints the rendered configuration without installing
helm install web-nginx /root/mychart -f service-values.yaml --dry-run
# Upgrade the release
helm upgrade web-nginx /root/mychart -f deploy-values.yaml
# Show the revision history
helm history web-nginx
# Roll back to revision 2
helm rollback web-nginx 2
```
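Running `helm install` twice with the same release name fails because the name is already in use, so the variants above are alternatives rather than a sequence. One way to make the step repeatable is to lint the chart and then preview it with `helm upgrade --install --dry-run`. The wrapper below is a sketch; the function name `preview_release` and the `HELM` override are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: lint a chart, then dry-run an idempotent install.
# Extra arguments (for example -f values.yaml or --set k=v) go to both commands.
HELM=${HELM:-helm}

preview_release() {
  local release=$1 chart=$2
  shift 2
  "$HELM" lint "$chart" "$@" &&
    "$HELM" upgrade --install "$release" "$chart" "$@" --dry-run
}
```

Usage: `preview_release web-nginx /root/mychart -f service-values.yaml`. Dropping `--dry-run` from the second command would perform the real install or upgrade.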

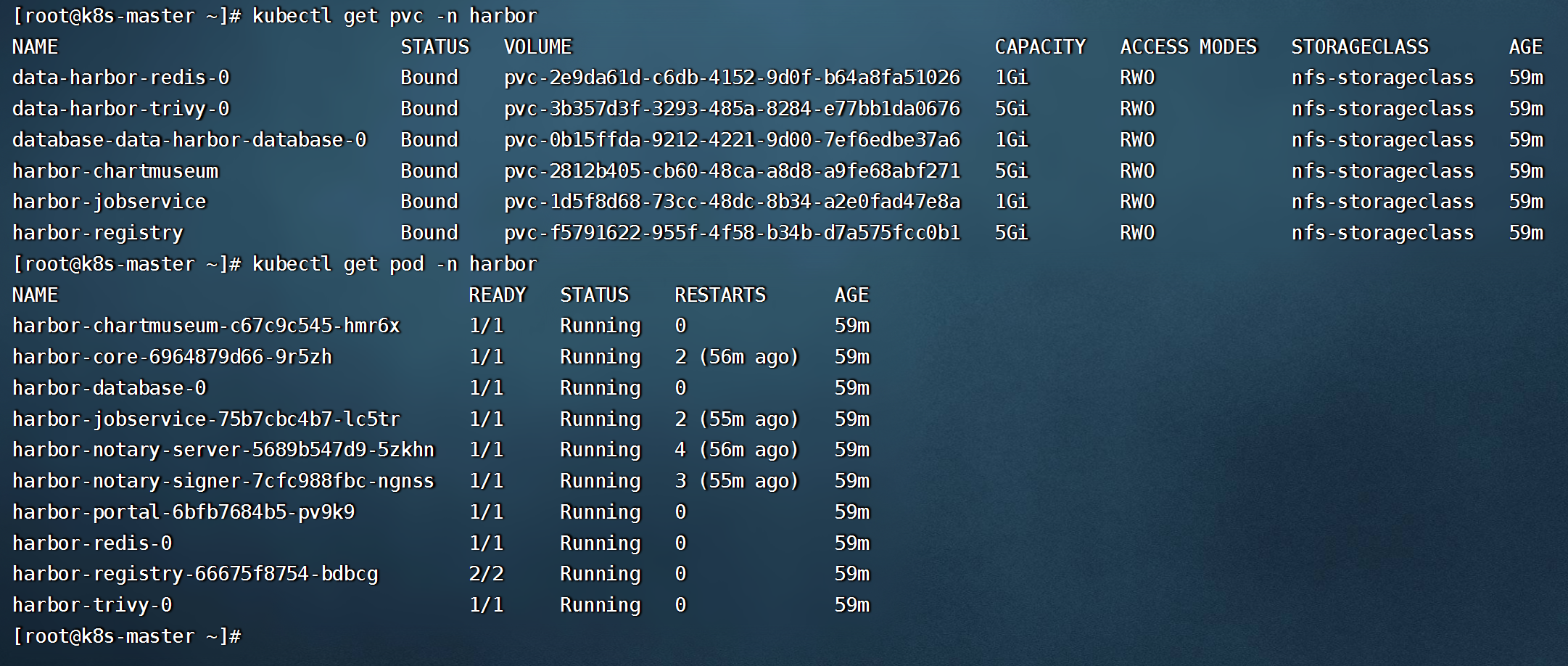

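##### 11.4. Installing Harbor with Helm

```shell
# Add the Harbor Helm repository
helm repo add harbor https://helm.goharbor.io
# Download the latest Harbor chart and unpack it (--untar creates ./harbor)
helm pull harbor/harbor --untar
kubectl create namespace harbor
# Move the chart and enter its directory
mv harbor /opt
cd /opt/harbor
# Create a TLS secret from the domain certificate
kubectl create secret tls tls-harbor.linuxtxc.com --key=harbor.linuxtxc.com.key --cert=harbor.linuxtxc.com.pem -n harbor
```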

- Edit values.yaml

```yaml
expose:
  type: ingress
  tls:
    auto:
      commonName: ""
    secret:
      secretName: "tls-harbor.linuxtxc.com"   # the secret created from the certificate
# ......
externalURL: https://harbor.linuxtxc.com      # your own domain
# ......
persistence:
  enabled: true
  resourcePolicy: "keep"
  persistentVolumeClaim:
    registry:
      existingClaim: ""
      storageClass: "nfs-storageclass"        # your own StorageClass
      # ......
    chartmuseum:
      existingClaim: ""
      storageClass: "nfs-storageclass"        # your own StorageClass
      # ......
    jobservice:
      existingClaim: ""
      storageClass: "nfs-storageclass"        # your own StorageClass
      # ......
    database:
      existingClaim: ""
      storageClass: "nfs-storageclass"        # your own StorageClass
      # ......
    redis:
      existingClaim: ""
      storageClass: "nfs-storageclass"        # your own StorageClass
      # ......
    trivy:
      existingClaim: ""
      storageClass: "nfs-storageclass"        # your own StorageClass
```

- Install Harbor

```shell
helm install harbor harbor/harbor -f values.yaml -n harbor
```

- Check that the resources have been created (this takes a few minutes)

- Add Harbor as a trusted (insecure) registry

```shell
vim /etc/docker/daemon.json
......
"insecure-registries": ["harbor.linuxtxc.com:93"]
# Restart Docker
systemctl restart docker
```

#### 12. CI/CD with Jenkins + GitLab

---

##### 12.1. Installing GitLab

```shell

mkdir gitlab

cd gitlab

docker run -d \

--name gitlab \

-p 8043:443 \

-p 99:80 \

-p 2222:22 \

-v $PWD/config:/etc/gitlab \

-v $PWD/logs:/var/log/gitlab \

-v $PWD/data:/var/opt/gitlab \

-v /etc/localtime:/etc/localtime \

--restart=always \

lizhenliang/gitlab-ce-zh:latest

```

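- On first access you set the administrator password and then log in; the default administrator username is root and the password is the one you just set.

##### 12.2. Installing Jenkins

- Create Jenkins.yaml with the following content: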

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      name: jenkins
      labels:
        name: jenkins
    spec:
      serviceAccountName: jenkins
      containers:
      - name: jenkins
        image: jenkins/jenkins:lts
        ports:
        - containerPort: 8080
        - containerPort: 50000
        volumeMounts:
        - name: jenkins-home
          mountPath: /var/jenkins_home
      securityContext:
        fsGroup: 1000
      volumes:
      - name: jenkins-home
        persistentVolumeClaim:
          claimName: jenkins
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins
spec:
  storageClassName: "nfs-storageclass"
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: Service
metadata:
  name: jenkins
spec:
  selector:
    name: jenkins
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 8080
    protocol: TCP
    nodePort: 30008
  - name: agent
    port: 50000
    protocol: TCP
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins
rules:
- apiGroups: [""]
  resources: ["pods","events"]
  verbs: ["create","delete","get","list","patch","update","watch"]
- apiGroups: [""]
  resources: ["pods/exec"]
  verbs: ["create","delete","get","list","patch","update","watch"]
- apiGroups: [""]
  resources: ["pods/log"]
  verbs: ["get","list","watch"]
- apiGroups: [""]
  resources: ["secrets","events"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: jenkins
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: jenkins
subjects:
- kind: ServiceAccount
  name: jenkins
```

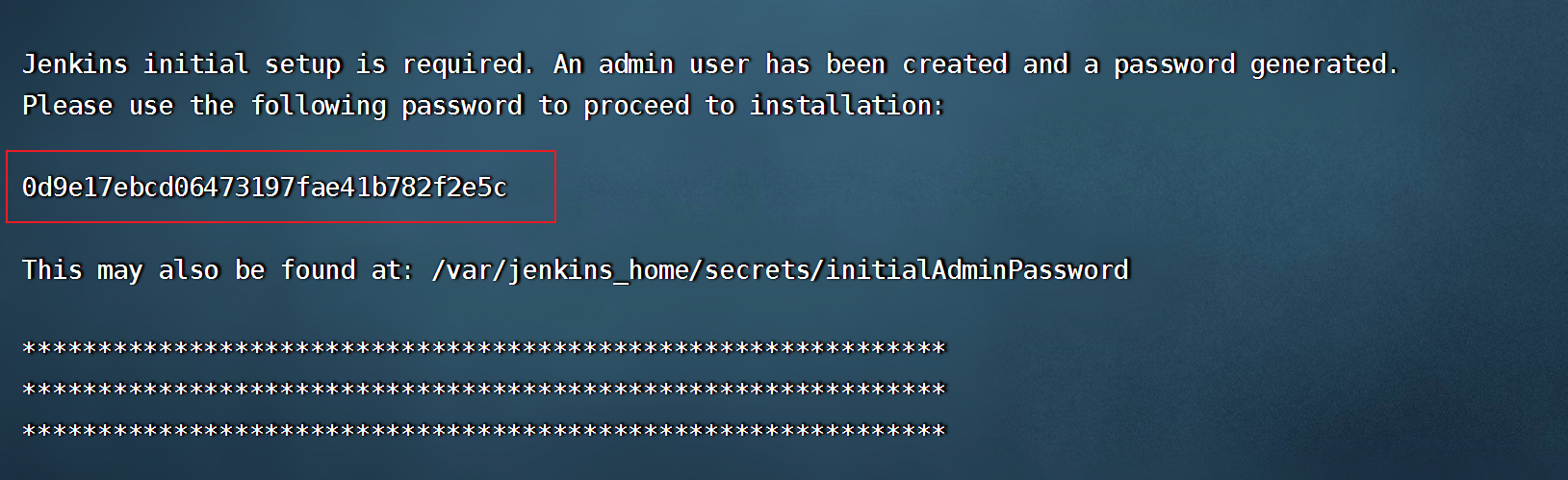

- The initial admin password is stored in the file /var/jenkins_home/secrets/initialAdminPassword and is also printed in the Pod log

```shell

kubectl logs jenkins-fd79f7c9d-b85nj

```

- Change the plugin update source

```shell
# Enter the persistent storage directory
cd /opt/data/nfs/default-jenkins-pvc-812aa60a-6244-4ad8-bbf0-42894eaa2200/updates
# Point the plugin source at a mirror
sed -i 's/http:\/\/updates.jenkins-ci.io\/download/https:\/\/mirrors.tuna.tsinghua.edu.cn\/jenkins/g' default.json && \
sed -i 's/http:\/\/www.google.com/https:\/\/www.baidu.com/g' default.json
# Restart Jenkins
kubectl delete pod jenkins-fd79f7c9d-b85nj
```

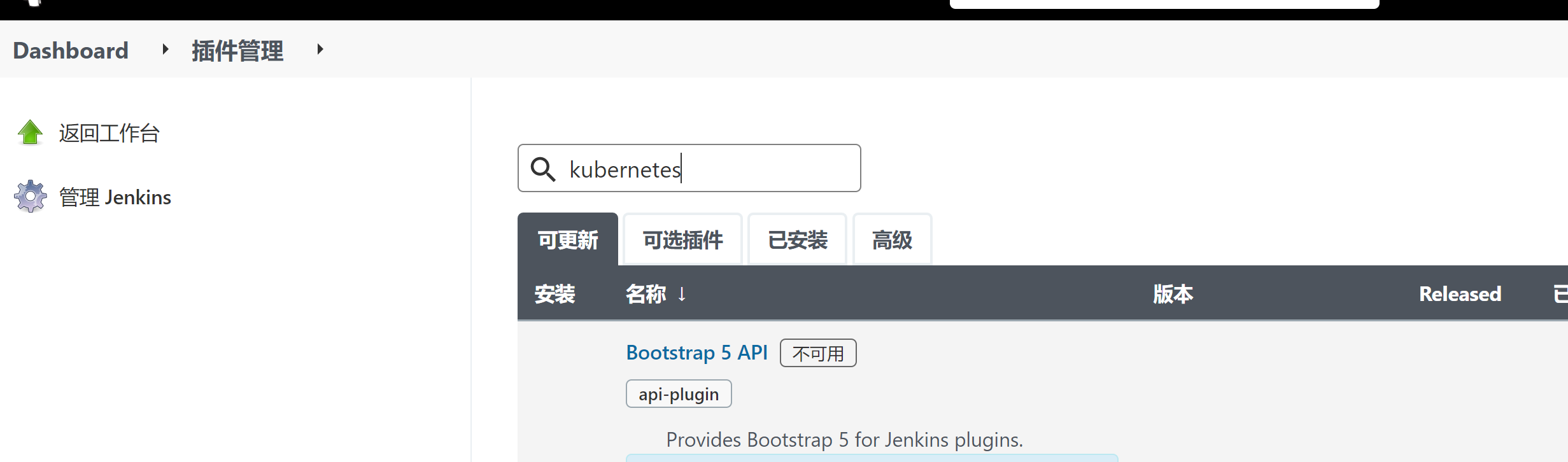

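- Install plugins: Manage Jenkins -> System Configuration -> Manage Plugins, then search for each of Git Parameter / Git / Pipeline / Kubernetes / Config File Provider

##### 12.3. Jenkins master-slave architecture

###### 12.3.1. Configuring Jenkins slave nodes

- Requires the Kubernetes plugin (installed above)

- When a Jenkins job is triggered, Jenkins calls the Kubernetes API to create a slave Pod; once started, the Pod connects to Jenkins, accepts the job, and runs it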

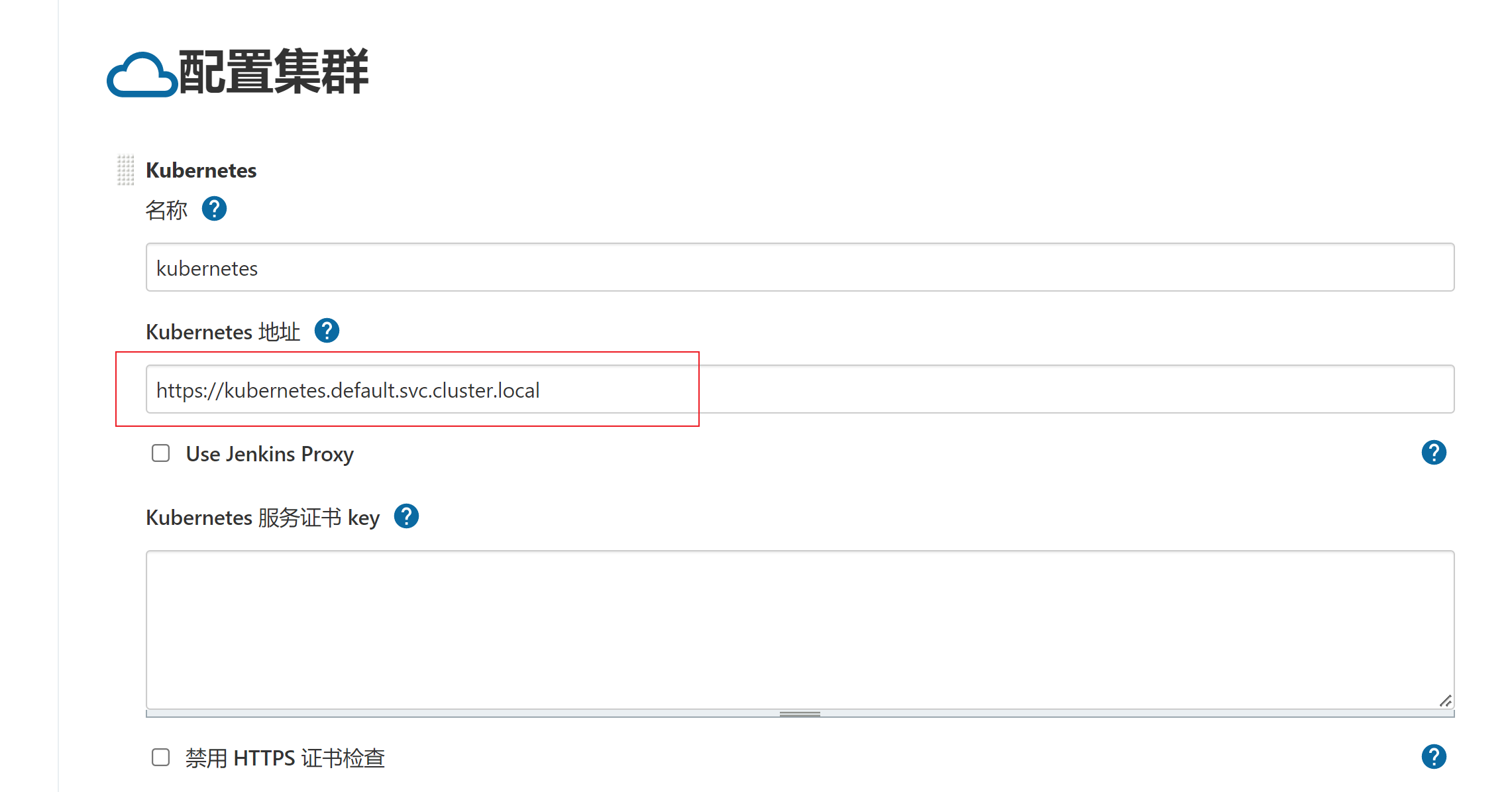

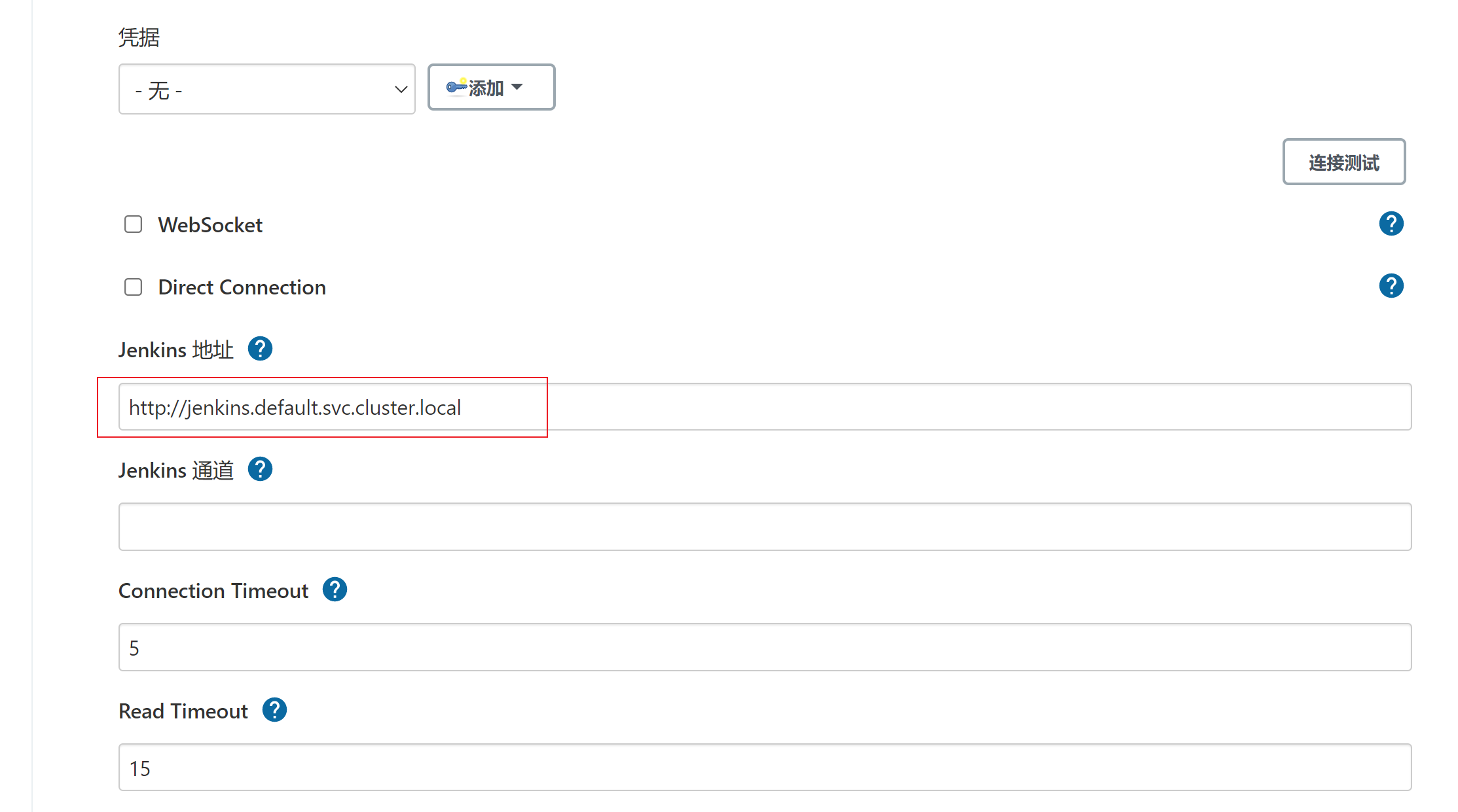

- Click Manage Nodes -> Configure Clouds -> Add a new cloud -> Kubernetes. For the Kubernetes URL enter https://kubernetes.default.svc.cluster.local (the in-cluster API address); for the Jenkins URL enter http://jenkins.default.svc.cluster.local

###### 12.3.2.Jenkins Pipeline

- Jenkins Pipeline 语法

- Stages:阶段,它是Pipeline中主要的组成部分,Jenkins将会按照Stages中的描述的顺序从上往下的执行,一个Pipeline可以划分为若干个Stage,每个Stage代表一组操作,比如Build,Test,Deploy

- Steps:步骤,Steps是最基本的操作单元,可以是打印一句话,也可以是构建一个docker镜像,由各类Jenkins插件提供,比如命令“sh”,“mvn”,就相当于我们平时shell终端执行mvn命令一样

- 执行一个pipeline

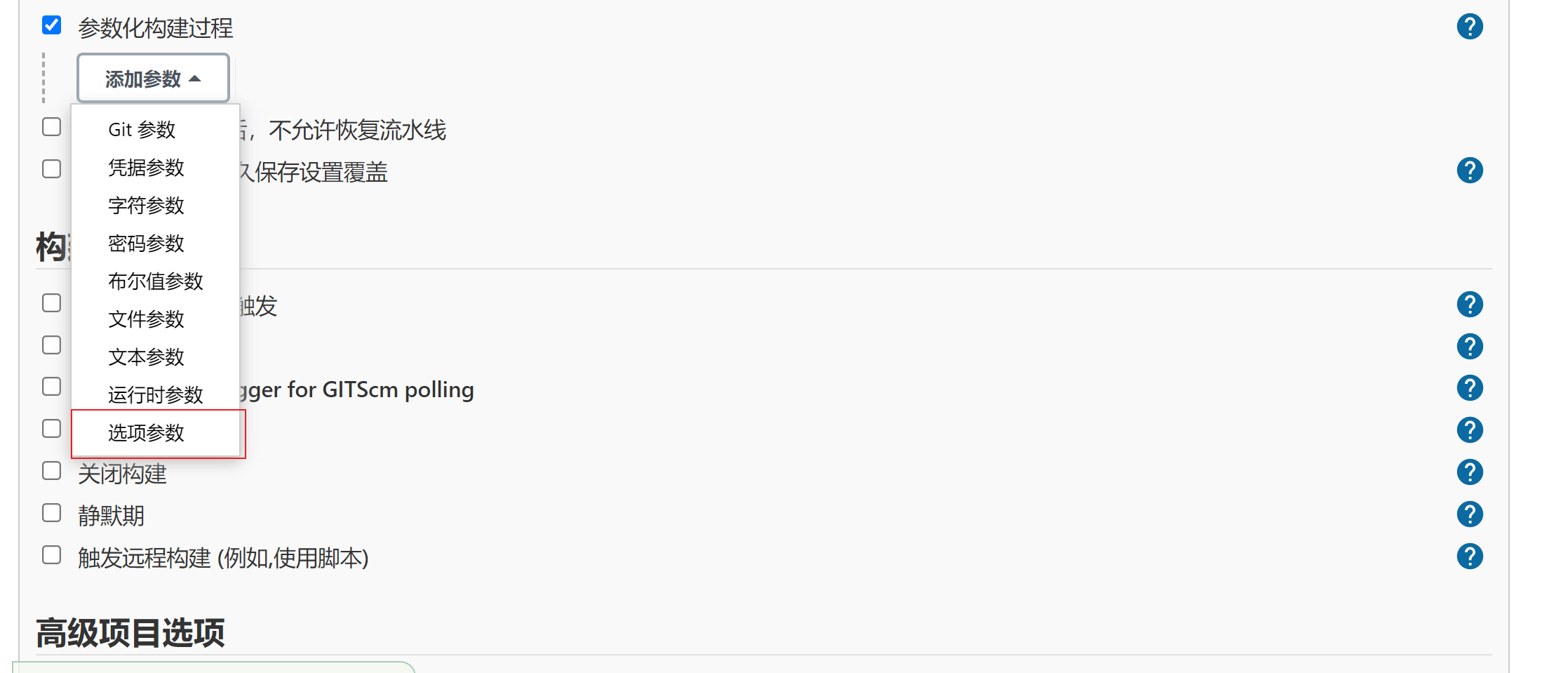

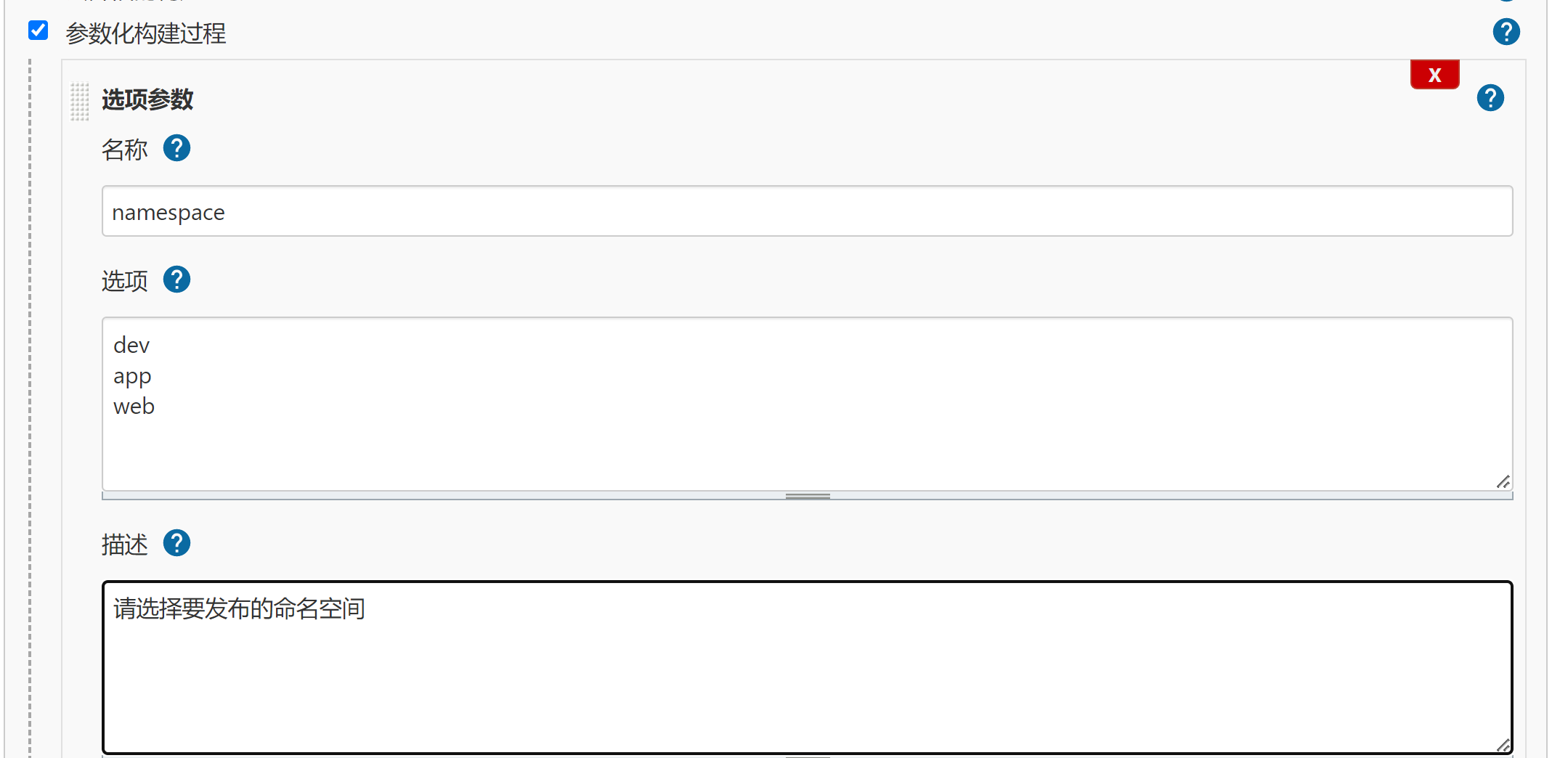

- 点参数化构建过程,选择选项参数

- 自定义参数(多个选项,需要换行写)

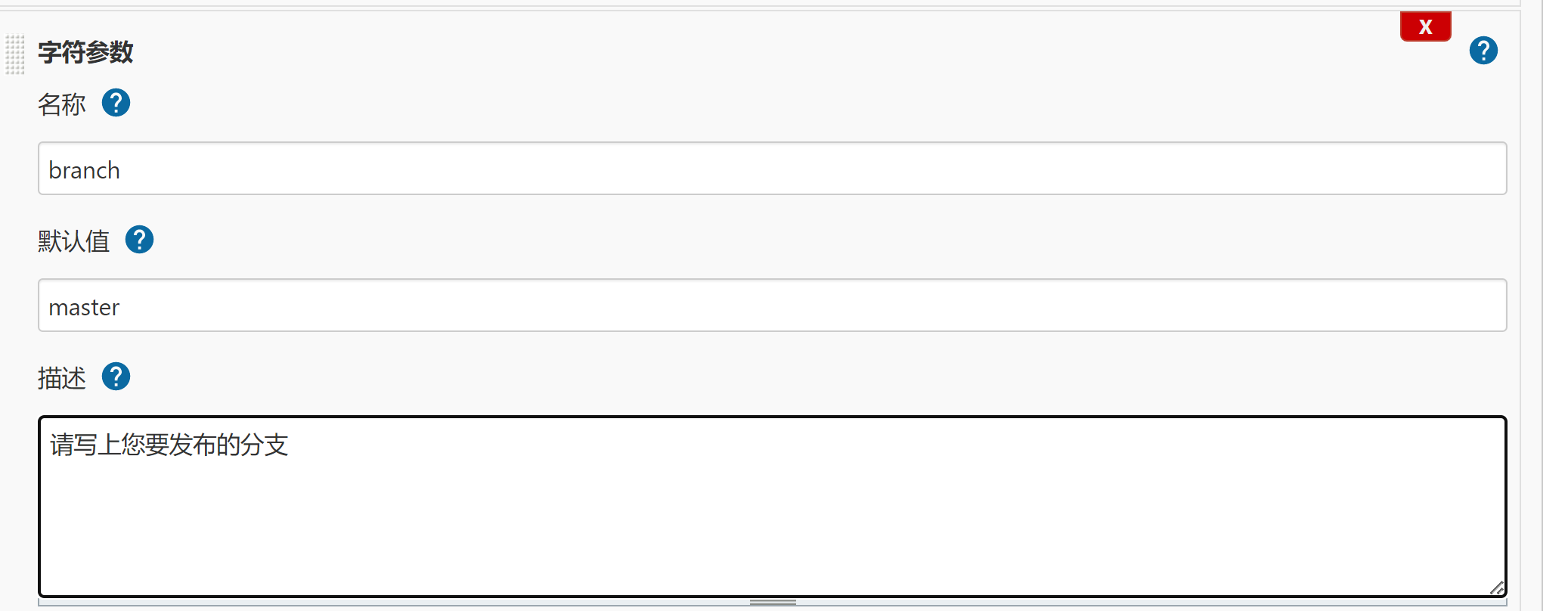

- 再次点击添加参数,选择字符参数

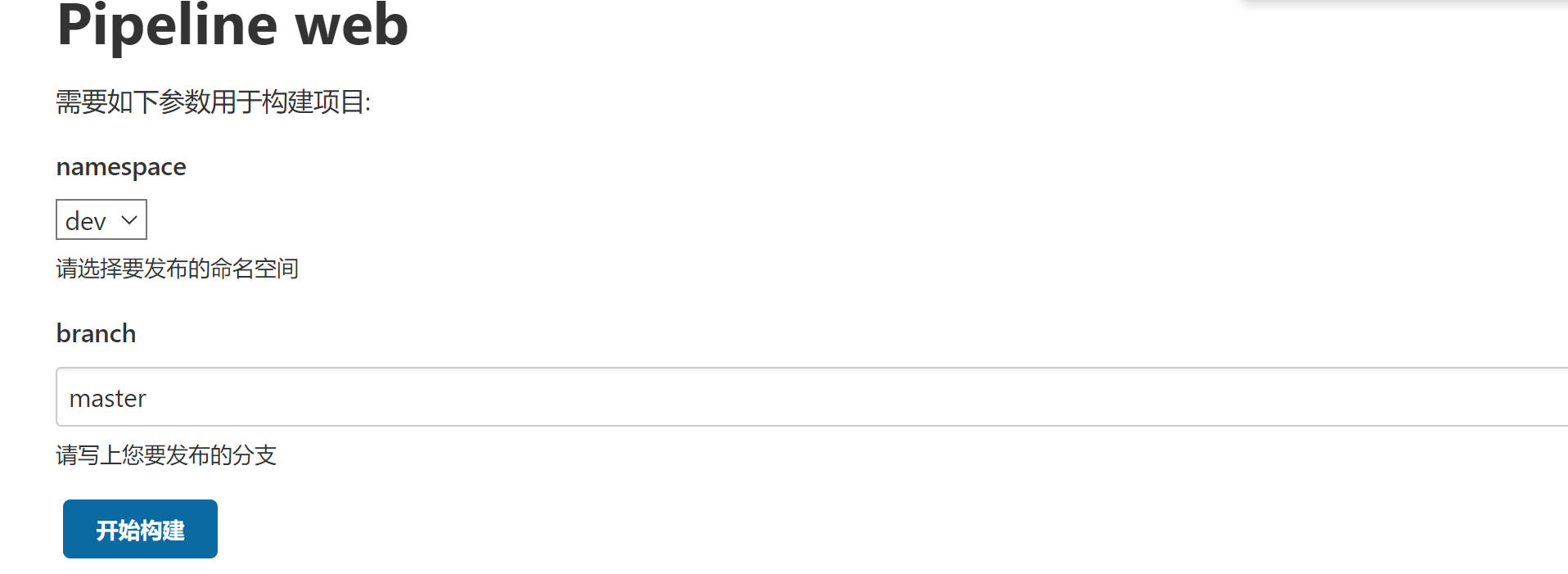

- 保存并点击构建,便有了一个可选择参数的变量和一个有默认值的变量(不指定便默认)

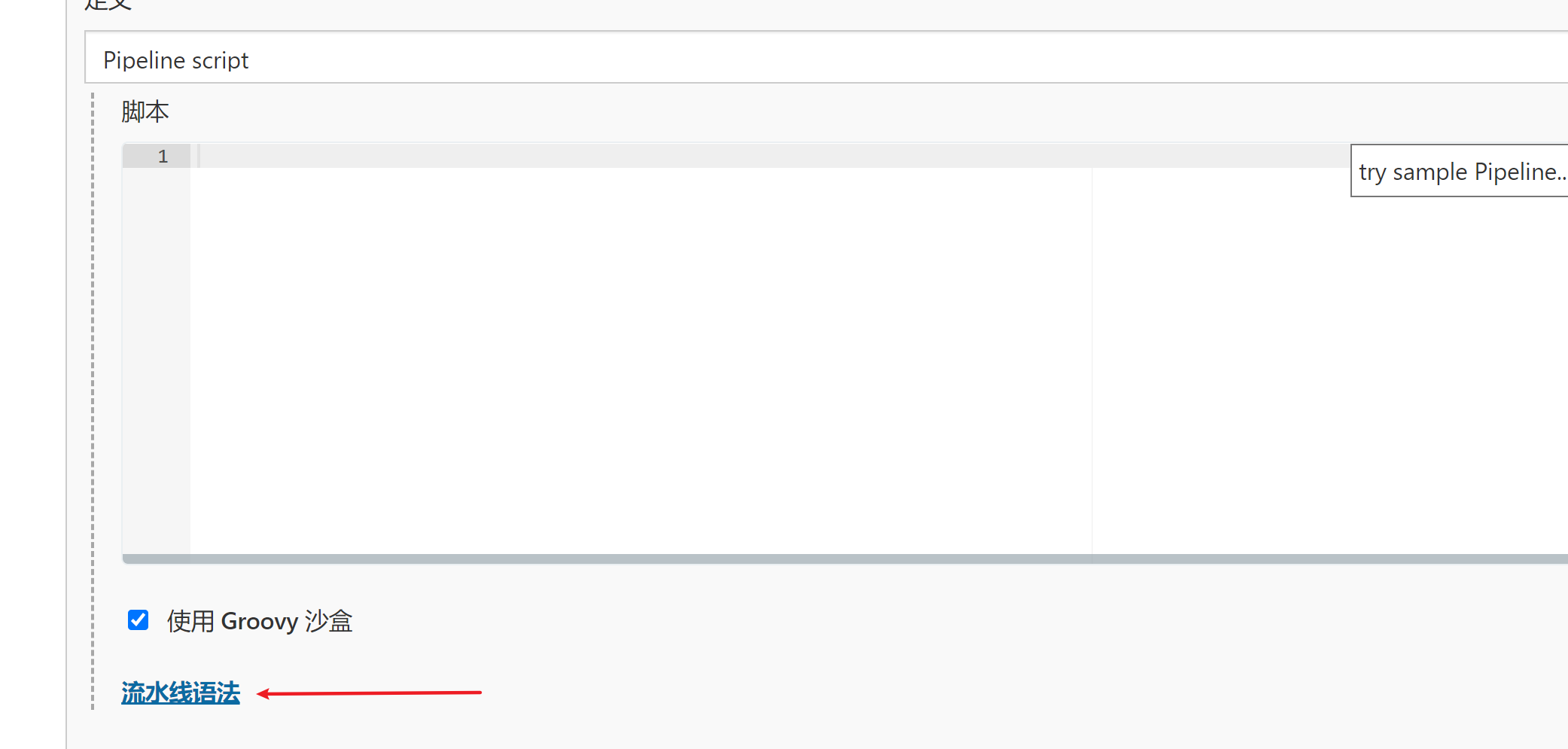

- 使用pipeline语法生成配置

- 点击流水线语法

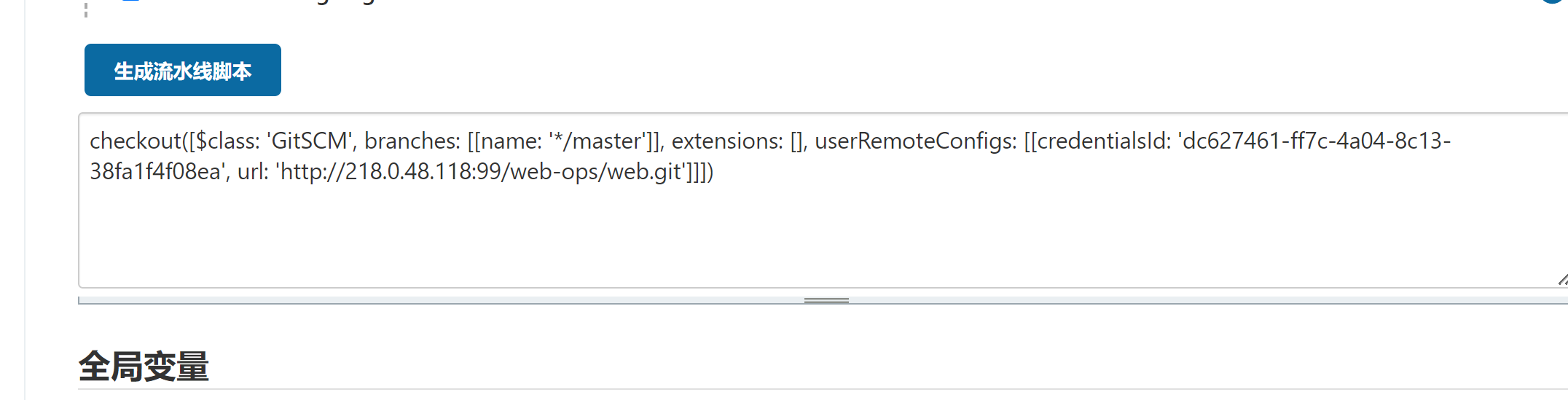

- 示例步骤点击 checkout: Check out from version control —>写上git仓库地址—>创建git仓库凭证—>再点击生成流水线脚本

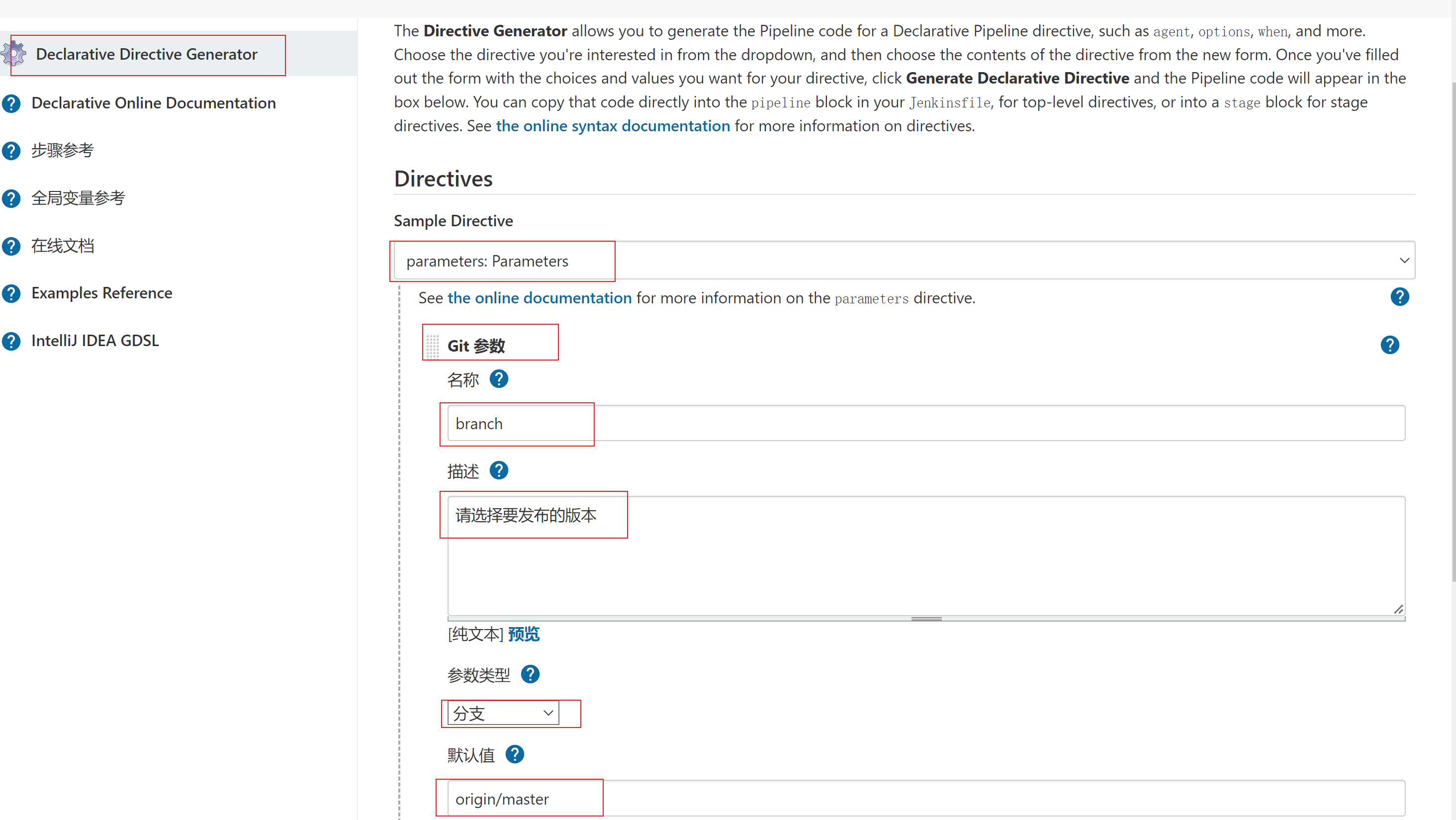

- 动态获取gitlab仓库分支,也可以添加其他参数化构建,如选项参数(在流水线语法中点击Declarative Directive Generator—> 选择parameters:Parameters—>选择GIt参数—>填写参数—>生成代码片段)

- Pipeline脚本如下(stages与parameters同级)

```groovy
pipeline {
    agent {
        kubernetes {
            label "jenkins-slave"
            yaml '''
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-slave
spec:
  containers:
  - name: shell
    image: "harbor.linuxtxc.com:9090/txcrepo/jenkins-slave-jdk:1.8"
    command:
    - sleep
    args:
    - infinity
'''
            defaultContainer 'shell'
        }
    }
    parameters {
        // The parameter name must match the variable used in the checkout step (Branch)
        gitParameter branch: '', branchFilter: '.*', defaultValue: 'origin/master', description: 'Select the version to release', name: 'Branch', quickFilterEnabled: false, selectedValue: 'NONE', sortMode: 'NONE', tagFilter: '*', type: 'PT_BRANCH'
    }
    stages {
        stage('Main') {
            steps {
                // Assumes a job parameter named namespace exists, e.g. created in the UI steps above
                sh "echo ${namespace}"
            }
        }
        stage('Checkout') {
            steps {
                checkout([$class: 'GitSCM', branches: [[name: "${params.Branch}"]], extensions: [], userRemoteConfigs: [[credentialsId: 'dc627461-ff7c-4a04-8c13-38fa1f4f08ea', url: 'http://218.0.48.118:99/web-ops/web.git']]])
            }
        }
    }
}
```

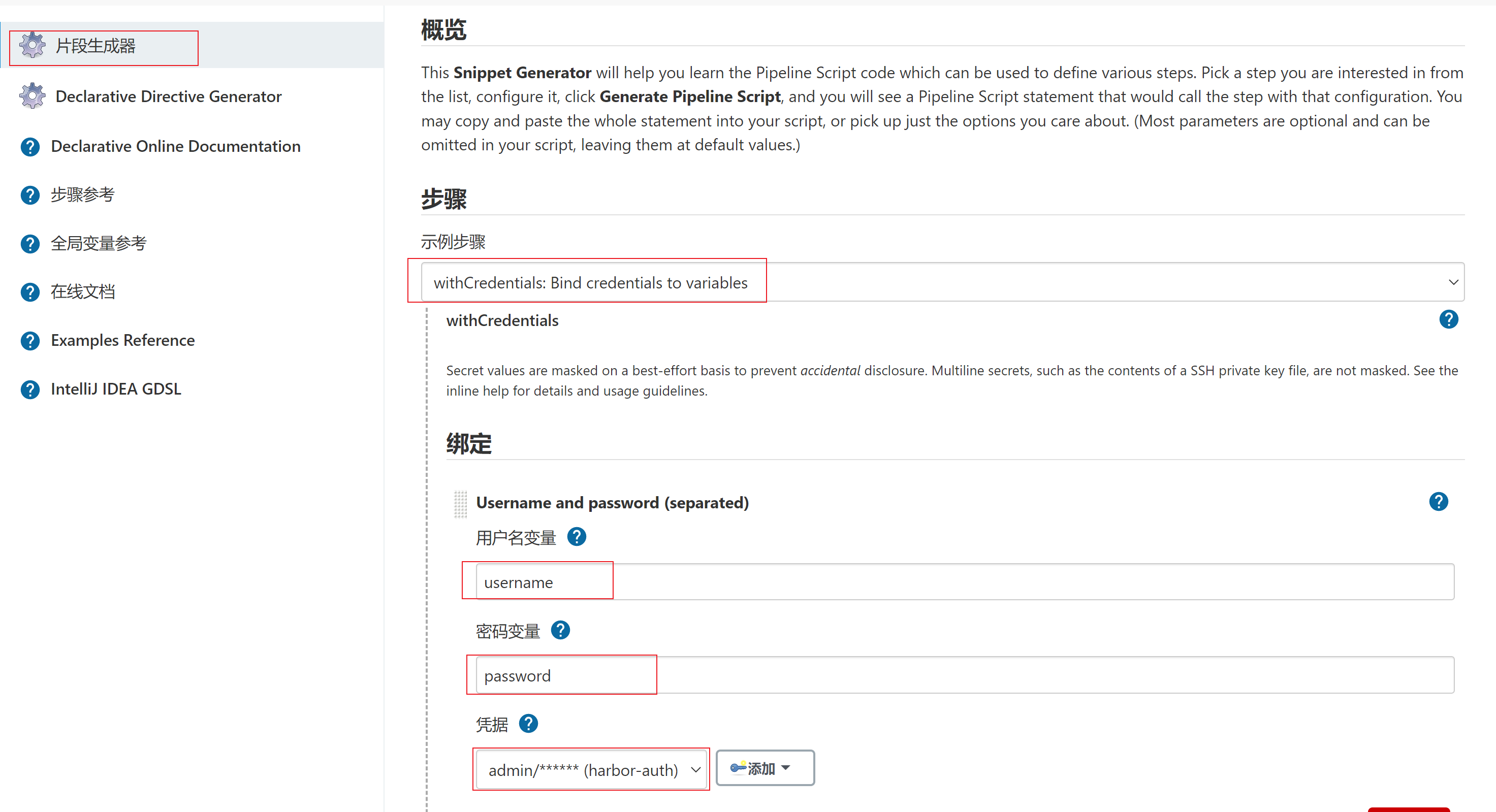

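- Bind the credential's username and password to variables (so they are not shown in plain text)

- The generated snippet looks like this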

```shell

withCredentials([usernamePassword(credentialsId: '36c1732b-6502-45b5-9d5b-05d691dfbbbc', passwordVariable: 'password', usernameVariable: 'username')]) {

// some block

}

```

- How the image gets deployed to the k8s platform

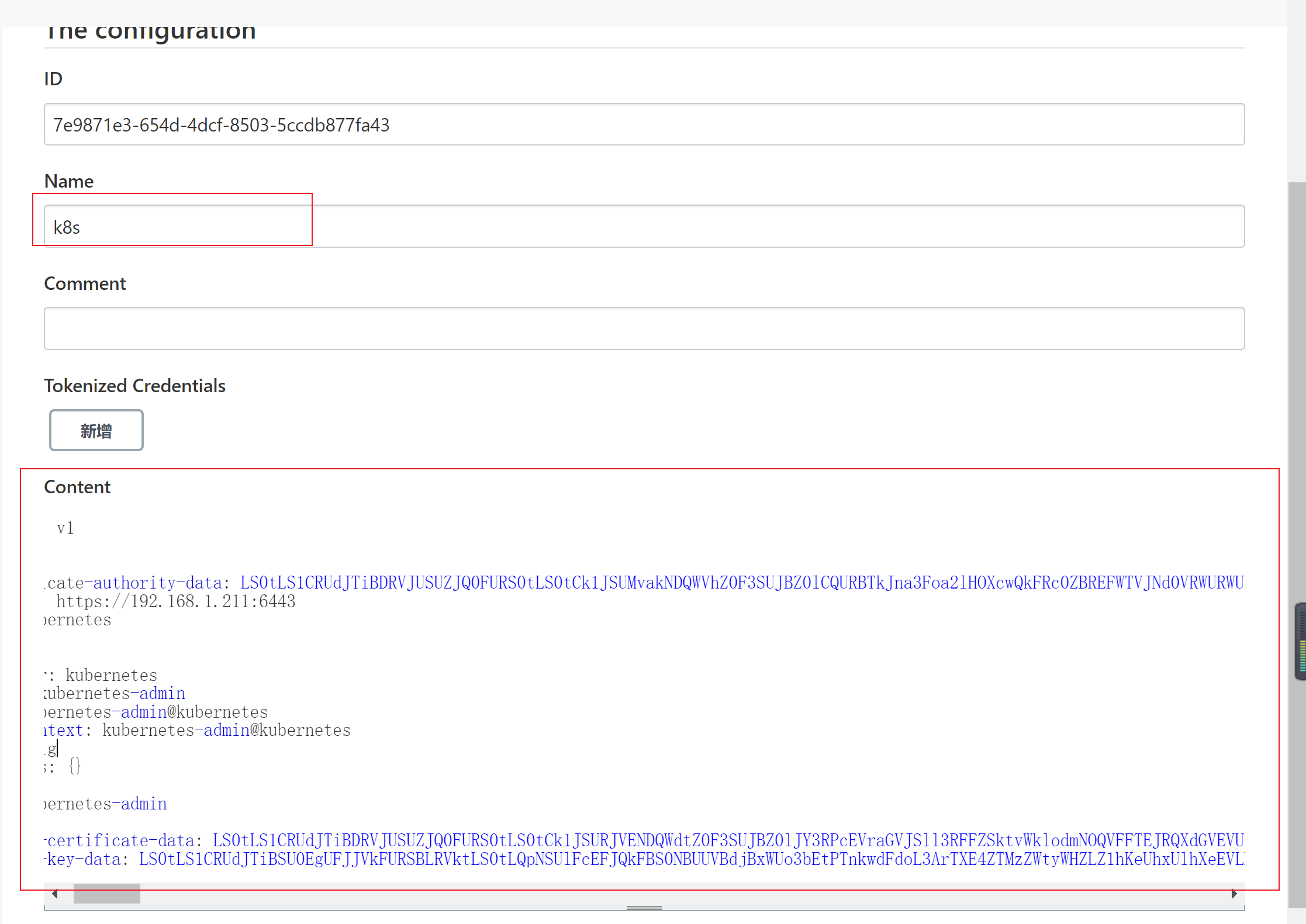

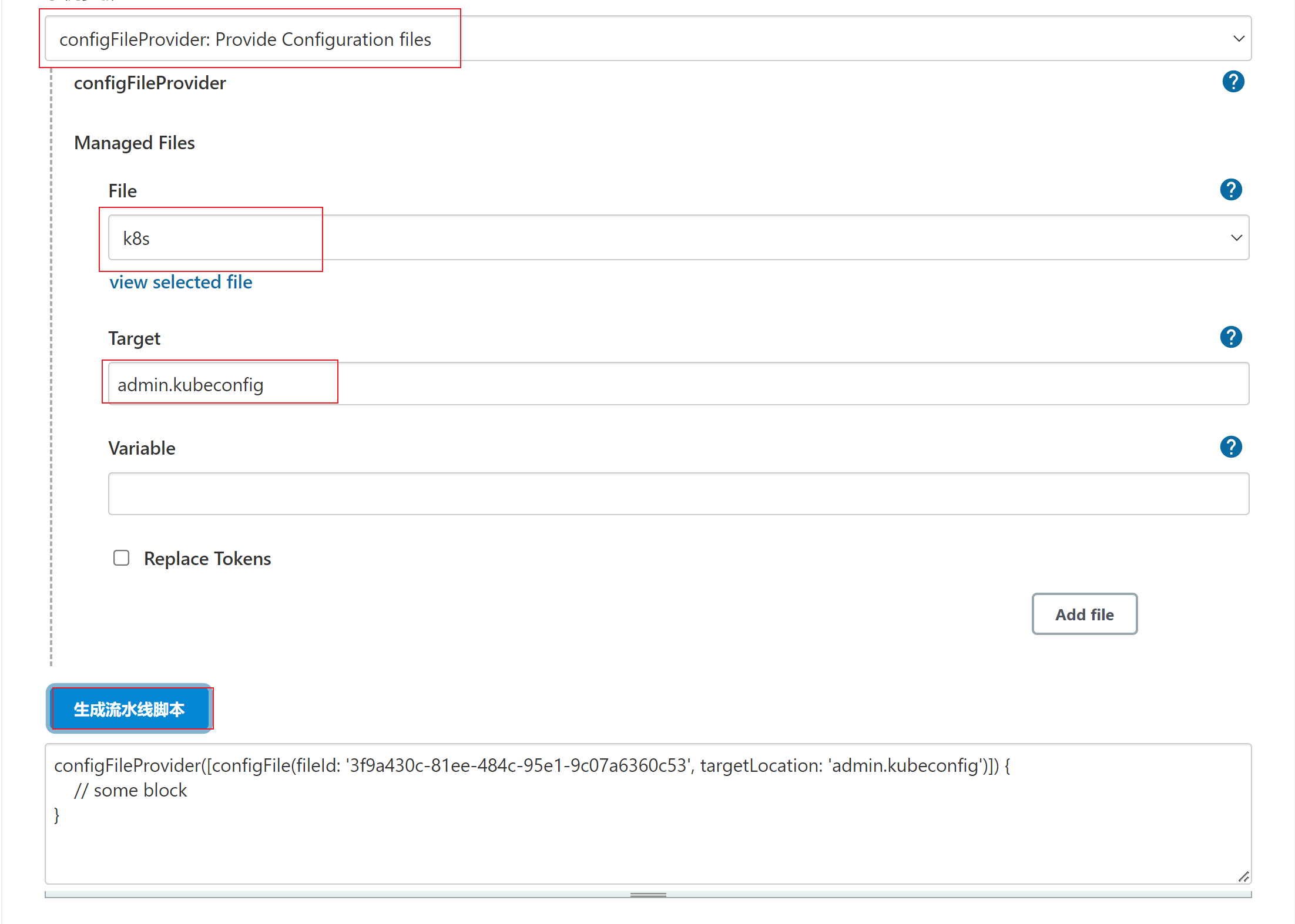

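- Commit the project's deployment YAML to the code repository and deploy it from the slave container with kubectl apply. Because kubectl connects to the cluster through a kubeconfig file, store the kubeconfig in Jenkins with the Config File Provider plugin and mount it into the slave container; the container then has permission to deploy (kubectl apply -f deploy.yaml --kubeconfig=config)

- Note: for better security, the kubeconfig should be bound to limited permissions

- Store the kubeconfig file in Jenkins

```shell
cat .kube/config
```

- Click Manage Jenkins -> Managed files -> Add a new Config -> Custom file -> click Next -> rename it and paste in the kubeconfig content

- Generate the pipeline snippet (replace the fileId with the ID of the k8s config file just created)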

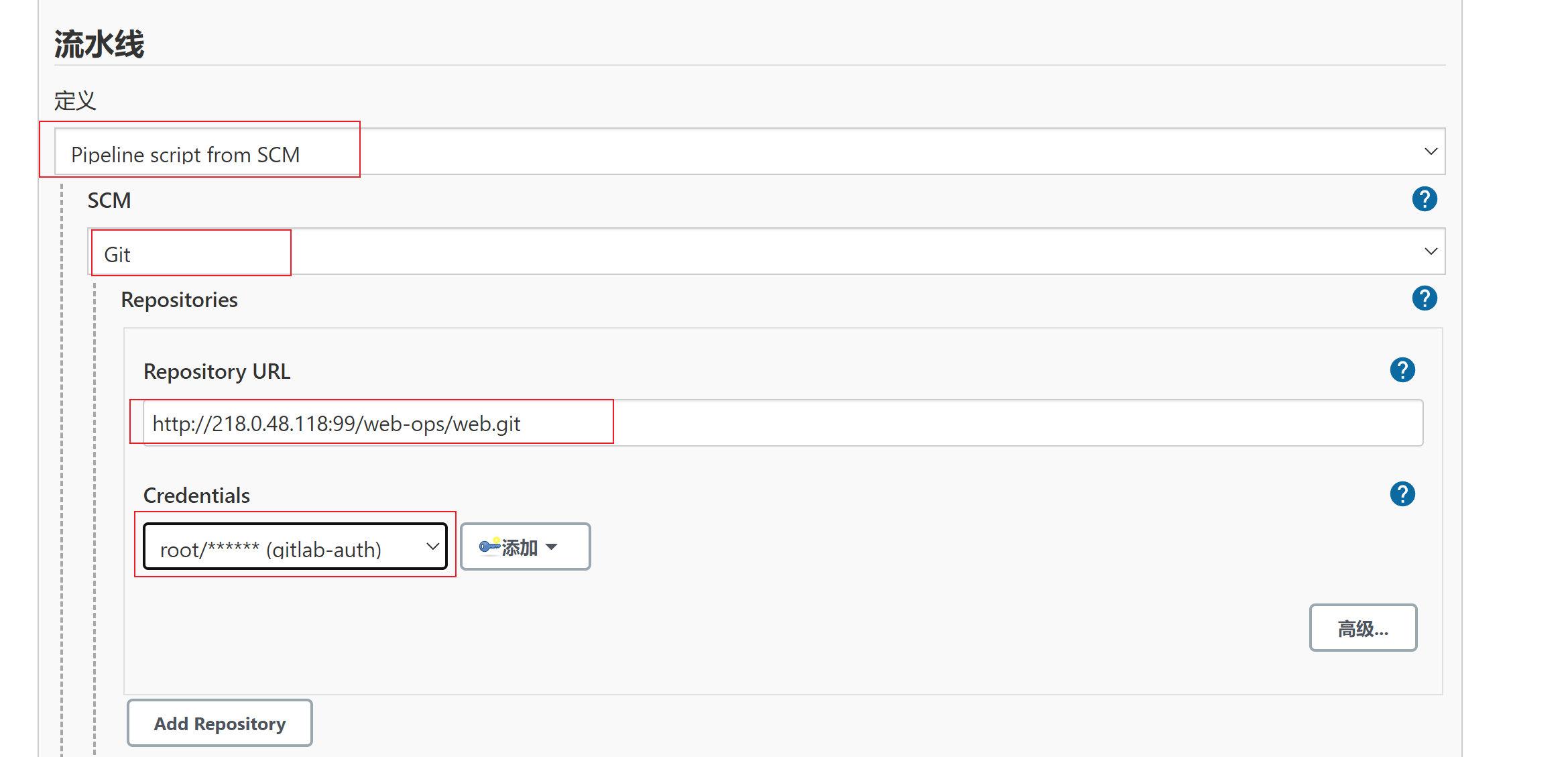

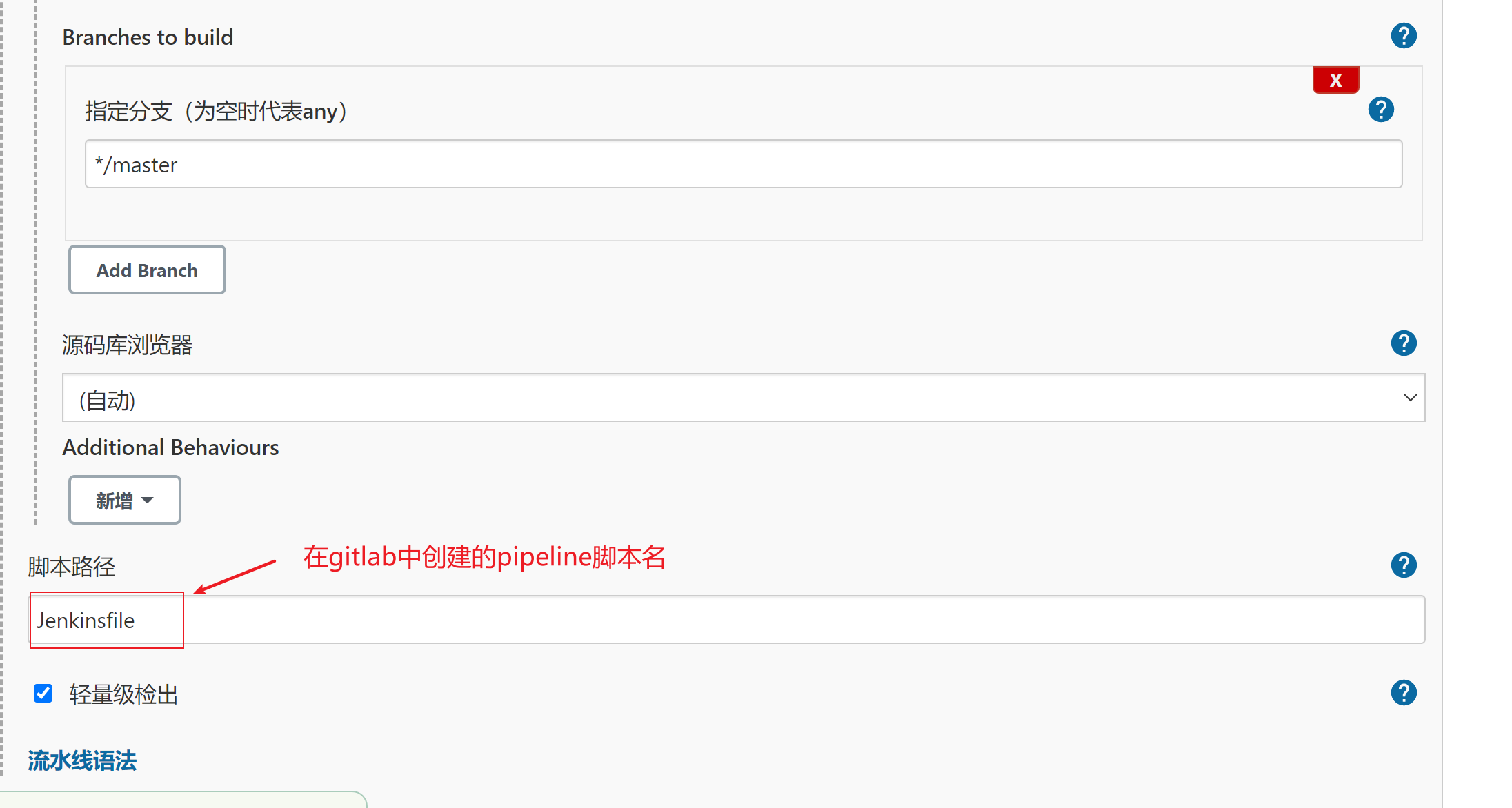

- Both deploy.yaml and the Jenkinsfile can be kept in the GitLab repository

- Create the Jenkinsfile in GitLab, then point the pipeline job at it

- A complete pipeline script

```groovy
// Common
def registry = "harbor.linuxtxc.com:9090"
// Project
def project = "txcrepo"
def app_name = "java-demo3"
// BUILD_NUMBER is a built-in variable that increases with every build
def image_name = "${registry}/${project}/${app_name}:${BUILD_NUMBER}"
def git_address = "http://218.0.48.118:99/web-ops/web.git"
// Credentials
def secret_name = "harbor-key-secret"
def harbor_auth = "36c1732b-6502-45b5-9d5b-05d691dfbbbc"
def git_auth = "3467ad1b-6fdc-4a4a-a758-19b229a362fa"
def k8s_auth = "3f9a430c-81ee-484c-95e1-9c07a6360c53"

pipeline {
    agent {
        kubernetes {
            label "jenkins-slave"
            yaml """
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-slave
spec:
  containers:
  - name: jnlp
    image: "${registry}/zhan/jenkins_slave-jdk:1.8"
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: docker-cmd
      mountPath: /usr/bin/docker
    - name: docker-sock
      mountPath: /var/run/docker.sock
    - name: maven-cache
      mountPath: /root/.m2
  volumes:
  - name: docker-cmd
    hostPath:
      path: /usr/bin/docker
  - name: docker-sock
    hostPath:
      path: /var/run/docker.sock
  - name: maven-cache
    hostPath:
      path: /tmp/m2
"""
        }
    }
    parameters {
        gitParameter branch: '', branchFilter: '.*', defaultValue: 'master', description: 'Branch to release', name: 'Branch', quickFilterEnabled: false, selectedValue: 'NONE', sortMode: 'NONE', tagFilter: '*', type: 'PT_BRANCH'
        choice (choices: ['1', '3', '5', '7'], description: 'Number of replicas', name: 'Replicas')
        choice (choices: ['dev','test','prod','default'], description: 'Namespace', name: 'Namespace')
    }
    stages {
        stage('Checkout'){
            steps {
                checkout([$class: 'GitSCM',
                    branches: [[name: "${params.Branch}"]],
                    doGenerateSubmoduleConfigurations: false,
                    extensions: [], submoduleCfg: [],
                    userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_address}"]]
                ])
            }
        }
        stage('Compile'){
            steps {
                sh """
                    mvn clean package -Dmaven.test.skip=true
                """
            }
        }
        stage('Build image'){
            steps {
                withCredentials([usernamePassword(credentialsId: "${harbor_auth}", passwordVariable: 'password', usernameVariable: 'username')]) {
                    sh """
                        unzip target/*.war -d target/ROOT
                        echo '
                        FROM lizhenliang/tomcat
                        LABEL maintainer lizhenliang
                        ADD target/ROOT /usr/local/tomcat/webapps/ROOT
                        ' > Dockerfile
                        docker build -t ${image_name} .
                        docker login -u ${username} -p '${password}' ${registry}
                        docker push ${image_name}
                    """
                }
            }
        }
        stage('Deploy to K8s'){
            steps {
                configFileProvider([configFile(fileId: "${k8s_auth}", targetLocation: "admin.kubeconfig")]){
                    sh """
                        sed -i 's#IMAGE_NAME#${image_name}#' deploy.yaml
                        sed -i 's#SECRET_NAME#${secret_name}#' deploy.yaml
                        sed -i 's#REPLICAS#${Replicas}#' deploy.yaml
                        kubectl apply -f deploy.yaml -n ${Namespace} --kubeconfig=admin.kubeconfig
                    """
                }
            }
        }
    }
}
```
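The deploy stage works by replacing the placeholders `IMAGE_NAME`, `SECRET_NAME`, and `REPLICAS` in deploy.yaml before applying it. The notes do not show deploy.yaml itself, so the template below is a hypothetical stand-in; only the three `sed` substitutions mirror the pipeline. The sketch can be run locally to see the effect (it relies on GNU `sed -i`).

```shell
#!/usr/bin/env bash
# Apply the same three placeholder substitutions as the pipeline's deploy stage.
# '#' is used as the sed delimiter because image names contain '/'.
render_deploy() {
  local image=$1 secret=$2 replicas=$3 file=$4
  sed -i \
    -e "s#IMAGE_NAME#${image}#" \
    -e "s#SECRET_NAME#${secret}#" \
    -e "s#REPLICAS#${replicas}#" \
    "$file"
}

# Hypothetical deploy.yaml template; the real file lives in the project repo.
demo=$(mktemp)
cat > "$demo" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: java-demo
spec:
  replicas: REPLICAS
  selector:
    matchLabels:
      app: java-demo
  template:
    metadata:
      labels:
        app: java-demo
    spec:
      imagePullSecrets:
      - name: SECRET_NAME
      containers:
      - name: web
        image: IMAGE_NAME
EOF

render_deploy "harbor.linuxtxc.com:9090/txcrepo/java-demo3:12" harbor-key-secret 3 "$demo"
cat "$demo"
```

Because the substitution edits the file in place, each build should start from a fresh checkout; otherwise the placeholders are already gone on the second run.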

---

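#### 13. k8s log collection and ELK deployment

- Applications emit logs in two ways:

  - Standard output: written to the console and visible with kubectl logs; the log is stored at `/var/lib/docker/containers/<container ID>/xxx-json.log`
    - Collecting stdout logs: deploy a log collector on every Node as a DaemonSet to harvest all container logs under /var/lib/docker/containers/
  - Log files: written to files inside the container filesystem
    - Collecting container log files: add a log-collector container to the Pod and share a directory through emptyDir so the collector can read the log files

- ELK as the logging platform

  - Elasticsearch: data storage, search, and analysis
  - Logstash: collects logs, formats and filters them, then pushes the data to Elasticsearch
  - Kibana: data visualization

- Deploy the services in k8s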

```shell
wget http://oss.linuxtxc.com/kubernetes-elk.zip
unzip kubernetes-elk.zip
cd kubernetes-elk/
# Change the StorageClass to the one you created
sed -i 's/managed-nfs-storage/nfs-storageclass/g' ./*.yaml
kubectl apply -f elasticsearch.yaml
kubectl apply -f kibana.yaml
kubectl apply -f filebeat-kubernetes.yaml
```

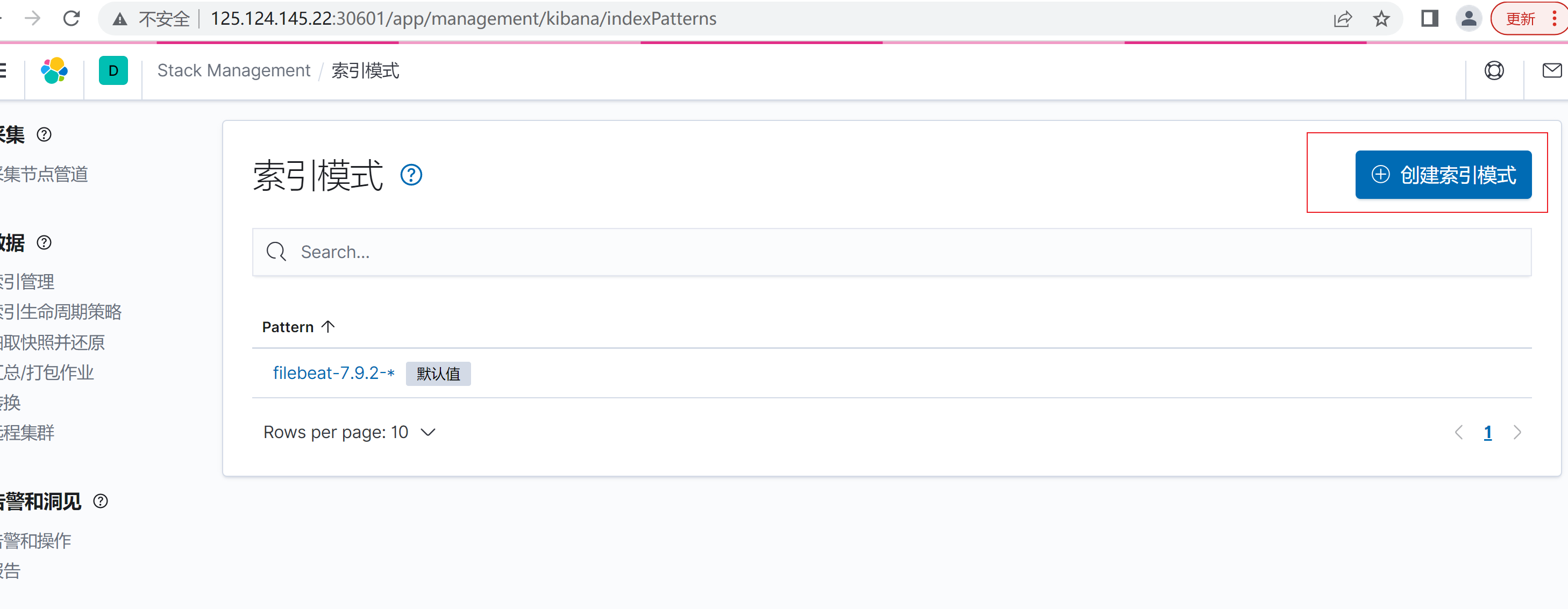

- 访问kibana,添加elasticsearch索引(http://125.124.145.22:30601/)

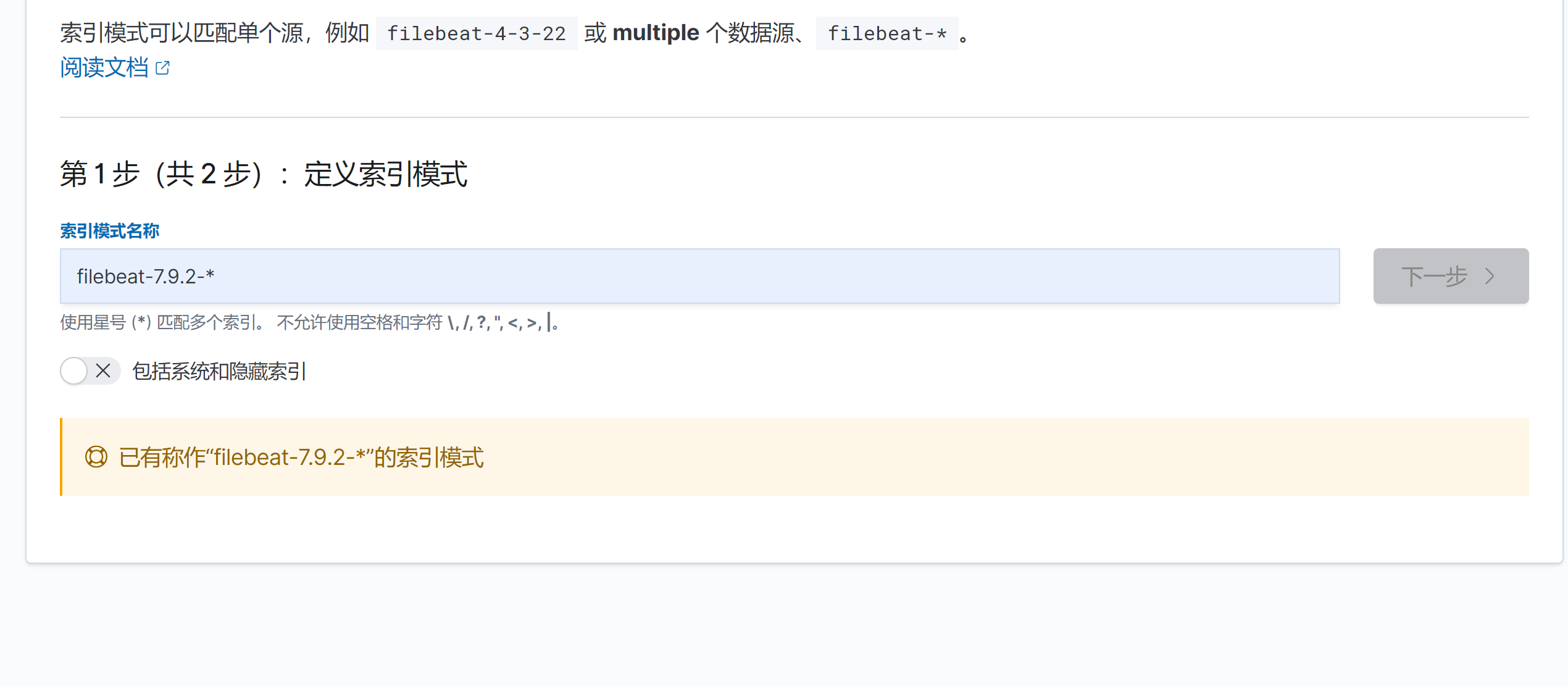

- 点击创建索引模式

- 正则匹配索引名称(我这里是之前创建过)

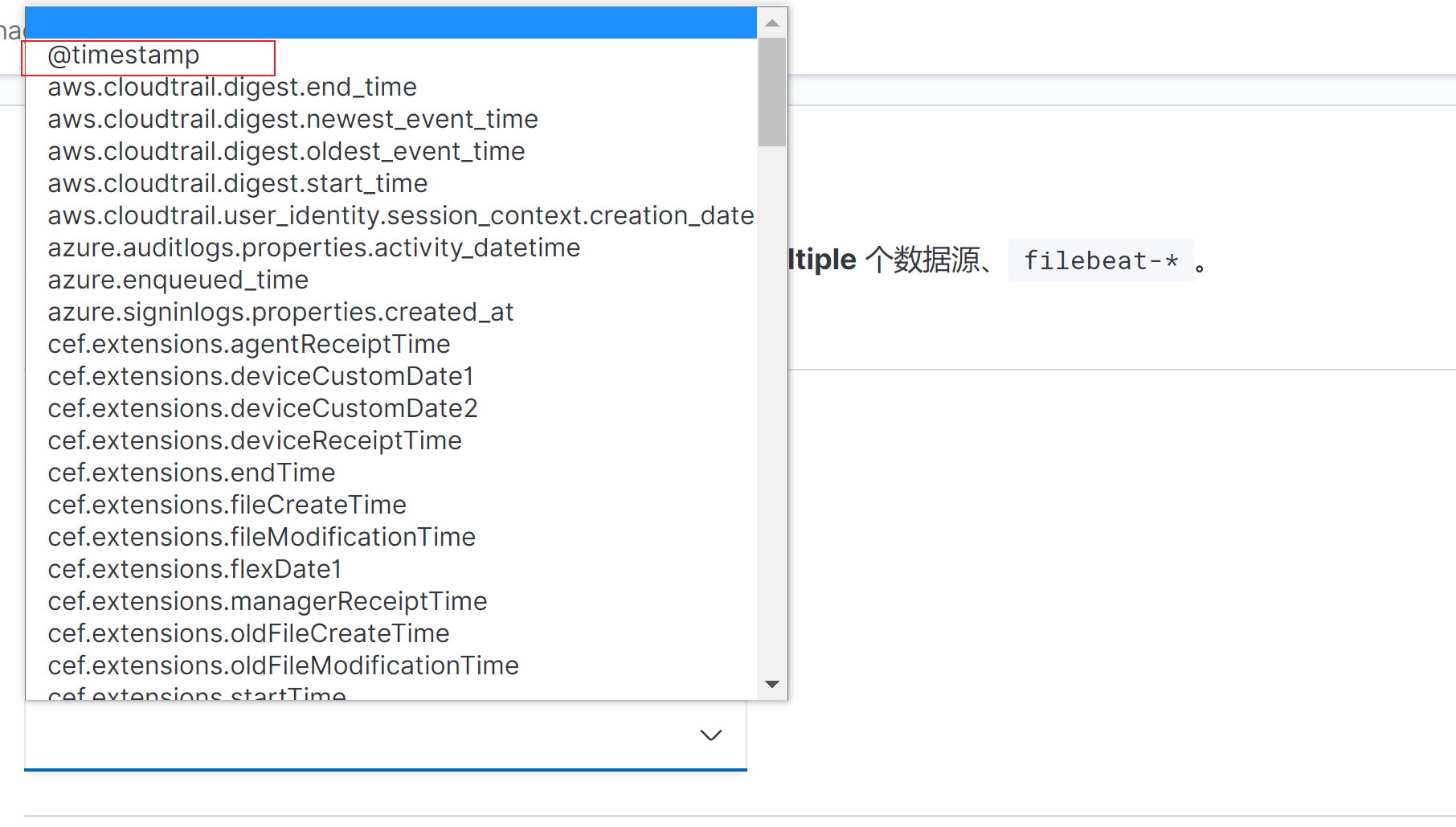

- 时间字段选择时间戳

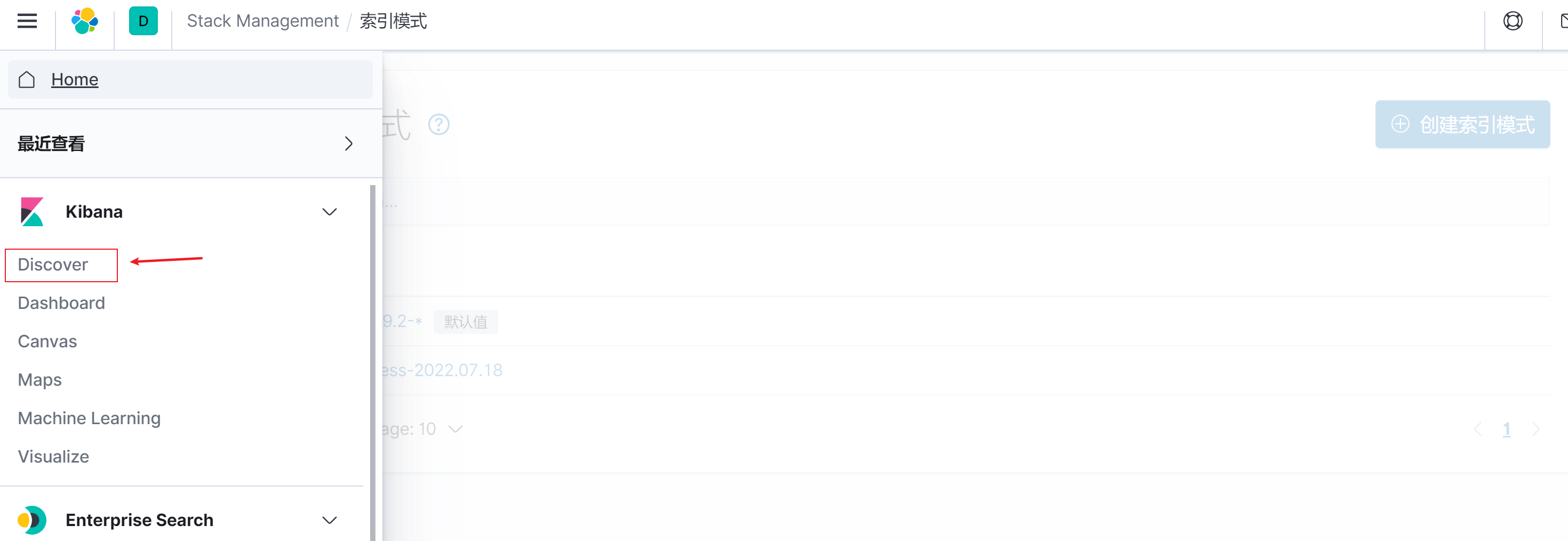

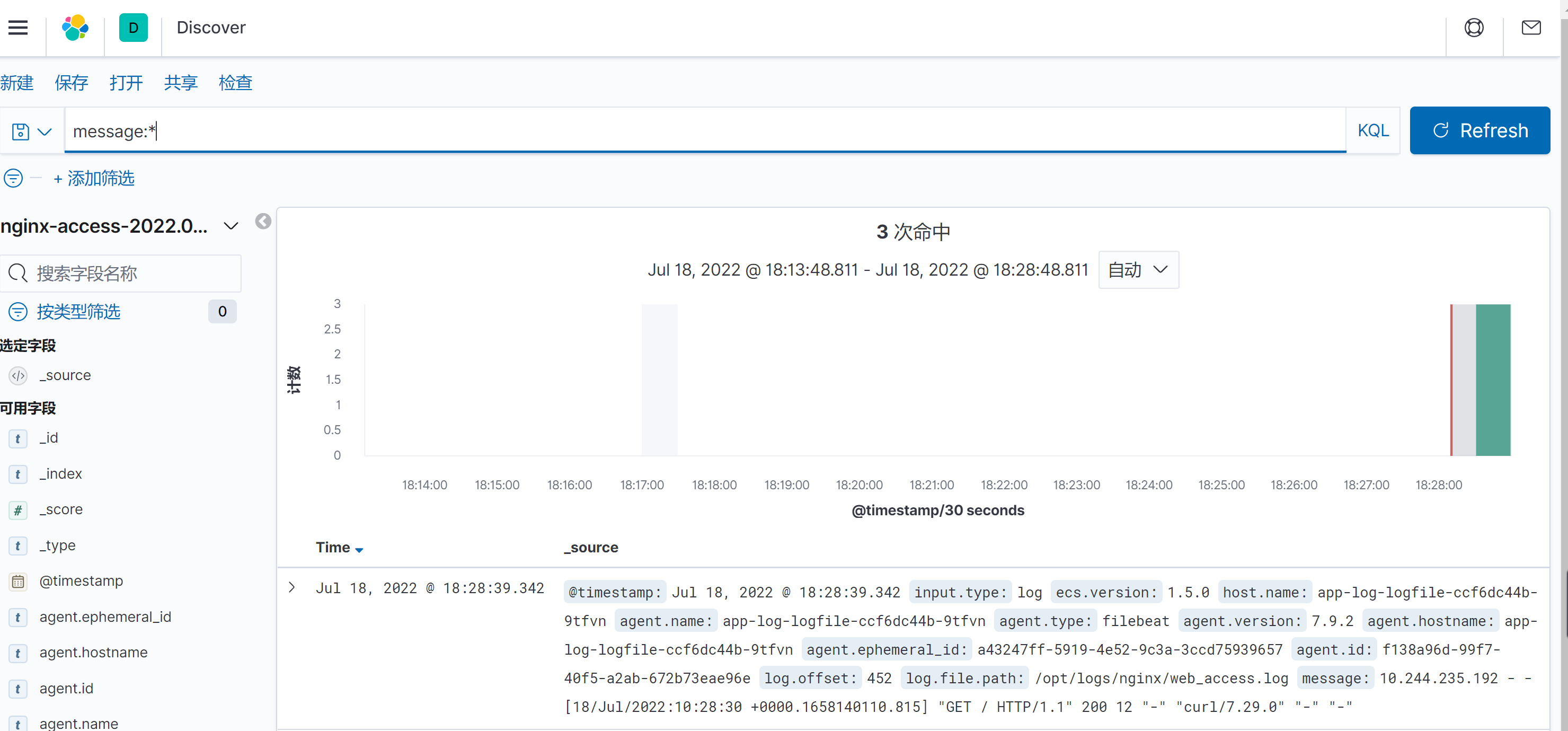

- 创建成功后,搜索查看日志,点击kibana->Discover

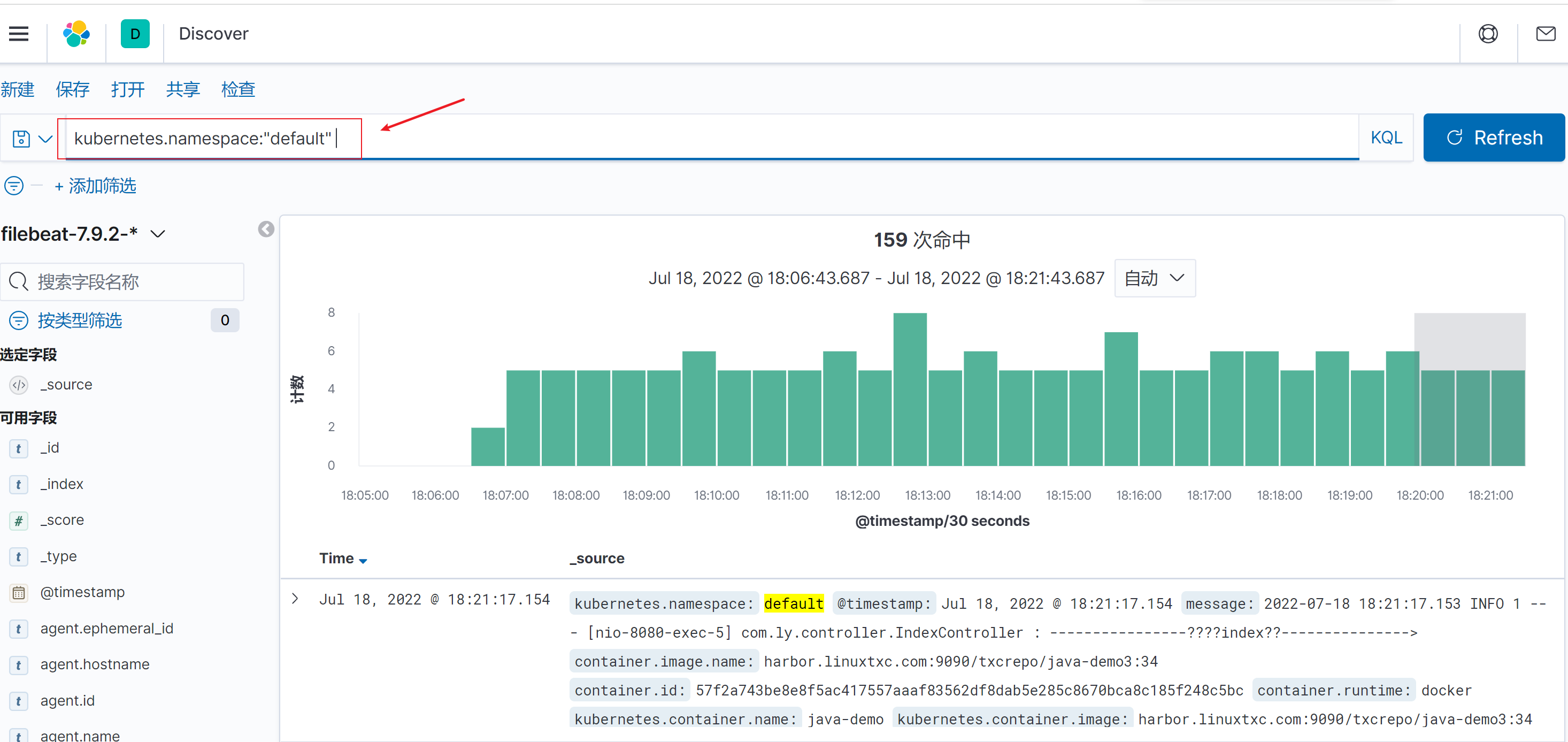

- 通过搜索查询日志

- 创建一个能收集日志文件的应用,app-log-logfile.yaml 内容如下:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-log-logfile
spec:
  replicas: 1
  selector:
    matchLabels:
      project: microservice
      app: nginx-logfile
  template:
    metadata:
      labels:
        project: microservice
        app: nginx-logfile
    spec:
      imagePullSecrets:
      - name: harbor-key-secret
      containers:
      # Application container
      - name: nginx
        image: harbor.linuxtxc.com:9090/txcrepo/alpine-nginx:1.19.6
        # Mount the shared volume at the log directory
        volumeMounts:
        - name: nginx-logs
          mountPath: /opt/logs/nginx
      # Log collector container
      - name: filebeat
        image: elastic/filebeat:7.9.2
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            memory: 500Mi
        securityContext:
          runAsUser: 0
        volumeMounts:
        # Mount the filebeat configuration file
        - name: filebeat-config
          mountPath: /etc/filebeat.yml
          subPath: filebeat.yml
        # Mount the shared volume at the log directory
        - name: nginx-logs
          mountPath: /opt/logs/nginx
      # Volume that shares the log directory
      volumes:
      - name: nginx-logs
        emptyDir: {}
      - name: filebeat-config
        configMap:
          name: filebeat-nginx-config
---
apiVersion: v1
kind: Service
metadata:
  name: app-log-logfile
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    project: microservice
    app: nginx-logfile
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-nginx-config
data:
  # The configuration file is stored in the ConfigMap
  filebeat.yml: |-
    filebeat.inputs:
      - type: log
        paths:
          - /opt/logs/nginx/*.log
        # tags: ["access"]
        fields_under_root: true
        fields:
          project: microservice
          app: nginx
    setup.ilm.enabled: false
    setup.template.name: "nginx-access"
    setup.template.pattern: "nginx-access-*"
    output.elasticsearch:
      hosts: ['elasticsearch.ops:9200']
      index: "nginx-access-%{+yyyy.MM.dd}"
```

- The access logs look like this
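The index can also be checked from the command line. The sketch below assumes GNU `date` and that `elasticsearch.ops:9200` (the host used in the Filebeat output above) is reachable, which normally means running it from a Pod inside the cluster or through a port-forward. The function names and the `CURL` override are illustrative.

```shell
#!/usr/bin/env bash
CURL=${CURL:-curl}

# Name of the daily index Filebeat writes for a given date (default: today),
# following the pattern nginx-access-%{+yyyy.MM.dd} from the ConfigMap.
nginx_index_for() {
  date -d "${1:-today}" +nginx-access-%Y.%m.%d
}

# List all nginx-access-* indices through the Elasticsearch _cat API.
list_nginx_indices() {
  local es=${1:-elasticsearch.ops:9200}
  "$CURL" -s "http://${es}/_cat/indices/nginx-access-*?v"
}
```

For example, `nginx_index_for 2022-03-05` prints `nginx-access-2022.03.05`, and `list_nginx_indices` shows whether that index exists and how many documents it holds.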

---

#### 14. Building a highly available Kubernetes cluster

| Hostname | IP address | Installed software |
| ------------ | ----------------------------------- | ------------------- |
| k8s-master01 | 192.168.1.211 (VIP: 192.168.1.200) | HAProxy, keepalived |
| k8s-master02 | 192.168.1.15 (VIP: 192.168.1.200) | HAProxy, keepalived |
| k8s-master03 | 192.168.1.49 (VIP: 192.168.1.200) | HAProxy, keepalived |

##### 14.1. Installing HAProxy

```shell
# Install on all three nodes
yum -y install haproxy
# Back up the configuration file
mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak
```

- Configuration file

```shell

vim /etc/haproxy/haproxy.cfg

------

global

log /dev/log local0

log /dev/log local1 notice

chroot /var/lib/haproxy

stats socket /var/run/haproxy-admin.sock mode 660 level admin

stats timeout 30s

user haproxy

group haproxy

daemon

nbproc 1

defaults

log global

timeout connect 5000

timeout client 10m

timeout server 10m

listen admin_stats

bind 0.0.0.0:10080

mode http

log 127.0.0.1 local0 err

stats refresh 30s

stats uri /status

stats realm welcome login\ Haproxy

stats auth admin:123456

stats hide-version

stats admin if TRUE

listen kube-master

bind 192.168.1.200:16443

mode tcp

option tcplog

balance source

server 192.168.1.211 192.168.1.211:6443 check inter 2000 fall 2 rise 2 weight 1

server 192.168.1.15 192.168.1.15:6443 check inter 2000 fall 2 rise 2 weight 1

server 192.168.1.49 192.168.1.49:6443 check inter 2000 fall 2 rise 2 weight 1

```

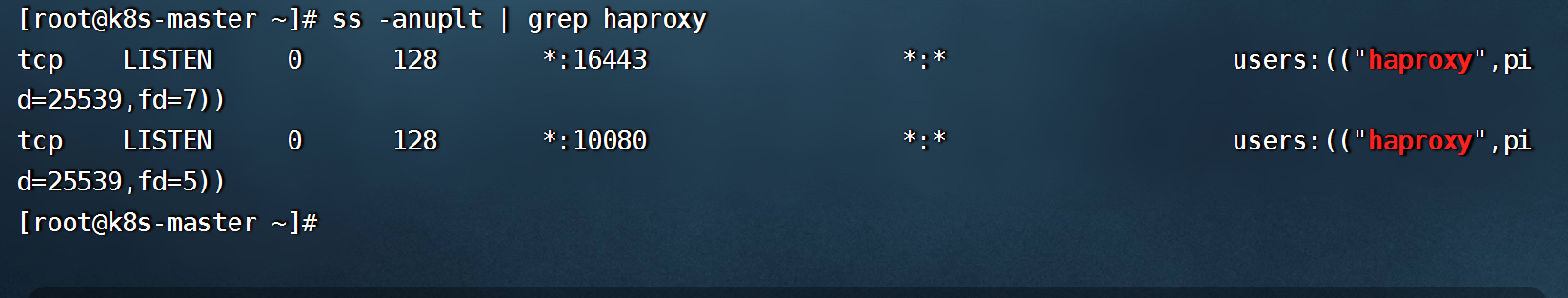

- Start the service and enable it at boot

```shell

systemctl enable haproxy && systemctl restart haproxy

netstat -lnpt|grep haproxy

```

##### 14.2. Installing keepalived

```shell
yum -y install keepalived
# Back up the configuration file
mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
```

- Edit the configuration file: vim /etc/keepalived/keepalived.conf

- k8s-master01

```shell
global_defs {
    router_id k8s-master01
    script_user root
    enable_script_security
}

vrrp_script check-haproxy {
    # Check whether haproxy on this node is healthy; on failure the priority drops by 3
    script "/opt/scripts/haproxy_check.sh"
    interval 5                # seconds between script runs
    weight -3
}

vrrp_instance VI_1 {
    state MASTER
    # Interface that carries the VIP
    interface eth0
    dont_track_primary
    virtual_router_id 88      # group ID, identical within the group
    priority 100              # priority, master higher than backups
    advert_int 3              # VRRP advertisement interval
    authentication {
        auth_type PASS        # authentication type
        auth_pass 1111
    }
    # Health-check script
    track_script {
        check-haproxy
    }
    # Virtual IP address
    virtual_ipaddress {
        192.168.1.200/24
    }
}
```

- k8s-master02

```shell
global_defs {
    router_id k8s-master02
    script_user root
    enable_script_security
}

vrrp_script check-haproxy {
    # Check whether haproxy on this node is healthy; on failure the priority drops by 3
    script "/opt/scripts/haproxy_check.sh"
    interval 5                # seconds between script runs
    weight -3                 # a failed check lowers the priority by 3
}

vrrp_instance VI_1 {
    state BACKUP
    # Interface that carries the VIP
    interface eth0
    dont_track_primary
    virtual_router_id 88      # group ID, identical within the group
    priority 95               # priority, master higher than backups
    advert_int 3              # VRRP advertisement interval
    authentication {
        auth_type PASS        # authentication type
        auth_pass 1111
    }
    # Health-check script
    track_script {
        check-haproxy
    }
    # Virtual IP address
    virtual_ipaddress {
        192.168.1.200/24
    }
}
```

- k8s-master03

```shell
global_defs {
    router_id k8s-master03
    script_user root
    enable_script_security
}

vrrp_script check-haproxy {
    # Check whether haproxy on this node is healthy; on failure the priority drops by 3
    script "/opt/scripts/haproxy_check.sh"
    interval 5                # seconds between script runs
    weight -3                 # a failed check lowers the priority by 3
}

vrrp_instance VI_1 {
    state BACKUP
    # Interface that carries the VIP
    interface eth0
    dont_track_primary
    virtual_router_id 88      # group ID, identical within the group
    priority 90               # priority, master higher than backups
    advert_int 3              # VRRP advertisement interval
    authentication {
        auth_type PASS        # authentication type
        auth_pass 1111
    }
    # Health-check script
    track_script {
        check-haproxy
    }
    # Virtual IP address
    virtual_ipaddress {
        192.168.1.200/24
    }
}
```
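It is worth working through the priority arithmetic for these three configurations. As I understand keepalived's tracking rules, a negative `weight` is added to the priority while the script fails, and a positive one is added while it succeeds. The function below is a simplified model of that rule, not keepalived code, and its name is made up.

```shell
#!/usr/bin/env bash
# Simplified model of how a tracked script changes a node's VRRP priority.
# healthy is "yes" when the check script succeeds.
effective_priority() {
  local base=$1 weight=$2 healthy=$3
  if [ "$weight" -lt 0 ] && [ "$healthy" != yes ]; then
    echo $(( base + weight ))   # failing check: negative weight lowers priority
  elif [ "$weight" -gt 0 ] && [ "$healthy" = yes ]; then
    echo $(( base + weight ))   # passing check: positive weight raises priority
  else
    echo "$base"
  fi
}

# master01 with haproxy down versus a healthy master02
m1=$(effective_priority 100 -3 no)
m2=$(effective_priority 95 -3 yes)
echo "master01=$m1 master02=$m2"
```

With the values above, a failing master01 drops to 97, which is still higher than master02's 95, so the weight alone would not move the VIP. That is why the check script also stops keepalived when haproxy cannot be restarted. A weight below -5 (for example -6, giving 94) would be enough to trigger failover on its own.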

- vim /opt/scripts/haproxy_check.sh (remember to make the check script executable)

```shell
#!/bin/bash
# Make sure killall (from psmisc) is available
if ! rpm -q psmisc &> /dev/null; then
    yum -y install psmisc &> /dev/null
fi

# If haproxy is not running, try to restart it once
if ! killall -0 haproxy &> /dev/null; then
    systemctl restart haproxy &> /dev/null
    sleep 2
    # Still not running: stop keepalived so the VIP moves to another node
    if ! killall -0 haproxy &> /dev/null; then
        systemctl stop keepalived
    fi
fi
```

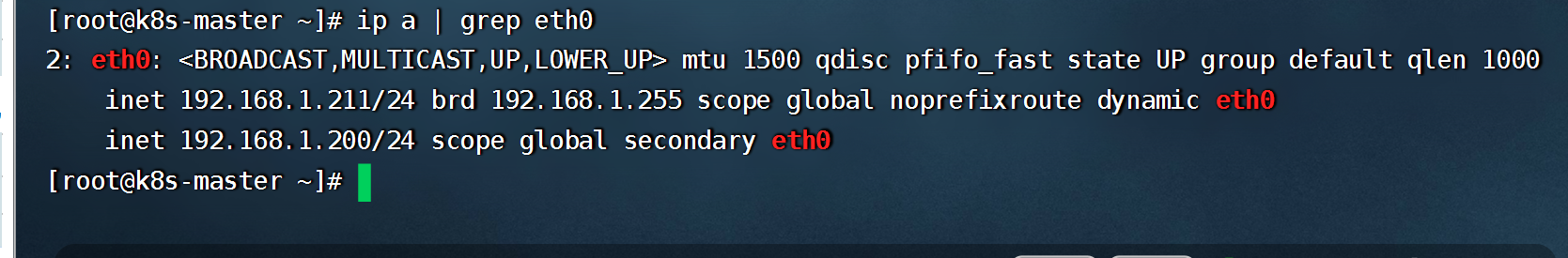

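- Start the service and enable it at boot

```shell
systemctl enable keepalived && systemctl start keepalived
# Check the virtual IP (on cloud servers the VIP may have to be bound in the cloud console)
ip a | grep eth0
```

##### 14.3. Initializing the Kubernetes cluster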

```shell
kubeadm init --kubernetes-version=1.23.0 \
--apiserver-advertise-address=192.168.1.211 \
--control-plane-endpoint=192.168.1.200 \
--apiserver-bind-port=6443 \
--service-cidr=10.64.0.0/24 \
--pod-network-cidr=10.244.0.0/16 \
--image-repository registry.aliyuncs.com/google_containers
# --control-plane-endpoint is the virtual IP (VIP)
# --service-dns-domain=cluster.local can be added to set the cluster DNS domain suffix
```

- Join the other master nodes

```shell
kubeadm join 192.168.1.200:6443 --token mxn459.46vcmjrek2d7u64q --discovery-token-ca-cert-hash sha256:4799994a76e37e778187e1caad62831aa8eeb0d63d64dd22b16f4c7fce72d94f --control-plane --certificate-key cfe21d65a43b65cb975d93fa937c364aac39a6c3e58b50930351422e066e92b0
# --control-plane joins the node as a master; --certificate-key is the key used to decrypt the control-plane certificates uploaded to the cluster, and can be obtained with "kubeadm init phase upload-certs --upload-certs"
# Create the kubeconfig file
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
```
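When the original join command has been lost or has expired, it can be rebuilt on an existing master from the two kubeadm commands mentioned in these notes. The helper below is a sketch: the function name and the `KUBEADM` override are illustrative, and it assumes the certificate key is the last line printed by `upload-certs`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a fresh join command for an additional master.
KUBEADM=${KUBEADM:-kubeadm}

control_plane_join_command() {
  local join key
  # Worker-style join command with a new bootstrap token
  join=$("$KUBEADM" token create --print-join-command) || return 1
  # Re-upload the control-plane certificates; the key is the last output line
  key=$("$KUBEADM" init phase upload-certs --upload-certs | tail -n 1) || return 1
  printf '%s --control-plane --certificate-key %s\n' "$join" "$key"
}
```

Run `control_plane_join_command` as root on a working master and execute the printed line on the node that should join.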

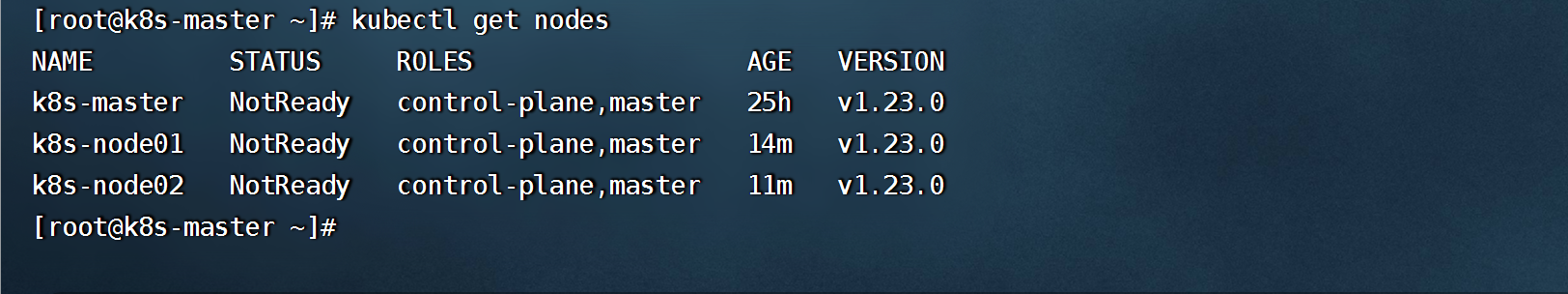

- 查看node(NotReady,因为未安装网络组件)

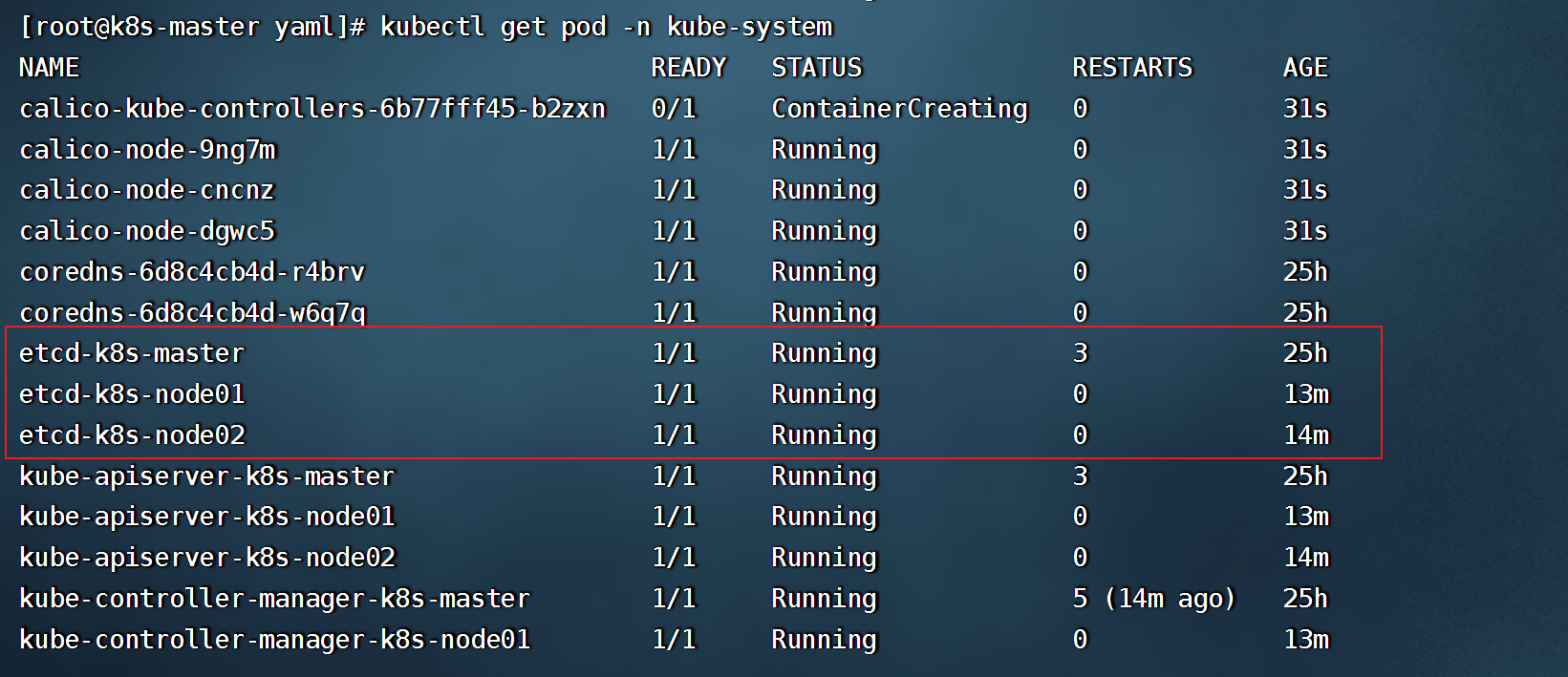

- 安装好网络组件后,查看各组件pod是否正常

- 加入其他node节点

```shell

#查看将节点加入集群的命令

#kubeadm token create --print-join-command

kubeadm join 192.168.1.200:6443 --token mxn459.46vcmjrek2d7u64q --discovery-token-ca-cert-hash sha256:4799994a76e37e778187e1caad62831aa8eeb0d63d64dd22b16f4c7fce72d94f

```

##### 14.4. Testing keepalived VIP failover

- Edit haproxy.cfg

```shell
# Change the listening port to one that is already in use, then restart haproxy
listen kube-master
bind 192.168.1.200:6443
mode tcp
option tcplog
balance source
```

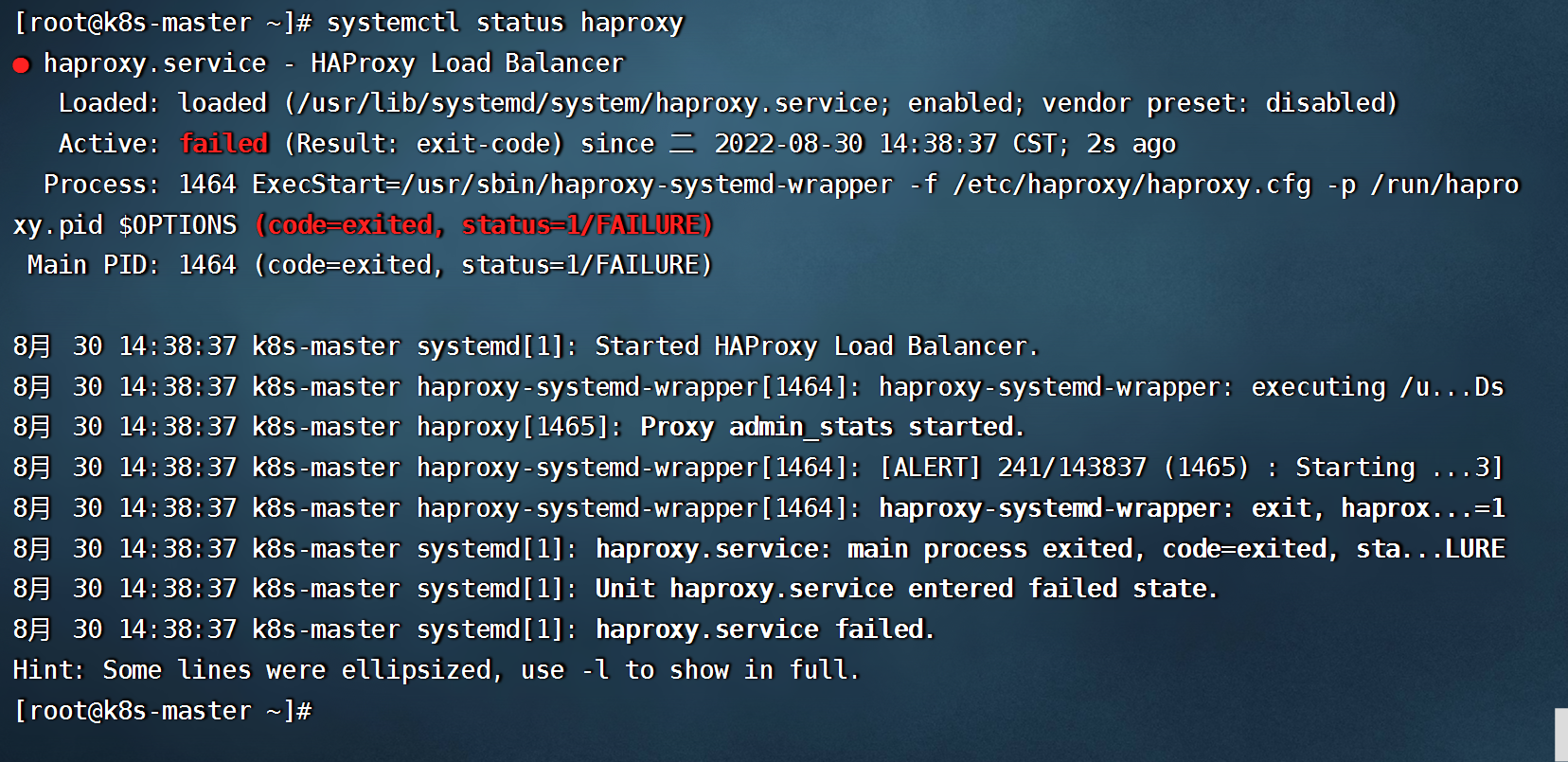

- Check the haproxy service status

```shell

systemctl status haproxy

```

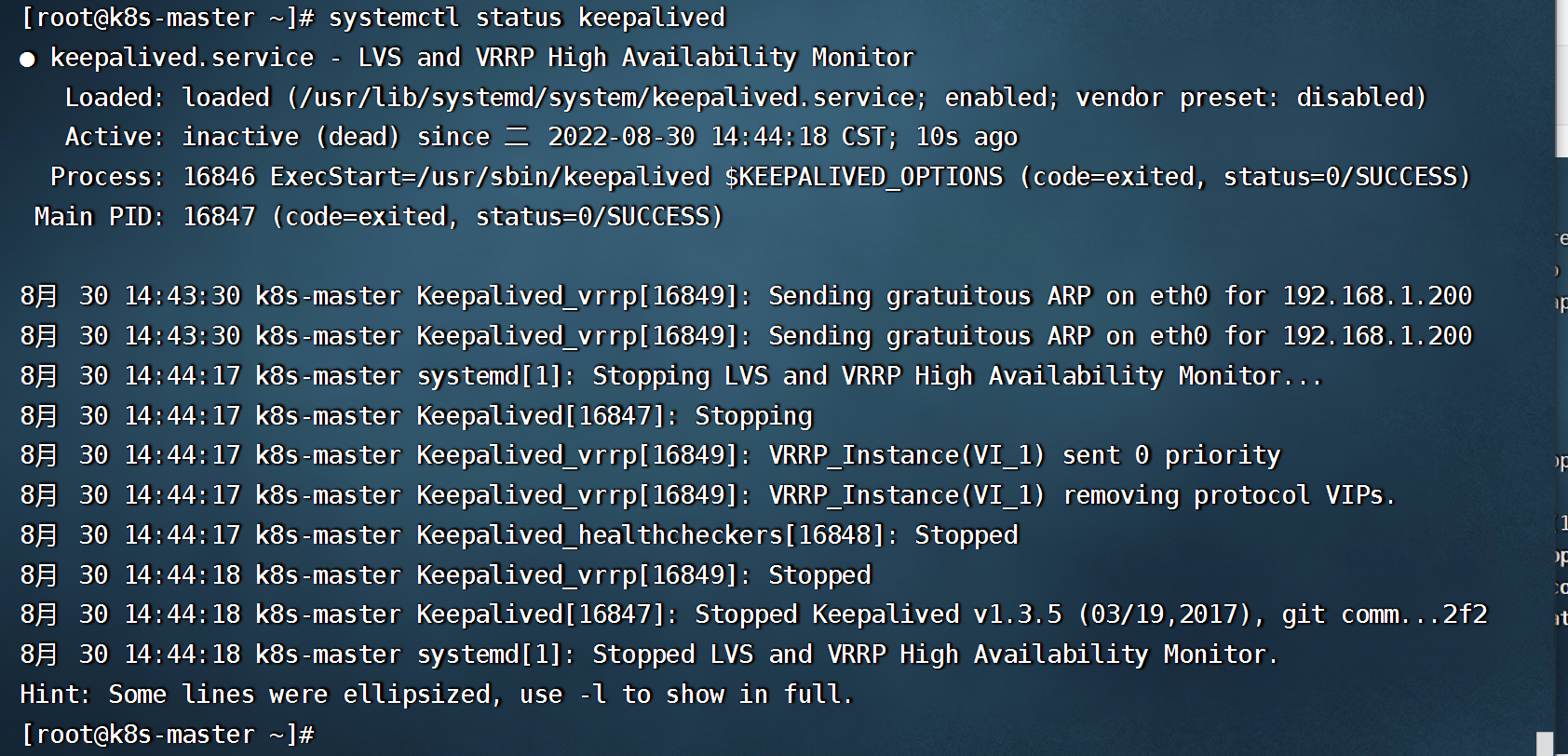

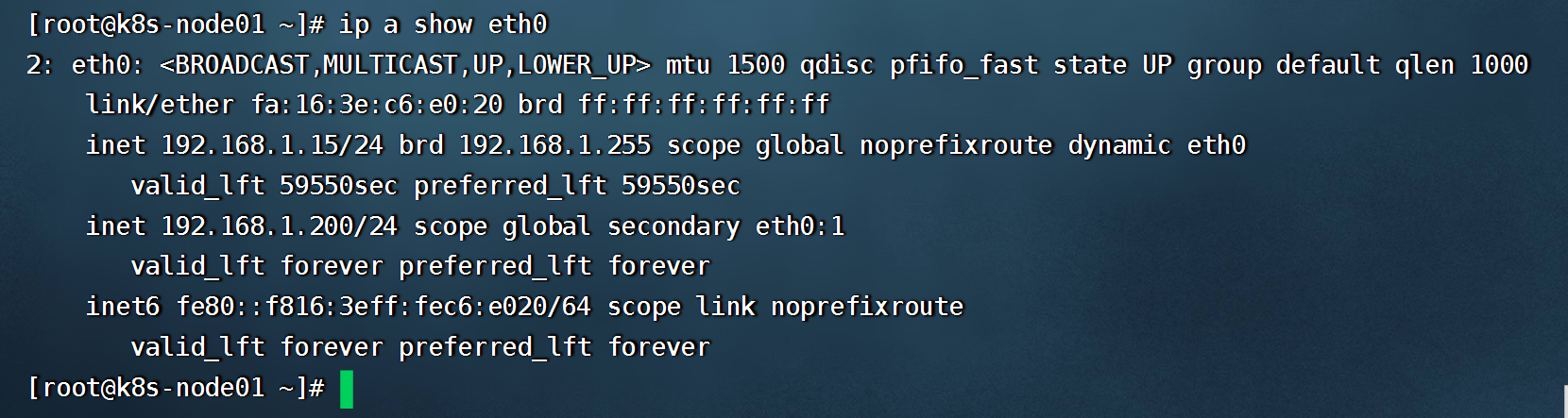

- 等待一下查看keepalived状态

```shell

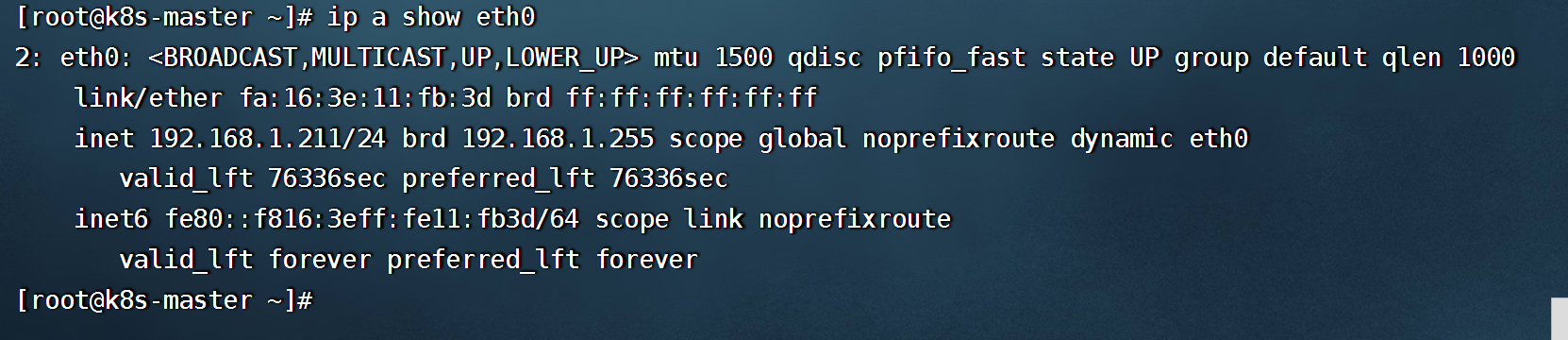

#查看虚拟VIP,

ip a show eth0

```
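To see quickly which master currently holds the VIP, the same `ip` output can be checked in a script. This is a sketch with made-up names (`holds_vip`, `IP_CMD`); it assumes the interface is eth0 as in the keepalived configuration above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if this node currently owns the given VIP.
IP_CMD=${IP_CMD:-ip}

holds_vip() {
  local vip=$1 iface=${2:-eth0}
  # -o prints one address per line; match " <vip>/" so 192.168.1.20 does not
  # match 192.168.1.200.
  "$IP_CMD" -4 -o addr show dev "$iface" | grep -qF " ${vip}/"
}
```

For example, `holds_vip 192.168.1.200 && echo "VIP is here"` can be run on each master before and after stopping haproxy to watch the address move.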

- Check the other nodes
