---

canonical_url: https://www.scaler.com/topics/difference-between-artificial-intelligence-and-machine-learning/

title: Difference Between Artificial Intelligence and Machine Learning - Scaler Topics

description: Did you know Artificial Intelligence is accomplished with Machine Learning, but both are not the same? Scaler Topics explains the key difference between artificial intelligence and machine learning.

author: Anshika Gupta

category: Machine Learning

amphtml: https://www.scaler.com/topics/difference-between-artificial-intelligence-and-machine-learning/amp/

publish_date: 2021-08-29

---

:::section{.main}

## Overview

Artificial Intelligence (AI) and Machine Learning (ML) have been discussed for decades and now play a significant part in our lives. Both fields have driven remarkable progress, and their effects show up in everything from simple mobile applications to major business ideas.

Given how much interest student developers are taking in Artificial Intelligence and Machine Learning, the future is likely to see capable leaders in business, healthcare, education, and many other fields.

[Artificial Intelligence](https://invozone.com/ai/hire/) is the intelligence exhibited by systems and machines rather than by the human mind, and Machine Learning is a subset of it. Although the two terms are related, they have different applications and capabilities. This article introduces AI and ML and explains how they differ from each other.

:::

:::section{.main}

## What are Artificial Intelligence and Machine Learning?

### 1. Artificial Intelligence

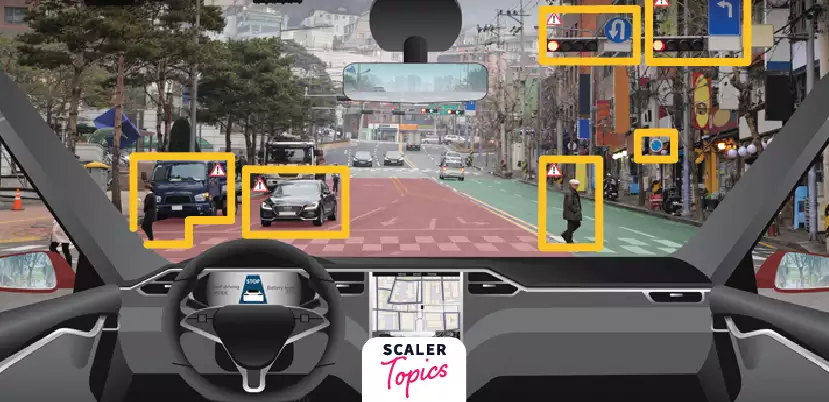

Artificial Intelligence (AI) refers to systems designed to mimic human-like intelligence and reasoning. Unlike conventional programs that execute specific, predefined tasks, AI thrives on flexibility. Its algorithms can learn, adapt, and make decisions based on vast amounts of data. This learning ability differentiates AI from traditional programming; it's about "training" rather than merely "defining" a task. For instance, consider self-driving cars. Such vehicles rely on AI to interpret surroundings, obey traffic rules, and make on-the-spot driving decisions, much like a human driver would. The car's cameras and sensors constantly gather information, while its AI algorithms decide actions like slowing down or stopping, mirroring human judgment in real-time driving scenarios.

### 2. Machine Learning

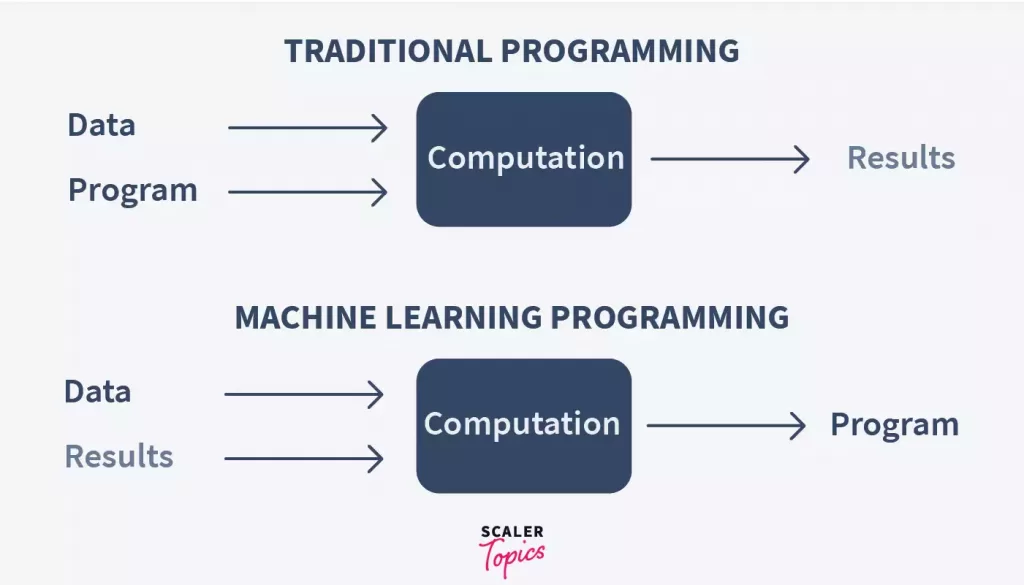

Machine Learning is a subset of Artificial Intelligence that involves training a machine on past experience for specific tasks and applying what it has learned to future tasks. ML uses a variety of algorithms and techniques to analyze data and detect patterns in it. The detected patterns are then used to perform similar tasks.

Think of ML as an algorithm that analyzes data, draws inferences and detects patterns, and then applies those inferences to unseen (new) data to predict an outcome. (Algorithms are discussed further later in the article.) That leaves the ‘Learning’ part.

Consider an algorithm that finds the smallest of a given set of numbers. It has nothing to learn: it simply compares the numbers and returns an output. Machine Learning algorithms, by contrast, ‘learn’ by adjusting their parameters as they are exposed to large amounts of data.
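
The contrast can be sketched in a few lines of Python. This is a minimal illustration, not a production method: `find_smallest` is a fixed algorithm, while `learn_weight` is a hypothetical helper that adjusts a single parameter `w` by gradient descent so that `w * x` approximates `y`. The data, learning rate, and number of epochs are assumptions chosen for the example.

```python
def find_smallest(numbers):
    """A fixed algorithm: it only compares values, and nothing is learned."""
    smallest = numbers[0]
    for n in numbers[1:]:
        if n < smallest:
            smallest = n
    return smallest


def learn_weight(xs, ys, learning_rate=0.01, epochs=200):
    """A learning algorithm: repeatedly adjusts w so that w * x approximates y."""
    w = 0.0  # the parameter starts with no knowledge of the data
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w
        gradient = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * gradient  # move w in the direction that reduces the error
    return w


print(find_smallest([7, 3, 9, 1]))

# Toy data that follows y = 2 * x (an assumption made for this example)
xs = [1, 2, 3, 4]
ys = [2, 4, 6, 8]
print(round(learn_weight(xs, ys), 2))
```

The first function behaves the same no matter how much data it sees, whereas the value returned by the second depends entirely on the examples it was trained on.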

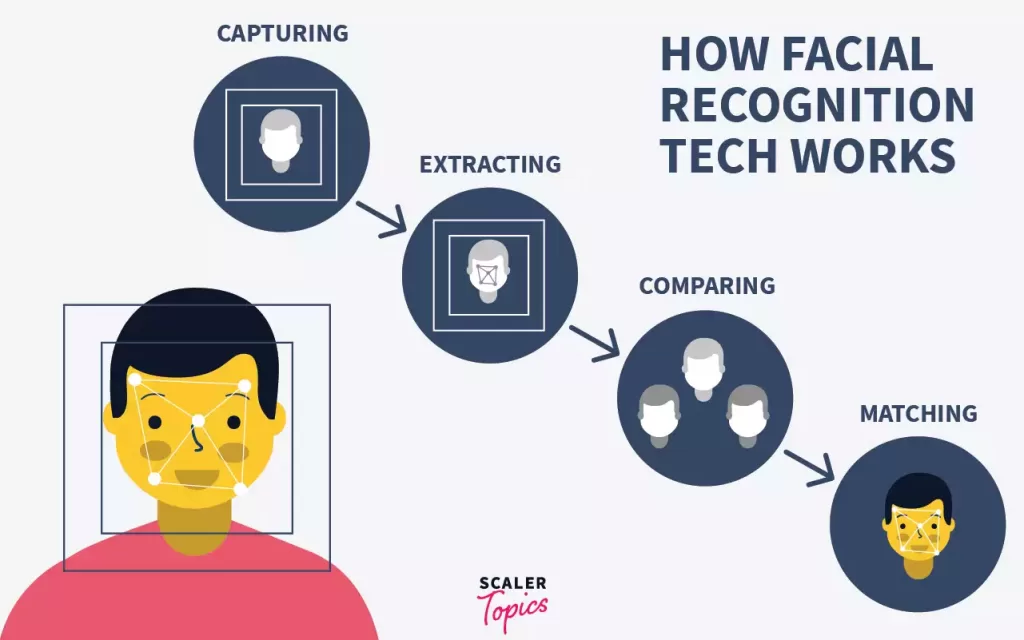

Let us look at one of the best-known examples of Machine Learning: face recognition.

The algorithm learns features from the images that are fed to it and maps them to the names of the people shown. Here the images are the ‘inputs’, and the names of the people are the ‘labels’. After training, if the model is given a new photograph of a person whose other photos were part of the training data, it can identify the person and return their name as the output. Face recognition is used in applications such as attendance systems at schools and offices and social media tagging systems.

This form of training a machine learning model with labeled data is called Supervised Machine Learning, which is of two types:

* Classification

* Regression
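
As a rough sketch of supervised classification, the snippet below uses a one-nearest-neighbor rule, chosen here only because it is short; it is not how real face recognition systems are built. The two-number feature vectors and the names are made up for illustration, standing in for features that a real system would extract from images.

```python
import math


def nearest_neighbor_predict(train_inputs, train_labels, new_input):
    """Return the label of the training input closest to new_input."""
    best_label = None
    best_distance = math.inf
    for features, label in zip(train_inputs, train_labels):
        distance = math.dist(features, new_input)  # Euclidean distance
        if distance < best_distance:
            best_distance = distance
            best_label = label
    return best_label


# Hypothetical labeled data: inputs (feature vectors) paired with labels (names)
train_inputs = [(0.9, 0.1), (0.8, 0.2), (0.1, 0.9), (0.2, 0.8)]
train_labels = ["Asha", "Asha", "Ravi", "Ravi"]

# A new, unseen input is assigned the label of its closest training example
print(nearest_neighbor_predict(train_inputs, train_labels, (0.85, 0.15)))
```

A regression model follows the same input-to-label pattern, except that the label is a number rather than a category.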

The second type of Machine Learning is Unsupervised Machine Learning, where the input data is given without labels and the algorithm analyzes the data to extract patterns and relationships. It is further divided into two types:

* Unsupervised Transformations

* Clustering
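
Clustering can be illustrated with a small k-means sketch on one-dimensional data. The function name `k_means_1d`, the evenly spread starting centers, and the sample values are assumptions made for this example; note that no labels are supplied anywhere.

```python
def k_means_1d(values, k=2, iterations=10):
    """Group unlabeled numbers into k clusters (k must be at least 2)."""
    lo, hi = min(values), max(values)
    # Simplifying assumption: spread the initial centers evenly over the data range
    centers = [lo + i * (hi - lo) / (k - 1) for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Step 1: assign every value to its nearest center
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Step 2: move each center to the mean of the values assigned to it
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters


centers, clusters = k_means_1d([1, 10, 2, 11, 3, 12])
print(centers)
print(clusters)
```

The algorithm discovers the two groups on its own, purely from how close the values are to each other.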

The third type of Machine Learning is Reinforcement Learning, a reward-based trial-and-error method. An agent performs tasks in an environment and improves its actions based on rewards and feedback. What makes this approach interesting is that there is no fixed training dataset, only a fixed set of goals for the agent to achieve. One of the most intriguing examples is online game-playing.
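
The reward-based loop can be sketched with tabular Q-learning, one common reinforcement learning technique. The environment below is a made-up corridor of five positions in which the agent earns a reward only when it reaches the last one; the function name and all hyperparameter values are assumptions for illustration.

```python
import random


def train_corridor_agent(n_states=5, episodes=300, alpha=0.5,
                         gamma=0.9, epsilon=0.2, seed=0):
    """Learn by trial and error to walk right along a corridor to the goal."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    goal = n_states - 1
    # q[state][action] estimates future reward; action 0 = left, 1 = right
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the number of steps per episode
            # Explore at random sometimes (or when undecided), otherwise exploit
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Q-learning update: nudge the estimate toward reward + discounted future value
            best_next = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
            if state == goal:
                break
    return q


q_table = train_corridor_agent()
policy = ["right" if q_table[s][1] > q_table[s][0] else "left" for s in range(4)]
print(policy)
```

No dataset is passed in: the agent generates its own experience by acting, and the reward signal alone shapes which action it prefers in each position.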

Decision trees are widely used algorithms for regression and classification tasks. In the simplest terms, a decision tree is a chain of nested if-else conditions that leads to an answer or decision. What makes the algorithm special is that we do not have to define these conditions explicitly; it learns the decision boundaries (conditions) from the training data.
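
The idea of a learned condition can be shown with a one-level tree, often called a decision stump. This sketch picks the threshold that misclassifies the fewest training examples; full decision tree implementations typically use impurity measures such as Gini and split repeatedly. The function names and the study-hours data are hypothetical.

```python
def learn_stump(values, labels):
    """Find the threshold on one feature that misclassifies the fewest examples."""
    candidates = sorted(set(values))
    best_threshold, best_errors = None, len(values) + 1
    # Try a threshold halfway between each pair of neighboring values
    for low, high in zip(candidates, candidates[1:]):
        threshold = (low + high) / 2
        # Rule under test: predict True when the value is above the threshold
        errors = sum((v > threshold) != label for v, label in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict(threshold, value):
    """The learned condition acts like an if-else that nobody wrote by hand."""
    if value > threshold:
        return True
    else:
        return False


# Hypothetical data: hours studied and whether the student passed
hours = [1, 2, 3, 6, 7, 8]
passed = [False, False, False, True, True, True]

threshold = learn_stump(hours, passed)
print(threshold)
print(predict(threshold, 5))
```

The `if value > threshold` condition is ordinary code, but the threshold itself comes from the data rather than from the programmer.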

:::

:::section{.main}

## Difference Between Artificial Intelligence and Machine Learning

Because Machine Learning is a subset of Artificial Intelligence, the two are often confused, and the terms AI and ML are frequently used interchangeably. Now that the previous section has covered what AI and ML are, let us move on to the difference between the two.

Intuition:

Let us understand the difference with the help of a real-world example. Consider a security surveillance system installed at your house. The system as a whole is an example of an AI system, while its individual components, such as object detection, human identification, and trespassing alerts, are examples of ML algorithms developed to meet the requirements of that AI system.

### The key differences between AI and ML are:

| **Artificial Intelligence** | **Machine Learning** |
|---|---|
| Intelligence exhibited by systems and machines. | A subset of AI training machines to learn patterns from data. |
| Aims to solve complex problems by imitating human intelligence. | Aims for the best possible accuracy for a task. |
| Implies decision-making mimicking human thought. | Uses algorithms to predict outputs based on data. |
| Can encompass a broad range of tasks and actions. | Focuses on specific tasks it's trained for. |
| Seen in security systems, robots, etc. | Seen in recommendation systems, face recognition, etc. |
| Can be rule-based without needing to learn from data. | Always requires data to train and refine algorithms. |
| Driven by solving real-world problems, even without data. | Emerged due to advancements in computing power and availability of large datasets. |
| Operates under a broader concept which might not always require pattern recognition or prediction. | Primarily revolves around pattern recognition and predictions based on given data. |

:::

:::section{.summary}

## Conclusion

* Artificial Intelligence (AI) represents the broader concept of machines being able to mimic human-like tasks, while Machine Learning (ML) is a specialized subset focusing on training machines to learn from data and make predictions.

* AI encompasses a wide range of capabilities including decision-making that imitates human reasoning. ML, on the other hand, primarily revolves around recognizing patterns in data and forecasting outcomes.

* AI has a multitude of applications from security systems to advanced robotics. ML, while a part of AI, shines in specific tasks like recommendation systems and face recognition.

* While AI can be rule-based and might not always need data to function, ML is inherently data-driven, requiring vast amounts of data to train and refine its algorithms.

* AI's goal has been to solve complex problems by imitating human intelligence, irrespective of the approach. ML has emerged more prominently due to the surge in computing power and the availability of large datasets.

:::