# As AI enters healthcare, how do we assure fair treatment for everyone?

Good health is invaluable. But good health is not distributed equally across society.

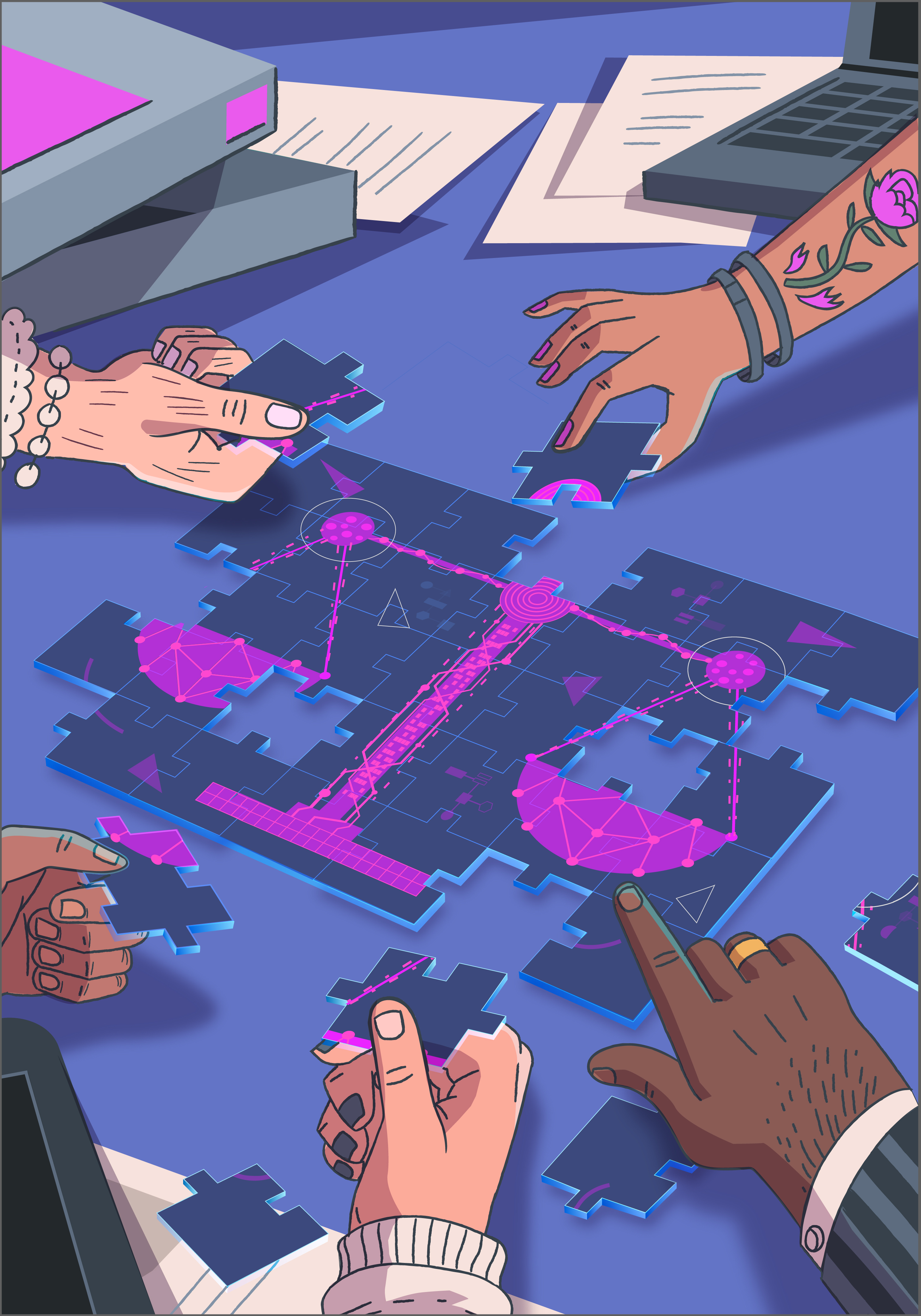

Many of the causes of, or influences on, good health are intertwined with socioeconomic factors. For instance, where we live may affect our cardiovascular health (e.g. through high levels of air pollution). And those of us who were fortunate enough to receive a good education can draw on our health literacy to inform our everyday choices, even if we sometimes fall short of our ambitions. We call such factors *social determinants of health*, in recognition that our individual choices and general constitution are only one piece of the puzzle when it comes to achieving health equity.

Data-driven technologies, such as AI, could increasingly affect how health is distributed throughout society. For example, the projects listed below are among the winners of [NHS England's 'AI in Health and Care Award'](https://transform.england.nhs.uk/ai-lab/ai-lab-programmes/ai-health-and-care-award/ai-health-and-care-award-winners/). They have received funding to work with NHS sites to support the adoption of these technologies and, hopefully, to bring associated benefits to clinical or operational pathways. Note how some of the technologies target a very specific patient group, whereas others will have a broader societal impact:

- DERM ([Skin Analytics Ltd](https://skin-analytics.com/)): leveraging AI in the analysis of images of skin lesions, distinguishing between cancerous, pre-cancerous and benign lesions.

- eHub ([eConsult Health Ltd](https://econsult.net/)): AI for triage and automation of GP e-consultation requests, reducing the staff time needed to manage the system.

- Paige prostate cancer detection tool ([University of Oxford](https://www.paige.ai/)): using AI-based diagnostic software to support the interpretation of pathology sample images, to more efficiently detect, grade and quantify cancer in prostate biopsies.

- Galen Breast ([Ibex Medical Analytics Ltd](https://ibex-ai.com/galen-breast/)): using AI to examine breast tissue samples to detect any abnormal cells and order further tests that might be needed.

It should be clear from this list that the benefits (and risks) of research and development are not equally distributed across society. On the one hand, choosing to develop technologies that target a specific condition may benefit only a sub-group of society as a whole (e.g. people with prostate cancer). On the other hand, even broadly applicable technologies may affect populations differently (e.g. AI for triage and automation of GP e-consultations may benefit those with higher levels of digital literacy or better access to technology).

This raises a question:

> How should we assess and assure the fairness of these individual technologies, as well as the broader digital healthcare ecosystem in which they are situated?

## What does fairness mean in the context of data-driven technologies?

Defining fairness in the context of data-driven technologies, such as AI, is challenging. We could, for instance, focus on addressing the sources of bias that exist prior to and throughout a project's lifecycle (e.g. limited representation in a training dataset). Other aspects include:

- Inclusivity and diversity of opinions and perspectives during stakeholder engagement (e.g. who has been consulted during the project's design and development)

- Non-discrimination (e.g. are specific sub-groups of a patient population disproportionately disadvantaged by errors or safety concerns with the system)

- Equitable impact of the system (e.g. are specific healthcare professionals unfairly burdened by the deployment of the system)
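To make the non-discrimination question a little more concrete, one common check is to compare a system's error rates across patient sub-groups. The sketch below is a minimal illustration only, assuming binary labels (1 = condition present) and a hypothetical list of `(group, true_label, predicted_label)` records; the function names and data are invented for this example and are not part of the TEA platform or the report. It computes the false negative rate (the share of missed cases) for each group and the largest gap between groups.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Return {group: false negative rate} for (group, y_true, y_pred) records.

    Hypothetical helper: labels are assumed to be 0/1, where 1 means the
    condition is present. Groups with no positive cases are omitted.
    """
    positives = defaultdict(int)  # number of true positive cases per group
    missed = defaultdict(int)     # positive cases the system failed to flag
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 0:
                missed[group] += 1
    return {g: missed[g] / positives[g] for g in positives}


def max_disparity(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Invented example data for two patient sub-groups, "A" and "B".
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 0), ("B", 1, 1), ("B", 0, 1),
]

rates = false_negative_rate_by_group(records)
print({g: round(r, 2) for g, r in rates.items()})  # {'A': 0.33, 'B': 0.67}
print(round(max_disparity(rates), 2))              # 0.33
```

A single metric like this is, at most, one piece of evidence: which error type matters, which groups to compare, and what size of gap is acceptable are exactly the kinds of contextual judgements that still need to be justified to stakeholders.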

So, in addition to defining fairness, it is also important to clearly demonstrate how this goal has been realised (or operationalised) across a project's lifecycle, to provide assurance to stakeholders and affected users that the design, development, deployment, and use of digital health technology are fair and equitable.

Ethical principles, such as fairness, have been widely recognised as vital to ensuring the responsible development and use of data-driven technologies. Organisations and groups that have articulated fairness or equity as a key goal for the trustworthy and ethical governance of data-driven technologies include (but are not limited to):

- International organisations and governments (e.g. [Organisation for Economic Co-operation and Development](https://oecd.ai/en/ai-principles), [UK Government](https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach/white-paper), [US Government](https://www.whitehouse.gov/briefing-room/statements-releases/2023/10/30/fact-sheet-president-biden-issues-executive-order-on-safe-secure-and-trustworthy-artificial-intelligence/))

- Health-related organisations (e.g. [NHS England](https://transform.england.nhs.uk/ai-lab/ai-lab-programmes/ethics/#striving), [World Health Organisation](https://iris.who.int/bitstream/handle/10665/341996/9789240029200-eng.pdf?sequence=1))

- Standards bodies (e.g. [National Institute of Standards and Technology](https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf), [International Organization for Standardization](https://www.iso.org/artificial-intelligence/responsible-ai-ethics))

- Commercial organisations (e.g. [Microsoft](https://blogs.microsoft.com/wp-content/uploads/prod/sites/5/2022/06/Microsoft-Responsible-AI-Standard-v2-General-Requirements-3.pdf), [IBM](https://aif360.res.ibm.com/))

- Research and civil society organisations (e.g. [Global Partnership on AI](https://gpai.ai/projects/responsible-ai/), Pollicy, [Algorithmic Justice League](https://www.ajl.org/spotlight-documentary-coded-bias), [Ada Lovelace Institute](https://www.adalovelaceinstitute.org/about/))

So, with all this attention paid to fairness and equity, surely there is consensus on how such a goal should be assured, right?

Not quite.

What is needed is an open, structured, and accessible approach for clarifying the multifaceted nature of goals such as fairness.

The Trustworthy and Ethical Assurance of Digital Health and Healthcare project has recently published a report that introduces such a methodology, known as _Trustworthy and Ethical Assurance_.

## Trustworthy and Ethical Assurance of Digital Health and Healthcare

The Trustworthy and Ethical Assurance (TEA) project is a collaboration between the Alan Turing Institute, the Centre for Assuring Autonomy (University of York), and the Responsible Technology Adoption Unit (Department for Science, Innovation and Technology).

At the centre of this collaboration is an open-source and community-centred platform, the TEA platform, which has been developed to guide teams and organisations in crafting well-reasoned ethical arguments. These arguments, known as *assurance cases*, can help foster community engagement and sustainable practices within teams, build trust and transparency among stakeholders and users, and support ongoing regulation and policy.

A recent report, produced by the team, introduces and motivates the TEA platform by demonstrating its utility within the domain of digital health and healthcare, supported by two real-world case studies of digital health technologies.

One of these case studies is a medical imaging platform, known as CemrgApp, with custom image processing and computer vision toolkits for applying statistical, machine learning, and simulation approaches to cardiovascular data. In the report, we present and explain a partial assurance case that sets out the types of claims and evidence that can be used to justify and assure ethical principles such as fairness and health equity.


## Next Steps

A key goal of the project and report was to establish an agenda and set of next steps for the TEA platform, grounded in a community-centred approach.

In February 2024, the project team was awarded funding from UKRI's BRAID programme to continue research and development of the platform, with a specific focus on digital twins.

In addition to taking forward some of the key recommendations made in the report (e.g. developing multi-disciplinary communities of practice), this new project will also allow us to work more closely with a wider range of project teams that care deeply about ensuring their work is responsible, trustworthy, and ethical.

As we stand on the cusp of a healthcare transformation powered by digital tools and data-driven insights, the imperative to navigate this new terrain with a compass calibrated to health equity and justice has never been more critical. We hope the TEA platform can help people navigate this journey in a more open, collaborative, and, ultimately, equitable manner.

### Acknowledgements and Funding Statements

The Trustworthy and Ethical Assurance of Digital Health and Healthcare project and report were funded by the Assuring Autonomy International Programme, a partnership between Lloyd’s Register Foundation and the University of York. You can read more about the project here: https://www.turing.ac.uk/research/research-projects/trustworthy-and-ethical-assurance-digital-healthcare

The Trustworthy and Ethical Assurance of Digital Health and Healthcare report has been co-produced with the Responsible Technology Adoption Unit, part of the Department for Science, Innovation and Technology, and is based on their '[Introduction to AI Assurance](https://www.gov.uk/government/publications/introduction-to-ai-assurance/introduction-to-ai-assurance)' guidance.

The Trustworthy and Ethical Assurance of Digital Twins (TEA-DT) project is funded by an award from the UKRI’s Arts and Humanities Research Council to Dr Christopher Burr, as part of the BRAID programme. You can read more about the project here: https://www.turing.ac.uk/research/research-projects/trustworthy-and-ethical-assurance-digital-twins-tea-dt