# Natural Language Processing (NLP)

---

## From perception and understanding to generation

#### Much like CV, really, just focused on interaction through different senses 👀 👄

---

# What it does

---

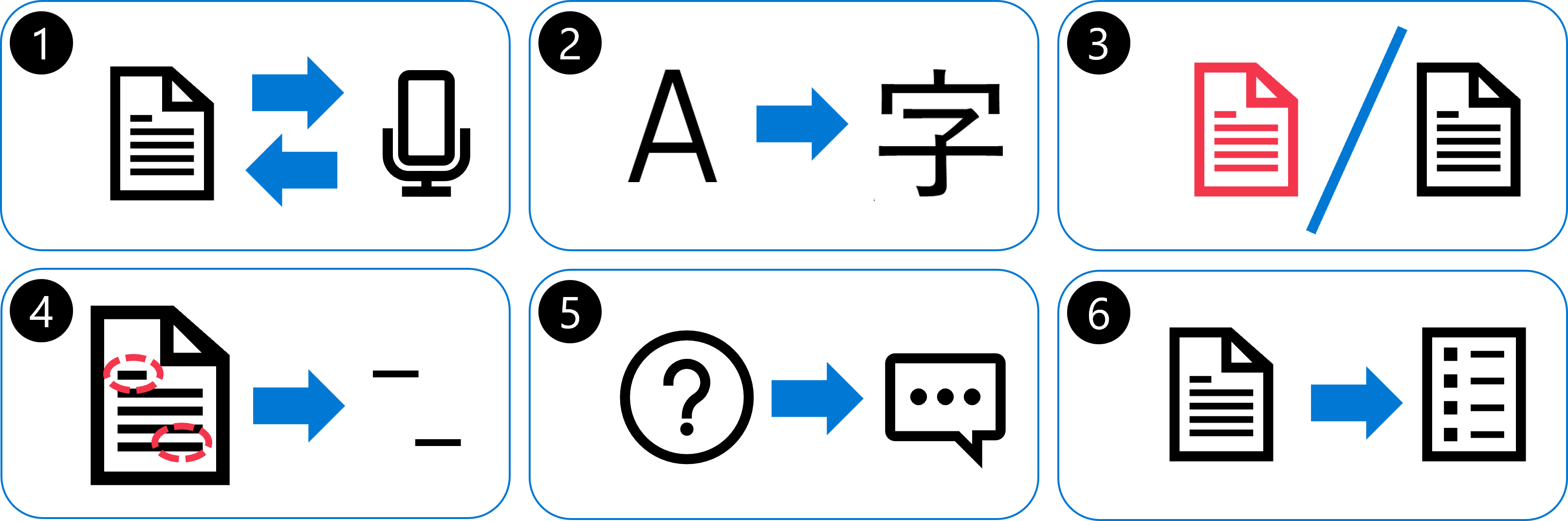

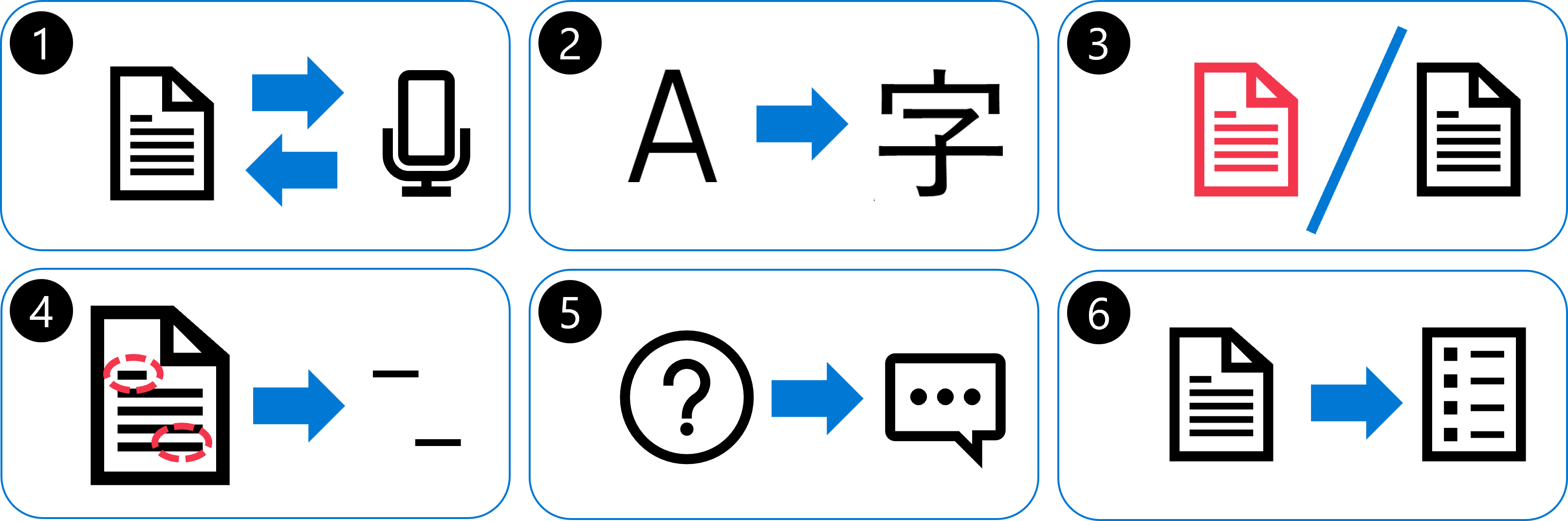

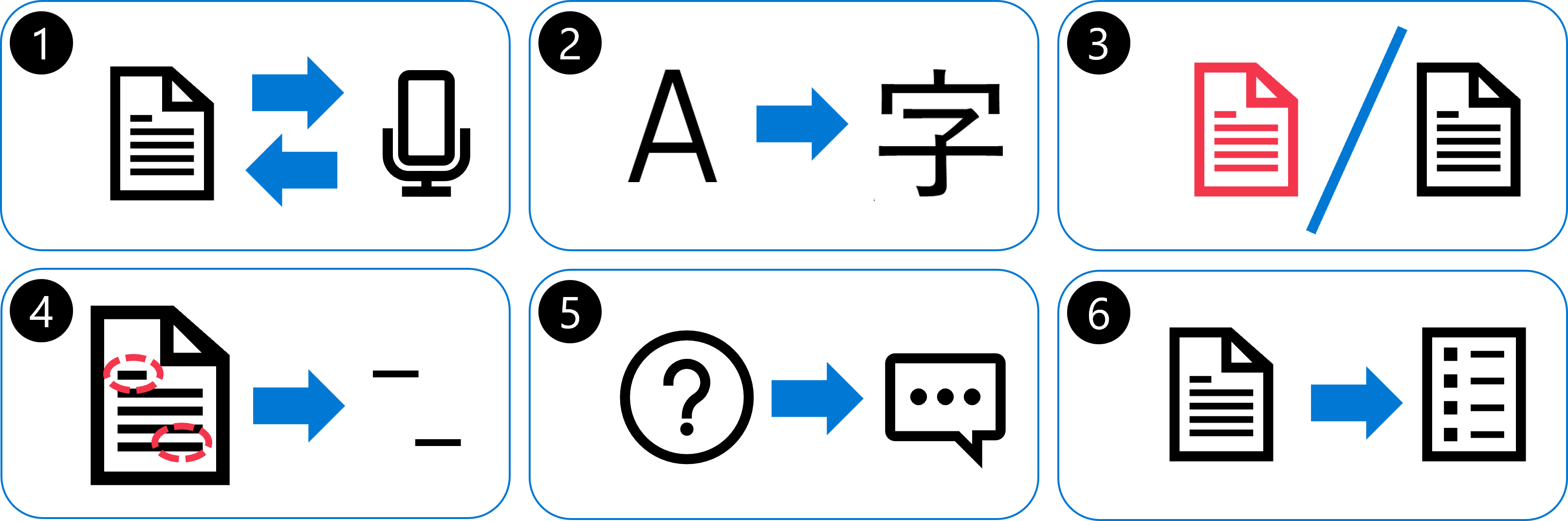

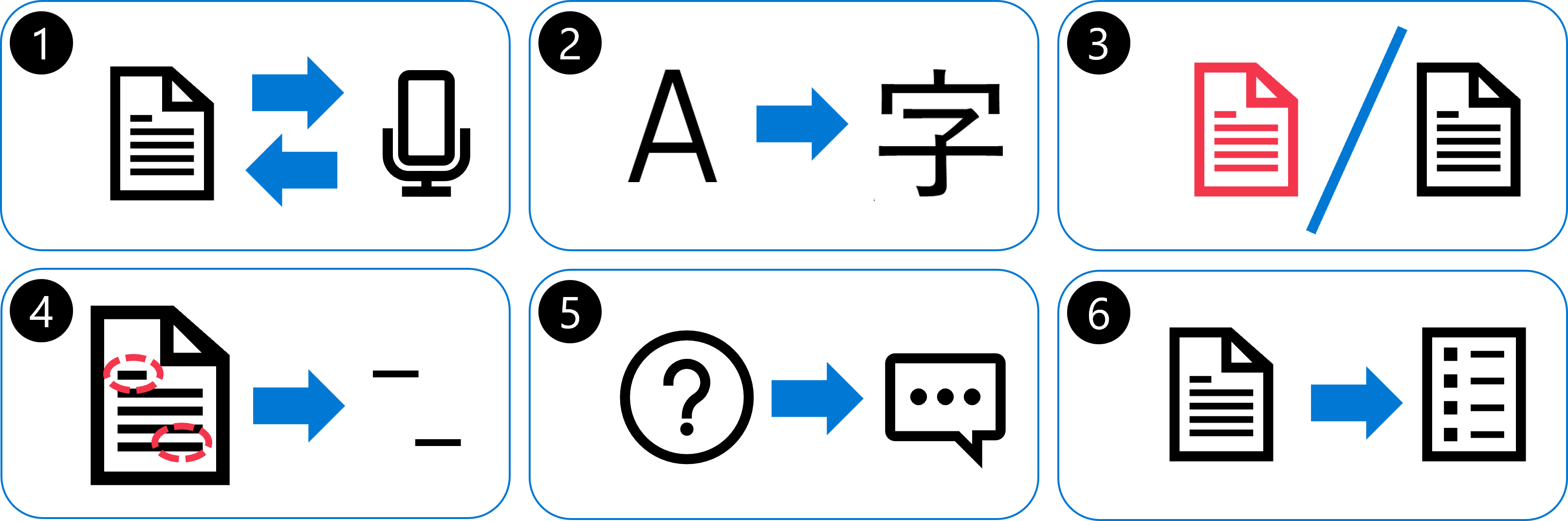

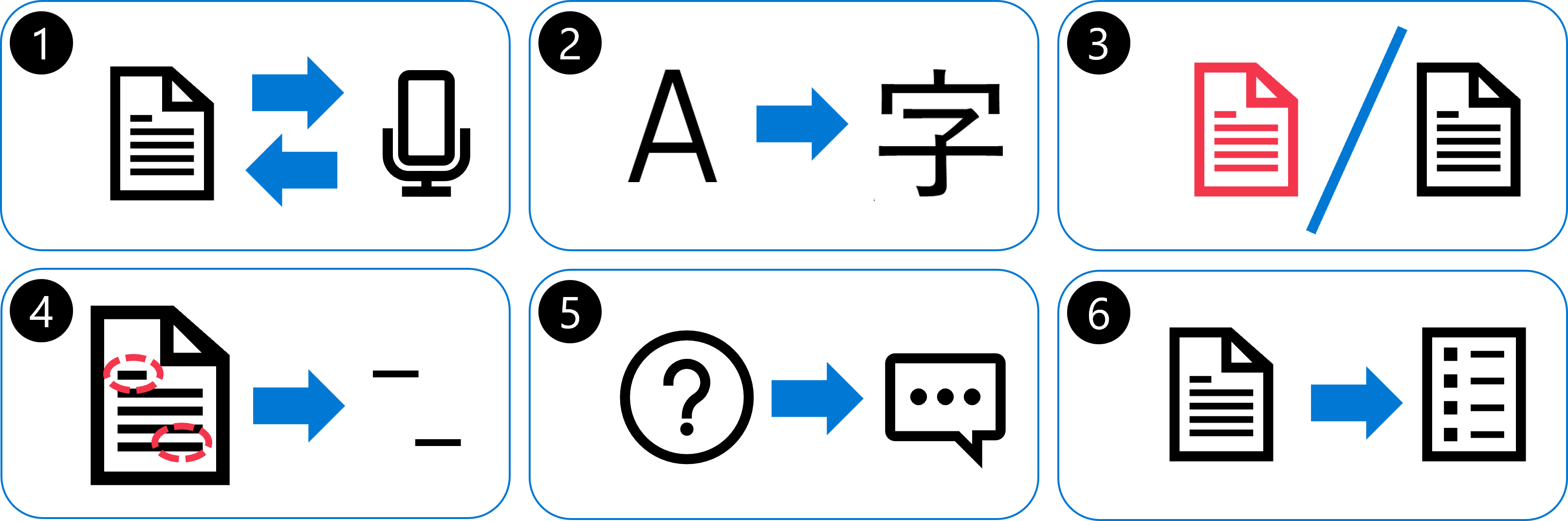

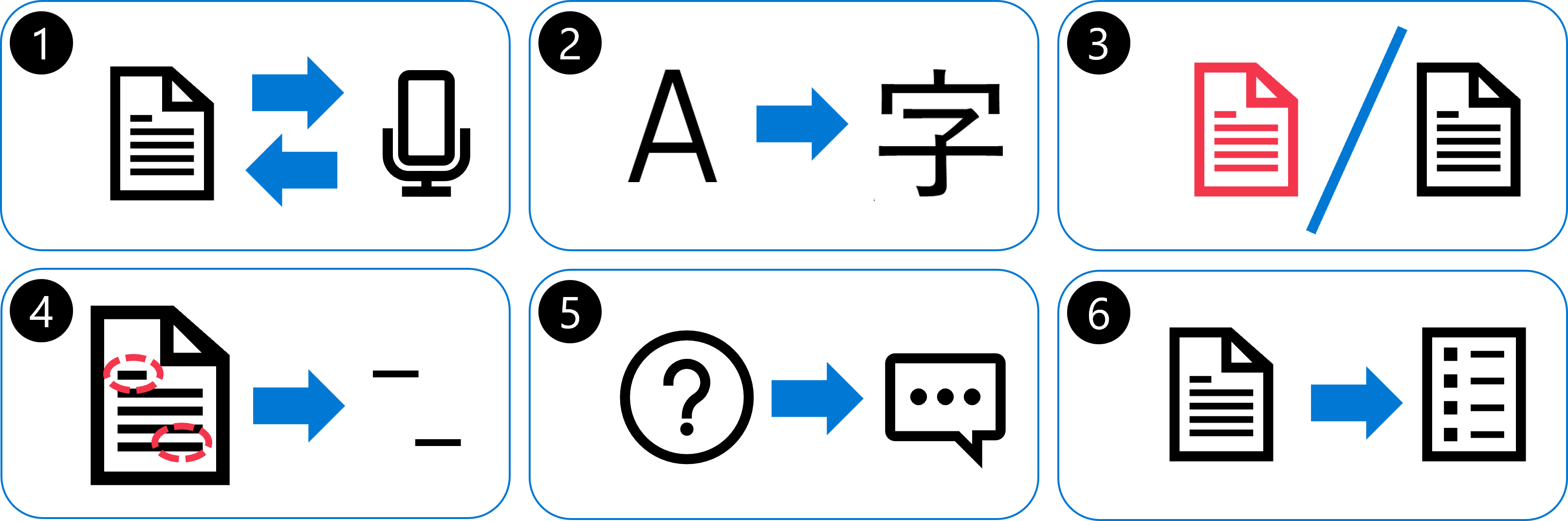

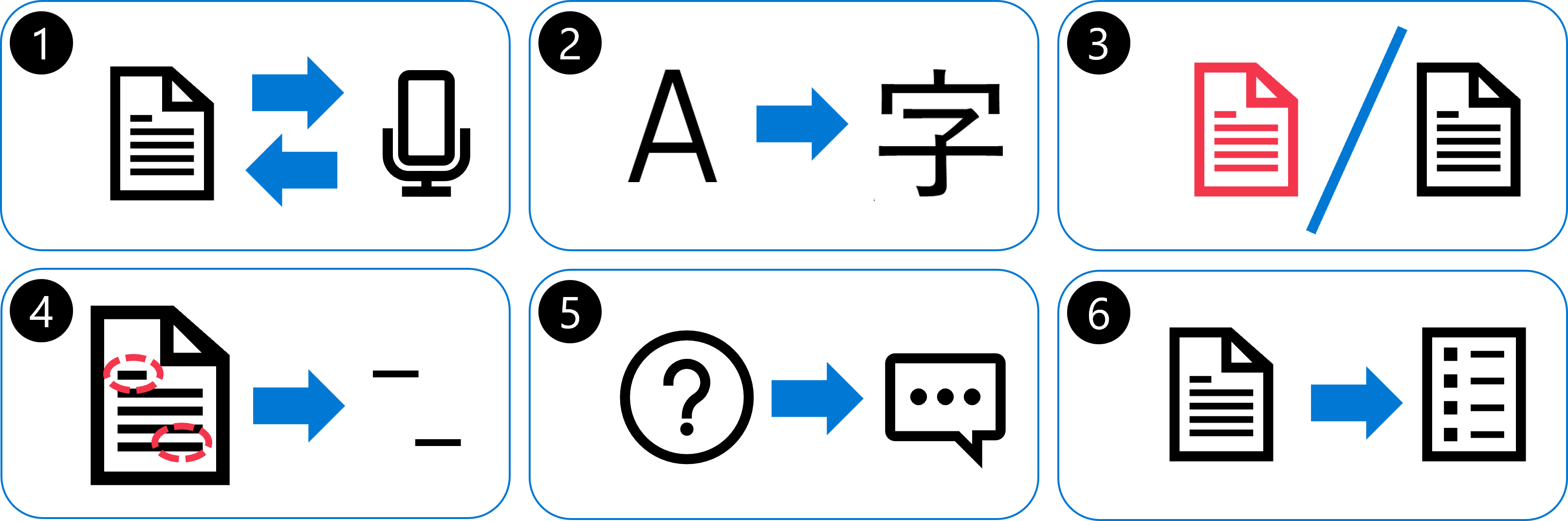

## What it does

- Speech-to-text and text-to-speech conversion

---

## What it does

- Machine translation

---

## What it does

- Text classification

- Spam detection

- Topic modeling

---

## What it does

- Text classification

- Sentiment analysis

- Toxicity classification

----

## What it does

---

## What it does

- Entity extraction

- Named entity recognition

- Information retrieval

----

## What it does

----

## What it does

---

## What it does

- Question answering

- Text generation

- Autocomplete

- Chatbots

---

## What it does

- Text summarization

---

# How it works

---

## Statistical techniques
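
The slide does not name a specific technique. As one classic statistical example, TF-IDF weights a term by how often it appears in a document and how rare it is across the collection. A minimal sketch, assuming non-empty, pre-tokenized documents; the `tf_idf` helper and the toy sentences are made up for illustration:

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per tokenized (non-empty) document."""
    n_docs = len(docs)
    # Document frequency: the number of documents each term appears in.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            # term frequency * inverse document frequency
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

# Toy corpus (hypothetical data).
docs = [
    "the cat sat on the mat".split(),
    "the dog sat on the log".split(),
    "cats and dogs are pets".split(),
]
scores = tf_idf(docs)
```

In this toy corpus "cat" outweighs "the" in the first document, even though "the" occurs twice, because "the" also appears in another document.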

---

## Statistical techniques

---

## Statistical techniques

---

## Traditional ML techniques
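
The slide does not say which models it has in mind. One commonly taught option for the spam detection task listed earlier is a multinomial Naive Bayes classifier. A minimal sketch with add-one smoothing; `train`, `predict`, and the four training messages are hypothetical, not taken from the original deck:

```python
import math
from collections import Counter

def train(samples):
    """samples: list of (tokens, label). Returns counts needed for prediction."""
    word_counts = {}          # label -> Counter of word occurrences
    label_counts = Counter()  # label -> number of training documents
    for tokens, label in samples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(tokens)
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab

def predict(model, tokens):
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in label_counts.items():
        # Log prior plus log likelihood of each token (add-one smoothing).
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for tok in tokens:
            score += math.log((word_counts[label][tok] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Toy training set (hypothetical data).
samples = [
    ("win free prize now".split(), "spam"),
    ("free money click now".split(), "spam"),
    ("meeting notes for monday".split(), "ham"),
    ("lunch on monday".split(), "ham"),
]
model = train(samples)
```

Calling `predict(model, "free prize".split())` picks the label whose word counts make the message most likely.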

---

## Traditional ML techniques

---

## Deep learning techniques

---

## Deep learning techniques

----

### Word embedding
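
A word embedding maps each word to a vector so that related words end up close together. A minimal sketch of comparing embeddings with cosine similarity; the three-dimensional vectors below are made up by hand, whereas real embeddings are learned from data and usually have hundreds of dimensions:

```python
import math

# Hand-written toy vectors (hypothetical values, for illustration only).
embeddings = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.85, 0.90, 0.15],
    "apple": [0.10, 0.20, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

def most_similar(word):
    """Return the other vocabulary word whose vector is closest to `word`."""
    return max(
        (w for w in embeddings if w != word),
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )
```

With these toy values, `most_similar("king")` returns `"queen"` because their vectors point in nearly the same direction.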

----

### RNNs for memory
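
An RNN carries a hidden state from one time step to the next, which is where the "memory" comes from. A minimal single-unit sketch using the standard update `h_t = tanh(w_x * x_t + w_h * h_prev + b)`; the weights are arbitrary values chosen for illustration, not trained parameters:

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a single-unit RNN."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the RNN and return the hidden state at each step."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# The same zero inputs at steps 2 and 3, with and without a signal at step 1.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
```

The final state in `states_a` is still non-zero although the last two inputs were zero, so the first input is remembered, but it shrinks at every step.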

----

### RNNs for memory

----

### Long Short-Term Memory (LSTM)

<img src="https://miro.medium.com/v2/resize:fit:1400/format:webp/1*J5W8FrASMi93Z81NlAui4w.png" style="background:white;"></img>

Source: [淺談遞歸神經網路 (RNN) 與長短期記憶模型 (LSTM)](https://tengyuanchang.medium.com/%E6%B7%BA%E8%AB%87%E9%81%9E%E6%AD%B8%E7%A5%9E%E7%B6%93%E7%B6%B2%E8%B7%AF-rnn-%E8%88%87%E9%95%B7%E7%9F%AD%E6%9C%9F%E8%A8%98%E6%86%B6%E6%A8%A1%E5%9E%8B-lstm-300cbe5efcc3)
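
An LSTM adds a cell state and gates that decide what to forget, what to write, and what to expose. A minimal single-unit sketch of the standard LSTM update; the `params` values are picked by hand (an assumption for illustration) so that the forget gate stays close to 1:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM. p maps gate name -> (w_x, w_h, b)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b
    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    i = sigmoid(pre("input"))         # how much new information to write
    g = math.tanh(pre("candidate"))   # candidate value to write
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    c = f * c_prev + i * g            # new cell state
    h = o * math.tanh(c)              # new hidden state
    return h, c

# Hand-picked weights (hypothetical, not trained).
params = {
    "forget": (0.0, 0.0, 5.0),     # large bias keeps the forget gate near 1
    "input": (4.0, 0.0, -2.0),     # opens mainly when x is large
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}

h, c = 0.0, 0.0
cells = []
for x in [1.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    cells.append(c)
```

With these settings the cell state written at the first step is almost unchanged three steps later, which illustrates why the gated cell state retains information longer than a plain RNN hidden state.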

---

## Deep learning techniques

---

## Deep learning techniques

---

## Deep learning techniques

----

### Transformer architecture
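
The core operation of the Transformer is scaled dot-product attention: each query is compared against every key, the scores are normalized with softmax, and the result weights the values. A minimal pure-Python sketch without batching, masking, or multiple heads; the toy queries, keys, and values are made up:

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy inputs (hypothetical): the query points in the direction of the first key.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
queries = [[5.0, 0.0]]
outputs, weights = attention(queries, keys, values)
```

Because the query aligns with the first key, most of the attention weight goes to the first value vector.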

---

# How to use it

---

## LLMs as foundation model

---

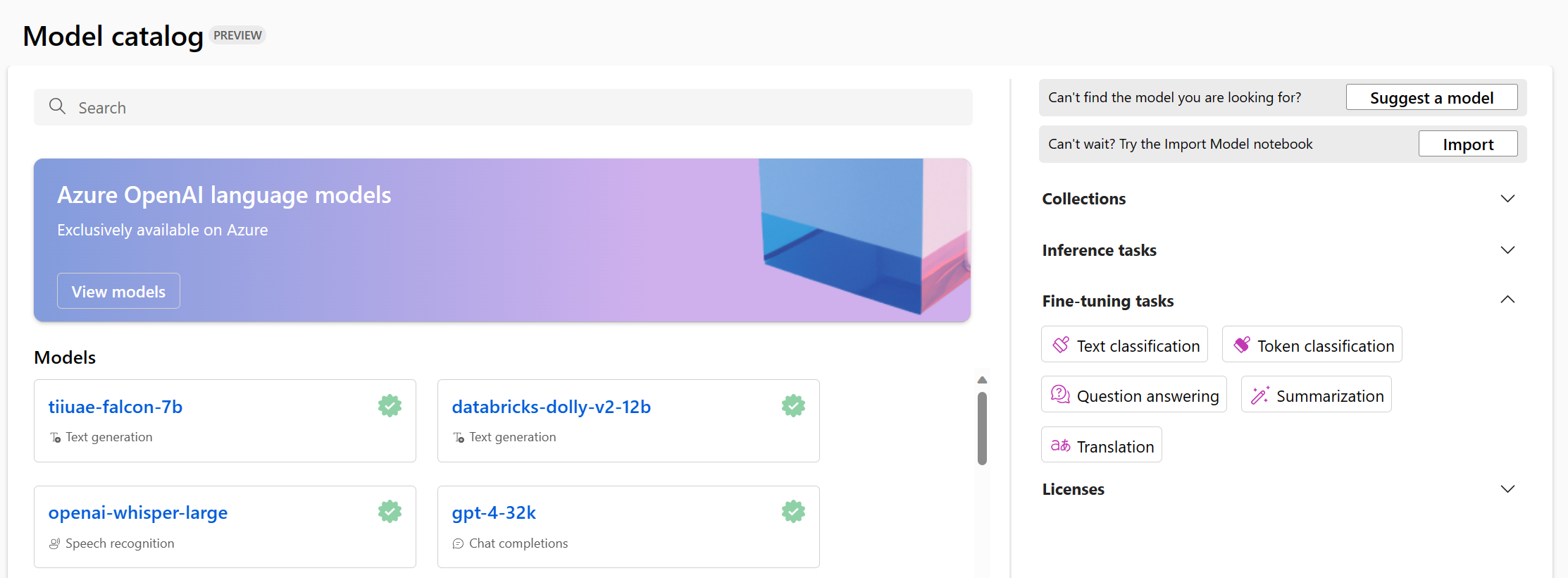

## Model catalog in Azure ML

---

## HuggingFace

https://huggingface.co/

----

### The CKIP controversy

----

### The CKIP controversy

----

### The CKIP controversy

- https://huggingface.co/ckiplab

- https://github.com/ckiplab/ckip-transformers

----

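### The CKIP controversy

- The LLM experimentation process

  - ~~Build everything from scratch~~

- Applicability of LLMs

  - General-purpose

  - Domain-specific

- Challenges in training LLMs

  - Pre-training: the corpus

  - Instruction fine-tuning: [Instruction Tuning](https://youtu.be/Q1KVJNwAMJk?si=u_yqZ97aPBbTw-3g)

  - Post-training: RLHF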

---

## References

- [MS Learn | Understand the Transformer architecture and explore large language models in Azure Machine Learning](https://learn.microsoft.com/zh-tw/training/modules/explore-foundation-models-in-model-catalog/)

- [Deeplearning.ai | A Complete Guide to Natural Language Processing](https://www.deeplearning.ai/resources/natural-language-processing/)

- [淺談遞歸神經網路 (RNN) 與長短期記憶模型 (LSTM)](https://tengyuanchang.medium.com/%E6%B7%BA%E8%AB%87%E9%81%9E%E6%AD%B8%E7%A5%9E%E7%B6%93%E7%B6%B2%E8%B7%AF-rnn-%E8%88%87%E9%95%B7%E7%9F%AD%E6%9C%9F%E8%A8%98%E6%86%B6%E6%A8%A1%E5%9E%8B-lstm-300cbe5efcc3)

- [AutoEncoder (三) - Self Attention、Transformer](https://medium.com/ml-note/autoencoder-%E4%B8%89-self-attention-transformer-c37f719d222)

- [【專欄】ckip-llama-2真的有這麼爛嗎?淺談LLMs的training與data的關係。](https://axk51013.medium.com/%E5%B0%88%E6%AC%84-ckip-llama-2%E7%9C%9F%E7%9A%84%E6%9C%89%E9%80%99%E9%BA%BC%E7%88%9B%E5%97%8E-%E6%B7%BA%E8%AB%87llms%E7%9A%84training%E8%88%87data%E7%9A%84%E9%97%9C%E4%BF%82-67a4eb4a5077)
