# LUMI Hackathon Krakow (Nov 2023) - pre-event training

### Login to Lumi

```

ssh USERNAME@lumi.csc.fi

```

To simplify the login to LUMI, you can add the following to your `.ssh/config` file.

```
# LUMI
Host lumi
    User <USERNAME>
    Hostname lumi.csc.fi
    IdentityFile <HOME_DIRECTORY>/.ssh/id_rsa
    ServerAliveInterval 600
    ServerAliveCountMax 30
```

The `ServerAlive*` lines in the config file may be added to avoid timeouts when idle.

Now you can shorten your login command to the following.

```

ssh lumi

```
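If you prefer not to edit `~/.ssh/config` by hand, the entry above can be appended from the shell. The sketch below uses a hypothetical helper, `add_lumi_host`, which is not part of any LUMI tooling. It writes the same settings and skips the write if a `Host lumi` entry already exists.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a "Host lumi" entry to an ssh config file
# unless one is already present. Arguments: username, private key path,
# and (optionally) the config file, which defaults to ~/.ssh/config.
add_lumi_host() {
  local user="$1" key="$2" config="${3:-$HOME/.ssh/config}"
  mkdir -p "$(dirname "$config")"
  touch "$config"
  chmod 600 "$config"   # ssh rejects a config file that others can write to
  if grep -qE '^[[:space:]]*Host[[:space:]]+lumi[[:space:]]*$' "$config"; then
    echo "Host lumi already defined in $config" >&2
    return 0
  fi
  # Unquoted EOF so that $user and $key are expanded.
  cat >> "$config" <<EOF

# LUMI
Host lumi
    User $user
    Hostname lumi.csc.fi
    IdentityFile $key
    ServerAliveInterval 600
    ServerAliveCountMax 30
EOF
}
```

For example, `add_lumi_host <USERNAME> <HOME_DIRECTORY>/.ssh/id_rsa` followed by `ssh lumi`. Running it twice is harmless because the existing entry is detected.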

If you can log in with the `ssh` command, you should also be able to use the secure copy command (`scp`) to transfer files. For example, you can copy the presentation slides from LUMI to view them locally.

```

scp lumi:/project/project_465000828/Slides/AMD/<file_name> <local_filename>

```

You can also copy all the slides at once with the recursive `-r` option. From your local system:

```

mkdir slides

scp -r lumi:/project/project_465000828/Slides/AMD/* slides

```

If you have not added these entries to your config file, you need a longer command:

```

mkdir slides

scp -r -i <HOME_DIRECTORY>/.ssh/<private ssh key file> <username>@lumi.csc.fi:/project/project_465000828/Slides/AMD/ slides

```

or, for a single file:

```

scp -i <HOME_DIRECTORY>/.ssh/<private ssh key file> <username>@lumi.csc.fi:/project/project_465000828/Slides/AMD/<file_name> <local_filename>

```

These exercises were designed for the ROCm 5.4.3 installation. They assume the following environment is loaded, which makes all components available, namely the different profilers and their dependencies:

```
module load craype-accel-amd-gfx90a
module load PrgEnv-amd
module use /pfs/lustrep2/projappl/project_462000125/samantao-public/mymodules
module load rocm/5.4.3 omnitrace/1.10.4-rocm-5.4.x omniperf/1.0.10-rocm-5.4.x
source /pfs/lustrep2/projappl/project_462000125/samantao-public/omnitools/venv/bin/activate
```
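A quick way to confirm that the environment above is active is to check that the tools used in these exercises are on your `PATH`. This is a minimal sketch with a hypothetical `check_tools` helper; the tool names are simply the commands used later in this document.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which of the given commands are not on PATH.
# Returns 0 if all are found, 1 otherwise.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Commands used in the Rocprof, Omnitrace and Omniperf exercises.
if check_tools hipcc rocprof omnitrace-avail omnitrace-instrument omnitrace-run omniperf; then
  echo "environment looks complete"
fi
```

If anything is reported as missing, reload the modules and the virtual environment above before continuing.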

## Rocprof

Set up your allocation (make sure the environment above is loaded):

```

salloc -N 1 --gpus=8 -p small-g --exclusive -A project_465000828 -t 20:00

```

Copy the examples repository and navigate to the `HIPIFY` exercises:

```

cp -rf /pfs/lustrep1/projappl/project_465000828/Exercises/AMD/HPCTrainingExamples .

cd HPCTrainingExamples/HIPIFY/mini-nbody/hip/

```

Compile and run one case. After `salloc`, the shell is still on the login (front-end) node, so there are two ways to compile for the GPU we want to run on.

1. The first is to explicitly set the GPU architecture when compiling (we are effectively cross-compiling for a GPU that is not present where we are compiling).

```

hipcc -I../ -DSHMOO --offload-arch=gfx90a nbody-orig.hip -o nbody-orig

```

2. The other option is to compile on the compute node, where the compiler auto-detects which GPU is present. Note that auto-detection may fail if you do not have access to all the GPUs (depending on the ROCm version). If that happens, set `export ROCM_GPU=gfx90a`.

```

srun hipcc -I../ -DSHMOO nbody-orig.hip -o nbody-orig

```

Now run `rocprof` on `nbody-orig` to obtain a list of hotspots:

```

srun rocprof --stats ./nbody-orig 65536

```

Check the results:

```

cat results.csv

```

Check the statistics file, which has one line per kernel, sorted in descending order of duration:

```

cat results.stats.csv

```

Using `--basenames on` shows only the kernel names, without their parameters:

```

srun rocprof --stats --basenames on ./nbody-orig 65536

```

Check the statistics file again; the kernel names are now shorter:

```

cat results.stats.csv

```
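With `--basenames on` the kernel names no longer contain commas, so the statistics file can be summarized with plain `awk`. The sketch below uses a hypothetical `top_kernels` helper and assumes the header layout `"Name","Calls","TotalDurationNs","AverageNs","Percentage"`; check the first line of your own `results.stats.csv` and adjust the column numbers if it differs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the N most expensive kernels from a rocprof
# statistics file. Assumes the header layout
#   "Name","Calls","TotalDurationNs","AverageNs","Percentage"
# and kernel names without commas (run rocprof with --basenames on).
top_kernels() {
  local file="${1:-results.stats.csv}" n="${2:-5}"
  awk -F, -v n="$n" '
    NR == 1 { next }          # skip the header row
    NR > n + 1 { exit }       # rows are already sorted by duration
    {
      gsub(/"/, "")           # drop CSV quotes; $0 is re-split into fields
      printf "%-40s calls=%-6s total_ns=%-12s %s%%\n", $1, $2, $3, $5
    }' "$file"
}
```

For example, `top_kernels results.stats.csv 3` prints the three kernels with the largest total duration.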

Trace HIP calls with `--hip-trace`

```

srun rocprof --stats --hip-trace ./nbody-orig 65536

```

Check the new file `results.hip_stats.csv`

```

cat results.hip_stats.csv

```

Also trace the HSA API with `--hsa-trace`:

```

srun rocprof --stats --hip-trace --hsa-trace ./nbody-orig 65536

```

Check the new file `results.hsa_stats.csv`

```

cat results.hsa_stats.csv

```

On your laptop, download `results.json`

```

scp -i <HOME_DIRECTORY>/.ssh/<private ssh key file> <username>@lumi.csc.fi:<path_to_file>/results.json results.json

```

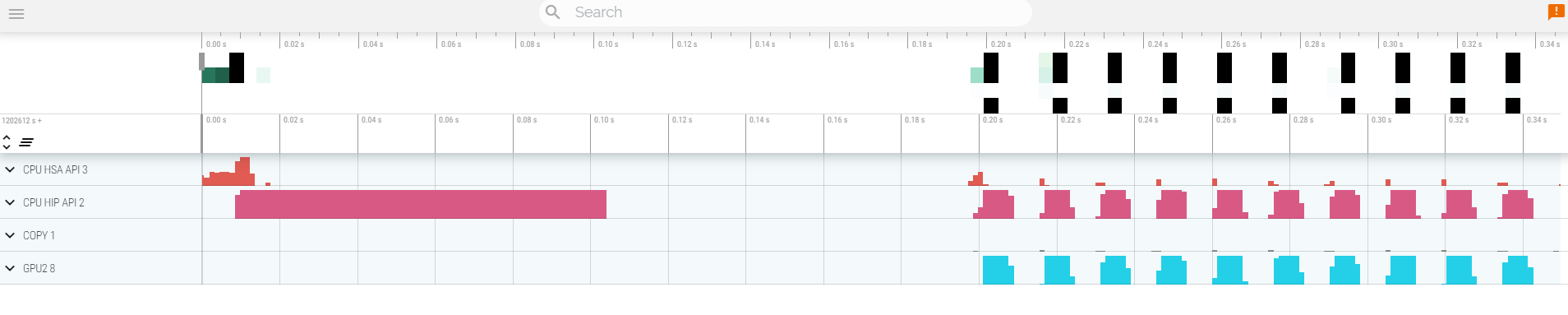

Open a browser and go to [https://ui.perfetto.dev/](https://ui.perfetto.dev/).

Click on `Open trace file` in the top left corner.

Navigate to the `results.json` you just downloaded.

Use the W, A, S and D keys to zoom in and out and to pan left and right in the GUI:

```

Navigation

w/s Zoom in/out

a/d Pan left/right

```

Read about the hardware counters available for the GPU on this system (look for the gfx90a section):

```

less $ROCM_PATH/lib/rocprofiler/gfx_metrics.xml

```

Create a `rocprof_counters.txt` file with the counters you would like to collect

```

vi rocprof_counters.txt

```

Content for `rocprof_counters.txt`:

```

pmc : Wavefronts VALUInsts

pmc : SALUInsts SFetchInsts GDSInsts

pmc : MemUnitBusy ALUStalledByLDS

```
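Instead of editing the file with `vi`, the same counter groups can be written non-interactively with a heredoc. This is a minimal sketch that produces exactly the content listed above; the final `grep` counts the `pmc` lines, which matches the number of passes `rocprof` will run.

```bash
#!/usr/bin/env bash
# Write the counter groups listed above. Each "pmc :" line is collected
# in a separate pass of the application.
cat > rocprof_counters.txt <<'EOF'
pmc : Wavefronts VALUInsts
pmc : SALUInsts SFetchInsts GDSInsts
pmc : MemUnitBusy ALUStalledByLDS
EOF

# Number of counter groups (one pass each).
grep -c '^pmc' rocprof_counters.txt
```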

Execute with the counters we just added:

```

srun rocprof --timestamp on -i rocprof_counters.txt ./nbody-orig 65536

```

You'll notice that `rocprof` runs 3 passes, one for each set of counters we have in that file.

Check the contents of `rocprof_counters.csv`:

```

cat rocprof_counters.csv

```

## Omnitrace

Omnitrace is made available by the modules listed in the header of these exercises.

* Allocate resources with `salloc`

`salloc -N 1 --ntasks=1 --partition=small-g --gpus=1 -A project_465000828 --time=00:15:00`

* List the Omnitrace options and their current values (the second command also prints a description of each option)

`srun -n 1 --gpus 1 omnitrace-avail --categories omnitrace`

`srun -n 1 --gpus 1 omnitrace-avail --categories omnitrace --brief --description`

* Create an Omnitrace configuration file with a description of each option

`srun -n 1 omnitrace-avail -G omnitrace.cfg --all`

* Point Omnitrace to this configuration file:

`export OMNITRACE_CONFIG_FILE=/path/omnitrace.cfg`

* Get the training examples if you didn't already:

`cp -r /pfs/lustrep1/projappl/project_465000828/Exercises/AMD/HPCTrainingExamples .`

* Compile and execute saxpy
    * `cd HPCTrainingExamples/HIP/saxpy`
    * `hipcc --offload-arch=gfx90a -O3 -o saxpy saxpy.hip`
    * `time srun -n 1 ./saxpy`
    * Check the duration

* Compile and execute Jacobi
    * `cd ../jacobi`

<!--

* Need to make some changes to the makefile

* ``MPICC=$(PREP) `which CC` ``

* `MPICFLAGS+=$(CFLAGS) -I${CRAY_MPICH_PREFIX}/include`

* `MPILDFLAGS+=$(LDFLAGS) -L${CRAY_MPICH_PREFIX}/lib -lmpich`

* comment out

* ``# $(error Unknown MPI version! Currently can detect mpich or open-mpi)``

-->

* Now build and run the code
    * `make`
    * `time srun -n 1 --gpus 1 ./Jacobi_hip -g 1 1`
    * Check the duration

#### Dynamic instrumentation

* Execute dynamic instrumentation: `time srun -n 1 --gpus 1 omnitrace-instrument -- ./saxpy` and check the duration

<!-- * Execute dynamic instrumentation: `time srun -n 1 --gpus 1 omnitrace-instrument -- ./Jacobi_hip -g 1 1` and check the duration (may fail?) -->

* For the Jacobi example, dynamic instrumentation would take a long time, so first check which functions the binary calls and which would get instrumented: `nm --demangle Jacobi_hip | grep -Ei ' (t|u) '`

* Available functions to instrument: `srun -n 1 --gpus 1 omnitrace-instrument -v 1 --simulate --print-available functions -- ./Jacobi_hip -g 1 1`

* The `--simulate` option means that the binary is not actually executed

#### Binary rewriting (recommended for MPI codes; lower overhead)

* Binary rewriting: `srun -n 1 --gpus 1 omnitrace-instrument -v -1 --print-available functions -o jacobi.inst -- ./Jacobi_hip`

* We created a new instrumented binary called jacobi.inst

* Executing the new instrumented binary: `time srun -n 1 --gpus 1 omnitrace-run -- ./jacobi.inst -g 1 1` and check the duration

* See the list of the instrumented GPU calls: `cat omnitrace-jacobi.inst-output/TIMESTAMP/roctracer.txt`

#### Visualization

* Copy `perfetto-trace.proto` from the Omnitrace output directory to your laptop, open https://ui.perfetto.dev/, click `Open trace file` and select the file

#### Hardware counters

* See a list of all the counters: `srun -n 1 --gpus 1 omnitrace-avail --all`

* Declare in your configuration file: `OMNITRACE_ROCM_EVENTS = GPUBusy,Wavefronts,VALUBusy,L2CacheHit,MemUnitBusy`

* Execute: `srun -n 1 --gpus 1 omnitrace-run -- ./jacobi.inst -g 1 1` and copy the perfetto file and visualize

#### Sampling

Enable sampling in your configuration file with `OMNITRACE_USE_SAMPLING = true` and `OMNITRACE_SAMPLING_FREQ = 100`, then run the instrumented binary again and visualize the trace.
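Several steps in this section toggle entries in `omnitrace.cfg`. The sketch below is a hypothetical helper, `set_omnitrace_option`, that replaces an existing `KEY = VALUE` line or appends one if the key is absent. It assumes the `KEY = VALUE` layout produced by `omnitrace-avail -G` and GNU `sed` (as on LUMI), and defaults to the file named by `OMNITRACE_CONFIG_FILE`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set KEY = VALUE in an Omnitrace configuration file,
# replacing an existing entry for KEY or appending a new one.
# Values must not contain "|" (used as the sed delimiter) or "&".
set_omnitrace_option() {
  local key="$1" value="$2"
  local cfg="${3:-${OMNITRACE_CONFIG_FILE:-omnitrace.cfg}}"
  if grep -qE "^[[:space:]]*${key}[[:space:]]*=" "$cfg" 2>/dev/null; then
    # Rewrite the existing line in place (GNU sed -i).
    sed -i -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$cfg"
  else
    # Append the setting (this also creates the file if needed).
    echo "${key} = ${value}" >> "$cfg"
  fi
}

# Settings used in the hardware counter and sampling steps:
# set_omnitrace_option OMNITRACE_ROCM_EVENTS GPUBusy,Wavefronts,VALUBusy,L2CacheHit,MemUnitBusy
# set_omnitrace_option OMNITRACE_USE_SAMPLING true
# set_omnitrace_option OMNITRACE_SAMPLING_FREQ 100
```

Editing the file in place keeps a single entry per key, so there is no ambiguity about which value Omnitrace reads.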

#### Kernel timings

* Open the file `omnitrace-binary-output/timestamp/wall_clock.txt` (replace binary and timestamp with your information)

* To see the kernel timings in that file, make sure your configuration file sets `OMNITRACE_USE_TIMEMORY = true` and `OMNITRACE_FLAT_PROFILE = true`, run the code again and reopen `omnitrace-binary-output/timestamp/wall_clock.txt`
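Each run adds a new timestamped directory, so it helps to locate the most recent one automatically. This is a minimal sketch with a hypothetical `latest_omnitrace_output` helper; it assumes the `omnitrace-<binary>-output/<timestamp>/` layout used above and picks the newest directory by modification time.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the most recent Omnitrace output directory
# (with a trailing slash) for a given binary name, assuming the layout
# omnitrace-<binary>-output/<timestamp>/. Prints nothing if none exists.
latest_omnitrace_output() {
  local binary="$1"
  # -t sorts by modification time (newest first); -d lists the directories themselves.
  ls -1td "omnitrace-${binary}-output"/*/ 2>/dev/null | head -n 1
}

# Example (run where the output directories were created):
# less "$(latest_omnitrace_output jacobi.inst)wall_clock.txt"
```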

#### Call-stack

Edit your omnitrace.cfg:

```
OMNITRACE_USE_SAMPLING = true
OMNITRACE_SAMPLING_FREQ = 100
```

Execute again the instrumented binary and now you can see the call-stack when you visualize with perfetto.

## Omniperf

* Load Omniperf as in the header of these exercises.

* Reserve a GPU, compile the exercise and execute Omniperf, observe how many times the code is executed

```
salloc -N 1 --ntasks=1 --partition=small-g --gpus=1 -A project_465000828 --time=00:30:00
cp -r /project/project_465000828/Exercises/AMD/HPCTrainingExamples/ .
cd HPCTrainingExamples/HIP/dgemm/
mkdir build
cd build
cmake ..
make
cd bin
srun -n 1 omniperf profile -n dgemm -- ./dgemm -m 8192 -n 8192 -k 8192 -i 1 -r 10 -d 0 -o dgemm.csv
```

* Run `srun -n 1 --gpus 1 omniperf profile -h` to see all the options

* Omniperf has now created a workload in the `workloads` directory, named `dgemm` after the `-n` argument, which we can analyze:

```

srun -n 1 --gpus 1 omniperf analyze -p workloads/dgemm/mi200/ &> dgemm_analyze.txt

```

* To run only the roofline analysis, execute: `srun -n 1 omniperf profile -n dgemm --roof-only -- ./dgemm -m 8192 -n 8192 -k 8192 -i 1 -r 10 -d 0 -o dgemm.csv`

Analysis does not require `srun`, but we use it to avoid overloading the login node. Explore the file `dgemm_analyze.txt`.

* We can select specific IP Blocks, like:

```

srun -n 1 --gpus 1 omniperf analyze -p workloads/dgemm/mi200/ -b 7.1.2

```

Note that you need to know the index of the IP block you want to inspect.

* If you have installed Omniperf on your laptop (no ROCm required for analysis) then you can download the data and execute:

```

omniperf analyze -p workloads/dgemm/mi200/ --gui

```

* Open http://IP:8050/ in a browser; the IP address is displayed in the output.
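A workload is a directory tree, so one convenient way to move it to your laptop for the GUI analysis is to pack it into a single archive first. The helper below, `pack_workload`, is hypothetical; it is a minimal sketch assuming the `workloads/<name>/` layout created by `omniperf profile -n <name>`. The commented lines show the round trip, with `<path_to_file>` left as a placeholder.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pack an Omniperf workload directory into a tarball
# and print the archive name.
pack_workload() {
  local name="$1"
  tar -czf "${name}-workload.tar.gz" "workloads/${name}"
  echo "${name}-workload.tar.gz"
}

# On LUMI, in the directory that contains workloads/ (here dgemm/build/bin):
#   pack_workload dgemm
# On the laptop:
#   scp lumi:<path_to_file>/dgemm-workload.tar.gz .
#   tar -xzf dgemm-workload.tar.gz
#   omniperf analyze -p workloads/dgemm/mi200/ --gui
```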