---

tags: dibsi, snakemake

---

# Snakemake_Automation

## Setup for the tutorial

### Install the necessary software and data

We will need tools for RNAseq analysis, i.e Salmon, edgeR and snakemake for the automation. All these packages are available through conda.

```

cd ~/

conda install -y salmon

curl -L -O https://raw.githubusercontent.com/ngs-docs/angus/2018/scripts/install-edgeR.R

sudo Rscript --no-save install-edgeR.R

```

You'll also need the yeast data from [trimming](quality-and-trimming.html#data-source) again:

```

cd ~/

mkdir -p data

cd data

curl -L https://osf.io/5daup/download -o ERR458493.fastq.gz

curl -L https://osf.io/8rvh5/download -o ERR458494.fastq.gz

curl -L https://osf.io/2wvn3/download -o ERR458495.fastq.gz

curl -L https://osf.io/xju4a/download -o ERR458500.fastq.gz

curl -L https://osf.io/nmqe6/download -o ERR458501.fastq.gz

curl -L https://osf.io/qfsze/download -o ERR458502.fastq.gz

```

And, finally, download the necessary analysis code into your home directory

```

cd ~/

curl -L -O https://raw.githubusercontent.com/ngs-docs/angus/2018/scripts/gather-counts.py

curl -L -O https://raw.githubusercontent.com/ngs-docs/angus/2018/scripts/yeast.salmon.R

```

Let's install Snakemake in our instance.

Run:

```

conda install -y snakemake

```

---

# Overview

+ Genomics analysis projects often generate hundreds or thousands of files. Keeping track of all of the scripts used to generate a set of finalized reports is an error-prone and difficult task.

+ The naive approach to organizing a project is to make a bash script that executes each step of the analysis from mapping to plotting.

+ Organizing a project in this manner is a good first step to producing reproducible analyses, however there are few common problems with this approach.

For example:

* After plotting all of your results you realize that you need to re-run the peak calling step, but in order to do that you need to rerun the entire pipeline.

* The script might grow to be 1,000s of lines of code making debugging very tedious as mistakes will be hard to spot.

# Bash scripting:

A **shell script** is a text file full of shell commands, that run just as if you're running them interactively at the command line.

We'll script everything but the install and data download part of [the RNAseq differential expression tutorial](https://angus.readthedocs.io/en/2018/rna-seq.html).

Use nano to create a new file named `run-rnaseq.sh` in your home directory on the Jetstream computer, and past the following script:

```

# make an rnaseq directory and change into it

mkdir rnaseq

cd rnaseq

# link the data in from the other directory

ln -fs ~/data/*.fastq.gz .

# download the reference transcriptome

curl -O https://downloads.yeastgenome.org/sequence/S288C_reference/orf_dna/orf_coding.fasta.gz

# index the reference transcriptome

salmon index --index yeast_orfs --type quasi --transcripts orf_coding.fasta.gz

# run salmon quant on each of the input read data sets

for i in *.fastq.gz

do

salmon quant -i yeast_orfs --libType U -r $i -o $i.quant --seqBias --gcBias

done

# collect the counts

python2 ~/gather-counts.py

# run the edgeR script!

Rscript --no-save ~/yeast.salmon.R

```

This is now a shell script that you can use to execute all

of those commands in *one* go -- try it out! Run:

```

cd ~/

bash run-rnaseq.sh

```

You should now have a directory `rnaseq/` containing a bunch of files, including the PDF outputs of the RNAseq pipeline, `yeast-edgeR-MA-plot.pdf`, `yeast-edgeR-MDS.pdf`, and `yeast-edgeR.csv`.

### Re-running the shell script

Suppose you wanted to re-run the script. How would you do that?

Well, note that the `rnaseq` directory is created at the top of the script, and everything is executed in that directory. So if you remove the rnaseq directory like so,

```

rm -fr rnaseq

```

you can then do

```

bash run-rnaseq.sh

```

to just ...rerun everything.

### Another good addition: make it fail nicely

One sort of weird aspect of shell scripts is that (by default) they keep running even if there's an error. This is bad behavior and we should turn it off.

You can observe this behavior by rerunning the script above without deleting the directory `rnaseq/` - the `mkdir` command will print an error because the directory still exists, but

A good addition to every shell script is to make it fail on the first error. Do this by putting

```

set -e

```

at the top - this tells bash to exit at the first error, rather than continuing bravely onwards.

### Automation via shell script is wonderful, but there are a few problems here.

First, you have to run the entire workflow each time and it recomputes everything every time. If you’re running a workflow that takes 4 days, and you change a command at the end, you’ll have to manually go in and just run the stuff that depends on the changed command.

Second, it’s very explicit and not very generalizable. If you want to run it on a different RNAseq data set, you’re going to have to change a lot of commands.

**Problem statement:**

* As computational work becomes more and more integral to many aspects of scientific research, computational reproducibility has become an issue of increasing importance to computer systems researchers and domain scientists alike.

* Though computational reproducibility seems more straight forward than replicating physical experiments, the complex and rapidly changing nature of computer environments makes being able to reproduce and extend such work a serious challenge.

Studies focusing on code that has been made available with scientific publications regularly find the same common issues that pose substantial barriers to reproducing the original results or building on that code:

1. "Dependency Hell"

2. Imprecise documentation

3. Code rot

4. Barriers to adoption and reuse in existing solutions

# _enter Snakemake_

Snakemake Tutorial: https://slides.com/olexschoen/snakemake-bioconda#/

The [Snakemake workflow management system](https://snakemake.readthedocs.io/en/stable/) is a tool to create reproducible and scalable data analyses. Workflows are described via a human readable, Python based language. They can be seamlessly scaled to server, cluster, grid and cloud environments, without the need to modify the workflow definition. Finally, Snakemake workflows can entail a description of required software, which will be automatically deployed to any execution environment.

Snakemake follows the [GNU Make](https://www.gnu.org/software/make/) paradigm:

- workflows are defined in terms of **rules** that define how to create output files from input files

- Dependencies between the rules are determined automatically, creating a DAG (directed acyclic graph) of jobs that can be automatically parallelized.

## Fake pipeline

1. Let’s get started by creating a workspace with fake data:

```

# Create a folder where we will run our commands:

mkdir snakemake-example

cd snakemake-example

# Make a fake genome:

touch genome.fa

# Make some fake data:

mkdir fastq

touch fastq/Sample1.R1.fastq.gz fastq/Sample1.R2.fastq.gz

touch fastq/Sample2.R1.fastq.gz fastq/Sample2.R2.fastq.gz

```

2. Creating and running a simple Snakefile

Let’s create a file called Snakefile to complete the first step of our pipeline. Open your preferred text editor, paste the code below, and save it into a file called Snakefile

```

SAMPLES = ['Sample1', 'Sample2']

rule all:

input:

expand('{sample}.txt', sample=SAMPLES)

rule quantify_genes:

input:

genome = 'genome.fa',

r1 = 'fastq/{sample}.R1.fastq.gz',

r2 = 'fastq/{sample}.R2.fastq.gz'

output:

'{sample}.txt'

shell:

'echo {input.genome} {input.r1} {input.r2} > {output}'

```

Understanding the Snakefile

Let’s walk through the Snakefile line by line.

```

SAMPLES = ['Sample1', 'Sample2']

```

We define a list of strings called SAMPLES with our sample names that we’ll use later in the Snakefile.

```

rule all:

input:

expand('{sample}.txt', sample=SAMPLES)

```

The input of rule all represents the final output of your pipeline. In this case, we’re saying that the final output consists of two files: Sample1.txt and Sample2.txt.

* expand() is a special function that is automatically available to you in any Snakefile. It takes a string like {sample}.txt and expands it into the list `['Sample1.txt','Sample2.txt']`.

```

rule quantify_genes:

input:

genome = 'genome.fa',

r1 = 'fastq/{sample}.R1.fastq.gz',

r2 = 'fastq/{sample}.R2.fastq.gz'

output:

'{sample}.txt'

shell:

'echo {input.genome} {input.r1} {input.r2} > {output}'

```

Because we specified Sample1.txt and Sample2.txt as the final output files, we need a rule for how to create these files. Instead of writing two rules (one rule for Sample1 and a second rule for Sample2) we write just one rule with the special string `{sample}.txt` as the output.

When Snakemake reads `{sample}.txt`, it knows to replace it with each of the values inside `SAMPLES` to create Sample1.txt and Sample2.txt. Next, it will extract Sample1 from the string Sample1.txt and put it into the input files. So, `fastq/{sample}.R1.fastq.gz` becomes `fastq/Sample1.R1.fastq.gz` and `fastq/{sample}.R2.fastq.gz` becomes `fastq/Sample1.R2.fastq.gz`.

In our fake pipeline, we won’t actually quantify gene expression. Instead, we’ll just echo the names of the input files into an output file.

## Running Snakemake

We can run the pipeline by invoking snakemake. It knows to look for a file called Snakefile. Otherwise, you can specify a file to use with the `--snakefile` option.

#### execute the workflow without target: first rule defines target

```snakemake```

#### dry-run

```snakemake -n```

#### dry-run, print shell commands

```snakemake -n -p```

#### dry-run, print execution reason for each job

```snakemake -n -r```

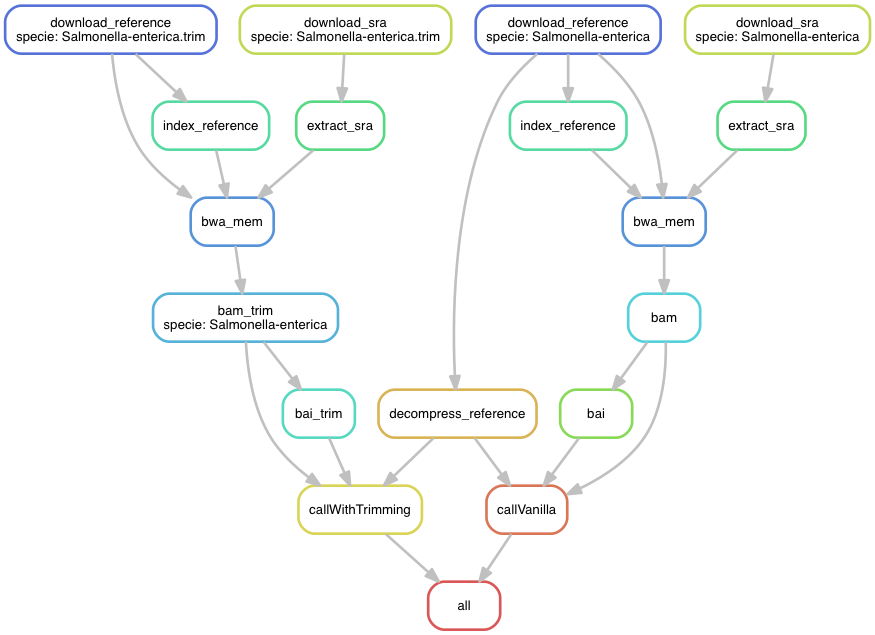

## an example directed-acyclic graph(dag):

---

## RNAseq Snakemake workflow

Let us change directories to the the rnaseq folder created earlier using bash script and create a new Snakefile:

```

cd ~/rnaseq

nano Snakefile

```

Copy and paste the following workflow into your Snakefile:

```

input_files=["ERR458493.fastq.gz",

"ERR458494.fastq.gz",

"ERR458495.fastq.gz",

"ERR458500.fastq.gz",

"ERR458501.fastq.gz",

"ERR458502.fastq.gz"]

rule make_plots:

input:

expand("{input_files}.quant.counts", input_files=input_files)

output:

"yeast-edgeR-MA-plot.pdf",

"yeast-edgeR-MDS.pdf",

"yeast-edgeR.csv"

shell:

"Rscript --no-save ~/yeast.salmon.R"

# make the .counts files

rule make_counts:

input:

expand("{input_files}.quant", input_files=input_files)

output:

expand("{input_files}.quant.counts", input_files=input_files)

shell:

"python2 ~/gather-counts.py"

rule generate_quant_dir:

input:

"{accession}.fastq.gz"

output:

"{accession}.fastq.gz.quant"

shell:

"salmon quant -i yeast_orfs --libType U -r {wildcards.accession}.fastq.gz -o {wildcards.accession}.fastq.gz.quant --seqBias --gcBias"

```

### Visualizing workflow diagrams

Try running:

```

snakemake --dag yeast-edgeR.csv | dot -Tpng > dag.png

```

Lets look at the dag diagram by downloading it onto your local computer.

Open a local terminal on your laptop and scp the dag.png file from your jestream instance:

```scp username@ip-address:~/rnaseq/dag.png /path/to/Destination/```

### Challenge: Extend the snakemake rules to download and prepare the reference

References:

[official Snakemake Tutorial](http://snakemake.readthedocs.io/en/stable/tutorial/tutorial.html)