# Final project evaluation

## Brief project description and features

* A service for keeping track of personal income and expenses

* Monitor your finances in different currencies

* Add tags to transactions to see what categories of expenses you have

* Collect statistics about expenses independent of their currencies

## Project components and teams

The project consists of a Python backend and the Expense tracker mobile app.

The team is cross-functional, and most team members worked on both parts.

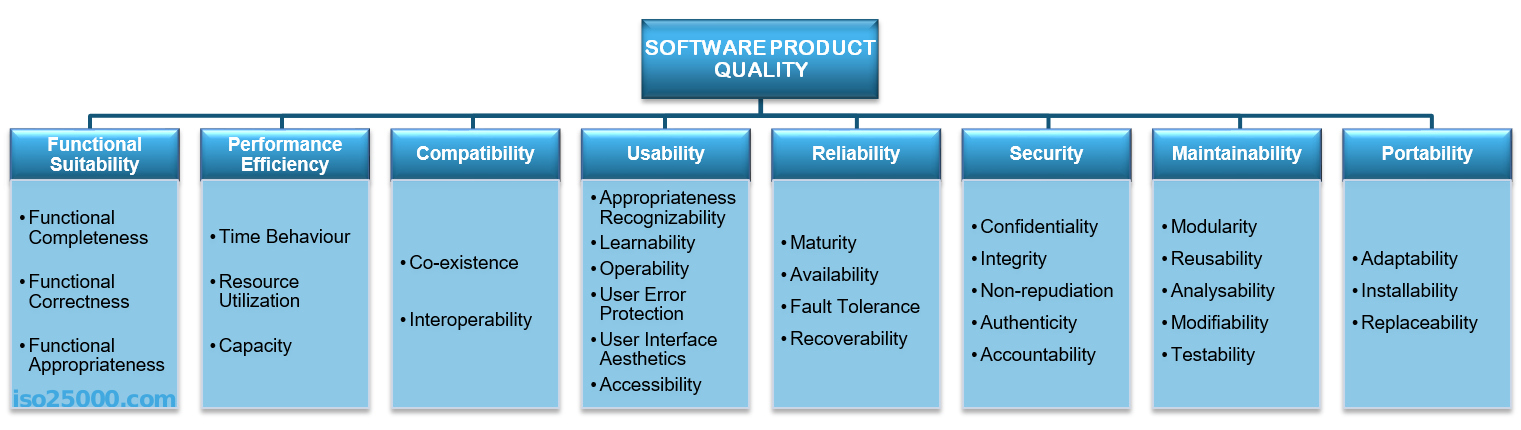

## Quality model

<!---

NOTE: I HAVE A LITTLE IDEA WHAT I AM WRITING ABOUT!!! CROSS-CHECK BEFORE

PRESENTATION PLZZZZ

-->

We decided to use parts of the ISO 25010 model. Its quality characteristics seem to fit our use case.

https://iso25000.com/index.php/en/iso-25000-standards/iso-25010

Requirements for MVP:

- Up to 100 simultaneous users

- Our modelled user makes 1 request every 3-5 seconds

- A user should be able to do the following:

  - create transactions in different currencies

  - see a list of their own transactions

  - know how much money they spent in rubles.
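The last requirement, reporting spending in rubles for transactions made in different currencies, can be illustrated with a minimal sketch. The rate table, the `total_in_rub` helper, and the sample amounts are all hypothetical; they only show the idea of converting every amount to one base currency before summing.

```python
from decimal import Decimal

# Hypothetical exchange rates: how many rubles one unit of each currency costs.
# These numbers are made up for illustration only.
RATES_TO_RUB = {
    "RUB": Decimal("1"),
    "USD": Decimal("90.00"),
    "EUR": Decimal("100.00"),
}


def total_in_rub(transactions, rates=RATES_TO_RUB):
    """Sum (amount, currency) pairs after converting each amount to rubles."""
    total = Decimal("0")
    for amount, currency in transactions:
        total += Decimal(amount) * rates[currency]
    # Round to kopecks.
    return total.quantize(Decimal("0.01"))


expenses = [("10.50", "USD"), ("3.20", "EUR"), ("499.99", "RUB")]
print(total_in_rub(expenses))  # 1764.99
```

`Decimal` is used instead of `float` so that money amounts do not pick up binary rounding errors.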

## Quality characteristics self-evaluation

* Functional suitability

Functional completeness was not achieved during this project due to lack of time and the team being busy with other side projects. We made the following simplifications:

* the only available base currency is RUB;

* we did not monitor the mobile application.

However, the developed functions are correct. Correctness is ensured by the testing quality gates (unit and integration tests). Test case quality is verified using mutation testing.

* Performance efficiency

The original requirement for this MVP was to handle up to 100 users at the same time. To verify that this requirement holds, we set up load testing using Locust **<insert https://locust.io logo pls>**.
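As a rough back-of-the-envelope check, the MVP requirements translate into an aggregate request rate. The sketch below assumes responses are instant, so it gives an upper bound; the helper name `request_rate` is ours.

```python
USERS = 100          # simultaneous users, from the MVP requirements
WAIT_MIN_S = 3       # each modelled user makes one request every 3-5 seconds
WAIT_MAX_S = 5


def request_rate(users, wait_s):
    """Requests per second if every user waits `wait_s` seconds between requests."""
    return users / wait_s


low = request_rate(USERS, WAIT_MAX_S)    # all users at the slowest pace
high = request_rate(USERS, WAIT_MIN_S)   # all users at the fastest pace
print(f"expected load: {low:.1f} to {high:.1f} requests per second")
```

So the server has to sustain roughly 20 to 33 requests per second to meet the requirement.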

The server successfully handles 100 modelled users:

According to our monitoring, the bottleneck is the registration and authorization processes. They involve cryptography, which our cheap cloud processor cannot do fast.
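One plausible reason why registration and authorization are CPU-bound is password hashing, which is slow on purpose. The sketch below is an assumption: the report does not say which algorithm the backend uses, so PBKDF2 from the standard library and the iteration count are stand-ins.

```python
import hashlib
import hmac
import os
import time

ITERATIONS = 200_000  # assumed work factor, not taken from the project


def hash_password(password, salt=None, iterations=ITERATIONS):
    """Derive a key from the password; the cost grows linearly with `iterations`."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return salt, digest


def verify_password(password, salt, expected, iterations=ITERATIONS):
    """Re-derive the key and compare it in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)


start = time.perf_counter()
salt, digest = hash_password("correct horse")
elapsed_ms = (time.perf_counter() - start) * 1000
print(f"one hash took {elapsed_ms:.1f} ms")
```

Every registration and every login pays this cost once, so a burst of such requests can saturate a small CPU even when the other endpoints stay fast.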

Our full monitoring dashboard **<probably cut this image>**:

Hardware monitoring also shows a lack of CPU capacity:

<!-- * Compatibility

Compatibility was not a primary concern for our product. However, we use

an external REST API to receive currency rates. Therefore, it is

important to watch for breaking changes of those APIs. To do this we

**WHAT???** -->

* Usability

Usability is split into two components.

* Backend -> Mobile application developers: usability was achieved by holding planning sessions with the mobile frontend developers. For each change, we discussed whether it would be convenient for the mobile frontend.

* Mobile app -> End users: usability is verified by the team members who are experienced in UX design.

* Reliability

The reliability of the services was ensured by our quality gates and monitoring. We also planned to add monitoring to the mobile application, but these plans were not carried out.

* Security

Security on the backend side is ensured by static analyzers: bandit <https://github.com/PyCQA/bandit> and safety <https://pyup.io/safety/>.

* Maintainability

To ensure maintainability, we abstained from using libraries and frameworks that were not production-ready. However, this was not done in time. We also set up static analyzers to ensure the code quality of both projects. The mobile application uses Detekt, and the backend service uses pylint and mypy for code and darglint for documentation.

* Portability

* The backend service uses Python. The Python code is executed inside a Docker container, which is supported by a vast range of platforms either directly or via a VM. To ensure easy installation of the backend server, we added infrastructure-as-code scenarios that deploy all backend services to the cloud.

* The mobile app targets the Android platform. It is a native Android app, which is not portable to other mobile platforms.

## Quality gates

Both projects are hosted on GitLab. We implemented the quality gates using GitLab CI. The mobile application validates code quality with static analysis tools. It also verifies the correctness of functions by running unit tests and UI integration tests with a mocked backend server. These checks run on every merge request.

Unfortunately, we failed to set up test coverage measurement: it is hard to do for instrumented tests, and unit tests cover only a small part of the code, so measuring coverage by them alone would be pointless.

Mobile Quality gates

Backend production quality gates:

## Recovery

Errors usually happen in third-party services or during database access. We handle integration issues using Tenacity. Database issues are handled using transactions, and we try to use safe functions.
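The two recovery mechanisms can be sketched with the standard library alone. This is an illustration under assumptions, not the project's code: the `retry` helper is hand-rolled rather than the retry library's API, `flaky_rate_service` is a hypothetical third-party call, and an in-memory SQLite database stands in for the real one.

```python
import sqlite3
import time


def retry(func, attempts=3, base_delay=0.1, exceptions=(ConnectionError,)):
    """Call func(); on a listed exception, wait and retry with exponential backoff."""
    for attempt in range(attempts):
        try:
            return func()
        except exceptions:
            if attempt == attempts - 1:
                raise  # out of attempts, let the caller see the error
            time.sleep(base_delay * 2 ** attempt)


calls = {"count": 0}


def flaky_rate_service():
    """Hypothetical third-party call that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("rate service unavailable")
    return {"USD": 90.0}


rates = retry(flaky_rate_service, base_delay=0.01)

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (amount REAL NOT NULL, currency TEXT NOT NULL)"
)
conn.commit()

try:
    with conn:  # commits on success, rolls back if the block raises
        conn.execute("INSERT INTO transactions VALUES (?, ?)", (10.5, "USD"))
        # Violates NOT NULL, so the whole block is rolled back.
        conn.execute("INSERT INTO transactions VALUES (?, ?)", (None, "EUR"))
except sqlite3.IntegrityError:
    pass

row_count = conn.execute("SELECT COUNT(*) FROM transactions").fetchone()[0]
print(rates, row_count)
```

The retry hides short outages of the external service, and the transaction guarantees that a failed write leaves no half-saved data behind.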

## Lessons learnt

1) Developing a quality product requires a lot of dedication and should not be mixed with other activities.

2) Tools for mobile application quality are not mature: we could not automatically measure coverage for UI tests or UX quality.

3) Once again: good coverage and a lot of testing do not save us from bugs.

<!-- It is important to pay attention to all quality aspects. Due to our

ignorance we have a well-tested, monitored application and maintanable

application. However, the application is unusable because it lacks too

much functions. -->

## Application screenshots