# 20241010-open-house-mk-quick-report

This document summarizes the Bok Center's [Thursday, October 10th Information Session on Generative AI](https://bokcenter.harvard.edu/event/integrate-chatgpt-edu-your-academic-routines): the planning and preparation, the event itself, and what we learned. The event was organized around six colorful iMac stations, each offering participants a different way to engage with AI tools and concepts, with a focus on practical demonstrations and ethical discussions. We will use these lessons to shape future events and improve how we present AI in educational settings.

## the plan and prep

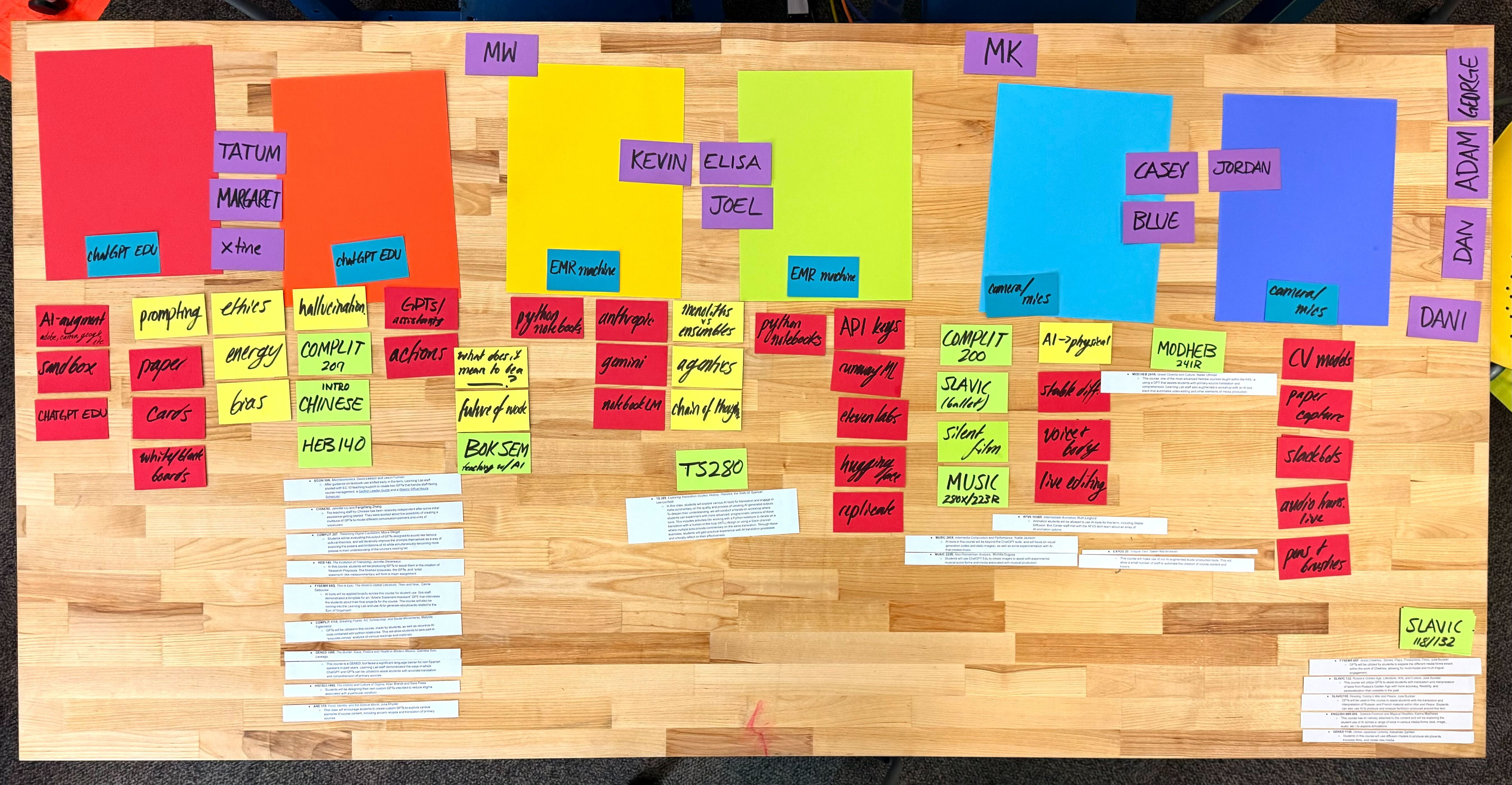

We designed the installation around our six colorful iMacs. We took stock of the team members available on the day, the tools we wanted to demonstrate, and the moves and demos we had developed while working with courses that use generative AI. We also identified the key topics we wanted to cover: AI ethics, prompt engineering, chain-of-thought reasoning, and agents.

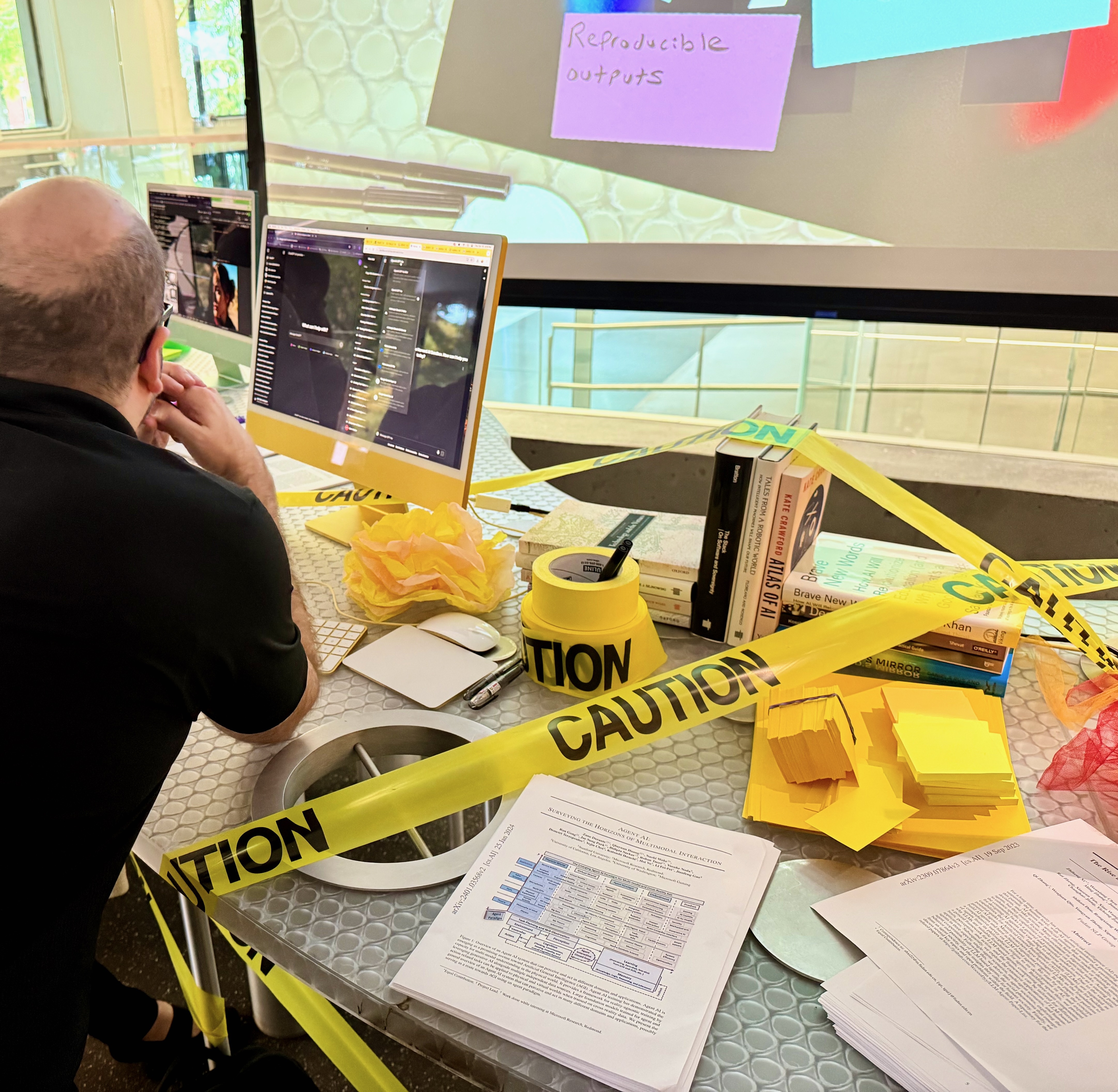

We gathered the necessary Learning Lab materials and transported them to the Science Center. At one point, Madeleine was seen carrying a piece of truss across Harvard Square, a fair picture of how hands-on the setup was.

## the event

The event was structured around six iMacs arranged in rainbow order, from red to violet, with each station offering a distinct and progressively more advanced experience with generative AI. Our goal was to guide attendees through the stations in a logical sequence while leaving room for those who wanted to go straight to specific topics. Madeleine welcomed participants as they arrived, ensuring a smooth entry process.

### red

Getting started with ChatGPT Edu.

Experiment and reflect. What does it get right and wrong? Factually? Ethically?

At the red station, Christine engaged participants with a "stop and reflect" approach. This station introduced users to basic generative AI tools, encouraging them to explore the technology's capabilities and limitations. Christine helped participants, particularly those new to AI, think about factual accuracy, ethical concerns, and potential biases in AI-generated outputs. Participants tested prompts related to their own areas of expertise, which put them in a good position to critically evaluate the system's responses.

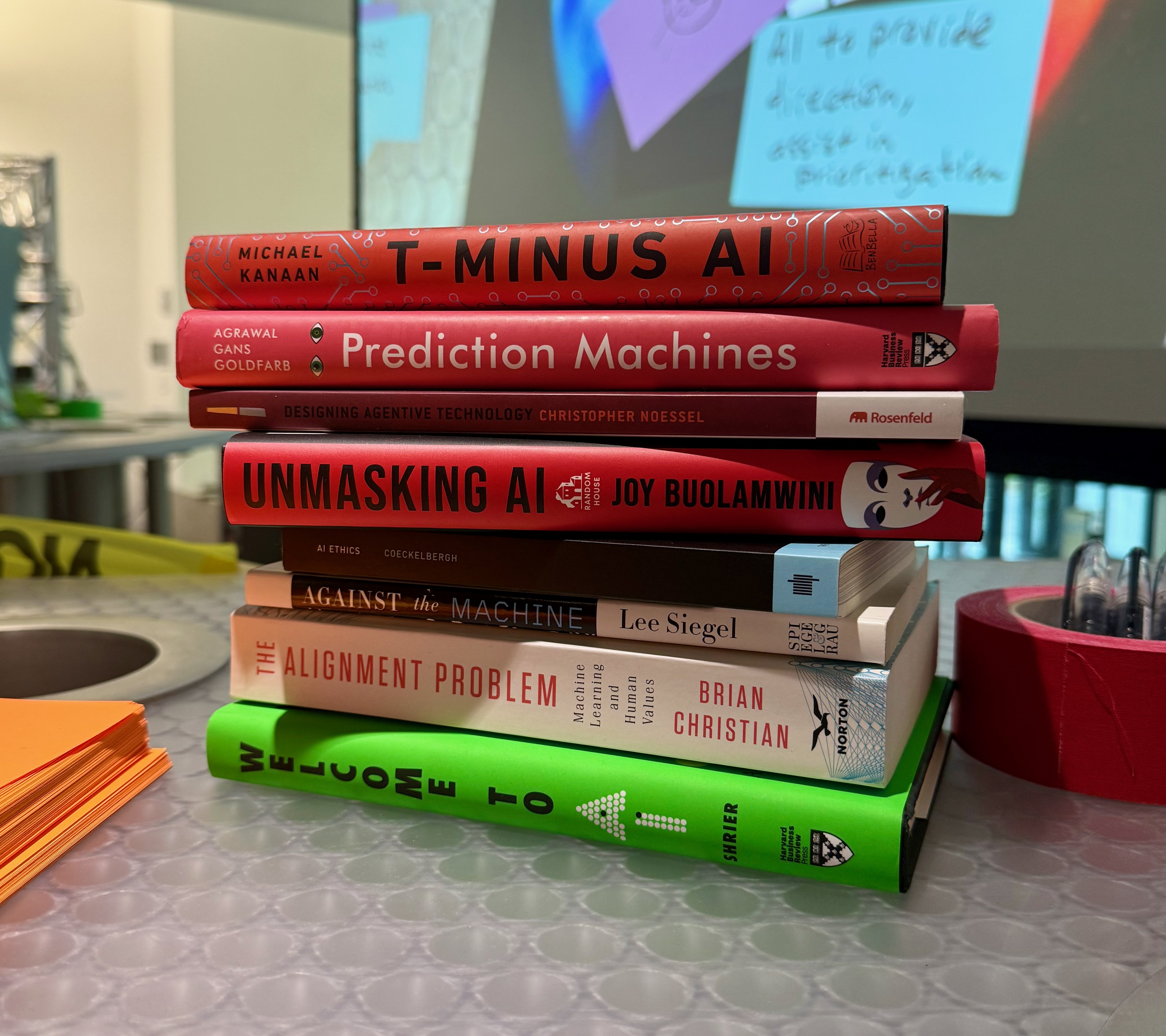

Resources at this station included books and academic papers on AI bias, providing a foundation for further discussion on these critical issues.

#### articles on bias

* [Fairness and Bias in Artificial Intelligence: A Brief Survey of Sources, Impacts, and Mitigation Strategies](https://www.mdpi.com/2413-4155/6/1/3)

* [How can we manage biases in artificial intelligence systems – A systematic literature review](https://www.sciencedirect.com/science/article/pii/S2667096823000125)

### orange

Next steps.

All-in-one teacher/student replacements vs ensemble of unitaskers (Rosey vs Roomba).

The orange station offered a more advanced engagement with AI, facilitated by a representative from the Academic Resource Center. Here, participants were encouraged to reflect on AI’s role in education, particularly how it can either enhance or impede the learning process. To illustrate this, we used a comparison between Rosey, the Jetsons' robot maid, and a Roomba, emphasizing AI's strength in performing discrete tasks rather than replicating human functions in their entirety. The station also introduced the concept of designing custom prompt-based tools (PBTs) for specific tasks, aligning with current research on the development of AI agents.
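The "Roomba, not Rosey" idea can be sketched in a few lines of Python. This is only an illustration, not the tooling used at the station: the `PromptBasedTool` class, the two example tools, and their instructions are all hypothetical. Each tool pairs one fixed instruction with one kind of input, and the resulting prompt string could be pasted into any chat model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptBasedTool:
    """A single-purpose 'unitasker': one fixed instruction, one kind of input."""

    name: str
    instructions: str

    def build_prompt(self, user_text: str) -> str:
        # The instructions never change; only the user's text varies.
        return f"{self.instructions.strip()}\n\n---\n{user_text.strip()}"


# Hypothetical tools: each does one discrete job (Roomba), not everything (Rosey).
THESIS_CHECKER = PromptBasedTool(
    name="thesis-checker",
    instructions=(
        "Identify the thesis statement in the draft below and say whether "
        "it is arguable. Do not rewrite the draft."
    ),
)
CITATION_FORMATTER = PromptBasedTool(
    name="citation-formatter",
    instructions="Reformat the references below in Chicago style. Change nothing else.",
)

TOOLS = {tool.name: tool for tool in (THESIS_CHECKER, CITATION_FORMATTER)}

prompt = TOOLS["thesis-checker"].build_prompt("  Social media is bad.  ")
print(prompt)
```

Keeping each tool narrow makes it easier to tell students exactly what the tool will and will not do for them.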

#### articles on agents

* [Agent AI: Surveying the Horizons of Multimodal Interaction](https://arxiv.org/abs/2401.03568)

* [The Rise and Potential of Large Language Model Based Agents: A Survey](https://arxiv.org/abs/2309.07864)

* [LLM Multi-Agent Systems: Challenges and Open Problems](https://arxiv.org/abs/2402.03578)

* [A Survey on Context-Aware Multi-Agent Systems: Techniques, Challenges and Future Directions](https://arxiv.org/abs/2402.01968)

### yellow

Getting better quality responses.

- comparing Custom GPT RAG to NotebookLM

- comparing models

- looking under the hood

At the yellow station, we introduced participants to more complex AI methodologies, such as chain-of-thought reasoning and retrieval-augmented generation (RAG). Comparing a custom GPT that uses retrieval with NotebookLM showed that NotebookLM was better at restricting its outputs to its source materials, which reduced the hallucinations often seen in other systems. This station, supported by academic readings on these topics, emphasized the importance of a cautious approach to AI. We also encouraged discussion of when to avoid AI altogether, advocating for traditional methods like pen-and-paper work or oral presentations where appropriate.
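For readers who want to look under the hood, here is a toy sketch of the retrieval-augmented pattern. It is not how ChatGPT or NotebookLM work internally: real systems use embeddings and far better ranking, while this version scores passages by simple word overlap. The passages, function names, and prompt wording are all made up for illustration. The point is the shape of the technique: retrieve relevant sources first, then instruct the model to answer only from them and to reason step by step.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, passages, k=2):
    """Return up to k passages sharing at least one word with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p)), i) for i, p in enumerate(passages)]
    # Highest overlap first; ties keep the original passage order.
    ranked = sorted(scored, key=lambda s: (-s[0], s[1]))
    return [passages[i] for score, i in ranked[:k] if score > 0]


def build_grounded_prompt(question, passages, k=2):
    """Assemble a prompt that restricts the model to the retrieved sources."""
    sources = retrieve(question, passages, k)
    numbered = "\n".join(f"[{n}] {p}" for n, p in enumerate(sources, start=1))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\n"
        "Think step by step, citing source numbers, then give a final answer."
    )


# Hypothetical course materials standing in for uploaded documents.
PASSAGES = [
    "The midterm exam is on October 24 and covers units one through three.",
    "Office hours are held on Tuesdays from 2 to 4 PM.",
    "Late problem sets lose ten percent per day.",
]

print(build_grounded_prompt("When is the midterm exam?", PASSAGES))
```

Only the midterm passage overlaps with the question, so it is the only source that reaches the prompt. Narrowing what the model sees is one reason source-grounded tools tend to stay closer to their materials.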

#### articles on chain of thought

* [Navigate through Enigmatic Labyrinth A Survey of Chain of Thought Reasoning: Advances, Frontiers and Future](https://arxiv.org/abs/2309.15402)

### green

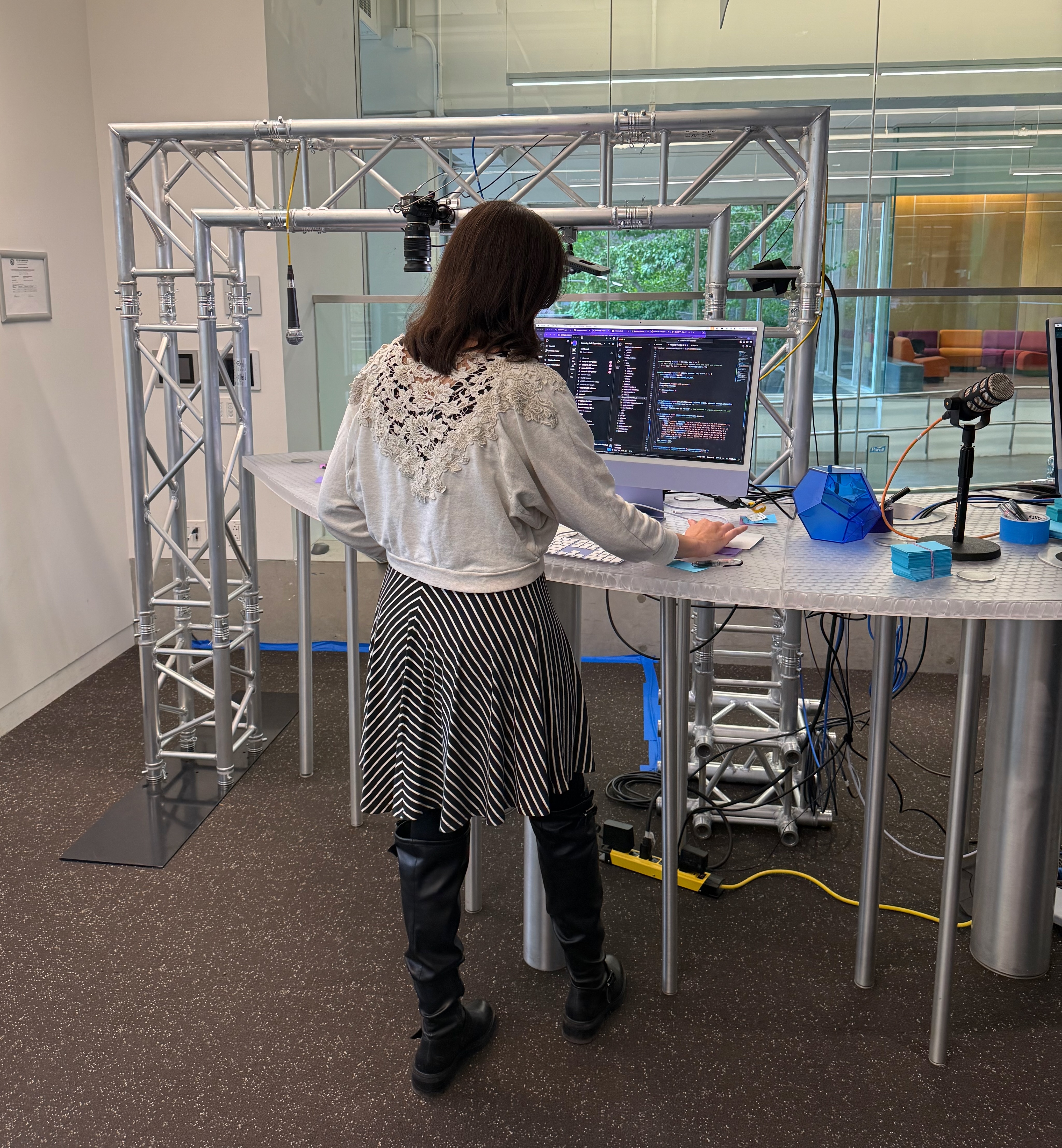

Python notebooks.

At the green station, we offered a hands-on technical experience, led by Elisa, one of our Specialized Learning Lab Undergraduate Fellows with coding and generative AI expertise. This station focused on using Python notebooks to explore the APIs offered by platforms such as OpenAI, Replicate, Google, and Anthropic. Elisa demonstrated how these developer tools offer more flexibility and control than consumer-facing AI products, letting participants experiment with recent advances in generative AI.
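As a rough illustration of the extra control an API gives you, the sketch below builds a request for OpenAI's Chat Completions endpoint using only the Python standard library. It is not one of the notebooks shown at the station. The helper names, the default model `gpt-4o-mini`, and the example prompts are assumptions; substitute whatever model your account can access. The `send` function is defined but not called here, so the cell runs without an API key.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, model="gpt-4o-mini", temperature=0.2, system=None):
    """Build the JSON payload; model and temperature are explicit choices."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return {"model": model, "temperature": temperature, "messages": messages}


def send(payload, api_key):
    """POST the payload and return the text of the first reply."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


payload = build_chat_request(
    "Explain photosynthesis in two sentences.",
    system="You are a concise biology tutor.",
)
# Inspect exactly what would be sent. With a key, call send(payload, api_key).
print(json.dumps(payload, indent=2))
```

Unlike a chat window, the notebook makes the system message, the model, and the temperature visible and editable, which is useful when comparing how different settings change a response.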

### blue

Audio capture and agents.

The blue station introduced participants to multimodal AI inputs. Using microphones, we captured participant speech, which was then transcribed and, in some cases, translated or converted into visual content. We also demonstrated how Slackbots could be programmed to perform tasks based on this input. Additionally, bots created news reports and offered counterarguments, allowing for interactive and creative AI engagement. This station highlighted the potential of AI to augment real-world experiences in innovative ways.
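The flow described above (capture speech, transcribe it, hand the transcript to one or more bots) can be sketched as a small pipeline. This is a sketch under stated assumptions: the transcriber and both bots are trivial stand-ins, not the speech-to-text service or Slackbots used at the event, and every name in it is hypothetical. In practice each stand-in would be replaced by a call to a real model or service.

```python
from typing import Callable, Dict

Transcriber = Callable[[bytes], str]
Bot = Callable[[str], str]


def run_pipeline(audio: bytes, transcribe: Transcriber, bots: Dict[str, Bot]) -> Dict[str, str]:
    """Transcribe once, then give the same transcript to every bot."""
    transcript = transcribe(audio)
    results = {"transcript": transcript}
    for name, bot in bots.items():
        results[name] = bot(transcript)
    return results


# Stand-ins so the sketch runs without a microphone or any API.
def fake_transcribe(audio: bytes) -> str:
    # Pretend the audio bytes are already text.
    return audio.decode("utf-8")


def news_bot(transcript: str) -> str:
    return f'BREAKING: open house attendee says "{transcript}"'


def counterargument_bot(transcript: str) -> str:
    return f'Counterpoint: what if the opposite of "{transcript}" were true?'


results = run_pipeline(
    b"AI will change how we grade essays",
    transcribe=fake_transcribe,
    bots={"news": news_bot, "counterargument": counterargument_bot},
)
for name, text in results.items():
    print(f"{name}: {text}")
```

Because the transcriber and bots are passed in as plain functions, a translation or image-generation step could be added in the same way without changing the pipeline itself.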

### violet

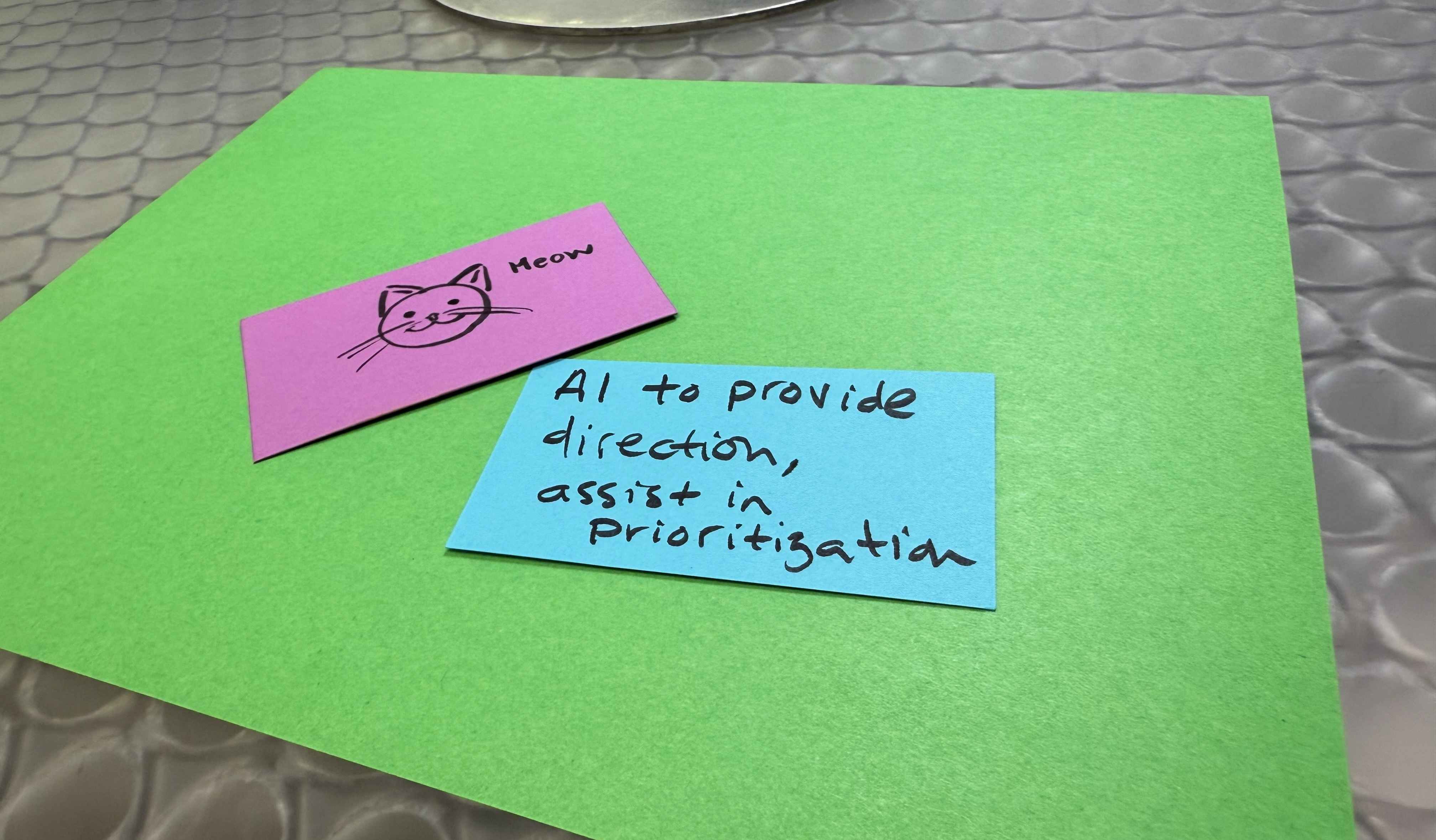

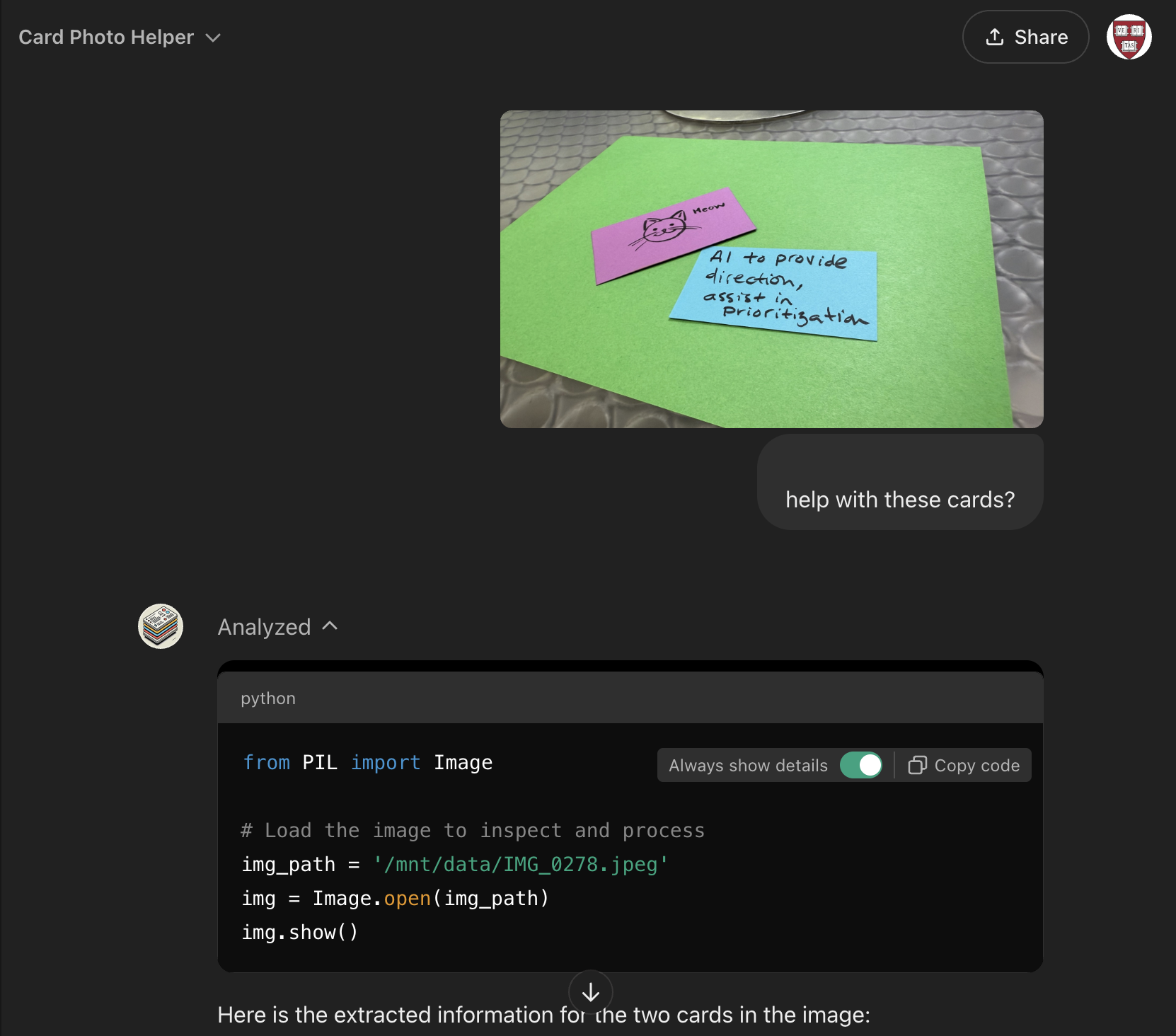

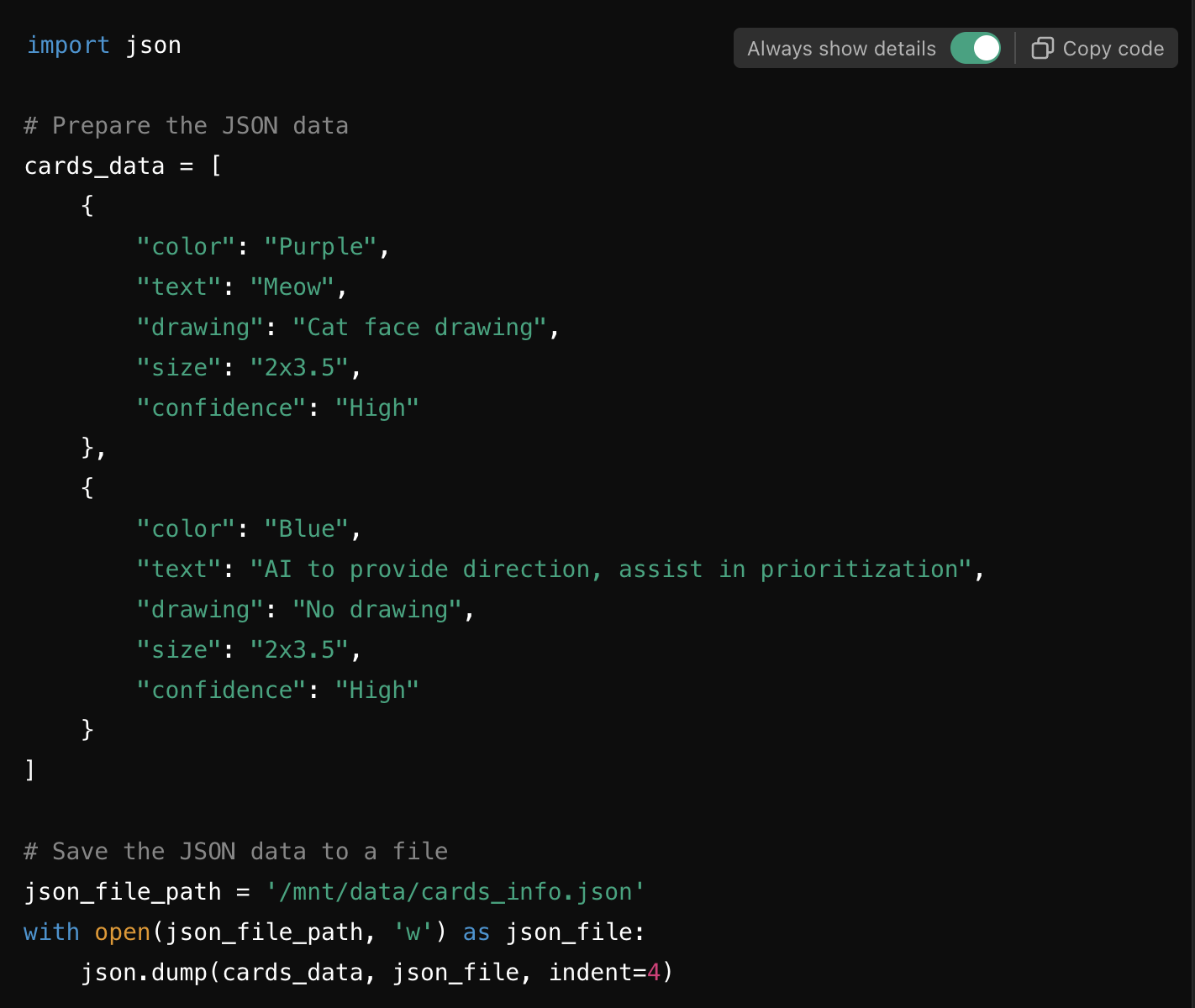

Video capture and image processing.

At the violet station, we demonstrated the integration of AI into video capture and low-code/no-code solutions. Participants wrote or drew on index cards, which we then converted into structured data using a custom GPT interface. This example showcased how AI can be applied in educational settings without requiring extensive technical knowledge, providing a practical tool for both instructors and students.
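Once a model has read a card, its output still needs to be checked before it is treated as data. The sketch below assumes, purely for illustration, that the model was asked to return JSON with `title`, `body`, and optional `tags` fields. The field names, the `IndexCard` class, and the sample output are hypothetical and are not the schema used at the station.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class IndexCard:
    title: str
    body: str
    tags: List[str] = field(default_factory=list)


def parse_card(model_output: str) -> IndexCard:
    """Validate the model's JSON output and normalize it into an IndexCard."""
    data = json.loads(model_output)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = {"title", "body"} - data.keys()
    if missing:
        raise ValueError(f"card is missing fields: {sorted(missing)}")
    # Normalize tags so "Question" and "question " count as the same tag.
    tags = [str(tag).strip().lower() for tag in data.get("tags", [])]
    return IndexCard(
        title=str(data["title"]).strip(),
        body=str(data["body"]).strip(),
        tags=tags,
    )


# Hypothetical model output for one handwritten card.
sample = '{"title": " Office hours ", "body": "Can AI help me prep?", "tags": ["Question", "AI"]}'
card = parse_card(sample)
print(card)
```

Rejecting incomplete output early keeps a misread card from slipping into a spreadsheet or database unnoticed.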

## the aftermath

After the event, we packed up and were back at the Learning Lab by 5:30 PM. The open house gave us valuable insight into how to run similar events in the future, both in structure and in participant engagement. Going forward, we plan to refine our approach to balance flexibility with structured guidance, so that complex AI concepts remain accessible and relevant to a wide range of audiences.