# Spaces (WIP)

> This is a WIP README for a new repo I'm creating based on `gym.spaces`, but without Gym as a requirement.

Simple, Expressive Structural Interfaces in Python. 100% Backward-compatible with gym.spaces.

Spaces are used to specify the interface between algorithms and the environments or problems they can be applied to. They have played a key role in the standardization of RL research.

Here, we push them further and kick things up a notch.

## 100% Backward-Compatible with Gym

Spaces can be used anywhere a gym.Space is expected!

```python
# From this:
from gym.spaces import Box

# to this:
from spaces import Box
```

### Installation:

```console
$ pip install spaces
```

------

## Added Features:

### Torch / JAX-compatible Spaces

```python
>>> import torch
>>> from spaces import Box
>>> input_space = Box(0, 1, shape=(4,), dtype=torch.float32, device="cuda")
>>> input_space.sample()
tensor([0.9674, 0.4693, 0.0800, 0.0476], device='cuda:0')
```

```python
>>> import jax.numpy as jnp
>>> from spaces import Box
>>> input_space = Box(0, 1, shape=(4,), dtype=jnp.float32)
>>> input_space.sample()
DeviceArray([0.9653214 , 0.22515893, 0.63302994, 0.29638183], dtype=float32)
```

## Added Spaces

- ImageSpace!

```python
>>> import numpy as np
>>> from spaces import ImageSpace
>>> image_batch = ImageSpace(0, 1, (32, 3, 256, 256), dtype=np.float64)
>>> image_batch
ImageSpace(0.0, 1.0, (32, 3, 256, 256), float64)
>>> image_batch.is_channels_first
True
>>> image_batch.batch_size
32
>>> image_batch.channels
3
>>> image_batch.height, image_batch.width
(256, 256)
```
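All of these properties can be read off the shape. Below is a minimal sketch of how that could work. It assumes a hypothetical heuristic that the library has not confirmed: an axis of size 1, 3 or 4 directly after the optional batch axis is treated as the channel axis. `ImageShape` is an illustrative name, not part of the library:

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical heuristic: channel axes usually have 1 (grayscale), 3 (RGB) or 4 (RGBA) entries.
CHANNEL_SIZES = (1, 3, 4)


@dataclass(frozen=True)
class ImageShape:
    """Illustrative stand-in for the shape-derived properties of ImageSpace."""

    shape: Tuple[int, ...]

    @property
    def batch_size(self) -> Optional[int]:
        # A 4-D shape is assumed to start with a batch axis.
        return self.shape[0] if len(self.shape) == 4 else None

    @property
    def _image_dims(self) -> Tuple[int, ...]:
        # The (C, H, W) or (H, W, C) part of the shape, without the batch axis.
        return self.shape[1:] if len(self.shape) == 4 else self.shape

    @property
    def is_channels_first(self) -> bool:
        return self._image_dims[0] in CHANNEL_SIZES

    @property
    def channels(self) -> int:
        dims = self._image_dims
        return dims[0] if self.is_channels_first else dims[-1]

    @property
    def height(self) -> int:
        dims = self._image_dims
        return dims[1] if self.is_channels_first else dims[0]

    @property
    def width(self) -> int:
        dims = self._image_dims
        return dims[2] if self.is_channels_first else dims[1]


shape = ImageShape((32, 3, 256, 256))
print(shape.is_channels_first, shape.batch_size, shape.channels)  # True 32 3
print(shape.height, shape.width)  # 256 256
```

A size-based heuristic is ambiguous for tiny images (e.g. a 3x3 RGB image), so a real implementation would probably let the user state the layout explicitly.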

- <details>

<summary>TypedDicts!</summary>

```python
>>> import numpy as np
>>> from spaces import Box, TypedDictSpace
>>> class VisionSpace(TypedDictSpace):
...     x: Box = Box(0, 1, (4,), dtype=np.float64)
...
>>> s = VisionSpace()
>>> s
VisionSpace(x:Box(0.0, 1.0, (4,), float64))
>>> s.seed(123)
>>> s.sample()
{'x': array([0.70787616, 0.3698764 , 0.29010696, 0.10647454])}
```

</details>

- <details>

<summary>NamedTuples!</summary>

```python
>>> import numpy as np
>>> from spaces import Box, Discrete, NamedTupleSpace
>>> class DatasetItemSpace(NamedTupleSpace):
...     x: Box = Box(0, 1, shape=(4,), dtype=np.float64)
...     y: Discrete = Discrete(10)
...
>>> s = DatasetItemSpace()
>>> s
DatasetItemSpace(x=Box(0.0, 1.0, (4,), float64), y=Discrete(10))
>>> s.seed(123)
>>> s.sample()
DatasetItem(x=array([0.70787616, 0.3698764 , 0.29010696, 0.10647454]), y=6)
```

</details>
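Both `TypedDictSpace` and `NamedTupleSpace` declare their sub-spaces as annotated class attributes. One way this could work is to collect the annotated defaults in `__init_subclass__` and then sample each field independently. The sketch below uses hypothetical minimal stand-ins (`DiscreteSketch`, `DictSpaceSketch`, `ItemSpace`), not the library's real classes:

```python
import random
from typing import Any, Dict, Optional


class DiscreteSketch:
    """Hypothetical stand-in for Discrete(n): the integers 0..n-1."""

    def __init__(self, n: int):
        self.n = n
        self.rng = random.Random()

    def seed(self, seed: Optional[int] = None) -> None:
        self.rng.seed(seed)

    def sample(self) -> int:
        return self.rng.randrange(self.n)

    def contains(self, x: Any) -> bool:
        return isinstance(x, int) and 0 <= x < self.n


class DictSpaceSketch:
    """Hypothetical base class: annotated class attributes become named sub-spaces."""

    _fields = {}  # filled in for each subclass by __init_subclass__

    def __init_subclass__(cls, **kwargs):
        super().__init_subclass__(**kwargs)
        # Keep the annotated attributes that also have a default value (the sub-space).
        cls._fields = {
            name: getattr(cls, name)
            for name in cls.__annotations__
            if hasattr(cls, name)
        }

    def seed(self, seed: Optional[int] = None) -> None:
        # Derive one seed per field so that sampling is reproducible.
        rng = random.Random(seed)
        for space in self._fields.values():
            space.seed(rng.randrange(2**32))

    def sample(self) -> Dict[str, Any]:
        return {name: space.sample() for name, space in self._fields.items()}

    def contains(self, x: Dict[str, Any]) -> bool:
        return x.keys() == self._fields.keys() and all(
            space.contains(x[name]) for name, space in self._fields.items()
        )


class ItemSpace(DictSpaceSketch):
    label: DiscreteSketch = DiscreteSketch(10)
    rating: DiscreteSketch = DiscreteSketch(5)


s = ItemSpace()
s.seed(123)
item = s.sample()
print(item, s.contains(item))  # the sampled dict, then True
```

A NamedTuple variant could reuse the same field collection and return `collections.namedtuple` instances from `sample()` instead of dicts.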

### Filtering!

You can describe arbitrarily-specific spaces using predicates:

```python
>>> from spaces import Discrete
>>> even_integers = Discrete(10).where(lambda n: n % 2 == 0)
>>> even_integers.sample()
6
```

```python
>>> import numpy as np
>>> from spaces import Box, FilteredSpace
>>> unit_circle = Box(-1, 1, shape=(2,), dtype=np.float64, seed=123).where(
...     lambda xy: (xy ** 2).sum() <= 1
... )
>>> np.array((0.1, 0.2)) in unit_circle
True
>>> np.array((1, 1)) in unit_circle
False
>>> unit_circle.sample()
array([ 0.36470373, -0.89235796])
>>> def hypersphere(radius: float, dimensions: int) -> FilteredSpace[np.ndarray]:
...     # A point lies inside the ball when its squared norm is at most radius**2.
...     return Box(-radius, +radius, shape=(dimensions,)).where(
...         lambda v: (v ** 2).sum() <= radius**2
...     )
...
>>> unit_sphere = hypersphere(radius=1, dimensions=3)
```
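A `where` filter can be implemented with rejection sampling. Membership is the base space's membership plus the predicate, and `sample()` keeps drawing from the base space until the predicate accepts a draw. The sketch below assumes this strategy; the real library may do something smarter. `BoxSketch` and `FilteredSketch` are hypothetical stand-ins for `Box` and `FilteredSpace`:

```python
import random
from typing import Callable, Optional, Sequence, Tuple

Point = Tuple[float, ...]


class BoxSketch:
    """Hypothetical stand-in for Box(low, high, shape=(dims,)) with a uniform prior."""

    def __init__(self, low: float, high: float, dims: int, seed: Optional[int] = None):
        self.low, self.high, self.dims = low, high, dims
        self.rng = random.Random(seed)

    def sample(self) -> Point:
        return tuple(self.rng.uniform(self.low, self.high) for _ in range(self.dims))

    def __contains__(self, x: Sequence[float]) -> bool:
        return len(x) == self.dims and all(self.low <= v <= self.high for v in x)

    def where(self, predicate: Callable[[Point], bool]) -> "FilteredSketch":
        return FilteredSketch(self, predicate)


class FilteredSketch:
    """The points of `base` that satisfy `predicate`."""

    def __init__(self, base: BoxSketch, predicate: Callable[[Point], bool], max_tries: int = 10_000):
        self.base, self.predicate, self.max_tries = base, predicate, max_tries

    def __contains__(self, x: Sequence[float]) -> bool:
        return x in self.base and self.predicate(tuple(x))

    def sample(self) -> Point:
        # Rejection sampling: redraw from the base space until the predicate accepts.
        for _ in range(self.max_tries):
            x = self.base.sample()
            if self.predicate(x):
                return x
        raise RuntimeError("predicate rejected every sample; the filtered space may be empty")


def hypersphere(radius: float, dimensions: int, seed: Optional[int] = None) -> FilteredSketch:
    # A point lies in the ball when its squared norm is at most radius**2.
    box = BoxSketch(-radius, radius, dimensions, seed=seed)
    return box.where(lambda v: sum(c * c for c in v) <= radius**2)


unit_circle = hypersphere(1.0, 2, seed=123)
print((0.1, 0.2) in unit_circle, (1, 1) in unit_circle)  # True False
```

Rejection sampling gets slow when the predicate accepts only a small fraction of the base space. For example, a 10-dimensional unit ball fills only about 0.25% of its bounding box, which is why the sketch caps the number of attempts with `max_tries`.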

### Spaces as first-class objects!

Spaces can be transformed with arithmetic operators (addition, multiplication, division, etc.):

```python
>>> from spaces import Box
>>> space = Box(0, 1)
>>> space + 1
Box(1, 2, shape=(), dtype=np.float32)
>>> space * 10
Box(0, 10, shape=(), dtype=np.float32)
>>> space + space
Box(0, 2, shape=(), dtype=np.float32)
```
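For a scalar Box, these operations only need to transform the bounds. Here is a minimal sketch with a hypothetical `ScalarBox` class, assuming the semantics shown above: adding or multiplying by a constant shifts or scales both bounds, and adding two spaces adds their lower and upper bounds. The last rule gives the support of the sum. If the two operands are sampled independently, the sum of two Uniform(0, 1) draws is triangular on [0, 2], not uniform.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class ScalarBox:
    """Hypothetical scalar Box: the closed interval [low, high]."""

    low: float
    high: float

    def __add__(self, other: Union["ScalarBox", float]) -> "ScalarBox":
        if isinstance(other, ScalarBox):
            # A value from each space sums to something in [low1 + low2, high1 + high2].
            return ScalarBox(self.low + other.low, self.high + other.high)
        return ScalarBox(self.low + other, self.high + other)

    __radd__ = __add__  # so that 1 + space and sum([...]) also work

    def __mul__(self, factor: float) -> "ScalarBox":
        # A negative factor swaps which bound is the lower one.
        a, b = self.low * factor, self.high * factor
        return ScalarBox(min(a, b), max(a, b))

    __rmul__ = __mul__

    def __truediv__(self, divisor: float) -> "ScalarBox":
        return self * (1 / divisor)


space = ScalarBox(0, 1)
print(space + 1)      # ScalarBox(low=1, high=2)
print(space * 10)     # ScalarBox(low=0, high=10)
print(space + space)  # ScalarBox(low=0, high=2)
```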

Fancier operations, such as multiplying or dividing two spaces, produce an output space that samples from each source space and then multiplies (or divides) those samples before returning the result!

```python
>>> import numpy as np
>>> from spaces import Box
>>> unit_uniforms = [Box(0, 1) for _ in range(5)]
>>> product_of_uniforms = np.prod(unit_uniforms)
>>> product_of_uniforms
ProductSpace([
    Box(0, 1),
    ...
    Box(0, 1),
])
>>> product_of_uniforms.sample()
0.013806694
>>> product_of_uniforms.sample()
0.25681835
```
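A product space like this can be sketched as a container that keeps its factor spaces and, on each `sample()`, draws from every factor and multiplies the draws. The sketch assumes uniform scalar factors that share a seeded generator. `UniformSketch` and `ProductSketch` are hypothetical names:

```python
import functools
import operator
import random
from typing import List


class UniformSketch:
    """Hypothetical scalar Box(low, high) with a uniform prior."""

    def __init__(self, low: float, high: float, rng: random.Random):
        self.low, self.high, self.rng = low, high, rng

    def sample(self) -> float:
        return self.rng.uniform(self.low, self.high)

    def __mul__(self, other) -> "ProductSketch":
        return ProductSketch([self]) * other


class ProductSketch:
    """Samples every factor space, then returns the product of the samples."""

    def __init__(self, factors: List):
        self.factors = list(factors)

    def __mul__(self, other) -> "ProductSketch":
        # Flatten nested products so the factor list stays one level deep.
        extra = other.factors if isinstance(other, ProductSketch) else [other]
        return ProductSketch(self.factors + extra)

    def sample(self) -> float:
        result = 1.0
        for factor in self.factors:
            result *= factor.sample()
        return result


rng = random.Random(0)
unit_uniforms = [UniformSketch(0, 1, rng) for _ in range(5)]
# functools.reduce chains the * operator over the list, multiplying the spaces one by one.
product_of_uniforms = functools.reduce(operator.mul, unit_uniforms)
print(len(product_of_uniforms.factors))  # 5
print(product_of_uniforms.sample())
```

The samples are not uniform. The product of five independent Uniform(0, 1) draws has mean (1/2)^5 = 1/32, so small values like the ones in the example above are typical.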

_**This means it is also possible to pass a space through a neural network!**_

### **Envs** (<-- big deal!)

Now you can even directly specify the types of environments that your agents / models can be applied to!

```python
>>> import gym
>>> from spaces import Envs  # Envs is a Space[gym.Env]
>>> discrete_action_envs = Envs().where(lambda env: isinstance(env.action_space, gym.spaces.Discrete))
>>> gym.make("CartPole-v1") in discrete_action_envs
True
>>> gym.make("Pendulum-v1") in discrete_action_envs
False
>>> discrete_action_envs.sample()
<TimeLimit<OrderEnforcing<MountainCarEnv<MountainCar-v0>>>>
```
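One way to build a space of environments is to back it with a finite catalog of constructors, similar to gym's registry. Membership then means "is an environment and passes the filters", and sampling picks one of the catalog entries that passes. The sketch below is only an illustration under that assumption. So that it runs without gym, `FakeEnv` describes its action space with a plain string, and `EnvSpaceSketch` and the catalog entries are hypothetical stand-ins:

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class FakeEnv:
    """Stand-in for gym.Env: only the attribute the predicate needs."""

    name: str
    action_space: str  # simplified: "Discrete" or "Box" instead of a real space


class EnvSpaceSketch:
    """Hypothetical space of environments, backed by a catalog of constructors."""

    def __init__(self, catalog: Dict[str, Callable[[], FakeEnv]],
                 predicate: Callable[[FakeEnv], bool] = lambda env: True,
                 seed: Optional[int] = None):
        self.catalog, self.predicate = catalog, predicate
        self.rng = random.Random(seed)

    def where(self, predicate: Callable[[FakeEnv], bool]) -> "EnvSpaceSketch":
        # Chain with the existing predicate, and share the random generator.
        previous = self.predicate
        filtered = EnvSpaceSketch(self.catalog, lambda env: previous(env) and predicate(env))
        filtered.rng = self.rng
        return filtered

    def __contains__(self, env: object) -> bool:
        return isinstance(env, FakeEnv) and self.predicate(env)

    def sample(self) -> FakeEnv:
        # Build every catalog entry, keep those in the space, and pick one at random.
        matching = [env for env in (make() for make in self.catalog.values()) if env in self]
        if not matching:
            raise ValueError("no environment in the catalog satisfies the predicate")
        return self.rng.choice(matching)


catalog = {
    "CartPole-v1": lambda: FakeEnv("CartPole-v1", "Discrete"),
    "MountainCar-v0": lambda: FakeEnv("MountainCar-v0", "Discrete"),
    "Pendulum-v1": lambda: FakeEnv("Pendulum-v1", "Box"),
}
envs = EnvSpaceSketch(catalog, seed=0)
discrete_action_envs = envs.where(lambda env: env.action_space == "Discrete")
print(catalog["CartPole-v1"]() in discrete_action_envs)  # True
print(catalog["Pendulum-v1"]() in discrete_action_envs)  # False
print(discrete_action_envs.sample().name)  # either CartPole-v1 or MountainCar-v0
```

Instantiating every environment in `sample()` is the simplest correct approach, but it would be slow for a registry as large as gym's. Caching the instances, or filtering on registry metadata before instantiating, would be natural refinements.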

### Datasets

```python
>>> from spaces import Datasets, ImageSpace, Discrete, Tuple
>>> dataset_space = Datasets(
...     item=Tuple((ImageSpace(low=0, high=255, shape=(3, 32, 32)), Discrete(10))),
...     length=lambda l: l <= 50_000,
... )
>>> from torchvision.datasets import FakeData
>>> fake_dataset = FakeData(
...     size=1000, image_size=(3, 32, 32), num_classes=10,
... )
>>> fake_dataset in dataset_space
True
>>> from torchvision.datasets import CIFAR10
>>> CIFAR10("data") in dataset_space  # 50,000 training images of shape (3, 32, 32)
True
>>> from torchvision.datasets import MNIST
>>> MNIST("data") in dataset_space  # 60,000 images of shape (1, 28, 28)
False
```
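Membership in a space of datasets can be reduced to two checks: the length predicate, and membership of the items in the item space. The sketch below assumes map-style datasets (anything with `__len__` and `__getitem__`) and, as a speed trade-off, checks only a random subset of items by default. `DatasetSpaceSketch` and `is_item` are hypothetical, and the items are simplified to `(image shape, label)` pairs instead of real images:

```python
import random
from typing import Any, Callable, Optional, Sequence


class DatasetSpaceSketch:
    """Hypothetical space of map-style datasets (anything with __len__ and __getitem__)."""

    def __init__(self, item: Callable[[Any], bool], length: Callable[[int], bool],
                 check_items: Optional[int] = 100, seed: Optional[int] = None):
        self.item, self.length = item, length
        self.check_items = check_items  # None means: check every item
        self.rng = random.Random(seed)

    def __contains__(self, dataset: Sequence) -> bool:
        n = len(dataset)
        if not self.length(n):
            return False
        # Checking every item can be slow, so by default only a random subset is checked.
        if self.check_items is None or self.check_items >= n:
            indices = range(n)
        else:
            indices = self.rng.sample(range(n), self.check_items)
        return all(self.item(dataset[i]) for i in indices)


def is_item(x: Any) -> bool:
    # Stand-in item check on (image shape, label) pairs: a 3x32x32 image and a label in [0, 10).
    shape, label = x
    return shape == (3, 32, 32) and label in range(10)


dataset_space = DatasetSpaceSketch(item=is_item, length=lambda n: n <= 50_000, seed=0)
cifar_like = [((3, 32, 32), i % 10) for i in range(1_000)]
mnist_like = [((1, 28, 28), i % 10) for i in range(1_000)]
print(cifar_like in dataset_space, mnist_like in dataset_space)  # True False
```

Checking a subset keeps `in` fast for large datasets like CIFAR10, but it can miss a few malformed items. Passing `check_items=None` forces a full scan.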

### Unions!

```python
>>> from spaces import Discrete
>>> odd_integers = Discrete().where(lambda n: n % 2 == 1)
>>> greater_than_5 = Discrete().where(lambda n: n > 5)
>>> union = odd_integers | greater_than_5
>>> union
UnionSpace([
    Discrete().where(lambda n: n % 2 == 1),
    Discrete().where(lambda n: n > 5),
])
>>> union.sample()
3449325481
```
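A union space can be sketched with two rules: a value belongs to the union if any member space contains it, and a sample comes from a member space chosen at random. The sketch assumes bounded integer ranges (`n=1000`), because an unbounded `Discrete()` cannot be sampled uniformly. `PredicateSpace` and `UnionSketch` are hypothetical names:

```python
import random
from typing import Any, Callable, List, Optional


class PredicateSpace:
    """Hypothetical integer space: the values in [0, n) that satisfy a predicate."""

    def __init__(self, n: int, predicate: Callable[[int], bool] = lambda x: True):
        self.n, self.predicate = n, predicate

    def __contains__(self, x: Any) -> bool:
        return isinstance(x, int) and 0 <= x < self.n and self.predicate(x)

    def sample(self, rng: random.Random) -> int:
        while True:  # rejection sampling; assumes the predicate accepts some value
            x = rng.randrange(self.n)
            if self.predicate(x):
                return x

    def __or__(self, other) -> "UnionSketch":
        return UnionSketch([self, other])


class UnionSketch:
    def __init__(self, spaces: List, seed: Optional[int] = None):
        self.spaces = spaces
        self.rng = random.Random(seed)

    def __contains__(self, x: Any) -> bool:
        # A value belongs to the union if any member space contains it.
        return any(x in space for space in self.spaces)

    def sample(self) -> int:
        # Pick a member space uniformly, then sample from it.
        return self.rng.choice(self.spaces).sample(self.rng)


odd_integers = PredicateSpace(1000, lambda n: n % 2 == 1)
greater_than_5 = PredicateSpace(1000, lambda n: n > 5)
union = odd_integers | greater_than_5
print(3 in union, 4 in union, 8 in union)  # True False True
```

Choosing the member space uniformly is the simplest option, but it makes values shared by several members (here, odd numbers above 5) more likely than others.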

```python
from typing import TypeVar

from spaces import Space

A = TypeVar("A")
B = TypeVar("B")


def union(space_a: Space[A], *other_spaces: Space[B]) -> Space[A | B]:
    ...

# TODO: For boxes, maybe we could just return Box(low=np.minimum(a.low, b.low), high=np.maximum(a.high, b.high))?
# However, that isn't great if there are gaps between the boxes! Maybe we could check for overlap before doing this?
# TODO: Given a bunch of Box spaces, compare the lowest maximum and the highest minimum to check for a non-covered region?
```
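In one dimension, the overlap check from the TODO can be made exact. Sort the intervals by lower bound and look for a gap between the furthest upper bound reached so far and the next lower bound. For two intervals this reduces to the comparison in the TODO: they overlap exactly when the highest minimum is at most the lowest maximum. `merge_if_covered` is a hypothetical helper. For multi-dimensional Boxes, checking each dimension separately is not enough: [0,1]×[0,1] and [1,2]×[1,2] cover [0,2] in both coordinates but leave [0,1]×[1,2] uncovered.

```python
from typing import List, Optional, Tuple

Interval = Tuple[float, float]


def merge_if_covered(intervals: List[Interval]) -> Optional[Interval]:
    """Return (lowest low, highest high) if the closed intervals leave no gap, else None."""
    if not intervals:
        return None
    ordered = sorted(intervals)  # sort by lower bound (then upper bound)
    low, reach = ordered[0]
    for next_low, next_high in ordered[1:]:
        if next_low > reach:
            return None  # the points between reach and next_low are not covered
        reach = max(reach, next_high)
    return (low, reach)


print(merge_if_covered([(0, 1), (0.5, 2), (1.5, 3)]))  # (0, 3)
print(merge_if_covered([(0, 1), (2, 3)]))  # None
```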

### Transformations

This library comes with a buttload of transformation functions that can directly be applied to spaces, not just their values!

For instance:

```python
>>> import numpy as np
>>> from spaces import ImageSpace
>>> from spaces.transforms import channels_first
>>> image_space = ImageSpace(0, 255, (32, 32, 3), np.uint8)
>>> channels_first(image_space)
ImageSpace(0, 255, (3, 32, 32), uint8)
```

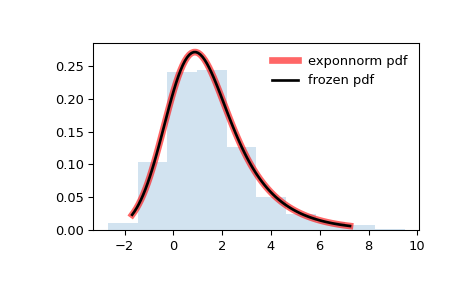
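At the space level, a transform like `channels_first` only has to rewrite the space's metadata. Moving an axis changes the shape but not the range of values. Below is a minimal sketch, assuming a channels-last input of shape (H, W, C) or (N, H, W, C). `ImageSpaceSketch` is a hypothetical stand-in that stores the dtype as a string:

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class ImageSpaceSketch:
    """Hypothetical stand-in for ImageSpace: bounds, shape and dtype name."""

    low: int
    high: int
    shape: Tuple[int, ...]
    dtype: str


def channels_first(space: ImageSpaceSketch) -> ImageSpaceSketch:
    """Move the trailing channel axis in front of height and width.

    Assumes a channels-last (H, W, C) or (N, H, W, C) shape; bounds and dtype are unchanged.
    """
    *leading, height, width, channels = space.shape
    return replace(space, shape=(*leading, channels, height, width))


image_space = ImageSpaceSketch(0, 255, (32, 32, 3), "uint8")
print(channels_first(image_space).shape)  # (3, 32, 32)
```

The matching value-level transform would move the same axis, for example `np.moveaxis(image, -1, -3)` for NumPy arrays, so that samples of the transformed space line up with transformed samples of the original space.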

### Rich Priors

You can use much more than just uniform priors for your spaces!

For example, any of the [scipy distributions](https://docs.scipy.org/doc/scipy/reference/stats.html) can be used as the prior of a space:

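```python
>>> import numpy as np
>>> from scipy.stats import exponnorm
>>> from spaces import Box
>>> # exponnorm needs its shape parameter K, so pass a frozen distribution.
>>> space = Box(-np.inf, np.inf, prior=exponnorm(K=1.5))
>>> histogram = space.hist()
>>> histogram.show()
```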
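Sampling a Box from an arbitrary prior can be sketched as "draw from the prior, reject draws outside the bounds", which truncates the prior to the Box. To stay in the standard library, the sketch does not call scipy. Instead it builds the exponentially modified normal directly as a standard normal plus an independent exponential with mean `K`, which is how scipy documents `exponnorm` with `loc=0, scale=1`. `PriorBox`, `exponnorm_prior` and `K=1.5` are illustrative assumptions:

```python
import math
import random
from typing import Callable, Optional


class PriorBox:
    """Hypothetical scalar Box whose samples come from an arbitrary prior."""

    def __init__(self, low: float, high: float,
                 prior: Callable[[random.Random], float],
                 seed: Optional[int] = None, max_tries: int = 10_000):
        self.low, self.high, self.prior = low, high, prior
        self.rng = random.Random(seed)
        self.max_tries = max_tries

    def sample(self) -> float:
        # Truncate the prior to [low, high] by rejection; infinite bounds never reject.
        for _ in range(self.max_tries):
            x = self.prior(self.rng)
            if self.low <= x <= self.high:
                return x
        raise RuntimeError("the prior puts (almost) no mass inside [low, high]")


def exponnorm_prior(K: float) -> Callable[[random.Random], float]:
    """Exponentially modified normal: N(0, 1) plus an exponential with mean K (rate 1/K)."""
    return lambda rng: rng.gauss(0.0, 1.0) + rng.expovariate(1.0 / K)


space = PriorBox(-math.inf, math.inf, prior=exponnorm_prior(K=1.5), seed=0)
samples = [space.sample() for _ in range(10_000)]
print(sum(samples) / len(samples))  # close to the theoretical mean 0 + K = 1.5
```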

### Higher-Order spaces

Spaces of Spaces!

- Discretes: Space of Discrete spaces

- Boxes: Space of Box spaces

- Tuples: Space of Tuple spaces

- Dicts: Space of Dict spaces

- Envs: Space of gym environments

- Datasets: Space of datasets
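A higher-order space is a space whose elements are themselves spaces: `x in space` checks that `x` is a space of the right kind, and `sample()` returns a new space. Below is a minimal sketch of `Discretes`. It assumes the space is parameterized by a hypothetical upper bound `max_n` on the number of values (the document does not specify its parameters). `DiscreteSketch` is a stand-in for `Discrete`:

```python
import random
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class DiscreteSketch:
    """Hypothetical minimal Discrete(n) space: the integers 0..n-1."""

    n: int


class Discretes:
    """Hypothetical space whose elements are Discrete spaces with 1 <= n <= max_n."""

    def __init__(self, max_n: int, seed: Optional[int] = None):
        self.max_n = max_n
        self.rng = random.Random(seed)

    def __contains__(self, space: Any) -> bool:
        return isinstance(space, DiscreteSketch) and 1 <= space.n <= self.max_n

    def sample(self) -> DiscreteSketch:
        # Sampling from a space of spaces returns a space.
        return DiscreteSketch(self.rng.randint(1, self.max_n))


discretes = Discretes(max_n=10, seed=0)
print(DiscreteSketch(4) in discretes, DiscreteSketch(20) in discretes)  # True False
print(discretes.sample())  # a DiscreteSketch with 1 <= n <= 10
```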

## Other ideas:

- Spaces of functions? e.g. described by an input space and an output space?