# Toward Concurrency

(返回[2016年暑期系統軟體課程:台南場次](https://hackmd.io/EwZg7AbALAjArADgLSShJUBGECcSEAME6uYmB2OAplAGa1A))

## 軟體開發現況

* [The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software](http://www.gotw.ca/publications/concurrency-ddj.htm)

* [An Introduction to Lock-Free Programming](http://preshing.com/20120612/an-introduction-to-lock-free-programming/)

* [Acquire and Release Semantics](http://preshing.com/20120913/acquire-and-release-semantics/)

* [Weak vs. Strong Memory Models](http://preshing.com/20120930/weak-vs-strong-memory-models/)

* [Concurrency Kit](http://concurrencykit.org/): Concurrency primitives, safe memory reclamation mechanisms and non-blocking data structures for the research, design and implementation of high performance concurrent systems.

## Functional Programming

* [Why are functional languages considered a boon for multi threaded environments?](http://stackoverflow.com/questions/2909282/why-are-functional-languages-considered-a-boon-for-multi-threaded-environments)

* [It’s Time to Get Good at Functional Programming](http://www.drdobbs.com/article/print?articleId=212201710&siteSectionName=tools)

* [Don’t Be Scared Of Functional Programming](https://www.smashingmagazine.com/2014/07/dont-be-scared-of-functional-programming/)

* [Functional programming in C](https://lucabolognese.wordpress.com/2013/01/04/functional-programming-in-c/)

## Concurrency (並行) vs. Parallelism (平行)

* [Concurrency is not Parallelism](https://blog.golang.org/concurrency-is-not-parallelism)[,](https://www.youtube.com/watch?v=cN_DpYBzKso) by Rob Pike

* [錄影](https://vimeo.com/49718712); [後續 stackoverflow 討論](http://stackoverflow.com/questions/11700953/concurrency-is-not-parallelism)

**Task:** Let’s burn a pile of obsolete language manuals! One at a time!

**Concurrency:** There are many concurrently decompositions of the task! One example:

(<u>composition 合成 decomposition 分解</u>)

[ **Interruptability** ]

**Parallelism:** The previous configuration occurs in parallel if there are at least 2 gophers working at the same time or not.

[ **Independentability** ]

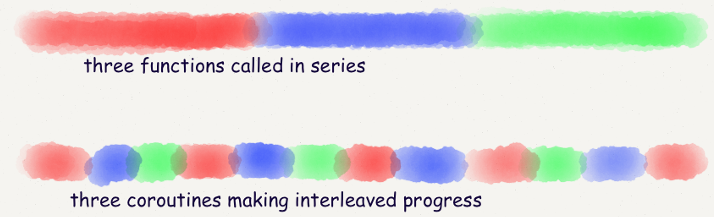

換個觀點來理解 [ [source](http://stackoverflow.com/questions/1050222/concurrency-vs-parallelism-what-is-the-difference) ]

* **Concurrency:** If two or more problems are solved by a single processor.

* multiple execution flows with the potential to share resources

* Example: two threads competing for a I/O port.

* the separation of tasks to provide **interleaved execution**

* Concurrency solves the problem of having scarce CPU resources and many tasks. So, you create threads or independent paths of execution through code in order to share time on the scarce resource. Up until recently, concurrency has dominated the discussion because of CPU availability.

* **Parallelism:** If one problem is solved by multiple processors.

* splitting a problem in multiple similar chunks.

* Example: parsing a big file by running two processes on every half of the file.

* the **simultaneous execution of multiple pieces of work** in order to increase speed

* Parallelism solves the problem of finding enough tasks and appropriate tasks (ones that can be split apart correctly) and distributing them over plentiful CPU resources. Parallelism has always been around of course, but it’s coming to the forefront because multi-core processors are so cheap.

## Concurrency 系列文章 (繁體中文)

* [Concurrency系列(一): 理解Concurrency之路](http://enginechang.logdown.com/posts/784600-concurrency-series-1-the-road-to-understand-concurrency)

* [Concurrency系列(二): 從Sequenced-Before開始說起](http://enginechang.logdown.com/posts/788206-concurrency-series-2-starting-from-sequenced-before)

* [Concurrency系列(三): 朝Happens-Before邁進](http://enginechang.logdown.com/posts/797113-concurrency-series-3-happens-before)

* [Concurrency系列(四): 與Synchronizes-With同在](http://enginechang.logdown.com/posts/797957-concurrency-series-4-be-with-the-synchronizes-with-relation)

* [Concurrency系列(五): Sequential Consistency的代價](http://enginechang.logdown.com/posts/809449-concurrency-series-5-the-price-of-sequential-consistency)

* 冼鏡光的[並行計算投影片](http://blog.dcview.com/article.php?a=BTgBYw1qUWIAaQ%3D%3D)

## Process vs. Thread vs. Coroutines

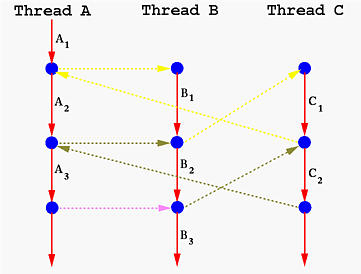

* With threads, the operating system switches running tasks **preemptive**ly according to its scheduling algorithm.

* [SMT (Simultaneous Multithreading), VMT(Vertical Multithreading)](http://www.fujitsu.com/global/products/computing/servers/unix/sparc-enterprise/technology/performance/processor.html)

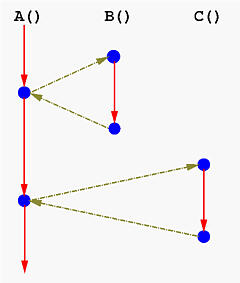

* With coroutines, the programmer chooses, meaning tasks are cooperatively multitasked by pausing and resuming functions at set points.

* coroutine switches are cooperative, meaning the programmer controls when a switch will happen.

* The kernel is not involved in coroutine switches.

一圖勝千語:

將函式呼叫從原本的面貌: [ [source](http://www.csl.mtu.edu/cs4411.ck/www/NOTES/non-local-goto/coroutine.html) ]

轉換成以下:

C 程式實做 coroutines 的手法相當多,像是:

* [switch-case](http://blog.linux.org.tw/~jserv/archives/001848.html)

* [setjmp / longjmp](http://descent-incoming.blogspot.tw/2014/02/coroutine.html)

## POSIX Threads

[Getting Started With POSIX Threads](http://www.csie.ntu.edu.tw/~r92094/c++/pthread.txt) (繁體中文翻譯)

## POSIX Thread 實例: 光線追蹤

[2016q1:Homework2](http://wiki.csie.ncku.edu.tw/embedded/2016q1h2) 提到 raytracing 程式,[UCLA Computer Science 35L, Winter 2016 Software Construction](http://web.cs.ucla.edu/classes/winter16/cs35L/) 課程有個作業值得參考:**[S35L_Assign8_Multithreading](https://github.com/maxwyb/CS35L_Assign8_Multithreading)**

編譯與測試

```bash=

$ git clone *https://github.com/maxwyb/CS35L_Assign8_Multithreading.git* raytracing-threads

$ cd raytracing-threads

$ make

$ ./srt 4 > out.ppm

$ diff -u out.ppm baseline.ppm

```

將 `srt` 後面的數字換成 1, 2, 4, 8 來測試執行時間

[ [main.c](https://github.com/maxwyb/CS35L_Assign8_Multithreading/blob/master/main.c) ]

```C=

#include <pthread.h>

pthread_t* threadID = malloc(nthreads * sizeof(pthread_t));

int res = pthread_create(&threadID[t], 0, pixelProcessing, (void *)&intervals[t]);

int res = pthread_join(threadID[t], &retVal);

```

## POSIX Thread

- [ ] [POSIX Threads Programming](https://computing.llnl.gov/tutorials/pthreads/)

* Condition variables provide yet another way for threads to synchronize. While mutexes implement synchronization by controlling thread access to data, ==condition variables allow threads to synchronize based upon the actual value of data.==

* [Priority Inversion on Mars](http://wiki.csie.ncku.edu.tw/embedded/priority-inversion-on-Mars.pdf)

* [Getting Started With POSIX Threads 宋振華](http://www.csie.ntu.edu.tw/~r92094/c++/pthread.txt)

* Without [condition variables](https://www.cs.mtu.edu/~shene/NSF-3/e-Book/MONITOR/CV.html), the programmer would need to have threads continually polling (possibly in a critical section), to check if the condition is met. This can be very resource consuming since the thread would be continuously busy in this activity. A condition variable is a way to achieve the same goal without polling.

* A condition variable is always used in conjunction with a mutex lock.

[](http://i.cmpnet.com/ddj/blogs/2011/06/inheritance.png)http://i.cmpnet.com/ddj/blogs/2011/06/inheritance.png

Condition variables must be declared with type pthread_cond_t, and must be initialized before they can be used. There are two ways to initialize a condition variable:

1. Statically, when it is declared. For example:

**pthread_cond_t myconvar = PTHREAD_COND_INITIALIZER;**

1. Dynamically, with the pthread_cond_init() routine. The ID of the created condition variable is returned to the calling thread through the _condition_ parameter. This method permits setting condition variable object attributes, _attr_.

**使用案例與情境**

* main 函式建立 3 個執行緒,其中 2 個執行任務並更新名為 "count" 的變數

* 第 3 個執行緒等待 "count" 變數的內含值成為某個指定數值.

```C=

#include <pthread.h>

#include <stdio.h>

#include <stdlib.h>

#define NUM_THREADS 3

#define TCOUNT 10

#define COUNT_LIMIT 12

int count = 0;

int thread_ids[3] = {0,1,2};

**pthread_mutex_t count_mutex;**

**pthread_cond_t count_threshold_cv;**

void *inc_count(void *t) {

long my_id = (long)t;

for (int i = 0; i < TCOUNT; i++) {

**pthread_mutex_lock(&count_mutex);**

count++;

/*

Check the value of count and signal waiting thread when condition is

reached. Note that this occurs while mutex is locked.

*/

if (count == COUNT_LIMIT) {

**pthread_cond_signal(&count_threshold_cv);**

printf("inc_count(): thread %ld, count = %d Threshold reached.\n",

my_id, count);

}

printf("inc_count(): thread %ld, count = %d, unlocking mutex\n",

my_id, count);

**pthread_mutex_unlock(&count_mutex);**

/* Do some "work" so threads can alternate on mutex lock */

sleep(1);

}

**pthread_exit(NULL);**

}

void *watch_count(void *t) {

long my_id = (long)t;

printf("Starting watch_count(): thread %ld\n", my_id);

/*

Lock mutex and wait for signal. Note that the pthread_cond_wait

routine will automatically and atomically unlock mutex while it waits.

Also, note that if COUNT_LIMIT is reached before this routine is run by

the waiting thread, the loop will be skipped to prevent pthread_cond_wait

from never returning.

*/

**pthread_mutex_lock(&count_mutex);**

while (count < COUNT_LIMIT) {

**pthread_cond_wait(&count_threshold_cv, &count_mutex);**

printf("watch_count(): thread %ld Condition signal received.\n", my_id);

count += 125;

printf("watch_count(): thread %ld count now = %d.\n", my_id, count);

}

**pthread_mutex_unlock(&count_mutex);**

**pthread_exit(NULL);**

}

int main (int argc, char *argv[])

{

int i, rc;

long t1 = 1, t2 = 2, t3 = 3;

**pthread_t threads[3];**

**pthread_attr_t attr;**

/* Initialize mutex and condition variable objects */

**pthread_mutex_init(&count_mutex, NULL);**

**pthread_cond_init (&count_threshold_cv, NULL);**

/* For portability, explicitly create threads in a joinable state */

pthread_attr_init(&attr);

pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_JOINABLE);

pthread_create(&threads[0], &attr, watch_count, (void *)t1);

pthread_create(&threads[1], &attr, inc_count, (void *)t2);

pthread_create(&threads[2], &attr, inc_count, (void *)t3);

/* Wait for all threads to complete */

for (i = 0; i < NUM_THREADS; i++)

**pthread_join(threads[i], NULL);**

printf ("Main(): Waited on %d threads. Done.\n", NUM_THREADS);

/* Clean up and exit */

pthread_attr_destroy(&attr);

pthread_mutex_destroy(&count_mutex);

pthread_cond_destroy(&count_threshold_cv);

pthread_exit(NULL);

}

```

## Thread Pool

[ [source](http://swind.code-life.info/posts/c-thread-pool.html) ]

StackOverFlow 裡頭提及: [Existing threadpool C implementation](http://stackoverflow.com/questions/6297428/existing-threadpool-c-implementation)

裡面有提到幾個 C Thread Pool 的實作範例與可參考的文件

* [threadpool-mbrossard](https://github.com/mbrossard/threadpool)

* [threadpool-jmatthew](http://people.clarkson.edu/~jmatthew/cs644.archive/cs644.fa2001/proj/locksmith/code/ExampleTest/)

* [cthreadpool](http://sourceforge.net/projects/cthpool/)

* [C-Thread-Pool](https://github.com/Pithikos/C-Thread-Pool)

以 threadpool-mbrossard 作為第一個研究的版本,因為仍在維護,而且作者是 [Existing threadpool C implementation](http://stackoverflow.com/questions/6297428/existing-threadpool-c-implementation) 的發文者。

**A simple C thread pool implementation**

Currently, the implementation:

* Works with pthreads only, but API is intentionally opaque to allow other implementations (Windows for instance).

* Starts all threads on creation of the thread pool.

* Reserves one task for signaling the queue is full.

* Stops and joins all worker threads on destroy.

**Thread Pool 的資料結構**

首先 Thread Pool 要有的東西就是 job 或者是 task 讓 Thread 知道他們要做什麼事情。

```C=

typedef struct {

void (*function)(void *);

void *argument;

} threadpool_task_t;

```

所以只要有一個資料結構紀錄要執行的 function pointer 與要傳遞的參數即可。 接下來就是 Thread Pool 本身,他必須存放所有的 Thread 與 Job Queue:

```C=

struct threadpool_t {

pthread_mutex_t lock;

pthread_cond_t notify;

pthread_t *threads;

threadpool_task_t *queue;

int thread_count;

int queue_size;

int head;

int tail;

int count;

int shutdown;

int started;

};

```

這邊使用一個 pthread_t 的 pointer 來紀錄所有的 Thread,簡單來說,就是一個 pthread_t 的 array,而 head, tail 就是紀錄 array 的 offset。 threadpool_task_t 也是一樣的原理,真是出乎意料的簡單。

**ThreadPool 的建立與工作的執行**

再來就是 Thread Pool 的建立,由於剛剛提到的他其實是使用一個 pthread array 與一個 job array 來存放所有的 thread 與 jobs。 因此需要在一開始的時候就決定 Thread Pool 與 Jobs 的最大數量。

```C=

/* Allocate thread and task queue */

pool->threads = (pthread_t *) malloc(sizeof(pthread_t) * thread_count);

pool->queue = (threadpool_task_t *) malloc(sizeof(threadpool_task_t) * queue_size);

```

而每個 Thread 要排入執行的 callback function 透過以下:

```C=

static void *threadpool_thread(void *threadpool)

{

threadpool_t *pool = (threadpool_t *) threadpool;

threadpool_task_t task;

for(;;) {

/* Lock must be taken to wait on conditional variable */

pthread_mutex_lock(&(pool->lock));

/* Wait on condition variable, check for spurious wakeups.

When returning from `pthread_cond_wait`(), we own the

lock. */

while((pool->count == 0) && (!pool->shutdown)) {

pthread_cond_wait(&(pool->notify), &(pool->lock));

}

if((pool->shutdown == immediate_shutdown) ||

((pool->shutdown == graceful_shutdown) &&

(pool->count == 0))) {

break;

}

/* Grab our task */

task.function = pool->queue[pool->head].function;

task.argument = pool->queue[pool->head].argument;

pool->head += 1;

pool->head =

(pool->head == pool->queue_size) ? 0 : pool->head;

pool->count -= 1;

/* Unlock */

pthread_mutex_unlock(&(pool->lock));

/* Get to work */

(*(task.function))(task.argument);

}

pool->started--;

pthread_mutex_unlock(&(pool->lock));

pthread_exit(NULL);

return(NULL);

}

```

在 **for(;;)** 裡面,Thread 第一件要做的事情就是去搶奪 pool 的 lock,當搶到 lock 的 Thread 發現沒有工作可以做的時候, 就會執行 pthread_cond_wait 來等待通知。這時候 pool->lock 會被 Unlock,因此這時候其他 Thread 也可以進來這個區域。 所以在完全沒有工作的情況下,所有的 Thread 都會在這邊 waiting。

當 Thread 被透過 pthread_cond_signal 喚醒的時候,該 Thread 就會重新取得 pool->lock。 這時他就可以安心的取出 queue 中的 task,等待取出完畢之後,再 unlock 讓其他被喚醒的 Thread 也可以去取得 Task。

之後就是執行 task 中的 function pointer 做該做的工作。

**ThreadPool 的 destory**

destory 就更簡單了,只要使用 pthread_cond_broadcast 通知所有的 Thread 起來,由於 shoutdown 的確認會在執行工作之前。 所以該 thread 就會離開執行工作的迴圈,並且結束。

[mbrossard 完整的 ThreadPool 原始碼](https://github.com/mbrossard/threadpool/blob/master/src/threadpool.c)

## Lock-free Thread Pool

[ [source](http://blog.csdn.net/xhjcehust/article/details/45844901) ]

大多數 thread pool 實做都離不開 lock 的使用,如 pthread_mutex 結合 (condition variable) pthread_cond。一般來說,lock 的使用對於程式效能影響較大,雖然現有的 pthread_mutex 在 lock 的取得 (acquire) 與釋放,已在 Linux 核心和對應函式庫進行效能提昇,但我們仍會希望有不仰賴 lock 的 thread pool 的實做。

**常見 thread pool 實做原理**

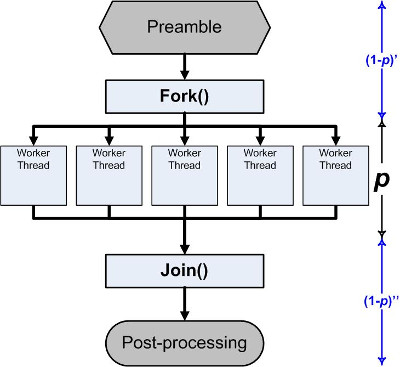

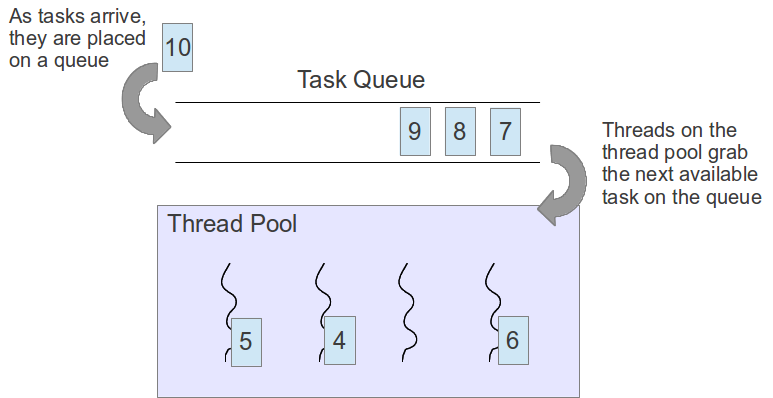

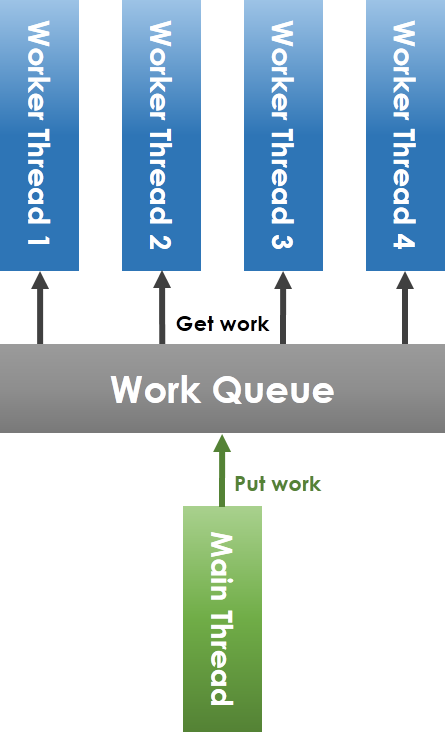

如上圖所示,workqueue (工作佇列) 由 main thread (主執行緒) 和 worker thread 共享,main thread 將任務放進 workqueue,worker thread 從 workqueue 中取出任務執行。要注意到,共享 workqueue 的操作必須在 mutex 的保護下安全進行,main thread 將任務放進 workqueue 時,若偵測到目前待執行的工作數目小於 worker thread 總數,則要透過 condition variable 喚醒可能處於等待狀態的 worker thread。

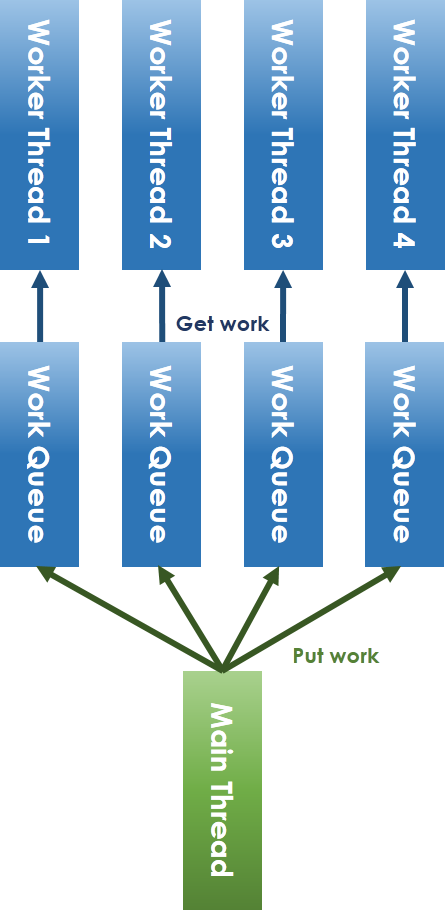

**lock-free 化 thread pool 實做原理**

為解決 lock-free 所面臨的議題,我們一定要避免共享資源的競爭 (contention),因此將共享 workqueue 加以拆分成每 worker thread 一個 workqueue 的方式,如上圖。對於 main thread 放入工作和 worker thread 取出任務的競爭問題,可以採取 ring-buffer 的方式避免。在解決了lock 機制後,就只剩下 condition variable 的問題,condition variable 本質上就是提出來解決執行緒之間的通訊議題,不然為了追蹤特定變數,如之前提到的 "count" 變數,還得額外建立執行緒來追蹤。而 semaphore 作為一種通信方式,可以代替之,其大體開發模式為:

```C=

sigemptyset(&zeromask);

sigemptyset(&newmask);

sigaddset(&newmask, SIGXX);

sigprocmask(SIG_BLOCK, &newmask, &oldmask) ;

while (!CONDITION)

sigsuspend(&zeromask);

sigprocmask(SIG_SETMASK, &oldmask, NULL)

```

**lock-free thread pool 實做說明**

程式碼請見: [](https://github.com/xhjcehust/LFTPool)[https://github.com/xhjcehust/LFTPool](https://github.com/xhjcehust/LFTPool)

在 lock-free thread pool 的實做裡頭,有別於常見 thread pool 之處,在於 semaphore 與 condition variable 、排程演算法、增加或減少執行緒數目後的 task migration,另一點則是引入 [ring-buffer](https://en.wikipedia.org/wiki/Circular_buffer),後者參考了 Linux 核心中的 kfifo 實做。

(1) **semaphore 與 condition variable**

semaphore 與 condition variable 的區別,主要在於 condition variable 的喚醒 (signal),對於接收端執行緒而言可以忽略,而在未設定 semaphore 處理函式的情況下,semaphore 的接收會導致接收執行緒,甚至是整個程式的終止。因此,需要在 thread pool 產生執行緒前,事先指定 semaphore 處理函式,如此一來,新建立的執行緒會繼承這個 semaphore 處理函式。多執行緒中,semaphore 的訊號 (UNIX signal; 本質上非同步) 傳送,主要採用 `pthread_kill`,為避免使用其他 semaphore ,本程式中使用了 SIGUSR1。

(2) **任務排程演算法**

常見 thread pool 實做的任務排程,主要透過作業系統的 thread scheduler 來達成。考慮到 load-balancing,main thread 放入任務時應採取合適的任務排程演算法,將任務放入對應的 worker thread 隊列,本程式目前已實做 Round-Robin 和 Least-Load 演算法。Round-Robin 即輪詢式地分配工作,Least-Load 即選擇目前具有最少工作的 worker thread 放入。

(3) **Task migration**

在執行緒的動態增加和減少的過程中,同樣基於 load-balancing 的考量,涉及到現有 task 的遷移 (migration) 問題。load-balancing 演算法主要基於平均工作量的想法,即統計目前時刻的總任務數目,均分至每一個執行緒,求出每個工作者執行緒應該增加或減少的工作數目,然後從頭至尾遍歷,需要移出工作的執行緒與需要移入工作的執行緒執行 task migration,相互抵消。最後若還有多出來的工作,再依次分配。

遷入 (migreate IN) 工作不存在競態,因為加入工作始終由 main thread 完成,而遷出 (migrate OUT) 工作則存在競態,因為在遷出工作的同時,worker thread 可能在同時執行任務。所以需要採用 atomic operation 加以修正,其主要思想就是預先擷取 (prefetch) 的技巧,大致實做程式碼如下:

```C=

do {

work = NULL;

if (thread_queue_len( thread ) <= 0) // thread_queue_len() 必須是 atomic

break;

tmp = thread ->out;

// prefetch work

work = & thread->work_queue[queue_offset(tmp)];

} while (!__sync_bool_compare_and_swap(& thread ->out, tmp, tmp + 1));

if (work) do_something();

```

* Test-and-Set instruction: [](https://en.wikipedia.org/wiki/Test-and-set)[https://en.wikipedia.org/wiki/Test-and-set](https://en.wikipedia.org/wiki/Test-and-set)

* `__sync_bool_compare_and_swap` 是 GCC atomic builtin function 之一: [](https://gcc.gnu.org/onlinedocs/gcc-5.3.0/gcc/_005f_005fsync-Builtins.html#_005f_005fsync-Builtins)[https://gcc.gnu.org/onlinedocs/gcc-5.3.0/gcc/_005f_005fsync-Builtins.html#_005f_005fsync-Builtins](https://gcc.gnu.org/onlinedocs/gcc-5.3.0/gcc/_005f_005fsync-Builtins.html#_005f_005fsync-Builtins)

* C11 標準正式將 atomic operation 納入,請見: [](http://en.cppreference.com/w/c/atomic)[http://en.cppreference.com/w/c/atomic](http://en.cppreference.com/w/c/atomic)

Atomic 是種同步機制,不須透過 explicit lock (如 mutex),也能確保變數之間的同步。Linux 核心提供了對應的型態 `atomic_t`。而 gcc 也提供了內建的函式 (built-in functions),專門處理 atomics,如下:

type __sync_fetch_and_add (type *ptr, type value, ...)

type __sync_fetch_and_sub (type *ptr, type value, ...)

上面的 "type" 可以是 int8 .. int64。

最常用到的就是「加」和「減」的操作,使用如下:

```C=

int val = 0;

__syn_fetch_and_add(&val, 1);

```

這是種輕量級的同步機制,若只是對一個變數去做同步,實在不需要透過 mutex。在真實硬體環境中,atomics 的效能會比 `pthread_mutex_t` 好許多。

atomics 通常會伴隨著探討 memory barrier,這是因為編譯器進行最佳化時,往往會為了最佳化去變更指令的順序,但在特定的狀況下,往往會帶來後遺症,為了避免衝擊,gcc 也提供以下內建函式來處理 memory barrier:

__sync_synchronize()

在執行緒的動態減少後,原先執行緒上未能執行完的任務,只需要由 main thread 再次根據任務排程演算法重新分配至其他存活的 worker thread 隊列中即可,不存在上述問題,當然,此時可以同時執行 load-balancing 演算法加以最佳化。

(4) ring-buffer

ring-buffer 實做主要參考了 Linux 核心中 kfifo 的實做,如下圖所示:

環狀佇列長度為 2 的整數次方,out 和 in 下標一直遞增至越界後迴轉,其類型為 `unsigned int`,即 out 指標一直追趕 in 指標,out 和 in 映射至 FiFo 的對應下標處,其間的元素即為隊列元素。

## 對 Linked-List 排序

從 [Bubble sort](https://en.wikipedia.org/wiki/Bubble_sort) 談起,如何有效操作不連續記憶體

* [Linked List Bubble Sort](http://faculty.salina.k-state.edu/tim/CMST302/study_guide/topic7/bubble.html) (*)

* [Linked List Algorithms](http://c.learncodethehardway.org/book/ex33.html)

* [A Comparative Study of Linked List Sorting Algorithms](https://www.cs.mtu.edu/~shene/PUBLICATIONS/1996/3Conline.pdf), Ching-Kuang Shene [[冼鏡光](https://zh.wikipedia.org/zh-tw/%E5%86%BC%E9%8F%A1%E5%85%89)] (1996)

* 「冼」發音為ㄒㄧㄢˇ

* [Linked Lists: Locking, LockFree, and Beyond](http://www.cs.nyu.edu/courses/fall05/G22.2631-001/lists.slides2.pdf)

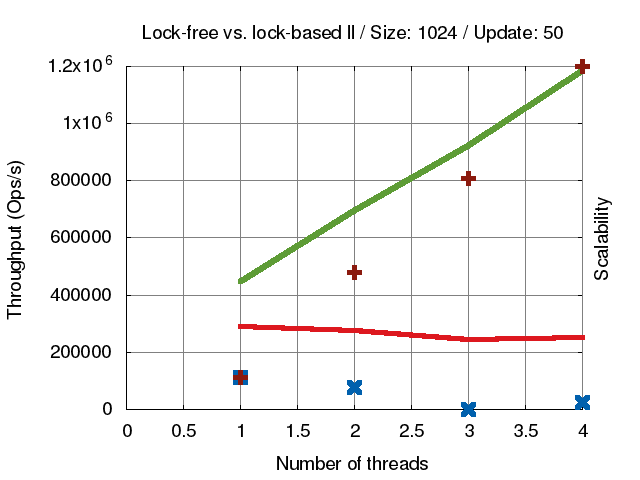

* **[concurrent-ll](https://github.com/jserv/concurrent-ll)**: concurrent linked-list 實作

* [ size = **128** ]

* [ size = **1024** ]

* [ size = **8192** ]

## 隨堂測驗

Quiz-3: 將 [Linked List Bubble Sort](http://faculty.salina.k-state.edu/tim/CMST302/study_guide/topic7/bubble.html) 裡頭的排序演算法從 recursive bubble sort 更換為 recursive merge sort

(linked list 資料結構不變更)

[2016q1 Week 2 ](https://embedded2016.hackpad.com/Sc7AmIvN7EN)

counting semaphore implementation in atomic instructions.