# Assessing Science Code

"Assessing code" means analyzing your code without altering what your code does. The goal is to figure what it is doing, how long it takes, and what it outputs.

Importantly, this is _not_ about changing code. See [Improving Science Code](#Improving-Science-Code) for that. The idea is that before you try to change things you need to understand what is going on. And if you are trying to make things faster, you need to know how long they take to run right now.

## Measuring Code

A lot of these suggestions will assume that you are running Python code within a Jupyter notebook.

### Performance

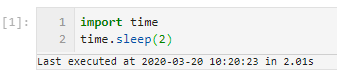

The most obvious way to measure code is by seeing how long it takes to run. In Jupyter notebooks there are certain magics that can give you a quick sense of how long things take. At the top of the cell put `%%time` then whenever you run the cell you will get an output of how long it takes. There is also an [Execute Time notebook extension](https://github.com/deshaw/jupyterlab-execute-time) that provides per-cell execution time.

Source: https://github.com/deshaw/jupyterlab-execute-time

If your code has a lot of functions here is a little decorator that you can use to time them. Just copy `timed` and add `@timed` on the line above a function definition to get a print out of how long it takes for the function to run.

```python

from functools import wraps

from time import time

def timed(f):

@wraps(f)

def wrap(*args, **kwargs):

t_start = time() # capture the start time

result = f(*args, **kwargs) # run the function

t_end = time() # capture the end time

t_diff = (t_end - t_start) # get the time difference

# humanize the time difference

if t_diff > 60:

took = f"{t_diff / 60:.2f} min"

elif t_diff > 1:

took = f"{t_diff:.2f} sec"

else:

took = f"{t_diff * 1000:.2f} ms"

print(f"func:{f.__name__} took: {took}")

return result # return the result of the function

return wrap

```

### Profiling

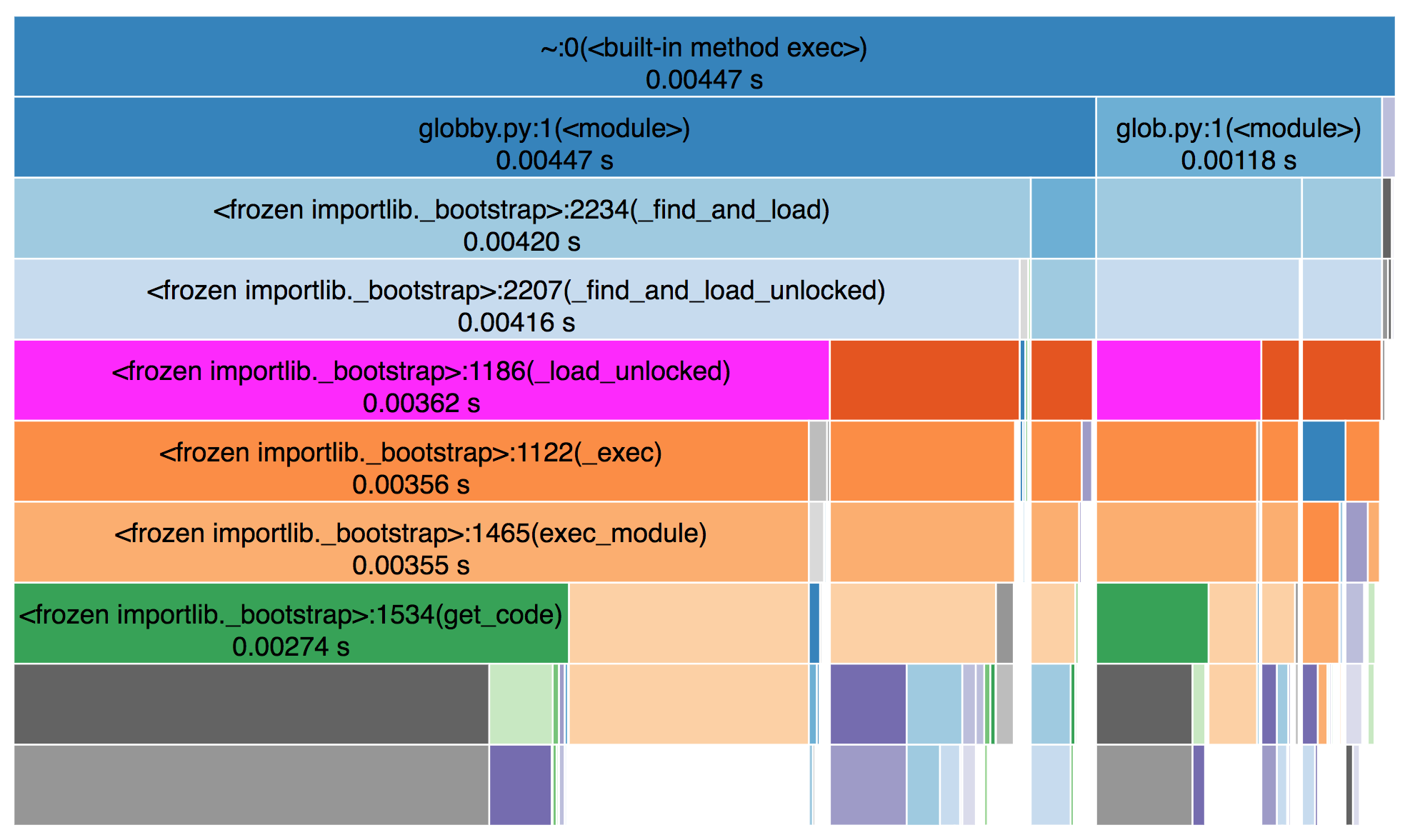

Getting timings per-function is a step down the path towards code profiling. Profiling is for when you want to get a better sense of what part of a piece of code is taking the most time. If you are just getting started you can try out [snakeviz](https://jiffyclub.github.io/snakeviz/) to get a visual representation of how long each subfunction takes.

Source: https://jiffyclub.github.io/snakeviz/

To get a more complete sense of the memory and compute utilization there are lightweight tools like [psrecord](https://github.com/astrofrog/psrecord) and heavier tools like [scalene](https://github.com/plasma-umass/scalene) that give all kinds of detailed information and can even provide suggestions for optimization.

### Benchmarking

If you are concerned about long-term degradation of your work, then you can use benchmarking to measure performance over time. That way you can track if things start taking longer than they used to and avoid getting to a place where you "just feel" like things used to be faster.

This is especially useful for projects that are developed over time by a group of people. There is sometimes a tendency to graft new functionality onto existing functions and that can ultimately slow things down and make functions hard to understand and debug.

## Understanding code

<!-- TODO: do we want sections on documentation or typing? -->

### Debugging

Before we get into it, just know there is nothing wrong with sticking a print statement in your code to start getting a sense of what's going on.

If your code is raising an error you can use [pdb](https://docs.python.org/3/library/pdb.html) to check out out what's going on. In Jupyter notebook you can enter a `pdb` session just by running `%pdb` in an empty cell. Then if you rerun the cell that was raising an error you will be dropped into an interactive `pdb` session.

Using `pdb` takes some getting used to, but some quick tips:

- use `l` to **list** the lines of code around the part you are looking at.

- use `u` to step back **up** into the function that called the one you are in.

- use `n` to run the **next** line of code.

- use `pp` to **pretty print** a variable.

Even if you don't have an error, but just want to inspect in more detail what is happening within a function you can use `pdb`. Add `breakpoint()` at any point in your code the next time you run it you will enter a `pdb` session at that line.

### Tests

Tests are one of the software development tools that is perfectly suited to science code. The notion of a test is that you make a statement about what the output of a particular function should be. Here is an example of a test:

```python

def test_read_input_file():

df = read_input_file("tests/data/filename.csv")

assert df.columns == ["time", "lat", "lon", "air_temperature"]

```

Focus on testing things that your function is doing not things that another library is responsible for. For instance you don't need to test `pd.read_csv` but you _do_ need to test conversions that you do after reading in the data.

### Visualizing output

Visualizations are not always included as part of assessing code, but they are crucial for improving intution about what's going on. The visualzations that you create while working on code can be very different than ones that you would make for a published paper. There are several libraries in the scientific python stack that try to make it is simple as possible to go from data to a visual representation. If you are already comfortable with one of those libraries you should use that. If you don't have a favorite library yet, then take a look at [hvplot](https://hvplot.holoviz.org/).

# Improving Science Code

"Improving code" means not only making code faster and more reliable, but also making code easier to debug, refactor, update, and understand.

If you are reading this in conjunction with [Assessing Science Code](#Assessing-Science-Code) then you can think of it as a continuous cycle. Assessing the code should give insight about how to improve it, improving the code should make it easier to assess.

Most of this involves rewriting sections of your code. It is not always the right time to do that, but when it is here are some things to keep in mind.

## Code Organization

### Pipelines

Often code is structured around science concepts, but it can be helpful to think instead in terms of pipelines. To organize your code by pipelines ask:

1. What are the inputs to this workflow?

2. What are the outputs from this workflow?

3. What are the steps for getting from inputs to outputs?

When you organize code by pipeline steps rather than science concepts it makes it easier to see steps that can be independent of each other (which means you can parallelize them). It also makes it easier to see what the inputs and outputs are for each step.

Whenever it is practical, you should consider storing the output of each pipeline step. This let's you pick back up in the middle of the workflow if something goes wrong with a later step. Storing intermediary outputs can also help with debugging since you can see what you had at each stage.

### Functions

Has this happened to you? You start reading a function to try to figure out what is going on and then you realize that function relies on another fuunction which relies on another one and another one...

<!-- Potentially a drawing of turles all the way down. -->

It's not uncommon for code to be structured in that way and often it has to do with a desire for code to be extremely DRY (Do not Repeat Yourself) or to have a consistent level of abstraction at every level. Both of these concepts are good in theory, but in practice they can make code hard to debug.

Here are some questions to ask when you are writing a function:

- Is it going to be used more than three times?

- Does it get different inputs each time it is used?

- Does it make the code easier to understand?

- Is the function more than 4 lines long?

If one or two of these are "no" then consider whether a function is really needed.

## Code Contents

### Naming

You can make any code more legible by using variable names that are clear and descriptive. This means using full words and substituting underscores for spaces.

| Do | Don't |

| -- | ----- |

| `region_name = "USA"` | `regnm = "USA"` |

### Constants

Move science parameters into one file and import them directly from there in the places where you need them. Constants should not be passed as paramenters to functions. Consider using a library like [pydantic-settings](https://docs.pydantic.dev/latest/concepts/pydantic_settings/) to make it easy to override constants.

### Making code faster

Writing and reading code take time just the same way that running code takes time, so if a function works and runs for 10 minutes once a year then maybe it doesn't need to be faster. Another way of putting it is: the more often a piece of code runs, the more valuable it is to make it run fast.

If you have decided that it is worthwile to speed up your code the first thing to do is look for for-loops. Go to the deepest nested for-loop and see what happens in there. If it is math, see whether you can use vectors (for instance numpy arrays) rather than iterating. If there are function calls, think about what the inputs to the functions will be. Ideally you should only run a funciton if there are new inputs. That includes IO so if you are repeatedly reading the same file within a for-loop, consider moving the read up and out.

If you aren't sure which approach will be faster create a minimal example (or even better a test) and try it both ways and measure how long each takes.

## Code Output

When writing output make sure to use stable and popular file formats. Here are some examples:

- for dictionary-like data: json

- for tables: parquet

- for rasters: tiff or COG

- for nd arrays: zarr

When writing output you often have control over how the data gets compressed and how each datatype is represented (think 8 bit vs 64 bit). You probably don't need to think about that when you first write a file, but if you are trying to make reads and writes more performant, that is something to consider.

### The case against Pickles

Any reputable file format has a detailed specification and makes strong promises about backwards compatibility. This means that you will always be able to read your data. An example of a file format that doesn't make such strong guarantees is a Python pickle. Pickles can be read back in _as long as the environment has not changed_. In addition to potentially trapping you with old versions of software, pickles are also terrible for reproducibility because it can be very hard to recreate exactly the same environment on a different machine.

### Write to local - copy to s3

Reading straight from s3 is great! It is a core to cloud-native IO concepts. But when writing, we recommend that you write things locally first and then copy them to s3 - or to any cloud object storage.