---

tags: Kermadec Class, Machine Learning, Tensor Flow, Deep Learning, Activation Function, Vanishing Gradient

---

Machine Learning Week 7

=

# Day 1:

## Machine Learning vs Deep Learning:

ML: **Human has to do manual** feature extraction, including:

- Cleaning input data.

- Enigineer the features: creating new/changing features.

- Select useful features.

DL: **Machine will do** feature extraction.

However, **DL needs a lot of data**.

### What if there is not much data?

**Performance** of Deep Learning and Machine Learning are **the same**.

## Deep Learning:

**Shallow layer** to detect low level features (simple ones): Edges and Lines in different angles.

**Middle layer** to detect middle level features (a litte more complex): Parts of object.

**Deep layer** to detect high level features (supper complex ones): The whole object.

## Activation Function in Deep Learning:

Activation Function transform a **linear** function to **non-Linearity**.

Deep Learning is a combination of multiple machine learning models, which use the output of previous model as input.

Similar to curves line is a combination of short straight lines.

- **Sigmoid**: values from **0 to 1**, result of derivative can **easily reach 0**, which is the **vanishing radius**, when there are many layers and neurons in Deep Learning model.

- **Hperbolic Tangent (TANH)**: values from **-1 to 1**, result of derivative can **easily reach 0** (but **not as easy as Sigmoid**), which is the vanishing radius, when there are many layers in Deep Learning model.

- **Rectified Linear Unit (ReLU)**: values from **0 to infinity**, result of derivative **hardly reaches 0** when there are a lot of neurons and layers.

**Tanh** and **Sigmoid** is good for **small** Deep Learning models.

**ReLU** is the best for **big** Deep Learning models.

## More layer is better than more neuron in 1 layer

Because the **result** of each layer can be **used by another layer**, which can also **reduce computing resources** because each neuron in **1 layer does not have to learn everything again from scratch**.

## Overfitting

Deep Learning hardly get underfit, most of the time, Deep Learning will get wellfit or overfit.

## Min Loss Function

Loss is stable and small enough -> It is good enough model.

There is no way to find the global minimum loss point because loss function in Deep Learning is an infinite space of parameters.

## Hyperparameters for Tunning:

- Learning Rate

- Weight Initialization for Breaking Symmetry

- Hidden Layer

- Hidden Neuron

- Batch Size

- ...

# Day 2

## Tensor Flow

Similar to Numpy, but can run on GPU/TPU.

<img src="https://miro.medium.com/max/4044/1*c8vKRVnog07DK6D5WJ3f3Q.png">

* TF supports **distributed computing** across multiple devices and servers

* TF can extracting the **computation graph** from a Python function, then optimize it (e.g., by pruning unused nodes)

<img src="https://i.imgur.com/Jrz1ikn.png">

### Computation graph

**Computation graph** is universial for every language. It is like a guideline for computer to do the calcuations that all programming language can understand.

* Computation graphs can be executed **efficiently** (e.g. by automatically running independent operations in parallel)

* Computation graphs can be export to a **portable format**, so you can run it in another environment (e.g. mobile device)

### Tensor Flow Concept

Build a computaion graph for each model, then put tensor flow in the graph, then output.

<img src="https://github.com/Amin-Tgz/awesome-tensorflow-2/raw/master/imgs/TF.png">

## Basic Codes

### Random Values:

Creating tensor randomly with normal distribution

```

tf.random.normal(shape=(2, 2), mean=0., stddev=1.)

```

Mean = 0

Standard Deviation = 1

### Assigning New Value to a Variable

Tensor is inmutable.

Tensor Variable is mutable.

To keep "status" variable when assign new value, must use .assign:

```

a = tf.Variable(initial_value)

# DO NOT do this: a = new_value

a.assign(new_value)

```

### GradientTape:

```

a = tf.constant([3.])

b = tf.constant([4.])

with tf.GradientTape() as tape:

tape.watch(a) # Start recording the history of operations applied to `a`

c = tf.sqrt(tf.square(a) + tf.square(b)) # Do some math using `a`

# What's the gradient of `c` with respect to `a`?

dc_da = tape.gradient(c, a)

print(dc_da)

```

**Tensor Flow watch Variable by default in gradient operation**.

When doing **gradient operation with respect of constant "variable"**, Tensor Flow does not watch them until calling:

`.watch`: start recording **gradient** operation history of constant "variable".

### Decorator tf.function:

`@tf.function` transform a python function (normal function) to a computaion graph.

-> The function runs faster, but more difficult to debug.

**Execution flow**:

**Python function**: Step by step according to the sequence of code.

**Computaion graph**: Optimized the steps of python function (the steps are "suffle").

## Keras:

### History:

Keras save everything of the model while training:

- Accuracy of training and validation.

- Result (weights, biases) of each epoc training.

- ...

### Callbacks:

A callback is an object that is called at different points during training (e.g. at the end of every batch or at the end of every epoch) and does stuffs: Early Stop, Model Checkpoint

```

# Instantiate some callbacks

callbacks = [tf.keras.callbacks.EarlyStopping(patience=2), # if validation not improving, stop training.

tf.keras.callbacks.ModelCheckpoint(filepath='my_model.keras',

save_best_only=True,)] # save the best model only.

history = model.fit(X_train, y_train, epochs=30,

validation_data=(X_valid, y_valid),

callbacks=callbacks)

```

### Ways to Define Tensorflow Models:

<img src="https://keras-dev.s3.amazonaws.com/tutorials-img/spectrum-of-workflows.png">

#### Sequential

1 straight flow of layers.

#### Functional API

Multiple flows of layers.

Example 1:

<img src="https://i.imgur.com/G2fZMVz.png">

Example 2:

<img src="https://i.imgur.com/lQwcGmJ.png">

#### Subclassing API

Edit the logic of layers using Class (OOP).

Similar to building customized Pipeline.

<img src="https://keras-dev.s3.amazonaws.com/tutorials-img/model-building-spectrum.png">

# Day 3

## Reivew Steps in Machine Learning Model:

**Forward**: Calculate y_hat

**Backward**: Calculate Gradient (derivative)

**Update**: Calculate new weights and bias with Gradient.

**Repeat**

Deep Learning Model is only a nested of multiple Machine Learning Models.

## Tunning Deep Learning Model:

### Mini Batch:

The smaller batch, the harder loss to decrease, the faster the model train.

**Reason**: The model only generalize for 1 small batch of samples at 1 time, which may not be suitable for other batches.

The bigger batch, the more stable the loss to decrease.

### Learning Rate Decay:

Decrease Learning Rate along the training Epoc:

- Decrease after specific Epoc with/without a specific value.

- Decrease after a specific condition (loss does not decrease after a specific Epoc...)

### Prevent Overfitting:

- **Early Stopping**: Stop after certain conditions.

- **Dropout**: Drop out neurons randomly, to make the model less depending on any certain neurons, which can make the model more general. Very similar to Weights Regularization.

- **Weights Regularization**:

- Data Augmentation: Produce new input based on original input with a little bit adjustment (rotation, change color, add noise,...) to prevent the model from remembering the input.

### Optimizer

Optimizer conatins algorithm of decreasing Loss.

- "SGD": Gradient Descent with Momentum.

- "RMSpop": Gradient Descent with Learning Decay.

- "Adam" is the best: Gradient Descent with Momentum and Learning Decay.

# Day 4

## Keras:

Keras is different from Tensorflow.

Keras is sub library in Tensorflow.

**import Keras**: Old version of Keras and Tensorflow does **not support** this library.

**import tensorflow.keras**: **Newest** version of Keras that Tensorflow support.

tensorflow.keras.datasets: limited datasets.

tensorflow.datasets: Vast variety datasets.

## Activation function:

- **softmax**: propability of each classes, good for **multiple classes** classification. Output of softmax is an **array** (percentage of each classes).

- **sigmoid**: propability of each classes, good for **binary classification**. Output of sigmoid is a **scarlar** (percentage of 0 or 1).

## Loss:

**CategoricalCrossentropy**: if y_hat (prediction) **shape == y (true label) shape** -> use **CategoricalCrossentropy**.

**SparseCategoricalCrossentrop**: if y_hat (prediction) **shape != y (true label) shape** -> use **Sparse**.

## Extract Model:

### Use tf.keras.callbacks.ModelCheckpoint:

**.h5**: extention of file for saving tensorflow model/weight.

Can save at any epoch as 1 file (1 epoch - 1 file).

Must

model.save()

## pathlib to make folder directory universial

## Pipeline in Tensorflow

Pipeline to preprocess data for model training.

`tf.data.Dataset`

For train dataset -> Map -> Cache -> Repeat -> Shuffle -> Batch -> Prefetch

For test dataset -> Map -> Cache (optional) -> Batch -> Prefetch (optional)

```

# Split into train and test set

train_dataset = ds_map_cache.take(train_size)

test_dataset = ds_map_cache.skip(train_size)

final_train_dataset = train_dataset.repeat().shuffle(SHUFFLE_BUFFER_SIZE).batch(BATCH_SIZE).prefetch(tf.data.experimental.AUTOTUNE)

final_test_dataset = test_dataset.batch(BATCH_SIZE).prefetch(tf.data.experimental.AUTOTUNE)

```

# [Keras + Pipeline Cheat Sheet](https://colab.research.google.com/drive/1_ZpagBFBBv1NzxsQn2T8niBIneEymX4o#scrollTo=yQDw42Ydf4is)

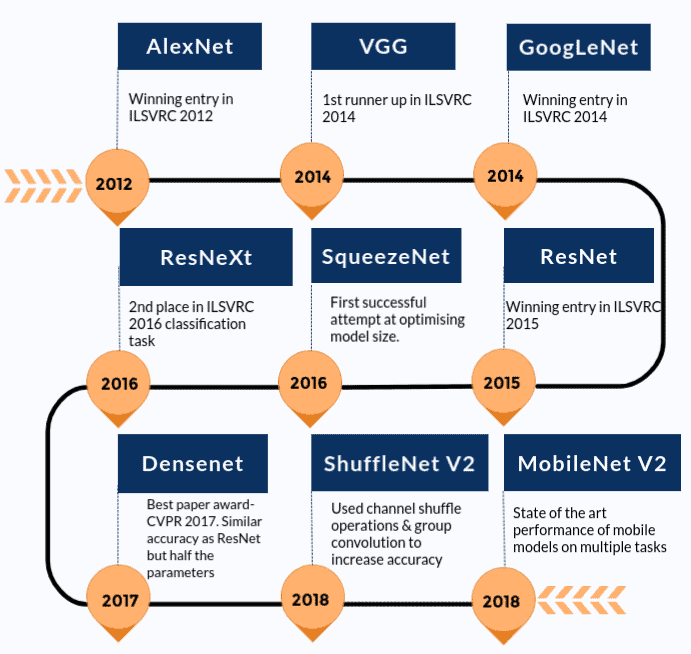

## Transfer Learning

Fetch a copy of MobileNet v2 from [tf.keras.applications](https://www.tensorflow.org/versions/r2.0/api_docs/python/tf/keras/applications)

--------------------------------------------------

# Day 5

## Flask Website

CSS

Similar to dictionary in python, but with `;`

```

h1 {

color: orange;

text-align: center;

}

```