# Malloc Source code Audit

## Malloc Chunk:

struct malloc_chunk {

INTERNAL_SIZE_T mchunk_prev_size; /* Size of previous chunk, if it is free. */

INTERNAL_SIZE_T mchunk_size; /* Size in bytes, including overhead. */

struct malloc_chunk* fd; /* double links -- used only if this chunk is free. */

struct malloc_chunk* bk;

/* Only used for large blocks: pointer to next larger size. */

struct malloc_chunk* fd_nextsize; /* double links -- used only if this chunk is free. */

struct malloc_chunk* bk_nextsize;

};

typedef struct malloc_chunk* mchunkptr;

#### Allocated Chunk:

chunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of previous chunk, if unallocated (P clear) |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of chunk, in bytes |A|M|P|

mem-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| User data starts here... .

. .

. (malloc_usable_size() bytes) .

. |

nextchunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| (size of chunk, but used for application data) |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of next chunk, in bytes |A|0|1|

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

#### Free Chunk:

chunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of previous chunk, if unallocated (P clear) |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

`head:' | Size of chunk, in bytes |A|0|P|

mem-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Forward pointer to next chunk in list |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Back pointer to previous chunk in list |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Unused space (may be 0 bytes long) .

. .

. |

nextchunk-> +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

`foot:' | Size of chunk, in bytes |

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

| Size of next chunk, in bytes |A|0|0|

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

**P (PREV_INUSE)**: 0 when previous chunk is free.

**M (IS_MMAPPED)**: The chunk is obtained through `mmap`.

**A (NON_MAIN_ARENA)**: 0 for chunks in the main arena.

> Chunks in fastbins are treated as _allocated_ chunks in the sense that they are not consolidated with neighboring free chunks.

### General Info:

Once the free space at the top of the heap is used up, the heap manager will have to ask the kernel to add more memory to the end of the heap. On the initial heap, the heap manager asks the kernel to allocate more memory at the end of the heap by calling _**sbrk**_

Very large allocation requests get special treatment in the heap manager. These large chunks are allocated off-heap using a direct call to _**mmap**_, and this fact is marked using a flag in the chunk metadata. When these huge allocations are later returned to the heap manager via a call to _**free**_, the heap manager releases the entire _**mmap** _ed region back to the system via _**munmap**_.

## Binning:

### Fast Bins:

Singly linked lists and chunks in each lists are having same size.

The in use bit is always set to ***1*** to avoid consolidation

- *7* Bins by default.

- No of bins -> *`NFASTBINS`*

- Size from `0x20(idx:0)-0x80(idx:6)`

- Last in First out.

### Unsorted Bins:BIN[1]:

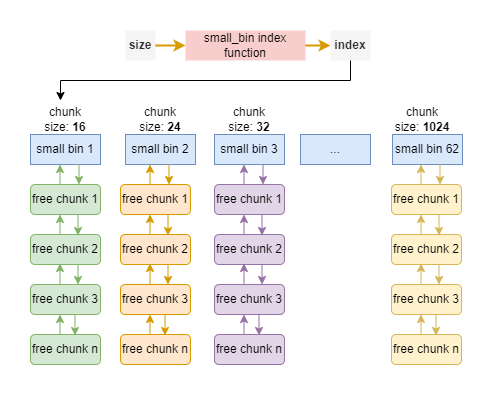

### Small Bins:BIN[2]-BIN[63]:

- Doubly Linked List

- FIFO

- Each bin have identical Size, the nth index small bin has a chunk size `(16n,16n+16)`

- upto 1024 B

- max size of small bin->*`MIN_LARGE_SIZE`*

### Large Bins:BIN[64]-BIN[126]

- Doubly LL

- Have chunks of different Size

- Sorted in Descending order [large ='head']

- Size => 1024B and 128KB

- Slower than Small bins as the insertion can be at any place inside the LL.

### T-cache

- Introduced in glibc 2.26

- Singly linked list

- 64 Singly-Linked tcache bins

- 7 same size chunk

- Size=> 24-1032 bytes

- in use bit will be 1

## _int_malloc():

- Lock heap

- Try tcache first

- Select the bin number

- Check for appropriate cache

- Allocate if found

- Big allocation =>mmap

- Standard allocation process

- Try fast bin(if size less than 128 bytes)/ small bin (up-to 1024 KB)

- if a fast bin exist, allocate chunk from there and also prefill tcache with entries from fast bin

- similar process for small bins too

- *Checks During Fast bin allocation*

- First it checks for misaligned chunk

- check for corruption {fastbin index check}

- *Checks During Small bin allocation*

- Checks the integrity of the doubly linked list

- `bck = victim->bk`

- `bck->fd != victim`

- Unlink takes place:

- `bin->bk = victim->bk;`

- `victim->bk->fd = bin;`

- If not found in fast bin Ptmalloc will iterate through fast bin and truly free them and add them to unsorted bin **(malloc_consolidate)**

- Then it will iterate through the unsorted bins `MAX_ITERS`

- *Checks During Unsorted bin allocation*

- Checks if the size is invalid or not

- Checks the size of the next chunk and verify if it is correct and within the bounds

- Checks if the `size of chunk` and `next->prev_size` are same

- Checks the consistency and integrity of Doubly linked list

- Checks `next->prev_inuse` is zero to verify the victim chunk is free.

- If size of chunk > request size => split, return allocation and place the remaining chunk back in unsorted bin

- if size of chunk = request size => return allocation

- if the size of chunk falls in small bin size adds them to small bin (promote to tcache *later).

- if the size of chunk falls in large bin size add them to large bin (promote to tcache *later).

- Search in large bin

- Best Fit

- if a suitable chunk is found then split it, return allocation and place the remaining chunk in unsorted bin

- Checks if the large bin is corrupted

- `fwd->bk->fd != fwd`

- Also Checks for the integrety of the unsorted linked list while inserting the remaining chunk

- `unsorted_chunks(av)->fd->bk == unsorted_chunks(av)`

- If no chunks are found in bins

- Top chunk will be used for allocation and remaining chunk will be the top chunk

- if top chunk < required size

- sbrk used

- add the remaining chunk to unsorted bin.

- mmap used

-check if not then the top size is corrupted

- Release heap lock

## _int_free():

- heap lock (multi thread process)

- Check if its a valid size(atleast size `MINSIZE`) or multiple of `MALLOC_ALIGNMENT`

- if it fits in tcache

- select the right bin

- check if the entry hasn't been freed(double-free)

- if the tcache bin is full , then standard free process

- push the chunk to corresponding bin

- If M bit is set => munmap

- Standard free process

- if it fits into fast bin, push it into corresponding fast bin.

- *Checks During Fastbin Insertion*

- For a chunk in fastbin range:

- Valid next size size is between minimum and maximum size

-> `( av->system_mem )`

- The chunk at the head should not be equal to the inserting chunk

- size of lastly free fastchunk is same as current chunk

- `&av->have_fastchunk = true`

- chunk > 128 byte consolidate fast bin along with the chunk and put the resultant chunk into unsorted bin.

- merge the chunk with the neighbor chunks and store the resultant chunk in unsorted bin:

- malloc will later sort it to the corresponding bins.

- *Check*

- `unsorted_chunks(av)->fd->bk == unsorted_chunks(av)`

- If the result chunk is adjacent to the top chunk then the chunk will be merged with the top chunk and if it is greater than *`DEFAULT_TRIM_THRESHOLD`* then malloc_trim will reduce the top.

- *Other checks*

- check for corruption(top):

- `p == av->top`

- next chunk(by memory) is within the boundaries of the arena

- check prev_inuse of next chunk

- `!prev_inuse(nextchunk)`

- next chunk(by memory) should be between minimum and maximum size

- Release Heap Lock.