# Understanding convolutional networks

# Concept

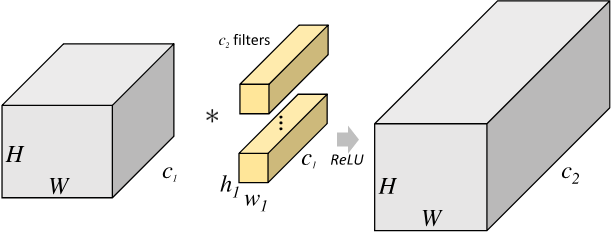

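+ Roughly speaking, a filter slides over the input; at each position the filter and the values under it are multiplied element-wise (a dot product) and summed to give one new output value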
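
The multiply-and-sum step can be sketched in plain Python. This is only a minimal illustration, assuming no padding and a stride of 1; the function name `conv2d_valid` and the sample values are made up for the example. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the result."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the patch under it.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical sample data: a 3x3 input and a 2x2 filter.
image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
kernel = [
    [1, 0],
    [0, -1],
]
result = conv2d_valid(image, kernel)
print(result)  # [[-4, -4], [-4, -4]]
```

Each output value is top-left minus bottom-right of a 2x2 patch, for example `1 - 5 = -4` for the first patch.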

https://www.youtube.com/watch?v=YRhxdVk_sIs&ab_channel=deeplizard

+ [link](https://blog.csdn.net/weixin_30793735/article/details/88915612?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.control&dist_request_id=a371ec2e-8e8f-4c1c-8ead-a59a1da2fbf5&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.control)

+ [link](https://chih-sheng-huang821.medium.com/%E5%8D%B7%E7%A9%8D%E7%A5%9E%E7%B6%93%E7%B6%B2%E8%B7%AF-convolutional-neural-network-cnn-1-1%E5%8D%B7%E7%A9%8D%E8%A8%88%E7%AE%97%E5%9C%A8%E5%81%9A%E4%BB%80%E9%BA%BC-7d7ebfe34b8)

# 1D, 2D, 3D

https://stackoverflow.com/questions/42883547/intuitive-understanding-of-1d-2d-and-3d-convolutions-in-convolutional-neural-n

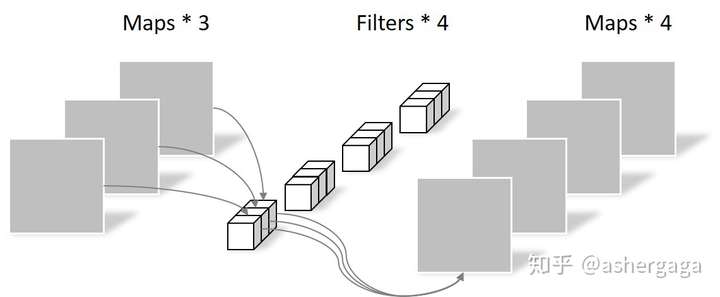

# Depthwise and pointwise

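Depthwise (DW) convolution and pointwise (PW) convolution together are called Depthwise Separable Convolution (see Google's Xception). Like a regular convolution, this structure can be used to extract features, but its **parameter count and computational cost are lower**.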

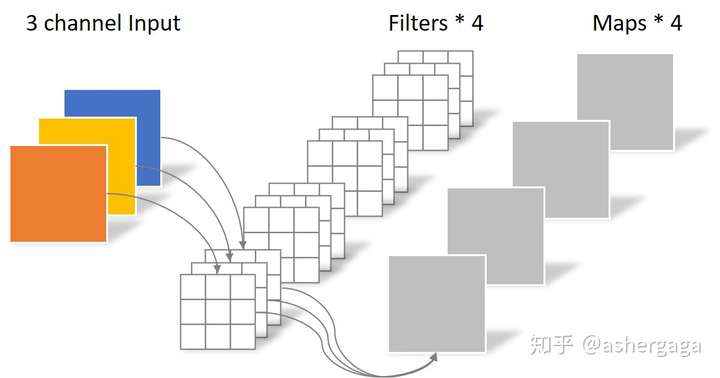

+ Regular convolution

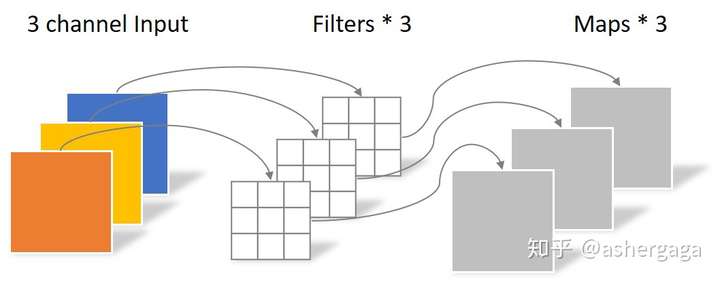

+ Depthwise + pointwise

https://zhuanlan.zhihu.com/p/80041030
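
To make the "fewer parameters" claim concrete, here is a rough count. It assumes square `k x k` kernels, no bias terms, and a depthwise step with exactly one filter per input channel; the function names and the sizes (3 input channels, 4 output channels, 3x3 kernel) are only illustrative.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a regular convolution: every output channel sees every input channel."""
    return c_out * c_in * k * k


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depthwise step followed by a pointwise (1x1) step."""
    depthwise = c_in * k * k   # one k x k filter per input channel
    pointwise = c_out * c_in   # 1x1 convolution that mixes the channels
    return depthwise + pointwise


# Illustrative sizes, not taken from any particular model.
c_in, c_out, k = 3, 4, 3
standard = standard_conv_params(c_in, c_out, k)
separable = depthwise_separable_params(c_in, c_out, k)
print(standard, separable)  # 108 39
```

The gap widens as the channel counts grow, because the `k * k` factor is no longer multiplied by `c_out`.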

# 1*1 Convolution

https://hackerstreak.com/1x1-convolution/

Just think of it as a kernel of shape channel * 1 * 1.

The MLP in the Network-in-Network (NIN) paper takes a (1x1xC) slice as its input and produces one output value for each (1x1xC) slice of the input. So for an input tensor of shape (Height x Width x Channels), a single such filter produces an output of shape (Height x Width).

> So a 1×1 convolution is really just a combination across channels

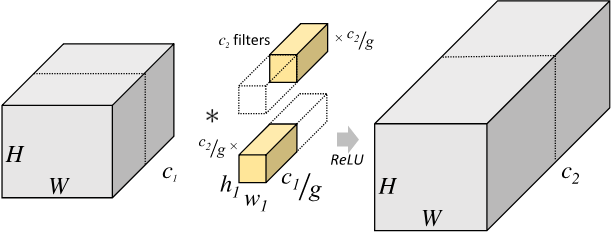
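
The "combination across channels" idea can be sketched as a weighted sum over channels at every pixel. This is a minimal pure-Python illustration, assuming a channels-first layout `[C_in][H][W]` and no bias; `conv1x1` and the sample values are hypothetical.

```python
def conv1x1(x, weights):
    """Apply a 1x1 convolution.

    x:       nested lists shaped [C_in][H][W]
    weights: nested lists shaped [C_out][C_in]
    returns: nested lists shaped [C_out][H][W]
    """
    c_in = len(x)
    h, w = len(x[0]), len(x[0][0])
    return [
        [
            # Each output pixel is a weighted sum of the same pixel across channels.
            [sum(wt[c] * x[c][i][j] for c in range(c_in)) for j in range(w)]
            for i in range(h)
        ]
        for wt in weights
    ]


# Two input channels of size 2x2 (made-up values).
x = [
    [[1, 2], [3, 4]],
    [[10, 20], [30, 40]],
]
weights = [[1, 1]]  # one output channel that simply adds the two input channels
y = conv1x1(x, weights)
print(y)  # [[[11, 22], [33, 44]]]
```

The spatial size stays 2x2; only the number of channels changes, from 2 to 1.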

# Grouped convolution

[link](https://blog.csdn.net/weixin_30793735/article/details/88915612?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.control&dist_request_id=a371ec2e-8e8f-4c1c-8ead-a59a1da2fbf5&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.control)

[link](https://zhuanlan.zhihu.com/p/65377955)

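> Suppose the input has shape C~in~ * H * W

> Then a regular convolution has C~out~ * C~in~ * K~h~ * K~w~ kernel weights, where K~h~ * K~w~ is the kernel's spatial size

> With grouped convolution (g groups)

> the kernel has C~out~ * (C~in~/g) * K~h~ * K~w~ weights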
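
The weight count with and without groups can be checked with a small helper. This is only a sketch, assuming no bias terms and channel counts divisible by the number of groups; `grouped_conv_params`, `k_h` and `k_w` (the kernel's height and width) are names chosen for the example.

```python
def grouped_conv_params(c_in, c_out, k_h, k_w, groups=1):
    """Number of kernel weights in a (grouped) convolution, ignoring bias."""
    if c_in % groups != 0 or c_out % groups != 0:
        raise ValueError("c_in and c_out must both be divisible by groups")
    # Each output channel only sees the c_in / groups channels of its own group.
    return c_out * (c_in // groups) * k_h * k_w


# Illustrative sizes: 8 input channels, 16 output channels, 3x3 kernel.
regular = grouped_conv_params(8, 16, 3, 3, groups=1)
grouped = grouped_conv_params(8, 16, 3, 3, groups=4)
print(regular, grouped)  # 1152 288
```

With `groups=4` the count drops by a factor of 4. Setting `groups` equal to `c_in` gives the depthwise case, where each filter sees a single input channel.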