# This is not an AI Art Podcast (Ep. 9)

## Intro

Welcome to episode nine! This is your host, Doug Smith. This Is Not An AI Art Podcast is a podcast about, well, AI art: technology, community, and techniques. The focus is on Stable Diffusion, but all art tools are up for grabs, from the pencil on up, including pay-to-play tools like Midjourney. Less philosophy, more tire kicking. But if the philosophy gets in the way, we'll cover it.

But plenty of art theory!

Today we've got:

* Model Madness model reviews: a LoRA that comes with a training guide, and three models.

* Bloods and crits: art critique on 4 pieces

* Technique of the week: if one ain't enough, use two.

* My project update: so you can learn from my process

Available on:

* [Spotify](https://open.spotify.com/show/4RxBUvcx71dnOr1e1oYmvV)

* [iHeartRadio](https://www.iheart.com/podcast/269-this-is-not-an-ai-art-podc-112887791/)

* [Google Podcasts](https://podcasts.google.com/feed/aHR0cHM6Ly9hbmNob3IuZm0vcy9kZWY2YmQwOC9wb2RjYXN0L3Jzcw)

Show notes are always included, with all the visuals, prompts, and technique examples. The show is meant to work without you looking at a screen, but the show notes have all the imagery, prompts, and details on the processes we look at.

## News

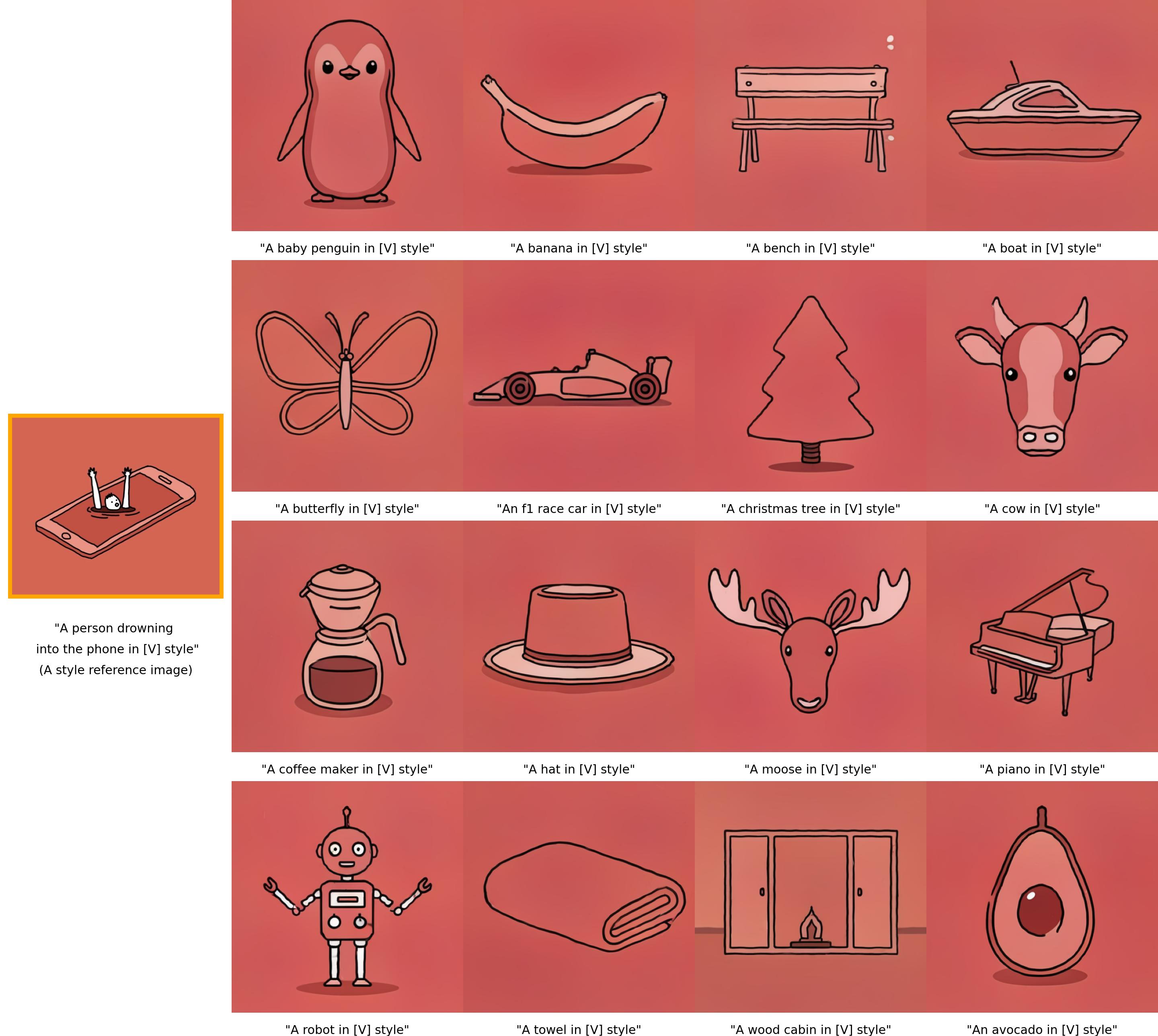

There's a newly proposed training technique from Google Research: [StyleDrop](https://styledrop.github.io/).

Apparently it can learn a style from just one image!

If you've been following the /r/stablediffusion subreddit, you know that the meme of the week is QR codes.

Everyone's trying QR codes with ControlNet, and having good luck getting them to scan.

However, according to [this Reddit thread](https://www.reddit.com/r/StableDiffusion/comments/141hg9x/controlnet_for_qr_code/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button), the original poster is apparently using a specialized ControlNet model for QR codes.

Gimmicky, but also kinda neat.

## Theme of the week: Be as bothered about the shot as Scorsese is.

I saw [this Scorsese clip on /r/oldschoolcool](https://www.reddit.com/r/OldSchoolCool/comments/141gofr/martin_scorsese_interview_1983/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button).

There's more in [the full interview on YouTube](https://www.youtube.com/watch?v=8DBRkzTmS-w).

> There's tons of questions. Just what looks like a very simple shot has many, many... as many choices to be made as a director.

This is what I want for people listening to this pod -- be in control as the director. The critic. The art director, really.

Yes, embrace the fast generation and ideas from your AI tools. But have a vision for what you want.

Look at what you're going to do, then come back to it and make decisions about it.

Be as bothered about it as Scorsese, who's up at 5 a.m. trying to figure out where to put the camera.

## Model Madness

### "Passage" LoRA and training guide

A LoRA for a "magical portal" kind of thing, and it comes with a training guide!

* [From this reddit thread](https://www.reddit.com/r/StableDiffusion/comments/141sfyj/learn_how_to_train_a_passage_lora_including/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

* [Direct link to the guide](https://civitai.com/articles/166)

### Dreamscapes & Dragonfire Model

* From [this reddit thread](https://www.reddit.com/r/StableDiffusion/comments/1456alm/resource_dreamscapes_dragonfire_v20/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

* And [on civitai](https://civitai.com/models/50294/dreamscapes-and-dragonfire-new-v20)

```

elven ranger sneaking through a forest at night, fur clothing, torch, background of mossy rock, ((dirt and grit)), moss covered rocks and mist in background, dark background, DOF, 8k, (shadow) (dark setting) subsurface scattering, specular light, highres, octane render, ray traced, masterpiece, best quality, highest quality, intricate, detailed, perfect lighting, perfect shading, (mature adult:1.25), (photorealistic:1.5)

Negative prompt: (bad_prompt_v2:0.8),Asian-Less-Neg,bad-hands-5, BadDream, (skinny:1.2)

Steps: 35, Sampler: DPM++ 2M Karras, CFG scale: 6, Seed: 1589922260, Face restoration: CodeFormer, Size: 512x768, Model hash: 64a21449d7, Model: dreamscapesDragonfireNEW_dsDv20, VAE: vae-ft-mse-840000-ema-pruned, Denoising strength: 0.51, Hires upscale: 1.5, Hires upscaler: Latent

```
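Each of these prompt blocks ends with the settings line AUTOMATIC1111 saves alongside the image (the "PNG Info" text). If you're logging experiments, it can be handy to split that line into fields. Here's a minimal Python sketch of my own, not anything that ships with the web UI. It assumes the plain `Key: value, Key: value` layout shown in these blocks, where a value is double-quoted if it contains commas (as the ControlNet entries are). It also works out the final image size, assuming the hires pass simply multiplies the base `Size` by `Hires upscale`.

```python
import re

# One `Key: value` pair. A value is either a double-quoted string (which may
# contain commas, like the ControlNet entries) or everything up to the next comma.
_PAIR = re.compile(r'\s*([^:,]+):\s*("(?:[^"\\]|\\.)*"|[^,]*)\s*(?:,|$)')


def parse_settings(line: str) -> dict[str, str]:
    """Split an A1111-style settings line into a {key: value} dict."""
    settings = {}
    for key, value in _PAIR.findall(line):
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            value = value[1:-1]  # drop the surrounding quotes
        settings[key.strip()] = value.strip()
    return settings


def hires_size(settings: dict[str, str]) -> tuple[int, int]:
    """Final (width, height), assuming hires fix scales Size by Hires upscale."""
    width, height = (int(n) for n in settings["Size"].split("x"))
    scale = float(settings.get("Hires upscale", "1"))
    return int(width * scale), int(height * scale)


if __name__ == "__main__":
    line = ("Steps: 35, Sampler: DPM++ 2M Karras, CFG scale: 6, Seed: 1589922260, "
            "Size: 512x768, Hires upscale: 1.5, Hires upscaler: Latent")
    settings = parse_settings(line)
    print(settings["Sampler"])   # DPM++ 2M Karras
    print(hires_size(settings))  # (768, 1152)
```

So the 512x768 base images above come out of the hires pass at 768x1152.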

Flappers, from a magic prompt...

```

1920s flapper in a NYC back alley, dark neon lights, highly detailed, digital painting, artstation, concept art, sharp focus, illustration, art by artgerm and greg rutkowski and alphonse mucha, cinematic lighting

Negative prompt: (bad_prompt_v2:0.8),Asian-Less-Neg,bad-hands-5, BadDream, (skinny:1.2)

Steps: 35, Sampler: DPM++ 2M Karras, CFG scale: 6, Seed: 538934091, Face restoration: CodeFormer, Size: 512x768, Model hash: 64a21449d7, Model: dreamscapesDragonfireNEW_dsDv20, VAE: vae-ft-mse-840000-ema-pruned, Denoising strength: 0.51, Hires upscale: 1.5, Hires upscaler: Latent

```

### ForgeSaga Landscape (merge)

* [On reddit](https://www.reddit.com/r/StableDiffusion/comments/140zryo/too_many_waifus_not_enough_landscape_art_see/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

* [On Civitai](https://civitai.com/models/84200?modelVersionId=89497)

```

(a red barn in new hampshire:1.2), fantasy, medieval, loading screen art, <lora:epiNoiseoffset_v2:0.2> <lora:add_detail:0.3>, ink outlines, wallpaper, vignette, matte painting by (Kazimir Malevich:1.1), simple lighting, dynamic shading, complementary colors, HDR, absurdres, cinematic, muted colors, limited color pallete, (masterpiece:1.2), (best quality:1.1)

Negative prompt: (bad_prompt_v2:0.8),Asian-Less-Neg,bad-hands-5, BadDream, (skinny:1.2)

Steps: 35, Sampler: DPM++ 2M Karras, CFG scale: 6, Seed: 656066101, Face restoration: CodeFormer, Size: 768x512, Model hash: 75c9ae2adf, Model: forgesagaLandscape_v10, VAE: vae-ft-mse-840000-ema-pruned, Denoising strength: 0.5, Hires upscale: 1.5, Hires upscaler: Latent

```

```

the rolling hills of Vermont, simple lighting, dynamic shading, complementary colors, HDR, absurdres, cinematic, muted colors, limited color pallete, (masterpiece:1.2), (best quality:1.1)

Negative prompt: (bad_prompt_v2:0.8),Asian-Less-Neg,bad-hands-5, BadDream, (skinny:1.2)

Steps: 35, Sampler: DPM++ 2M Karras, CFG scale: 6, Seed: 4149380547, Face restoration: CodeFormer, Size: 768x512, Model hash: 75c9ae2adf, Model: forgesagaLandscape_v10, VAE: vae-ft-mse-840000-ema-pruned, Denoising strength: 0.5, Hires upscale: 1.5, Hires upscaler: Latent

```

### Western animation model

* [On reddit](https://www.reddit.com/r/StableDiffusion/comments/144p4uo/i_made_a_new_base_model_for_comicswestern/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

* [On civitai](https://civitai.com/models/86546/western-animation-diffusion)

This comes from the maker of DreamShaper, so I have high expectations!

Man, this one took me a minute, so make sure to read the instructions. In particular, I'd recommend upscaling immediately with an anime upscaler, and not using face restoration.

In my experience, you're definitely going to need to inpaint (what else is new?) anything that's not a close-up.

```

witches standing around a boiling cauldron, secret hideout, mysterious, alchemy, dark, ((masterpiece)) <lora:add_detail:0.5>

Negative prompt: (low quality, worst quality), (FastNegativeEmbedding:0.9)

Steps: 50, Sampler: DPM++ 2M Karras, CFG scale: 8, Seed: 1290141544, Size: 512x768, Model hash: 670e89364d, Model: westernAnimation_v1, VAE: vae-ft-mse-840000-ema-pruned, Denoising strength: 0.33, Clip skip: 2, Hires upscale: 2, Hires steps: 10, Hires upscaler: R-ESRGAN 4x+ Anime6B

```

```

a cackling evil witch standing over a boiling cauldron, gothic dress, secret hideout, mysterious, alchemy, dark, ((masterpiece)) <lora:add_detail:0.5>

Negative prompt: (low quality, worst quality), (FastNegativeEmbedding:0.9)

Steps: 50, Sampler: DPM++ 2M Karras, CFG scale: 8, Seed: 2348991232, Size: 512x768, Model hash: 670e89364d, Model: westernAnimation_v1, VAE: vae-ft-mse-840000-ema-pruned, Denoising strength: 0.33, Clip skip: 2, Hires upscale: 2, Hires steps: 10, Hires upscaler: R-ESRGAN 4x+ Anime6B

```
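A quick aside on the weighting syntax these prompts use. In AUTOMATIC1111, each pair of parentheses multiplies a token's attention by 1.1, and `(text:1.25)` sets the multiplier explicitly, so `((masterpiece))` works out to about 1.21 and `(photorealistic:1.5)` to 1.5. Here's a tiny Python calculator for a single token, meant to illustrate that arithmetic rather than reproduce the web UI's actual parser; it deliberately skips square brackets (which divide by 1.1) and strings with more than one group.

```python
def emphasis(token: str) -> tuple[str, float]:
    """Return (text, weight) for one A1111-style emphasized token.

    Handles nested parentheses such as ((masterpiece)) and the explicit
    (text:1.25) form, including mixes like ((text:1.2)). Square brackets
    and multiple groups in one string are not handled.
    """
    weight = 1.0
    text = token.strip()
    while text.startswith("(") and text.endswith(")"):
        inner = text[1:-1]
        head, sep, tail = inner.rpartition(":")
        try:
            explicit = float(tail) if sep else None
        except ValueError:
            explicit = None  # a colon that isn't followed by a number
        if explicit is not None:
            weight *= explicit  # (text:1.25) -> multiply by 1.25
            text = head
        else:
            weight *= 1.1  # each bare pair of parens -> multiply by 1.1
            text = inner
    return text, round(weight, 4)


if __name__ == "__main__":
    for token in ["((masterpiece))", "(photorealistic:1.5)", "((dirt and grit))", "torch"]:
        print(token, "->", emphasis(token))
```

That prints weights of 1.21, 1.5, 1.21, and 1.0 for the four tokens.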

### Additional Resources

* [List of cheat sheets for stable diffusion](https://www.reddit.com/r/StableDiffusion/comments/142ar2i/stable_diffusion_cheat_sheets/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

    * These save you time surfing around for prompt words, art styles, etc.

    * It's a big list, but I liked [this artist reference](https://supagruen.github.io/StableDiffusion-CheatSheet/#SargentJohnSinger).

* [Tutorial to convert model to TensorRT](https://www.reddit.com/r/StableDiffusion/comments/142ixuh/how_to_convert_models_to_tensorrt/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

    * This is how the new Nvidia drivers are going to speed stuff up.

    * I keep meaning to play with it, but I haven't tried it yet.

* [Prompt comment extension](https://www.reddit.com/r/StableDiffusion/comments/143orgc/how_to_comment_out_parts_of_your_prompts_my_first/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

    * Brand new, but maybe worth a try.

    * In code, comments are how we add extra meaning or instructions. That's a good idea for prompts, too.

* [Git tracking for building models](https://www.reddit.com/r/StableDiffusion/comments/144dd2n/gittheta_for_tracking_the_provenance_of_stable/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

    * [git-theta on GitHub](https://github.com/r-three/git-theta)

    * Great idea. I'm having trouble tracking everything I do with models, and I'm looking for tools to improve my workflow.

    * I might just start with a notebook. Notebooks are your friend.

    * Sketchbooks are your lover.
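On the prompt-comments idea: I haven't checked what syntax that extension actually uses, so this is a minimal Python sketch that simply assumes `#` starts a comment running to the end of the line. It strips the comments and joins what's left into one prompt, which shows why comments are handy: you can label chunks of a prompt, or switch one off, without deleting it.

```python
def strip_prompt_comments(prompt: str) -> str:
    """Drop everything after '#' on each line, then join the remaining text.

    Assumption: '#' marks a comment. The extension linked above may use a
    different syntax; this only illustrates the idea.
    """
    kept = []
    for line in prompt.splitlines():
        text = line.split("#", 1)[0].strip()  # keep only the part before '#'
        if text:
            kept.append(text)
    return " ".join(kept)


if __name__ == "__main__":
    prompt = """
elven ranger sneaking through a forest at night,  # subject
fur clothing, torch,                              # costume and props
# ((dirt and grit)),                              # switched off for now
DOF, 8k, (photorealistic:1.5)                     # style
"""
    print(strip_prompt_comments(prompt))
    # elven ranger sneaking through a forest at night, fur clothing, torch, DOF, 8k, (photorealistic:1.5)
```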

## Bloods and Crits

### "Cradle of giants" [on Reddit](https://www.reddit.com/r/StableDiffusion/comments/142g5f7/%D1%81radle_of_giants/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

This one is from u/LongjumpingRelease32 again, who did the one with the policemen standing around a street light last week. I didn't realize those were progress pictures; I thought they were a series. They posted a new set this week, so instead of a pure crit, let's look at what changes from step to step.

They start with a composition...

Then they get the first concept...

Then they go hi-res, then they do this...

Then they have a few iterations before final, including a color grading step (nice work to finish it up)

### "Sweet Dreams" [on Reddit](https://www.reddit.com/r/StableDiffusion/comments/13z5iqv/sweet_dreams/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

I absolutely love this in terms of content, story, and rendering. It's very thought-provoking and beautiful.

The composition is also pretty good.

We have some problems, though, and one of them is depth. There's so much potential for depth here, but the woman is flattened, and I think that's from the very, very strong contrast line around the "portal".

Then there are figural problems. There's something funny about her nose going into the cloud, the index finger needs a quick fix, there's a weird crease behind her arm, and the upper body shape is off. I think you'll spot this stuff more quickly if you draw from observation more.

I'd almost like to see the clouds kind of come out of this window.

### "The Mall. Its actually kind of funny to render something so mundane" [on Reddit](https://www.reddit.com/r/StableDiffusion/comments/1450fdy/the_mall_its_actually_kind_of_funny_to_render/?utm_source=share&utm_medium=android_app&utm_name=androidcss&utm_term=1&utm_content=share_button)

I picked this partly because the OP wrote a story in the comments to go along with the workflow. The story is relatable and cool, and it adds to the narrative: giving your work context helps, and the artist did exactly that. It also played to the audience, because I went looking for the workflow and ended up reading the story too. Well done.

This is one from a series; most of them have the same kinds of issues.

The people need inpainting. The figure on the far left is merged into the background.

The compositions are very good, and the renderings are very good.

The advertisements, billboards, and posters need another pass. I'd fix up the "fake text" too.

Also, hey: realism is awesome. See [realism on Wikipedia](https://en.wikipedia.org/wiki/Realism_(arts)). There used to be a time when people didn't paint everyday things; the subject had to be religious, or about the monarchy, or something like that. We see that kind of idealism A TON IN AI ART (*cough* waifus *cough*), to the point where it's almost sickening. So mundane is a recipe for winning.

### Washington Chase (by me)

Speaking of mundane! I made a piece based on someone's old mail.

I really struggled to find the right balance to get the envelope to sit in 3D space, and the perspective could probably use more work overall.

I should probably map out a perspective grid and fix it all the way. But it's supposed to be "painterly," and I'm kind of OK with it having some... "still-life-like focus in some areas." It feels natural, and it's not working against me entirely.

I don't like that nothing repeats the form of the letter. That's actually why I put the books there: so there'd be another rectangular shape moving into the background. It's not quite strong enough, though.

I also took some liberties with the books and turned the cover a weird way. I wanted the text upside down because it's a strong design, and right-side-up text could draw your eye away from what I want you to focus on first, which is the envelope.

It's from an actual "cover" I bought.

## Technique of the Week: Multi-control net for hands

Inspired by this [reddit thread](https://www.reddit.com/r/StableDiffusion/comments/1436tbw/why_does_controlnet_not_handle_my_desired_hand/)

### Woisek's Method

*"Just use another helper if one is not enough ... 🤓"* -/u/Woisek

If you don't have two ControlNet units in AUTOMATIC1111 or Vlad's fork, go to the ControlNet settings; there's a slider for the number of units (and changing it needs a full restart).

We start with:

(FWIW, I reverse image searched it, and I think it's from Adobe Stock.)

And we add a ControlNet for pose...

Then we process one for soft edge. I edited the mouth out of mine and added some detail to the hand.

And I came out with an image like this:

(As you can see, I didn't inpaint to improve it; this is just an illustration of the method.)
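The same two-ControlNet setup can also be driven from a script through the web UI's API (launch it with `--api`). Here's a minimal Python sketch that only builds the request body, mirroring the ControlNet 0 and ControlNet 1 settings recorded in the settings line for this image. The field names (`alwayson_scripts`, `controlnet`, `args`, `module`, `guidance_start`, and so on) are my understanding of the ControlNet extension's API and may differ between versions, so check them against the extension's own documentation before relying on this. Nothing is sent, and the image bytes are placeholders.

```python
import base64
import json


def controlnet_unit(image_png: bytes, module: str, model: str,
                    weight: float = 1.0, pixel_perfect: bool = False) -> dict:
    """One ControlNet unit (field names assumed from the extension's API)."""
    return {
        "input_image": base64.b64encode(image_png).decode("ascii"),
        "module": module,          # preprocessor; "none" = image is already a control map
        "model": model,
        "weight": weight,
        "guidance_start": 0.0,     # starting/ending: (0, 1)
        "guidance_end": 1.0,
        "resize_mode": "Crop and Resize",
        "control_mode": "Balanced",
        "pixel_perfect": pixel_perfect,
    }


def txt2img_payload(prompt: str, pose_png: bytes, edge_png: bytes) -> dict:
    """txt2img request body with two ControlNet units: pose + hand-edited soft edge."""
    return {
        "prompt": prompt,
        "steps": 35,
        "sampler_name": "DPM++ 2M Karras",
        "cfg_scale": 5,
        "width": 768,
        "height": 512,
        "alwayson_scripts": {
            "controlnet": {
                "args": [
                    # Unit 0: run the openpose preprocessor on the reference photo.
                    controlnet_unit(pose_png, "openpose",
                                    "control_v11p_sd15_openpose [cab727d4]"),
                    # Unit 1: the soft-edge map was edited by hand, so no preprocessor.
                    controlnet_unit(edge_png, "none",
                                    "control_v11p_sd15_softedge [a8575a2a]",
                                    pixel_perfect=True),
                ]
            }
        },
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice, read your reference photo and edge-map PNGs.
    payload = txt2img_payload("woman holding a hand to her forehead",
                              b"pose-png-bytes", b"edge-png-bytes")
    body = json.dumps(payload)  # POST to http://127.0.0.1:7860/sdapi/v1/txt2img
    units = payload["alwayson_scripts"]["controlnet"]["args"]
    print(f"{len(units)} ControlNet units, {len(body)} bytes of JSON")
```

Keeping each unit in its own small function makes it easy to swap the soft-edge unit for another control type without touching the rest of the request.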

The prompt isn't so important, but in case you'd like it...

```

<lora:wip_v121:0.7> cute 3 0 year old dutch woman holding a hand to her forehead, (detailed skin, skin texture, goosebumps), cozy neighborhood coffee shop serving artisanal brews and fresh pastries, photorealism, golden hour, Crewson style shadowing, 8k resolution, minimalistic, masterpiece, pastel soft neon lighting, trending on artstation, ultra detailed, hyperrealism, raw photo, analog style, film grain, depth of field

Negative prompt: (bad_prompt_v2:0.8),Asian-Less-Neg,bad-hands-5, (drawing, anime, render), UnrealisticDream, (skinny:1.2)

Steps: 35, Sampler: DPM++ 2M Karras, CFG scale: 5, Seed: 1141576760, Face restoration: CodeFormer, Size: 768x512, Model hash: 70346f7a1e, Model: artAerosATribute_aerosNovae, VAE: vae-ft-mse-840000-ema-pruned, ControlNet 0: "preprocessor: openpose, model: control_v11p_sd15_openpose [cab727d4], weight: 1, starting/ending: (0, 1), resize mode: Crop and Resize, pixel perfect: False, control mode: Balanced, preprocessor params: (512, 64, 64)", ControlNet 1: "preprocessor: none, model: control_v11p_sd15_softedge [a8575a2a], weight: 1, starting/ending: (0, 1), resize mode: Crop and Resize, pixel perfect: True, control mode: Balanced, preprocessor params: (512, 64, 64)"

```

And `cozy neighborhood coffee shop serving artisanal brews and fresh pastries` is a ChatGPT-generated entry I keep in a dynamic prompt list of locations, to mix things up when I'm genning a bunch of images.

And here's a 4-set so you can see it's not perfect... (as if you thought it was to begin with!)
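For anyone who hasn't used them: in the Dynamic Prompts extension, a wildcard is (as I understand it) a `__name__` token that gets swapped for a random line from a matching text file, which is how one location list can vary a whole batch. Here's a toy Python version of just that substitution step. It is not the extension's code, and only the first location below comes from the prompt above; the other two are hypothetical stand-ins.

```python
import random
import re

# Hypothetical wildcard lists. With the extension these would be text files,
# one entry per line; only the first location comes from the prompt above.
WILDCARDS = {
    "locations": [
        "cozy neighborhood coffee shop serving artisanal brews and fresh pastries",
        "rain-soaked train platform at dusk",
        "quiet public library reading room",
    ],
}

# A wildcard token looks like __name__.
_TOKEN = re.compile(r"__([A-Za-z0-9_-]+?)__")


def expand(template: str, rng: random.Random) -> str:
    """Replace every __name__ token with a random entry from WILDCARDS[name]."""
    return _TOKEN.sub(lambda m: rng.choice(WILDCARDS[m.group(1)]), template)


if __name__ == "__main__":
    rng = random.Random(1141576760)  # fixed seed so the batch is reproducible
    template = "woman holding a hand to her forehead, __locations__, golden hour"
    for _ in range(4):
        print(expand(template, rng))
```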

### My attempt

Plus a bit of Blender.

I used to be a great Blender hacker; I'm pretty medium and rusty these days! And I'm stuck in a Blender 2.x mindset.

Here's the tutorial for the process I use for poses with Blender.

[Using 3D poses in Blender, from AIpreneur](https://www.youtube.com/watch?v=ptEZQrKgHAg&t=331s)

Toyxyz's [Character bones that look like Openpose for blender](https://toyxyz.gumroad.com/l/ciojz)

Worth a few bucks, I think.

And while this looks like a mess...

I get this pose out of it...

And this hand line art...

...It didn't turn out as well as Woisek's!

## Updates on my project.