# image-2-code

## 1

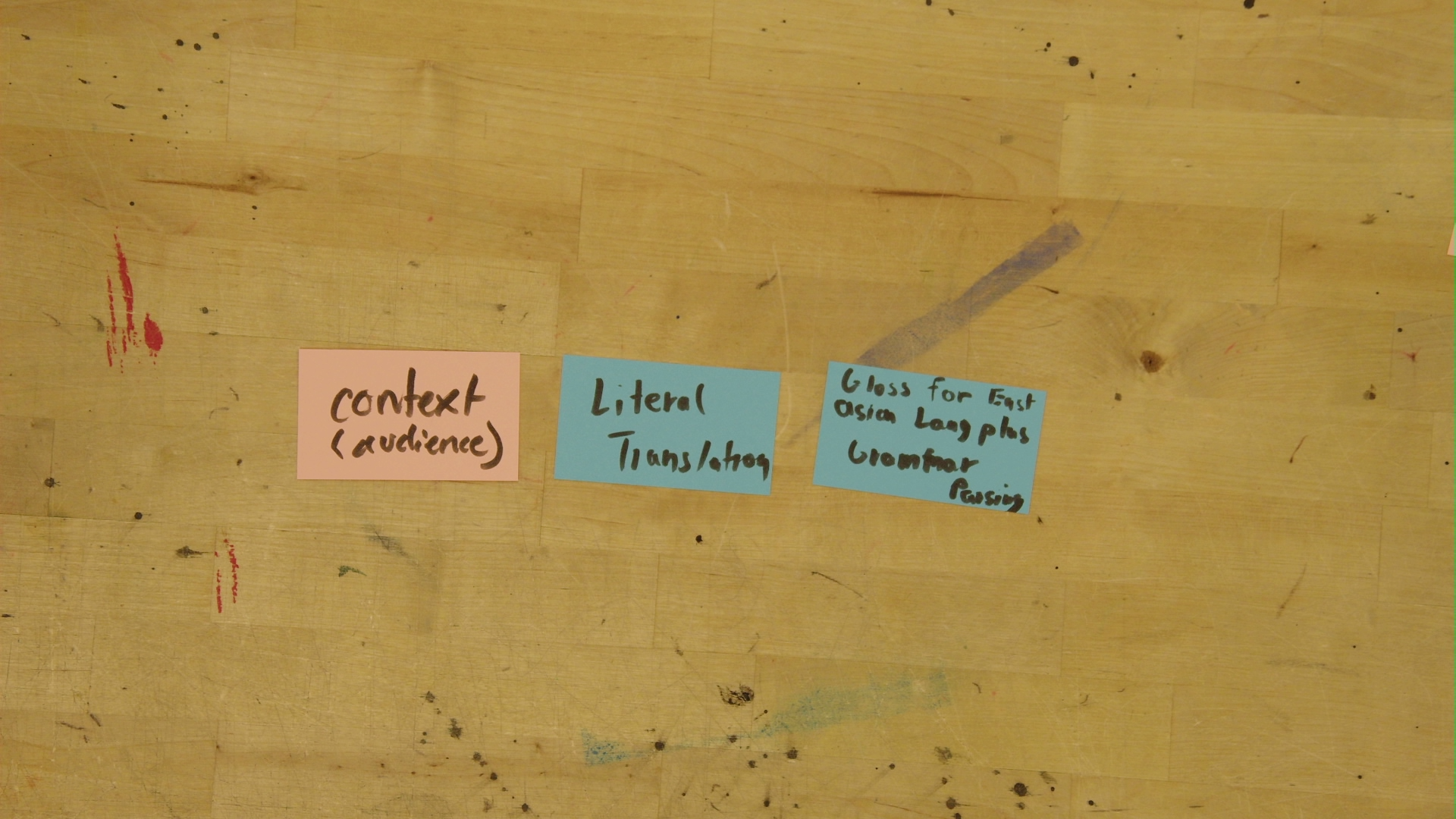

1. **Context (Audience)** → defines who the translation is for.

2. **Literal Translation** → generates a faithful, word-level translation.

3. **Gloss + Grammar Passing (for East Asian Languages)** → refines translation to include cultural/grammatical cues for pedagogy.

---

```python
# 🟧 CARD 1: CONTEXT (AUDIENCE)
# Assumes `client`, `MODEL_MAIN`, and `SOURCE_TEXT` are defined upstream.

def define_context(src_text: str, target_audience: str) -> str:
    """
    Ask the model to analyze the source text and extract key context features
    relevant to the target audience (e.g., tone, register, cultural references).
    """
    prompt = f"""
You are preparing to translate a passage for a specific audience.

Source text:
{src_text}

Audience description:
{target_audience}

Step 1: Identify the intended communicative goals, tone, and cultural nuances.
Step 2: Suggest translation strategies to preserve those features.

Output only a short prose paragraph describing the translation approach.
"""
    resp = client.responses.create(
        model=MODEL_MAIN,
        input=prompt,
    )
    return resp.output_text.strip()


context_summary = define_context(
    SOURCE_TEXT,
    "English-speaking undergraduate students in a world literature course",
)
print(context_summary)
```

---

```python
# 🟦 CARD 2: LITERAL TRANSLATION

def literal_translation(src_text: str, context_notes: str) -> str:
    """
    Perform a literal, close translation that preserves word order and syntax
    as much as possible while remaining grammatical in English.
    """
    prompt = f"""
Translate the following passage as literally as possible into English.
Use the context notes as interpretive guidance but avoid paraphrase.

<context>
{context_notes}
</context>

<source>
{src_text}
</source>

Output only the English translation text.
"""
    resp = client.responses.create(
        model=MODEL_MAIN,
        input=prompt,
    )
    return resp.output_text.strip()


draft_translation = literal_translation(SOURCE_TEXT, context_summary)
print(draft_translation)
```

---

```python
# 🟦 CARD 3: GLOSS + GRAMMAR PASSING (for East Asian Languages)
from IPython.display import Markdown, display  # needed for the rendered output below


def gloss_and_grammar_pass(src_text: str, literal_english: str) -> str:
    """
    Add interlinear glosses, key grammar notes, and minimal cultural annotations.
    Especially suited for pedagogical translation of East Asian languages.
    """
    # Both texts are passed in so the model has the source and the draft to align.
    prompt = f"""
You are producing a glossed translation for students learning about East Asian languages.

Starting from the literal English translation, provide:
• A brief interlinear gloss (word or phrase-level alignment)
• Grammar notes (e.g., particle usage, verb aspect, honorifics)
• Minimal commentary on cultural/idiomatic choices

Keep the gloss compact and suitable for classroom use.

<source>
{src_text}
</source>

<literal_translation>
{literal_english}
</literal_translation>

Return as Markdown with the following sections:
### Gloss
### Grammar Notes
### Commentary
"""
    resp = client.responses.create(
        model=MODEL_MAIN,
        input=prompt,
    )
    return resp.output_text.strip()


annotated_translation = gloss_and_grammar_pass(SOURCE_TEXT, draft_translation)
display(Markdown(annotated_translation))
```

---

```python
# OPTIONAL: FEEDBACK + REVISION LOOP (reuses your sample functions)
feedback = judge_translation(SOURCE_TEXT, draft_translation)
revised_translation = revise_translation(SOURCE_TEXT, draft_translation, feedback)
print(revised_translation)
```

---

### 🧩 Summary of the Workflow

| Step | Card                    | Function                                       | Purpose                                      |
| ---- | ----------------------- | ---------------------------------------------- | -------------------------------------------- |
| 1    | 🟧 Context (Audience)   | `define_context()`                             | Establish communicative and cultural frame   |
| 2    | 🟦 Literal Translation  | `literal_translation()`                        | Produce raw literal translation              |
| 3    | 🟦 Gloss + Grammar Pass | `gloss_and_grammar_pass()`                     | Pedagogical refinement for language learners |
| 4    | (Optional Loop)         | `judge_translation()` + `revise_translation()` | Iterative feedback/refinement chain          |

---

## 2 (Jonah?)

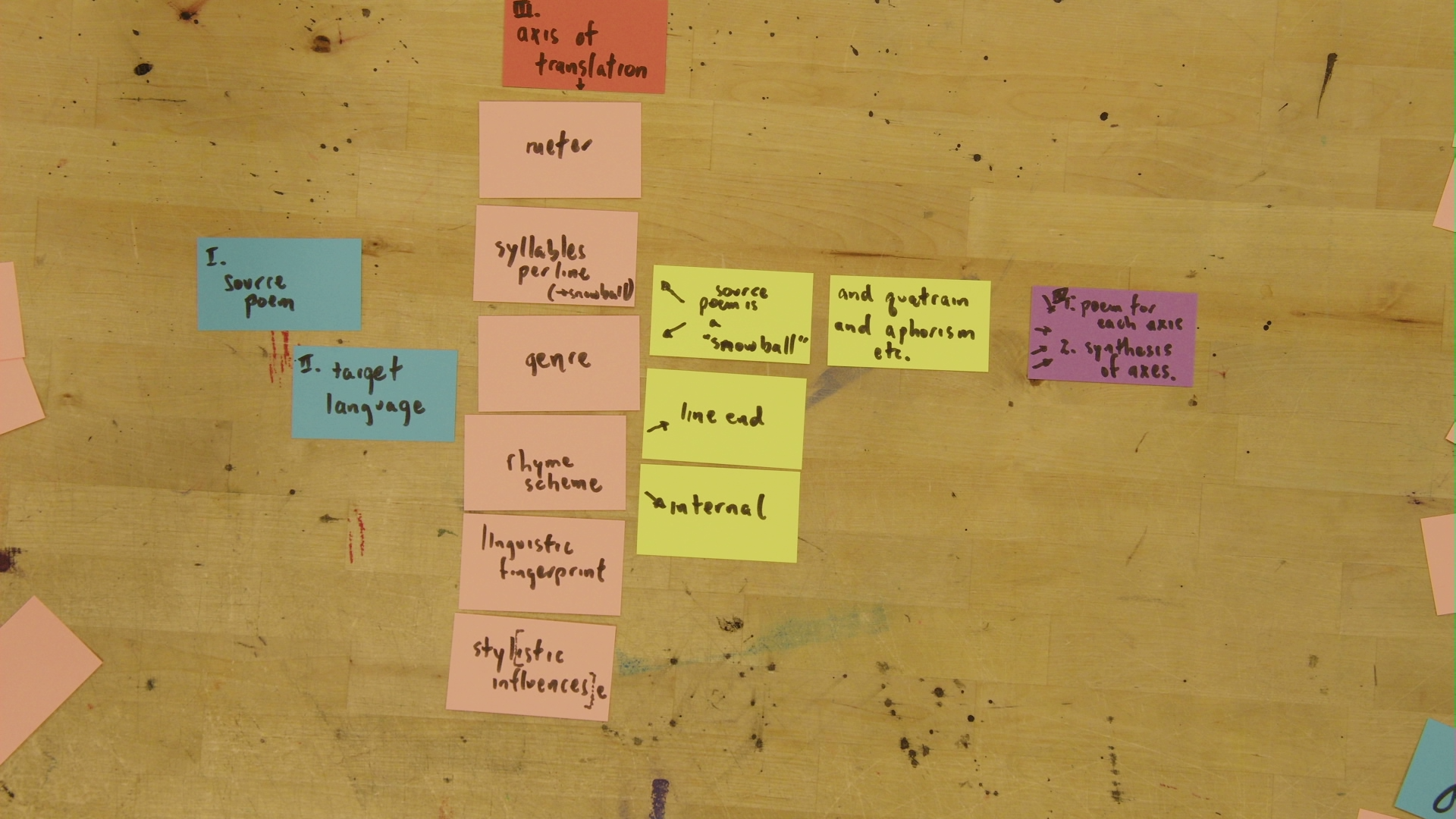

* **I. source poem**
* **I. target language** (2nd blue card, implicitly “II.”)
* **III. axes of translation** (column header)
  * meter
  * syllables per line *(+ “snowball” option)*
  * genre
  * rhyme scheme
  * linguistic fingerprint
  * stylistic influences
* Side notes (yellow cards):
  * “source poem is a ‘snowball’ ”
  * “line end”
  * “internal”
  * “and quatrain and aphorism etc.” *(forms/containers)*
* Purple card (tasks):
  1. poem for each axis
  2. synthesis of axes

Below are **drop-in cells**. They assume you already have `client` and `MODEL_MAIN` defined (same API pattern as in your sample `judge_translation`/`revise_translation` cells).
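For dry runs without API access, the cells can be exercised against a stand-in client. The sketch below is an assumption rather than part of the original setup: `StubClient` and `_StubResponses` are hypothetical names, and they imitate only the single `responses.create(model=..., input=...)` call and the `.output_text` attribute that the cells rely on.

```python
from types import SimpleNamespace


class _StubResponses:
    """Hypothetical stand-in: mimics only the `create(model=..., input=...)` call."""

    def __init__(self):
        self.calls = []  # every prompt is recorded here for later inspection

    def create(self, model: str, input: str):
        self.calls.append({"model": model, "input": input})
        # Return an object exposing `.output_text`, which is all the cells read.
        return SimpleNamespace(output_text=f"[stub reply #{len(self.calls)}]")


class StubClient:
    """Hypothetical offline replacement for `client` (no network access)."""

    def __init__(self):
        self.responses = _StubResponses()


stub_client = StubClient()
reply = stub_client.responses.create(model="stub-model", input="Translate: hello")
print(reply.output_text)
```

Setting `client = StubClient()` and `MODEL_MAIN = "stub-model"` before running the cells lets you inspect the generated prompts in `client.responses.calls`. Cells that expect JSON back will fail to parse the stub reply, which is expected.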

---

```python
# CELL 1 — Setup: source & target
# Required: define these upstream or here.
SOURCE_TEXT = """<PASTE YOUR SOURCE POEM HERE>"""
TARGET_LANGUAGE = "English"  # e.g., "English", "French", etc.

# Optional: form containers spotted on cards
FORMS = ["quatrain", "aphorism"]  # extend as needed

# Optional: the source poem is a snowball?
SOURCE_IS_SNOWBALL = False  # set True if the original grows syllables per line
```

---

```python
# CELL 2 — Axis schema (from the cards)
from dataclasses import dataclass, field, asdict
from typing import Optional, List, Dict, Any


@dataclass
class Meter:
    # e.g., "iambic pentameter", "7+5 mora", "free verse but rhythmic periodicity"
    pattern: Optional[str] = None


@dataclass
class SyllablesPerLine:
    # explicit counts or a rule ("snowball": increment each line)
    counts: Optional[List[int]] = None
    snowball: bool = False  # True → 1,2,3,4… or custom progression


@dataclass
class Genre:
    # e.g., "lyric", "didactic", "elegy", "satire", "haiku-like", etc.
    label: Optional[str] = None


@dataclass
class RhymeScheme:
    # e.g., "ABAB", "AABB", "none", internal vs line-end tendencies
    scheme: Optional[str] = None
    line_end_bias: bool = True
    internal_bias: bool = False


@dataclass
class LinguisticFingerprint:
    # lexical + syntactic habits (alliteration, particles, honorifics, verb-final, etc.)
    notes: Optional[str] = None


@dataclass
class StylisticInfluences:
    # e.g., authors/movements/devices to echo
    influences: Optional[List[str]] = None


@dataclass
class TranslationAxes:
    # default_factory gives every instance its own nested objects; a bare
    # `Meter()` default is shared across instances and is rejected outright
    # by Python 3.11+ as a mutable default.
    meter: Meter = field(default_factory=Meter)
    syllables: SyllablesPerLine = field(default_factory=SyllablesPerLine)
    genre: Genre = field(default_factory=Genre)
    rhyme: RhymeScheme = field(default_factory=RhymeScheme)
    fingerprint: LinguisticFingerprint = field(default_factory=LinguisticFingerprint)
    style: StylisticInfluences = field(default_factory=StylisticInfluences)
    forms: List[str] = field(default_factory=list)  # e.g., ["quatrain", "aphorism"]


axes = TranslationAxes(
    meter=Meter(pattern=None),  # keep None for model to infer/decide
    syllables=SyllablesPerLine(counts=None, snowball=False),
    genre=Genre(label=None),
    rhyme=RhymeScheme(scheme=None, line_end_bias=True, internal_bias=False),
    fingerprint=LinguisticFingerprint(notes=None),
    style=StylisticInfluences(influences=None),
    forms=list(FORMS or []),
)
```

---

```python
# CELL 3 — Context builder (source poem + target language + axis briefing)
import json


def build_axis_brief(axes: TranslationAxes) -> str:
    d = asdict(axes)
    # tidy JSON for visibility inside prompts
    return json.dumps(d, ensure_ascii=False, indent=2)


def plan_from_cards(src: str, target_lang: str, axes: TranslationAxes, source_is_snowball: bool = False) -> str:
    brief = build_axis_brief(axes)
    # Tell the model explicitly whether the snowball card applies.
    if source_is_snowball:
        snowball_note = (
            'The source poem is a "snowball" (syllables increase per line); '
            "preserve that constraint if feasible."
        )
    else:
        snowball_note = (
            'The source poem is not marked as a "snowball"; apply a snowball '
            "pattern only if the axis brief asks for one."
        )
    prompt = f"""
You are planning a literary translation with explicit constraints derived from workshop cards.

<source_poem>
{src}
</source_poem>

<target_language>{target_lang}</target_language>

<cards_axis_brief>
{brief}
</cards_axis_brief>

Additional notes:
- {snowball_note}
- "line end" vs "internal" refers to rhyme placement preference.

Task:
1) Summarize the translation plan in 5–8 bullet points mapping each axis to concrete constraints.
2) Flag any impossible combinations or tradeoffs.

Return ONLY Markdown with:
### Plan
- bullet points...
### Risks
- bullet points...
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


plan_md = plan_from_cards(SOURCE_TEXT, TARGET_LANGUAGE, axes, SOURCE_IS_SNOWBALL)
```

---

```python
# CELL 4 — Literal pass (baseline), agnostic to axes

def literal_pass(src: str, target_lang: str) -> str:
    prompt = f"""
Translate the source poem as literally as possible into {target_lang}.
Keep source line breaks where possible. Avoid paraphrase, additions, or omissions.
Output ONLY the translation text (no commentary, no Markdown).

<source>
{src}
</source>
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


literal_translation = literal_pass(SOURCE_TEXT, TARGET_LANGUAGE)
```

---

```python
# CELL 5 — Variant generator: "poem for each axis"

def axis_instruction(axis_name: str, axes: TranslationAxes) -> str:
    """Produce a concise, enforceable instruction for a single axis."""
    a = axes
    if axis_name == "meter":
        return f"Conform to meter: {a.meter.pattern or 'infer appropriate meter; justify implicitly via rhythm'}."
    if axis_name == "syllables":
        if a.syllables.snowball:
            return "Use a SNOWBALL pattern: increase syllables line-by-line (e.g., 1,2,3,4, ...)."
        if a.syllables.counts:
            return f"Enforce syllables per line: {a.syllables.counts} (strict)."
        return "Keep roughly constant syllable counts per line; avoid major fluctuations."
    if axis_name == "genre":
        return f"Adopt genre conventions of: {a.genre.label or 'closest fitting genre'}."
    if axis_name == "rhyme":
        bits = [f"Rhyme scheme: {a.rhyme.scheme or 'subtle approximate rhyme'}"]
        if a.rhyme.line_end_bias:
            bits.append("Favor line-end rhyme.")
        if a.rhyme.internal_bias:
            bits.append("Favor internal rhyme.")
        return " | ".join(bits) + "."
    if axis_name == "fingerprint":
        return f"Linguistic fingerprint: {a.fingerprint.notes or 'echo syntactic/lexical habits from the source'}."
    if axis_name == "style":
        return "Stylistic influences: " + (", ".join(a.style.influences) if a.style.influences else "tastefully echo source-era/style") + "."
    if axis_name == "forms":
        return "Conform to one of these containers: " + (", ".join(a.forms) if a.forms else "no strict container") + "."
    return ""


AXES_ORDER = ["meter", "syllables", "genre", "rhyme", "fingerprint", "style", "forms"]


def generate_axis_variant(src: str, target_lang: str, axes: TranslationAxes, axis_name: str, base: Optional[str] = None) -> str:
    """
    Create a 'poem for each axis' variant: enforce ONE axis strongly, keep others minimal.
    If `base` is provided, treat it as the starting draft (e.g., literal pass).
    """
    inst = axis_instruction(axis_name, axes)
    # Built outside the prompt: a backslash inside an f-string expression
    # is a SyntaxError before Python 3.12.
    draft_block = f"<starting_draft>\n{base}\n</starting_draft>" if base else ""
    prompt = f"""
Produce a translation in {target_lang}.
Enforce the following axis **strongly**: {axis_name.upper()}.
Constraint: {inst}

Keep all other axes minimal and non-contradictory.

<source>
{src}
</source>

{draft_block}

Output ONLY the poem (no commentary, no Markdown).
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


axis_variants = {
    axis: generate_axis_variant(SOURCE_TEXT, TARGET_LANGUAGE, axes, axis, base=literal_translation)
    for axis in AXES_ORDER
}
```

---

```python
# CELL 6 — Axis-fit judge (JSON) to score each variant for its axis

def judge_axis_fit(axis_name: str, src: str, draft: str, axes: TranslationAxes) -> dict:
    """
    Return ONLY JSON:
    {"score": 0-10, "positives": [...], "negatives": [...], "evidence": "..."}
    """
    inst = axis_instruction(axis_name, axes)
    prompt = f"""
Evaluate how well the DRAFT satisfies the AXIS constraint.

AXIS: {axis_name}
CONSTRAINT: {inst}

<source>{src}</source>
<draft>{draft}</draft>

Return ONLY a JSON object with keys:
- "score" (integer 0-10)
- "positives" (list of short strings)
- "negatives" (list of short strings)
- "evidence" (short prose justification)
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    try:
        return json.loads(resp.output_text)
    except json.JSONDecodeError as e:
        raise ValueError("Model did not return valid JSON for axis judge.") from e


axis_reviews = {ax: judge_axis_fit(ax, SOURCE_TEXT, axis_variants[ax], axes) for ax in AXES_ORDER}
axis_reviews
```
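The syllable axis can also be spot-checked locally, without a model call. The sketch below is an illustration only: `rough_syllables`, `is_snowball`, and `check_snowball` are hypothetical helpers, the syllable estimate is a crude vowel-group heuristic that only makes sense for English output, and "snowball" is read here as strictly increasing counts from line to line.

```python
import re
from typing import List


def rough_syllables(line: str) -> int:
    """Very rough English syllable estimate: count vowel groups per word."""
    total = 0
    for word in re.findall(r"[a-zA-Z']+", line.lower()):
        count = len(re.findall(r"[aeiouy]+", word))
        # Drop a typical silent final 'e' ("there" -> 1), but never below one.
        if word.endswith("e") and not word.endswith("le") and count > 1:
            count -= 1
        total += max(count, 1)
    return total


def is_snowball(counts: List[int]) -> bool:
    """True when every line has strictly more syllables than the one before."""
    return all(b > a for a, b in zip(counts, counts[1:]))


def check_snowball(poem: str) -> dict:
    """Estimate syllables for each non-blank line and test the snowball rule."""
    lines = [ln for ln in poem.splitlines() if ln.strip()]
    counts = [rough_syllables(ln) for ln in lines]
    return {"counts": counts, "is_snowball": is_snowball(counts)}


sample = "I\nsee you\nover there\nwaiting for the rain"
print(check_snowball(sample))
```

A check like this could be run on `axis_variants["syllables"]` as a sanity signal next to the judge's score. Because the heuristic miscounts many words, treat a mismatch as a prompt to look closer rather than as a verdict.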

---

```python
# CELL 7 — Improve each axis variant using your existing critic/reviser
# re-use your provided judge/revise or plug in here.
# Example using your functions:
improved_axis_variants = {}

for ax in AXES_ORDER:
    critique = {
        "positives": axis_reviews[ax].get("positives", []),
        "negatives": axis_reviews[ax].get("negatives", []),
    }
    improved_axis_variants[ax] = revise_translation(SOURCE_TEXT, axis_variants[ax], critique)

improved_axis_variants
```

---

```python
# CELL 8 — Synthesis: combine axes (purple card #2)

def synthesize_axes(src: str,
                    target_lang: str,
                    axes: TranslationAxes,
                    ingredients: Dict[str, str]) -> str:
    """
    Create a single poem that satisfies ALL axes simultaneously,
    using the improved per-axis variants as style/material references.
    """
    references_md = "\n\n".join(
        f"### {k}\n{v}" for k, v in ingredients.items()
    )
    brief = build_axis_brief(axes)
    prompt = f"""
Create a final translation in {target_lang} that **synthesizes all axes**.

<axis_brief>
{brief}
</axis_brief>

Use the following improved axis-specific drafts as references (do not copy verbatim; resolve conflicts with best judgment):

{references_md}

<source>
{src}
</source>

Output ONLY the final poem (no commentary, no Markdown).
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


final_translation = synthesize_axes(
    SOURCE_TEXT,
    TARGET_LANGUAGE,
    axes,
    improved_axis_variants,
)
final_translation
```

---

```python
# CELL 9 — Optional: final quality pass using your judge/revise loop
feedback = judge_translation(SOURCE_TEXT, final_translation)
final_translation_v2 = revise_translation(SOURCE_TEXT, final_translation, feedback)
final_translation_v2
```

---

```python
# CELL 10 — (Optional) East Asian gloss & grammar (if you want to keep this available)

def gloss_for_east_asian(src: str, translation: str, target_lang: str) -> str:
    prompt = f"""
Produce a compact classroom-ready gloss/grammar note for a translation into {target_lang}.

Include:
### Gloss
### Grammar Notes (e.g., particles, honorifics, word order, aspect)
### Commentary (idiom/culture)

Return Markdown only.

<source>{src}</source>
<translation>{translation}</translation>
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


# from IPython.display import Markdown, display
# gloss_md = gloss_for_east_asian(SOURCE_TEXT, final_translation_v2, TARGET_LANGUAGE)
# display(Markdown(gloss_md))
```

---

### How this maps to your cards

* **I. source poem / I. target language** → Cells 1 & 3 inputs.

* **III. axes of translation** → `TranslationAxes` dataclass + `AXES_ORDER`.

* **meter / syllables per line (snowball) / genre / rhyme scheme / linguistic fingerprint / stylistic influences** → enforced via `axis_instruction()` and judged in Cell 6.

* **line end / internal** → toggles in `RhymeScheme`.

* **“source poem is a ‘snowball’ ”** → `SOURCE_IS_SNOWBALL` and `SyllablesPerLine.snowball`.

* **“and quatrain and aphorism etc.”** → `FORMS` / `forms` axis.

* **Purple tasks** → Cell 5 (“poem for each axis”) + Cell 8 (“synthesis of axes”).

## 3

---

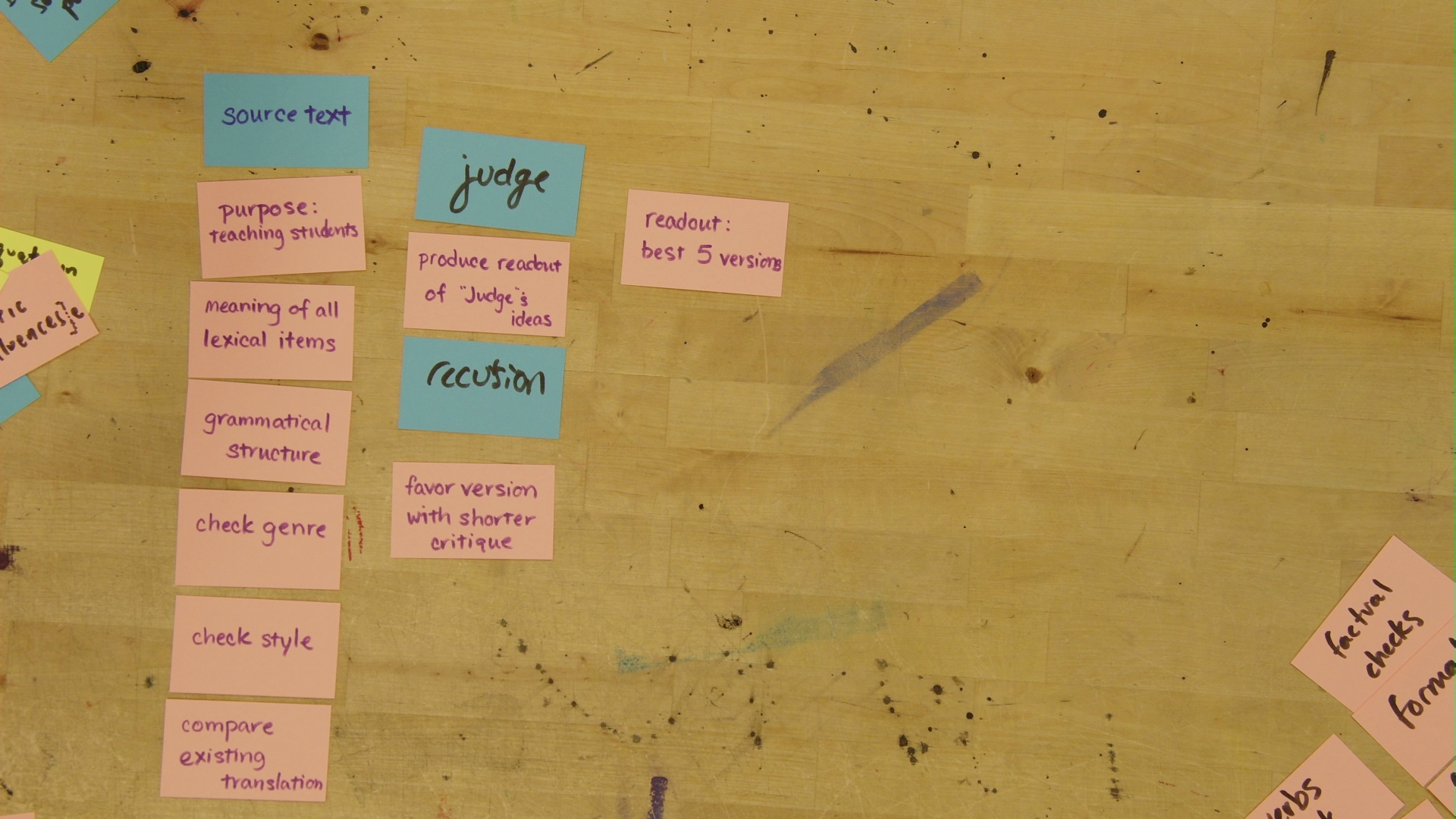

### 🩵 Blue cards

* **source text**

* **judge**

* **recursion**

### 🩷 Pink cards (left column)

* **purpose: teaching students**

* **meaning of all lexical items**

* **grammatical structure**

* **check genre**

* **check style**

* **compare existing translation**

### 🩷 Pink cards (right middle)

* **produce readout of “Judge’s ideas”**

* **favor version with shorter critique**

* **readout: best 5 versions**

### 🩷 Pink cards (bottom right corner)

* **factual checks**

* **formal checks**

---

This iteration emphasizes:

* **Multiple recursive rounds** of improvement.

* **Selective judgment:** choose *best N* versions.

* **Pedagogical readability:** shorter, more digestible critiques for students.

---

Here’s a **Python notebook chain** implementing this updated workflow.

It extends the earlier recursion code with:

1. Generation of **N recursive improvements**.

2. **Judging & ranking** each version.

3. Producing a **readout summary of top 5** for classroom discussion.

4. Optional final **factual & formal validation**.

---

```python
# CELL 1 — Setup
SOURCE_TEXT = """<PASTE YOUR SOURCE POEM OR TEXT HERE>"""
TARGET_LANGUAGE = "English"
PURPOSE = "Teaching students translation principles through iterative, judged improvements."

CHECKPOINTS = [
    "meaning of all lexical items",
    "grammatical structure",
    "check genre",
    "check style",
    "compare existing translation",
]

N_VERSIONS = 10  # total number of versions to produce, including the initial one
TOP_K = 5        # number of best versions to summarize
```

---

```python
# CELL 2 — Initial translation

def initial_translation(src: str, target_lang: str) -> str:
    prompt = f"""
Translate into {target_lang} as faithfully as possible.
Purpose: teaching students, so preserve transparency of lexical and grammatical choices.
Return only the translation text.

<source>{src}</source>
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


base_translation = initial_translation(SOURCE_TEXT, TARGET_LANGUAGE)
print(base_translation)
```

---

```python
# CELL 3 — Pedagogical judge (multi-criterion JSON)
import json


def judge_translation(src: str, draft: str, checkpoints: list) -> dict:
    prompt = f"""
Evaluate this translation according to the following checkpoints:
{', '.join(checkpoints)}

Return valid JSON of the form:
{{
  "<criterion>": {{
    "positives": [...],
    "negatives": [...]
  }},
  "overall_score": <0–10 integer>
}}

<source>{src}</source>
<translation>{draft}</translation>
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    try:
        return json.loads(resp.output_text)
    except json.JSONDecodeError:
        # Unparseable output is scored 0 so the version sinks in the ranking.
        return {"overall_score": 0}
```
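The judge above falls back to a score of 0 whenever `json.loads` fails, which can penalize a good version simply because the reply was wrapped in a code fence or a sentence of preamble. A more tolerant parser is one possible remedy; the sketch below assumes the reply contains a single JSON object, and `parse_json_loose` is a hypothetical helper name, not part of the original cells.

```python
import json
import re
from typing import Any, Optional


def parse_json_loose(text: str) -> Optional[Any]:
    """Try strict JSON first, then the outermost {...} span; None if both fail."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        pass
    # Take everything from the first '{' to the last '}' to skip fences or chatter.
    match = re.search(r"\{.*\}", text, flags=re.DOTALL)
    if match is None:
        return None
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError:
        return None


print(parse_json_loose('Here is my verdict: {"overall_score": 7}'))
```

Inside `judge_translation`, the `try`/`except` could then become `parsed = parse_json_loose(resp.output_text)` followed by `return parsed if isinstance(parsed, dict) else {"overall_score": 0}`.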

---

```python
# CELL 4 — Recursive improvement loop (favor concise critique)

def improve_translation(src: str, draft: str, judgment: dict) -> str:
    """
    Improve translation based on negatives; keep positives.
    Produce a concise revision suited to teaching.
    """
    prompt = f"""
You are revising a translation after receiving critique.
Keep positives intact, fix negatives, and favor brevity and clarity.
Output only the revised translation.

<source>{src}</source>
<judgment>{json.dumps(judgment, ensure_ascii=False, indent=2)}</judgment>
<draft>{draft}</draft>
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


def recursive_versions(src: str, base: str, n: int) -> list:
    """
    Produce n versions in total (the base plus n-1 revisions)
    by judging and improving repeatedly.
    """
    versions = [base]
    for _ in range(n - 1):
        j = judge_translation(src, versions[-1], CHECKPOINTS)
        improved = improve_translation(src, versions[-1], j)
        versions.append(improved)
    return versions


versions = recursive_versions(SOURCE_TEXT, base_translation, N_VERSIONS)
len(versions)
```
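A fixed number of rounds can waste calls once revisions stop improving or start repeating. The variant below is a sketch under assumptions, not the original loop: `recursive_versions_with_stop` and its `patience` parameter are hypothetical, and the judge and reviser are passed in as plain callables so the logic can be tried with stubs before wiring in `judge_translation` and `improve_translation`.

```python
from typing import Callable, Dict, List


def recursive_versions_with_stop(
    base: str,
    judge: Callable[[str], Dict],
    improve: Callable[[str, Dict], str],
    max_versions: int = 10,
    patience: int = 2,
) -> List[str]:
    """Judge/improve repeatedly; stop early on stagnation or repetition."""
    versions = [base]
    best = float("-inf")
    stale = 0  # consecutive rounds without a new best score
    while len(versions) < max_versions:
        judgment = judge(versions[-1])
        score = judgment.get("overall_score", 0)
        if score > best:
            best, stale = score, 0
        else:
            stale += 1
            if stale >= patience:
                break
        candidate = improve(versions[-1], judgment)
        if candidate in versions:  # the loop has started repeating itself
            break
        versions.append(candidate)
    return versions


# Stub demo: the score saturates at 3, so the loop stops before max_versions.
demo = recursive_versions_with_stop(
    "a",
    judge=lambda d: {"overall_score": min(len(d), 3)},
    improve=lambda d, j: d + "x",
)
print(demo)
```

In the notebook this could be called with `judge=lambda d: judge_translation(SOURCE_TEXT, d, CHECKPOINTS)` and `improve=lambda d, j: improve_translation(SOURCE_TEXT, d, j)`.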

---

```python
# CELL 5 — Judge and rank all versions

def rank_versions(src: str, versions: list, checkpoints: list) -> list:
    """
    Evaluate each version with the same judge and rank by overall_score.
    """
    scored = []
    for idx, v in enumerate(versions):
        j = judge_translation(src, v, checkpoints)
        score = j.get("overall_score", 0)
        scored.append({"index": idx + 1, "score": score, "text": v, "judgment": j})
    scored.sort(key=lambda x: x["score"], reverse=True)
    return scored


ranked = rank_versions(SOURCE_TEXT, versions, CHECKPOINTS)
top5 = ranked[:TOP_K]
```
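The ranking above sorts on `overall_score` alone, so the card "favor version with shorter critique" has no effect on which versions reach the top. One possible reading of that card is as a tie-breaker: among equal scores, prefer the version whose judge listed fewer negatives. The sketch below assumes the judgment shape requested in Cell 3; `critique_length` and `rank_favoring_short_critique` are hypothetical names.

```python
from typing import Dict, List


def critique_length(judgment: Dict) -> int:
    """Total characters across every 'negatives' list in the judgment."""
    total = 0
    for value in judgment.values():
        if isinstance(value, dict):  # skips "overall_score" and other scalars
            total += sum(len(str(item)) for item in value.get("negatives", []))
    return total


def rank_favoring_short_critique(scored: List[Dict]) -> List[Dict]:
    """Sort by score (high first), then by critique length (short first)."""
    return sorted(scored, key=lambda s: (-s["score"], critique_length(s["judgment"])))


example = [
    {"index": 1, "score": 8, "text": "A",
     "judgment": {"style": {"positives": [], "negatives": ["register drifts in line 3"]}, "overall_score": 8}},
    {"index": 2, "score": 8, "text": "B",
     "judgment": {"style": {"positives": [], "negatives": ["stiff"]}, "overall_score": 8}},
    {"index": 3, "score": 9, "text": "C", "judgment": {"overall_score": 9}},
]
print([item["index"] for item in rank_favoring_short_critique(example)])
```

Applied to the notebook, `top5 = rank_favoring_short_critique(ranked)[:TOP_K]` would replace the plain slice. This assumes every `score` is numeric.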

---

```python
# CELL 6 — Generate short critiques for teaching ("favor shorter critique")

def shorten_critique(judgment: dict) -> str:
    prompt = f"""
Summarize the judge's critique briefly for students (≤150 words).
Keep pedagogical clarity; highlight improvement focus areas.

<judgment>{json.dumps(judgment, ensure_ascii=False, indent=2)}</judgment>
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    return resp.output_text.strip()


for t in top5:
    t["short_readout"] = shorten_critique(t["judgment"])
```

---

```python
# CELL 7 — Readout: best 5 versions
from IPython.display import Markdown, display  # needed to render the readout


def best_versions_readout(top: list) -> str:
    readout = "# Readout: Best 5 Versions\n"
    for v in top:
        readout += f"\n## Version {v['index']} (Score: {v['score']})\n"
        readout += v["text"] + "\n\n"
        readout += f"**Short critique:** {v['short_readout']}\n\n"
        readout += "---\n"
    return readout


readout_md = best_versions_readout(top5)
display(Markdown(readout_md))
```

---

```python
# CELL 8 — Factual and formal checks (optional QA)

def qa_factual_formal(src: str, translation: str) -> dict:
    prompt = f"""
Perform factual and formal checks on this translation.

Return JSON:
{{
  "factual_issues": [list],
  "formal_issues": [list]
}}

<source>{src}</source>
<translation>{translation}</translation>
"""
    resp = client.responses.create(model=MODEL_MAIN, input=prompt)
    try:
        return json.loads(resp.output_text)
    except json.JSONDecodeError:
        return {"factual_issues": [], "formal_issues": ["JSON parse error"]}


qa_results = qa_factual_formal(SOURCE_TEXT, top5[0]["text"])
qa_results
```
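Some formal checks do not need a model at all. As an illustrative complement to the QA cell, the sketch below compares line and stanza counts and looks for prompt tags that leaked into the output. The choice of checks and the names `formal_checks` and `PROMPT_TAG` are assumptions; the cards only say "formal checks" without listing them.

```python
import re
from typing import List

# Tags used in the prompts above; finding one in the output suggests leakage.
PROMPT_TAG = re.compile(r"</?(source|translation|draft|judgment)>")


def _content_lines(text: str) -> List[str]:
    """Non-blank lines only."""
    return [ln for ln in text.splitlines() if ln.strip()]


def _stanzas(text: str) -> List[str]:
    """Blocks separated by one or more blank lines."""
    return [s for s in re.split(r"\n\s*\n", text.strip()) if s.strip()]


def formal_checks(source: str, translation: str) -> List[str]:
    """Return a list of human-readable formal issues (empty if none found)."""
    if not translation.strip():
        return ["translation is empty"]
    issues: List[str] = []
    n_src, n_tgt = len(_content_lines(source)), len(_content_lines(translation))
    if n_src != n_tgt:
        issues.append(f"line count differs: source {n_src}, translation {n_tgt}")
    s_src, s_tgt = len(_stanzas(source)), len(_stanzas(translation))
    if s_src != s_tgt:
        issues.append(f"stanza count differs: source {s_src}, translation {s_tgt}")
    if PROMPT_TAG.search(translation):
        issues.append("translation contains leftover prompt tags")
    return issues


print(formal_checks("a\nb\n\nc", "x\ny"))
```

The results could be merged into the model's answer, for example with `qa_results["formal_issues"] += formal_checks(SOURCE_TEXT, top5[0]["text"])`, provided the model returned that key as a list. A differing line count is not always an error in verse translation, so these are flags for discussion.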

---

### 🧩 Mapping of Cards → Notebook Steps

| Card                                                     | Cell / Function           | Description                                                              |
| -------------------------------------------------------- | ------------------------- | ------------------------------------------------------------------------ |
| **source text**                                          | `SOURCE_TEXT`             | Base input                                                               |
| **purpose: teaching students**                           | `PURPOSE`                 | Pedagogical frame                                                        |
| **meaning / grammar / genre / style / compare existing** | `CHECKPOINTS`             | Criteria for the judge                                                   |
| **judge**                                                | `judge_translation()`     | Evaluation per round                                                     |
| **produce readout of judge’s ideas**                     | `shorten_critique()`      | Classroom summary                                                        |
| **recursion**                                            | `recursive_versions()`    | Iterative self-improvement loop                                          |
| **favor version with shorter critique**                  | `shorten_critique()`      | Used for readability (condenses the critique; does not select versions)  |
| **readout: best 5 versions**                             | `best_versions_readout()` | Markdown summary for teaching                                            |
| **factual checks / formal checks**                       | `qa_factual_formal()`     | Final QA stage                                                           |

---
