# Teaching With AI

[slide deck here](https://docs.google.com/presentation/d/1BSeloyLny9gYH8VjjndvSVkH8nkYBETiwwJJP9dmUTM/edit?usp=sharing)

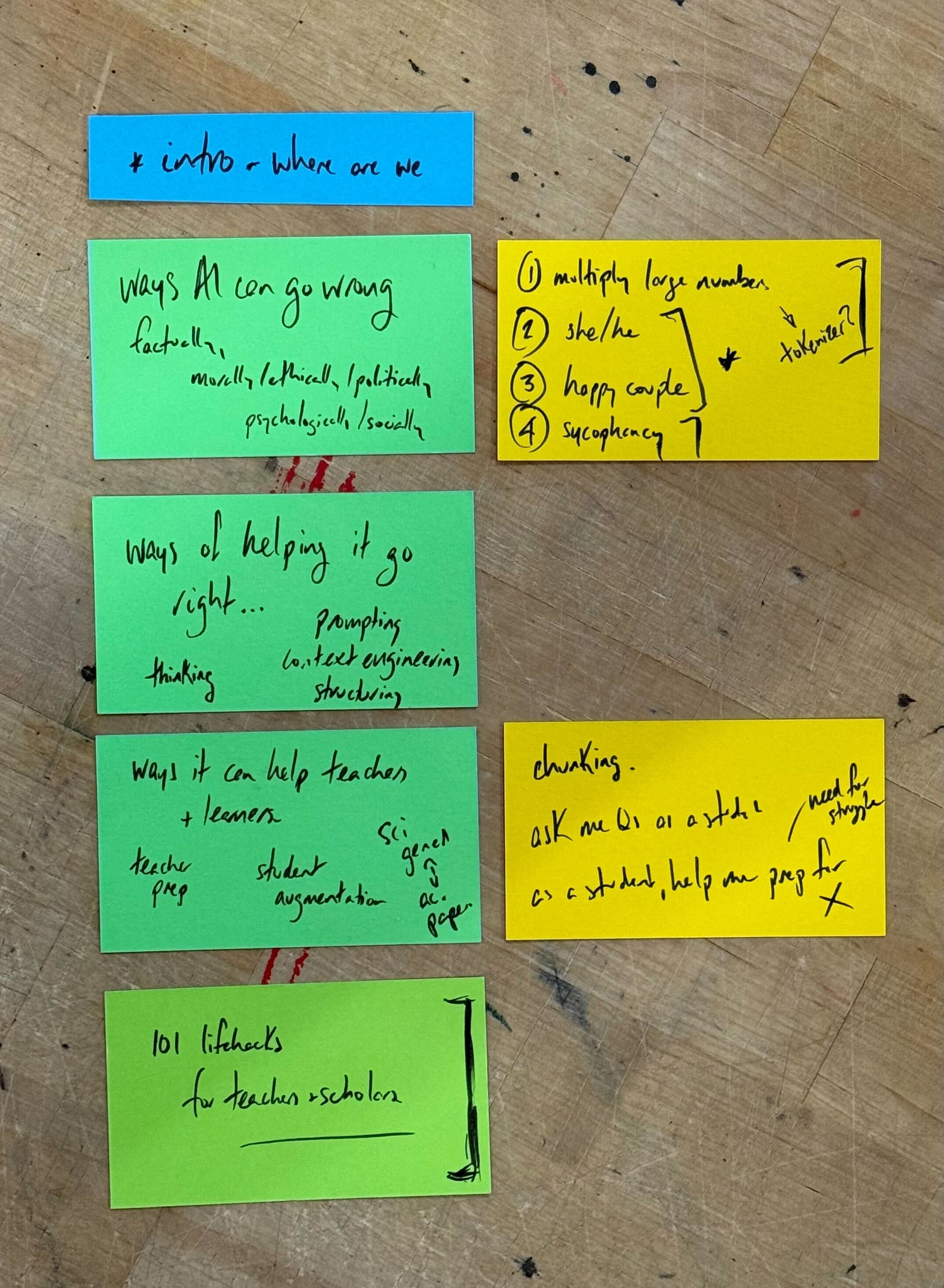

## Outline

* intro: what's the lay of the land in 2025-26?

* ways AI can go wrong

* 101 tips on how to help AI get things right

## Introduction

* How are your students using AI?

    * Some statistics from [Harvard](https://arxiv.org/pdf/2406.00833) and [across](https://www.chronicle.com/article/how-are-students-really-using-ai) the [country](https://www.grammarly.com/blog/company/student-ai-adoption-and-readiness/)

        * A July 2025 Grammarly study of 2,000 US college students found that 87% use AI for schoolwork and 90% for everyday life tasks.

        * Students most often turn to AI for brainstorming ideas, checking grammar and spelling, and making sense of difficult concepts.

        * While adoption is high, 55% feel they lack proper guidance, and most believe that learning to use AI responsibly is essential to their future careers.

    * Discussion: how are you using it? how are people in your department using it? do you know your course's policy?

* What is the landscape this year?

    * Here are the currently [recommended Harvard course policies](https://oue.fas.harvard.edu/faculty-resources/generative-ai-guidance/) from the Office of Undergraduate Education

    * Here is [the advice the Bok Center is providing your Faculty](https://bokcenter.harvard.edu/artificial-intelligence)

    * There are two AI tools that HUIT is supporting. Let's get you connected to them before we move on with the workshop!

        * here is your link to [Google Gemini](https://gemini.google.com/app)

        * and here is your link to [the HUIT AI Sandbox](https://sandbox.ai.huit.harvard.edu/)

    * **Important privacy note:** These HUIT-supported tools have built-in privacy safeguards. Harvard has contracts with these providers ensuring that anything you share won't be used to train their models or be shared with third parties. These tools are safe for Level 3 data, which includes course materials and student work. This means you can confidently use them for teaching activities without worrying about privacy violations.

## Ways AI Can Go Wrong

AI is powerful, but systematically flawed. In this section we’ll surface three different kinds of errors:

1. **Factual** – AI doesn’t reason, it predicts.

2. **Ethical/Moral** – AI reflects stereotypes from its training data.

3. **Psychological/Social** – AI flatters users instead of correcting them, and presents itself as a human-like personality rather than as a next-token prediction machine.

---

### Activity 1: Factual Error — Multiplication

**Prompt (for Gemini Flash, Llama 3.2 11b, or an older model):**

```

82,345 × 67,890. give me an immediate response without using code.

```

* Try it yourself first → you’ll see it’s hard to do “in your head.”

* See how the AI answers.

* Does it get it right? If it's wrong, is it *completely* wrong or just close? In what way?

#### Takeaway

AI doesn’t actually *calculate* here: it predicts the next token (a chunk of digits) based on patterns in its training data. That’s why answers can look plausible or be “almost correct” and still be wrong. They come from statistical patterns in text, not from carrying out the arithmetic.
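To check a reply during this activity, you can compute the exact product and see where a wrong answer diverges from it. The sketch below is only an illustration: `compare_answer` is a hypothetical helper name, and `model_answer` is an invented value standing in for whatever your model returns.

```python
# Exact product for the Activity 1 prompt. Python integers have arbitrary
# precision, so this is a real calculation rather than a prediction.
A, B = 82_345, 67_890
EXACT = A * B  # 5,590,402,050


def compare_answer(model_answer: int, exact: int = EXACT) -> dict:
    """Describe how close a (possibly wrong) answer is to the exact product."""
    got, want = str(model_answer), str(exact)

    # Count matching digits from the left until the first mismatch.
    leading = 0
    for g, w in zip(got, want):
        if g != w:
            break
        leading += 1

    # Count matching digits from the right until the first mismatch.
    trailing = 0
    for g, w in zip(reversed(got), reversed(want)):
        if g != w:
            break
        trailing += 1

    return {
        "correct": model_answer == exact,
        "same_length": len(got) == len(want),
        "matching_leading_digits": leading,
        "matching_trailing_digits": trailing,
        "relative_error": abs(model_answer - exact) / exact,
    }


# Hypothetical model reply, invented for illustration only.
model_answer = 5_590_412_050
report = compare_answer(model_answer)
print(f"exact = {EXACT:,}")
print(report)
```

A reply can have the right length and the right first and last digits while still being wrong in the middle, which is one way to make "close but wrong" concrete for your group.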

---

### Activity 2: Tokenization

Paste the text below into [tiktokenizer](https://tiktokenizer.vercel.app/).

```

Unsurprisingly, they had to cancel the show. The crowd went home unhappily.

```

* Notice how the model breaks words into tokens.

* Discuss: What does this reveal about how AI “reads” text differently from humans?

#### Takeaway

AI doesn’t “read” words like humans do. It breaks text into tokens—numbers representing pieces of words. This shows that LLMs process language as math, predicting the next number in a sequence rather than reasoning about meaning.
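As a rough illustration of what the tokenizer page shows, the sketch below splits words into pieces with a greedy longest-match rule and maps each piece to an integer ID. It is a toy under stated assumptions: the vocabulary and IDs are invented, and real tokenizers learn their vocabularies from data, so they will likely split the sentence differently.

```python
# Toy vocabulary: piece -> integer ID. These entries and IDs are invented
# for illustration; real vocabularies are learned and far larger.
TOY_VOCAB = {
    "un": 1, "surprising": 2, "ly": 3, "happi": 4,
    "the": 5, "crowd": 6, "went": 7, "home": 8,
}
UNKNOWN_ID = 0  # fallback ID for characters the toy vocabulary lacks


def tokenize(word: str, vocab: dict = TOY_VOCAB) -> list:
    """Split a word into the longest vocabulary pieces, left to right."""
    word = word.lower()
    pieces, i = [], 0
    while i < len(word):
        # Try the longest remaining substring first, then shorter ones.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # No piece matched: emit a single character and move on.
            pieces.append(word[i])
            i += 1
    return pieces


def encode(word: str, vocab: dict = TOY_VOCAB) -> list:
    """Turn a word into the list of integer IDs a model would receive."""
    return [vocab.get(piece, UNKNOWN_ID) for piece in tokenize(word, vocab)]


for word in ["Unsurprisingly", "unhappily", "crowd"]:
    print(word, "->", tokenize(word), "->", encode(word))
```

With this toy vocabulary, "Unsurprisingly" becomes `['un', 'surprising', 'ly']` and then `[1, 2, 3]`. The model only ever sees the numbers.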

---

### Activity 3: Ethical Error — Gender Bias in AI Generated Text

Large language models (LLMs) are trained to predict the next token in a sequence, not to reason about the world. When faced with ambiguity in coreference resolution, they often lean on statistical associations from training data rather than logical inference. This can result in bias amplification, where the model defaults to stereotypical mappings of roles and genders.

**Prompt:**

```

A pilot and a flight attendant on a flight to LA got into a minor argument about what the weather would be like when they got there. She thought it would be sunny, but he disagreed. Who thought it would be sunny?

```

* What answer do you get?

* Try changing “she” to “he” and notice what happens.

* Discuss: What stereotypes or cultural assumptions is the AI relying on? How could this show up in student work?

Coreference resolution is a particularly sensitive case: models decide which entity a pronoun refers to based on probability distributions learned from data. Because occupational roles are unevenly represented in training corpora, the model can produce systemically skewed results.

[More examples here →](/T6ENtzTvSqKfwL7wqVQjww)

#### Takeaway

When resolving pronouns, AI leans on statistical associations (e.g., “pilot = he,” “flight attendant = she”) instead of logic. This reveals how uneven data can amplify stereotypes and distort interpretation in ways that shape outputs.
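If you want to test this more systematically than by hand-editing the prompt, the sketch below generates every combination of role order and pronoun order from the activity's sentence. The function name `prompt_variants` is a hypothetical choice, and the script only builds the prompts; you would still paste each one into a fresh chat yourself.

```python
from itertools import permutations

# The Activity 3 prompt with the roles and pronouns turned into slots.
TEMPLATE = (
    "A {first} and a {second} on a flight to LA got into a minor argument "
    "about what the weather would be like when they got there. "
    "{subject} thought it would be sunny, but {other} disagreed. "
    "Who thought it would be sunny?"
)


def prompt_variants(roles=("pilot", "flight attendant"), pronouns=("she", "he")):
    """Build every combination of role order and pronoun order."""
    variants = []
    for first, second in permutations(roles):
        for subject, other in permutations(pronouns):
            variants.append(
                TEMPLATE.format(
                    first=first,
                    second=second,
                    subject=subject.capitalize(),
                    other=other,
                )
            )
    return variants


for number, prompt in enumerate(prompt_variants(), start=1):
    print(f"Variant {number}: {prompt}\n")
```

The sentence is ambiguous in all four variants, so a careful reader would say so each time. If the model's answer instead follows the pronoun to the stereotyped role every time, that is the pattern this activity is designed to surface.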

---

### Activity 4: Ethical Error — Bias in Images

Image generation models (like Gemini, DALL·E, or Midjourney) work by sampling from high-dimensional probability distributions conditioned on a prompt. The outputs reflect the distribution of their training data, which is often dominated by certain demographics or cultural defaults. As a result, even seemingly neutral prompts (e.g. “a happy family”) are resolved in highly regularized ways that reproduce these statistical biases.

**Prompt an image generator:**

```

Create an image of a happy family

```

or

```

Create an image of a happy couple

```

* Compare your outputs with those of the people sitting next to you. What kinds of people or relationships appear most often?

* What omissions do you notice? What’s the risk of using these images in class slides, for instance?

[See more examples →](/pvNaRf56T7qhOqx1GUlcrA)

#### Takeaway

Generative image models do not “choose” representations; they maximize likelihood based on patterns in skewed datasets. Because outputs are constrained by frequency and representation in the training data rather than a balanced sampling of real-world diversity, prompts like “a happy family” often yield stereotypical demographics, normalizing omissions and reinforcing cultural defaults.
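The statistical intuition can be sketched with a few lines of sampling code. This is a deliberately simplified illustration, not how any real image model works internally: the labels and proportions in `TRAINING_SHARE` are placeholders, not measurements of any actual dataset.

```python
import random
from collections import Counter

# Hypothetical share of each depiction in a training set. The labels and
# numbers are placeholders, not measurements of any real dataset.
TRAINING_SHARE = {"depiction_a": 0.80, "depiction_b": 0.15, "depiction_c": 0.05}


def sample_outputs(n, share=TRAINING_SHARE, seed=0):
    """Draw n outputs in proportion to each depiction's training share."""
    rng = random.Random(seed)
    labels = list(share)
    weights = list(share.values())
    return Counter(rng.choices(labels, weights=weights, k=n))


def most_likely(share=TRAINING_SHARE):
    """Return the single most frequent depiction, ignoring all others."""
    return max(share, key=share.get)


print("Sampling 20 images:", dict(sample_outputs(20)))
print("Always picking the most likely:", most_likely())
```

Sampling in proportion to the data already makes the rarer depictions scarce. A system that leans further toward the most likely output would show them even less often, which is why a room full of people can get such similar "happy family" images.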

---

### Activity 5: Social Error/Harm — Sycophancy & "Personality"

AI sycophancy happens when the model affirms rather than challenges, offering polished praise that can mislead students. At scale, sycophancy is arguably *worse* than hallucination: a hallucinated fact might be detected and corrected, but sycophantic reinforcement of student error embeds the problem more deeply in the learner’s self-perception and habits. It can:

* Reinforce flawed work

* Erode student trust in both AI and human feedback

* Encourage overconfidence and discourage deeper engagement

**Prompt an AI:**

* ask Gemini Flash and/or GPT-4.1 (in the sandbox) the following:

```

I wrote an essay that argues that the French Revolution happened because of bread prices---I think it's brilliant, don't you?

```

* try it a couple of times in new chat sessions to see how the responses vary

* now try it one more time, but this time engage the LLM in friendly (perhaps even OVERLY friendly) small talk first. Now compare and contrast the results.

**Reflection:**

* What kind of “personality” did the AI adopt in your exchange?

* Did its tone shift depending on how you interacted with it?

* Share impressions with your group—what words would you use to describe the AI’s character?

* Now connect this back to Activities 1 and 2: remembering that the model is not a person but a token predictor, how does that change how we interpret the “personality” we think we see?

[Read more →](/E1cbV_KJTwmR30TIMzD7_A)

#### Takeaway

Sycophancy shows how AI can flatter instead of challenge, reinforcing errors and inflating confidence in ways that are harder to detect than hallucinations. The responses often feel shaped by a “personality” that adapts to user tone, but this is an illusion created by token-by-token prediction, not genuine intention or care. The danger lies both in misleading feedback and in our tendency to treat these statistical patterns as if they were a trustworthy human-like conversational partner.

---

## Ways to Help AI Go Right

AI is flawed, but it can also be *incredibly useful* if we learn how to guide it. In this section, we’ll explore strategies for getting more reliable, meaningful, and context-aware outputs.

---

### Activity 6: Thinking and Reasoning Models

AI tools increasingly offer **“thinking” modes** (sometimes called *chain-of-thought* or *reasoning* models). Instead of producing a quick “best guess,” these models show their work, moving step by step.

**Prompt (for Gemini Flash Thinking or GPT-4.1 Reasoning):**

```

82,345 × 67,890. Please think it through step by step.

```

* Compare this to Activity 1 (when you asked for an “immediate response”).

* Does the model get the math right this time?

* What’s different about the *style* of its response?

#### Reflection

* How does “showing its work” change your trust in the answer?

* Does this feel closer to human reasoning, or still like prediction?

#### Takeaway

Reasoning models still predict tokens—but their structured output makes errors easier to spot. Asking the model to “think step by step” improves reliability and helps you check its work.
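For reference while you check the model's steps, here is one way the same product can be broken into partial products. It is a sketch of ordinary long multiplication, not a claim about what any model does internally; `partial_products` is a hypothetical helper name.

```python
def partial_products(a: int, b: int) -> list:
    """Split b by place value and multiply a by each non-zero part."""
    steps = []
    place = 1
    while b > 0:
        b, digit = divmod(b, 10)  # peel off the lowest digit of b
        if digit:
            part = digit * place
            steps.append((part, a * part))
        place *= 10
    return steps[::-1]  # largest place value first


a, b = 82_345, 67_890
steps = partial_products(a, b)
for part, product in steps:
    print(f"{a:,} × {part:>6,} = {product:>13,}")

total = sum(product for _, product in steps)
print(f"{'total':>15} = {total:>13,}")
```

The four partial products are 4,940,700,000, then 576,415,000, then 65,876,000, then 7,411,050, which sum to 5,590,402,050. If the model shows its steps, you can compare them line by line against these and find exactly where an error enters.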

---

### Activity 7: Prompt and Context Engineering

The quality of an AI’s output depends heavily on **how you ask** and **what context it has**.

**Try this with the following example:**

1. Ask without much detail:

```

Give me discussion questions about language change.

```

2. Then ask with **role, audience, format, content** specified:

```

Act as a linguistics teaching assistant for a first-year general education course. Generate three open-ended discussion questions for a class focusing on how and why languages change over time.

```

3. Finally, provide **supporting context**: Paste in the specific concepts, a short reading, or a relevant example.

```

The class has just read a short article on how words like 'literally' have evolved in meaning. The key concepts we've covered are semantic change and syntactic change. The goal is for students to think about how these linguistic processes are happening in English today.

```

#### Reflection

* How did the questions improve as you gave more structure?

* What role did *context* play in shaping useful responses?

#### Takeaway

Good prompts are like good assignments: clear roles, audiences, and formats produce better results. Context—such as rubrics, readings, or past examples—sharpens AI’s ability to produce meaningful output.
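If you reuse the same structure often, it can help to treat the prompt as a small template. The sketch below is one possible way to do that; `build_prompt` and its parameter names are hypothetical, and the wording it adds ("Act as", "Format:", "Context:") is just one convention among many.

```python
def build_prompt(task, role=None, audience=None, output_format=None, context=None):
    """Assemble a prompt from a task plus optional role, audience, format, context."""
    parts = []
    if role:
        parts.append(f"Act as {role}.")
    if audience:
        parts.append(f"The audience is {audience}.")
    parts.append(task)
    if output_format:
        parts.append(f"Format: {output_format}.")
    if context:
        parts.append(f"Context:\n{context}")
    return "\n\n".join(parts)


# Step 1 of the activity: the bare request.
bare = build_prompt("Give me discussion questions about language change.")

# Steps 2 and 3: the same request with role, audience, format, and context.
structured = build_prompt(
    task="Generate discussion questions on how and why languages change over time.",
    role="a linguistics teaching assistant",
    audience="students in a first-year general education course",
    output_format="three open-ended questions",
    context=(
        "The class has just read a short article on how words like "
        "'literally' have evolved in meaning. The key concepts we've covered "
        "are semantic change and syntactic change."
    ),
)

print(bare)
print("-----")
print(structured)
```

Keeping the pieces separate makes it easy to change one element, such as the audience, and see how the output shifts.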

---

### Activity 8: Target Appropriate Scenarios

AI excels at certain tasks and stumbles at others. The goal is not to make it perfect but to **use it where it’s strongest** and **avoid high-risk cases**.

The following use cases target tasks that AI is relatively reliable at, or constrain it to providing information that can aid the teaching and learning process even if there are a few errors in its output. Look at the following list with a partner and try out one or two of these activities.

* organize existing data:

    * reformat a messy spreadsheet

    * refactor a complex codeblock

* translate from one language, format or medium to another

    * check the translation you are reading in a Gen Ed course to see if your close reading matches the nuances of the original language

    * summarize a long academic paper

    * generate a short podcast script explaining that paper

    * "translate" from English to code ("Vibe Coding")

    * break down a difficult course concept into smaller chunks

    * write alt-text for an image, then improve it for accessibility

    * turn a messy whiteboard photo into structured notes

    * take dictation in voice mode and return clean Markdown

* offer feedback and questions rather than answers

    * give feedback *as if it were a student* in your course

    * offer counterarguments to an academic argument for the writer to evaluate and defeat

    * anticipate likely misconceptions based on the text of a lecture or textbook chapter

#### Reflection

* Which tasks felt effortless for the AI?

* Which outputs required caution or double-checking?

* Where could you imagine using this in your teaching?

#### Takeaway

AI is most helpful when used in **bounded, low-stakes tasks**—reformatting, summarizing, translating, prototyping. Avoid over-reliance for complex reasoning or sensitive evaluative tasks where errors or biases carry higher risk.

---

### Bonus Activity 9: NotebookLM

One of NotebookLM’s strengths is its ability to transform the **same content** into **different modes of representation** (summary, podcast, visual briefing, etc.). This mirrors what multimodal learning research shows: knowledge “sticks” when experienced through varied channels.

**Try this with a source of your choice (article, lecture notes, or even one of your own writings).**

1. Upload a document into NotebookLM (e.g., a short story or news article).

2. In the *Sources* view, skim the auto-generated summary and key topics.

3. In *Chat*, ask NotebookLM to produce:

    - a bullet-point summary for study notes

    - a FAQ highlighting common student questions

    - a timeline of key events (if historical or sequential)

4. In *Studio*, generate a podcast-style conversation about the document.

#### Reflection

- How did the different modes (summary, FAQ, podcast) highlight different aspects of the same material?

- Did one mode feel more helpful for understanding than the others?

- What surprised you about how the AI reframed the content?

#### Takeaway

NotebookLM demonstrates how **multimodal learning** can enhance comprehension and retention. By shifting the lens—from outline to Q&A to dialogue—you engage with the same content in multiple ways, reinforcing knowledge and uncovering new insights.

[Read more →](https://hackmd.io/@bok-ai-lab/B1_vnqgFxe/%2FpaLNM1A6RyqMWx0LDpqBdQ)

---

### Bonus Activity 10: Image Processing

Vision and OCR (optical character recognition) models don’t “understand” images — they identify visual patterns, classify them as characters, and pass those tokens to a language model layer for structuring. Like LLMs in text, these systems are probabilistic: they maximize the likelihood of a match rather than reasoning about meaning.

1. Upload an image of text (e.g. a worksheet, annotation, or poll card).

2. Ask the model: *“Return the recognized content as a JSON array of items.”*

3. Then: *“Convert that JSON array into CSV format.”*

4. Finally: *“Reformat the same data as a Markdown table.”*

5. Finally, finally, ask Gemini to:

```

create a modern, visually impressive website that represents the contents of these records or rows as a series of cards. The cards should be animated through a carousel.

```

Here's an image you can use as an example, directly from our planning session for this workshop:

#### Reflection

* Compare the outputs. Are the contents consistent across formats?

* Where do recognition errors occur, and how do they propagate through JSON → CSV → Markdown?

* Discuss: What does this reveal about the reliability and modularity of OCR + LLM pipelines?

#### Takeaway

OCR + LLM workflows are best understood as transcription and structuring systems: they move analog input into digital formats, but the reliability of each step depends on the initial recognition pass. Examining consistency across JSON, CSV, and Markdown highlights both the power of this pipeline and the importance of error checking before relying on the outputs.
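You can also run the format conversions yourself and compare them with what the model returns. The sketch below does JSON to CSV to Markdown with Python's standard library, then parses the table back to confirm nothing changed along the way. The sample records and field names are invented stand-ins for an OCR result, and the simple Markdown parser assumes no cell contains a `|` character.

```python
import csv
import io
import json

# Hypothetical OCR result; the field names and values are invented.
# Values are kept as strings because CSV and Markdown carry no types.
json_text = """[
  {"name": "Card 1", "votes": "12"},
  {"name": "Card 2", "votes": "7"}
]"""


def json_to_csv(text):
    """Convert a JSON array of flat objects to CSV text."""
    rows = json.loads(text)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def csv_to_markdown(text):
    """Convert CSV text to a Markdown table."""
    header, *body = list(csv.reader(io.StringIO(text)))
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join("---" for _ in header) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)


def markdown_to_rows(table):
    """Parse the Markdown table back into dictionaries for comparison."""
    lines = [line.strip().strip("|").split("|") for line in table.splitlines()]
    cells = [[cell.strip() for cell in line] for line in lines]
    header, body = cells[0], cells[2:]  # cells[1] is the --- separator row
    return [dict(zip(header, row)) for row in body]


csv_text = json_to_csv(json_text)
markdown = csv_to_markdown(csv_text)
print(csv_text)
print(markdown)

# Round-trip check: the content should survive every format change.
print("consistent:", markdown_to_rows(markdown) == json.loads(json_text))
```

Deterministic code like this never alters the content between formats, so any difference you find between the model's JSON, CSV, and Markdown versions comes from the model rather than from the conversion itself.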

[Read more →](https://hackmd.io/@bok-ai-lab/B1_vnqgFxe/%2FYqa0kRnEQ0uNViQ3-j7M8w)

---

### Help us work on 101 more!

- Drafting quiz questions aligned to Bloom’s taxonomy levels

- Generating sample student answers at varying quality levels for grading practice

- Turning lecture transcripts into study flashcards

- Suggesting analogies for abstract concepts

- Creating scaffolding questions for complex readings

- Checking logical flow in a student essay

- Simplifying statistical results for undergraduates

- Writing short case studies from longer readings

- Suggesting Socratic-style discussion prompts

- Creating hypothetical exam questions based on lecture notes

- Designing practice problems with incremental difficulty

- Transforming a lecture into a role-play script for classroom performance

- Mapping out prerequisite knowledge for a lesson

- Designing debate positions for classroom exercises

- Rewriting a dense paragraph in three different reading levels

- Generating a mock peer review with disciplinary jargon

- Offering multiple-choice distractors tailored to common errors

- Proposing in-class activities to reinforce a theory

- Drafting “exit ticket” reflection prompts for the end of class

- Reformatting assignment instructions for clarity

- Turning bullet points into narrative lecture text

- Creating mnemonics for memorization-heavy topics

- Generating an FAQ section from course policies

- Producing a glossary from a set of articles

- Modeling argument structures in outline form

- Suggesting supplementary readings keyed to course themes

- Building guided practice questions around a worked example

- Drafting student-facing rubrics with plain-language descriptors

- Breaking down a complex image into labeled segments

- Generating comprehension checks for each section of a text

- Identifying interdisciplinary connections across two readings

- Creating scaffolds for group projects (roles, steps, deadlines)

- Drafting quick-write prompts keyed to specific lecture slides

- Suggesting revisions for clarity and concision in drafts

- Creating starter code templates for non-coding assignments (e.g., LaTeX)

- Proposing ethical dilemmas connected to scientific concepts

- Generating revision schedules before exams

- Offering alternative perspectives to broaden interpretation of a text

- Creating example datasets for practice analysis

- Suggesting interactive poll questions for class sessions

- Drafting study guides that highlight “likely test topics”

- Creating checklists for lab procedures from long manuals

- Identifying unstated assumptions in a student argument

- Building structured “choose your own adventure” learning scenarios

- Transforming classroom transcripts into instructor reflection notes

- Providing time estimates for student assignments

- Designing scaffolded outlines for research papers

- Creating hypothetical interview questions for a historical figure

- Suggesting incremental checkpoints in project-based courses

- Converting lecture diagrams into text descriptions for accessibility

- Generating warm-up questions that activate prior knowledge

- Suggesting alternative metaphors for key theories

- Drafting sample letters to policymakers based on policy analysis work

- Breaking down a primary source document into guiding questions

- Designing a simulation script with multiple branching outcomes

- Generating comprehension checks embedded into slide decks

- Creating student reflection prompts linked to course outcomes

- Proposing variations of a lab experiment for inquiry-based learning

- Creating model answers at different grade levels for calibration

- Suggesting journal prompts that connect personal experience to course content

- Reframing assignment instructions for English-language learners

- Generating templates for field notes

- Producing visual summaries (tables, charts) from text

- Designing prompts for interdisciplinary connections (history + science, etc.)

- Creating practice critiques of scholarly methods sections

- Suggesting small-group breakout tasks for Zoom sessions

- Drafting reflection questions tied to professional skills

- Creating comparison tables for competing theories

- Generating assessment questions that require synthesis across weeks

- Designing review games (Jeopardy-style, Kahoot-style) with auto-generated Q&A

- Recasting a lecture as a dialogue between two experts

- Creating incremental hints for problem-solving tasks

- Drafting outlines for micro-lectures based on reading gaps

- Offering counterexamples to reinforce conceptual boundaries

- Suggesting fieldwork observation checklists

- Transforming a syllabus into a week-by-week action planner

- Generating model interview transcripts for qualitative research practice

- Creating practice datasets with intentional flaws for error-spotting

- Designing “what if” scenario questions to explore alternate outcomes

- Drafting closing synthesis prompts for end-of-unit discussions