# Stage 2: Canvas / Artifacts Across LLMs

The chat box is where ideas start; canvases are where those ideas persist. They’re half notebook, half mini-IDE, letting you keep code and outputs side by side while you poke, prod, and iterate.

**Why this matters:** vibe coding isn’t just “get it working once.” It’s about staying in the flow as you add features, fix surprising errors, and keep the context alive.

## Tools we’ll use

* **Claude Artifacts** — persistent code/output blocks attached to a chat thread.

* **ChatGPT Canvas (Labs/Custom GPTs)** — edit + live preview in one place, easy for HTML/CSS/JS and small Python demos.

* **Gemini w/ Apps & Colab** — quick handoff to Sheets/Docs; Colab for runnable notebooks when you outgrow the canvas.

## Gemini Canvas & Google Colab

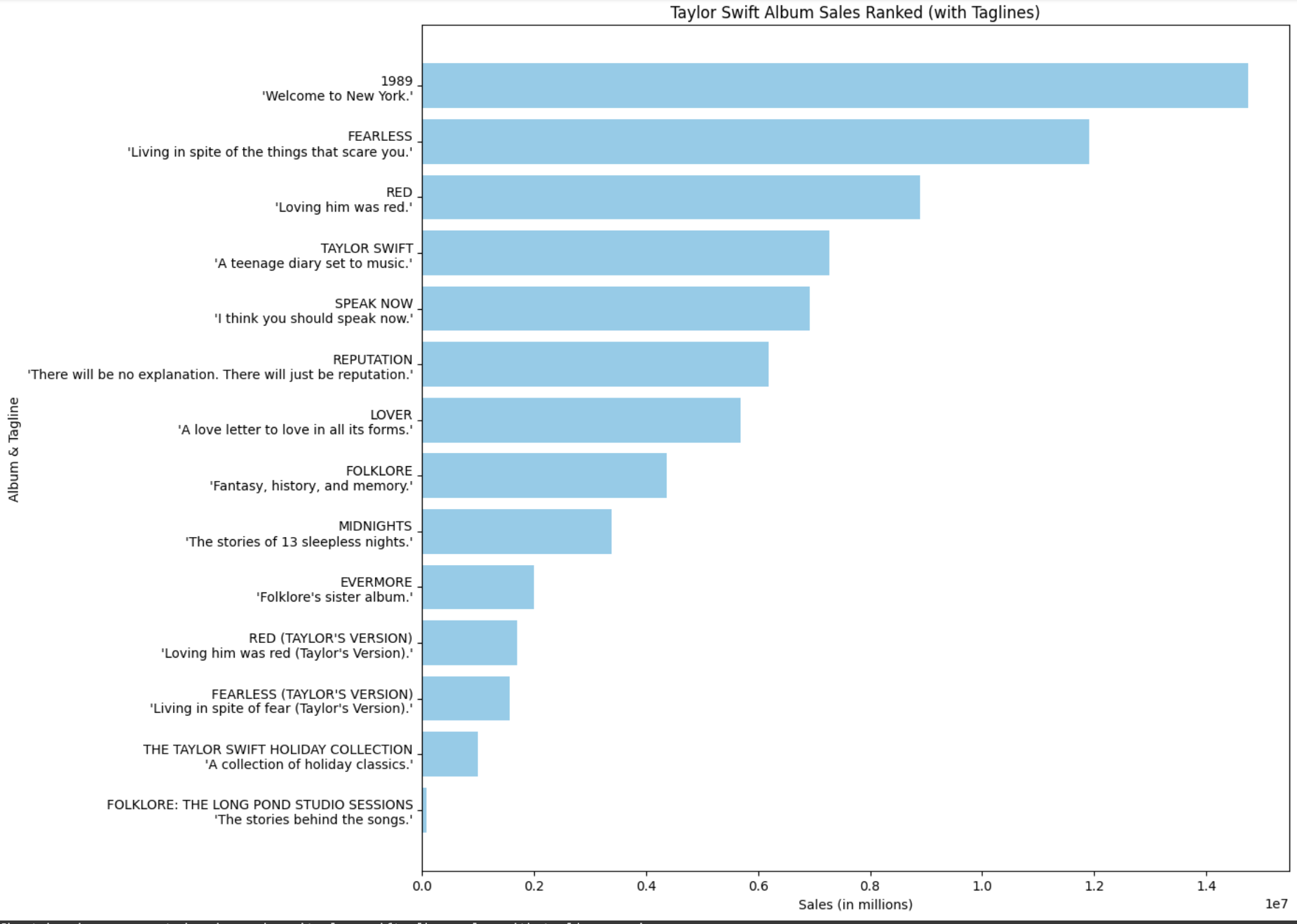

Head over and copy some [album sales data](https://bestsellingalbums.org/artist/12748), then paste it into Gemini. Ask Gemini to store that data in a variable and write a Python script that visualizes it with matplotlib. Then click the Canvas tool button and ask Gemini to open the script in Canvas.
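For a sense of what the canvas might end up holding, here is a minimal sketch of such a script. It assumes the pasted data has been reduced to album titles and sales figures; the titles and numbers in `album_sales` are hypothetical placeholders (not real sales data), and `plot_album_sales` is a name chosen for illustration, not something Gemini is guaranteed to produce.

```python
# A minimal sketch of a script Gemini might produce for this step.
# ASSUMPTION: the titles and numbers in album_sales are hypothetical
# placeholders, not real figures; paste your own rows in their place.
import matplotlib

# Headless backend so the script runs anywhere and just saves a PNG.
# In a notebook (e.g. after "Export to Colab"), drop this line to see the chart inline.
matplotlib.use("Agg")
import matplotlib.pyplot as plt

# Hypothetical placeholder data held in a variable: album title -> sales figure
album_sales = {
    "Album A": 12.5,
    "Album B": 9.0,
    "Album C": 4.2,
}


def plot_album_sales(sales, out_path="album_sales.png"):
    """Draw a horizontal bar chart of sales and save it to out_path."""
    # Sort ascending: barh draws the first bar at the bottom, so the
    # best seller ends up at the top of the chart.
    items = sorted(sales.items(), key=lambda kv: kv[1])
    titles = [title for title, _ in items]
    values = [value for _, value in items]

    fig, ax = plt.subplots(figsize=(8, 4))
    ax.barh(titles, values, color="steelblue")
    ax.set_xlabel("Sales")
    ax.set_title("Album sales")
    fig.tight_layout()
    fig.savefig(out_path)
    return fig, ax


if __name__ == "__main__":
    plot_album_sales(album_sales)
```

Sorting before plotting is a design choice that makes the ranking readable at a glance, and it is exactly the kind of small tweak that is easy to request and inspect in the canvas.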

Decide on something you'd like to change (say, having Gemini search the web and give each album a tagline), then ask for it. Note how this UI differs from the regular chat interface.

Discuss.

Also note that when you're working in Python, you'll see an "Export to Colab" button. Give it a try!

## Claude, ChatGPT and React

Grab the same data and head to either ChatGPT (Canvas again) or Claude (Artifacts), and this time ask for a **React** component that provides an interactive view of the data. Ask for it to be slick and modern. Compare and contrast the result with the Python visualization.

### Why React is a good standard for vibe coding

React is a **JavaScript library for building user interfaces**. It lets you describe what the UI should look like using small, reusable components, and it takes care of updating the browser efficiently when data changes.

* **Familiarity:** It’s one of the most widely taught and used UI libraries, so the ecosystem (docs, tutorials, Stack Overflow answers) is massive, and LLMs have seen plenty of React code.

* **Declarative style:** You describe *what you want* the interface to be, not how to update the DOM step by step, which maps naturally onto how you phrase a prompt.

* **Composability:** Components can be tiny, remixable “blocks” — easy for an LLM to generate, extend, and refactor in response to your prompts.

* **Immediate feedback:** You can see results quickly in the browser, making iteration fast and tangible.

* **Interoperability:** Plays well with other modern tools (Next.js, Vite, Tailwind), giving vibe coders a standard “canvas” to build anything from quick prototypes to production apps.

👉 In short: React is a natural fit for vibe coding because **natural language intent** (“I want a button that turns red when clicked”) translates directly into **small, declarative components** that an LLM can generate and remix endlessly.

## Key Takeaway

Canvases are “chat-plus-memory.” They keep your build in view while you keep the conversation going.