# ai-lab-workshop-20251008-writeup

## one paragraph writeup

At last week’s AI Lab Experimental Workshop—Media Production with AI: Pre-Production—faculty, staff, and students explored how tools like ChatGPT, Gemini, and Midjourney can accelerate the earliest stages of creative and scholarly work. Participants shared real examples from their practice—using AI for storyboarding, lookbooks, research parsing, scheduling, and asset management—then experimented hands-on generating visuals and interview prompts from academic texts. The conversation moved easily between coding, design, and philosophy, surfacing questions about authorship, alignment, and creativity in an AI-saturated workflow. Next up: Media Production with AI: On Set (October 22), where we’ll test real-time transcription, logging, and augmented prompters in the studio.

## multiparagraph writeup

## Media Production with AI: Pre-Production (Session 1 of 3)

**AI Lab Experimental Workshop Series**

Harvard Bok Center · 50 Church Street · October 8, 2025

The second session in our *Experimental Workshop Series* brought together faculty, staff, and students working across media production, data visualization, and technical design to explore how AI can augment the earliest stages of creative and scholarly work.

This week’s focus—**Media Production with AI: Pre-Production**—centered on how tools like ChatGPT, Gemini, and Midjourney can support planning, research, and visualization before a single camera rolls.

---

### 🧠 Brainstorming: How We Already Use AI

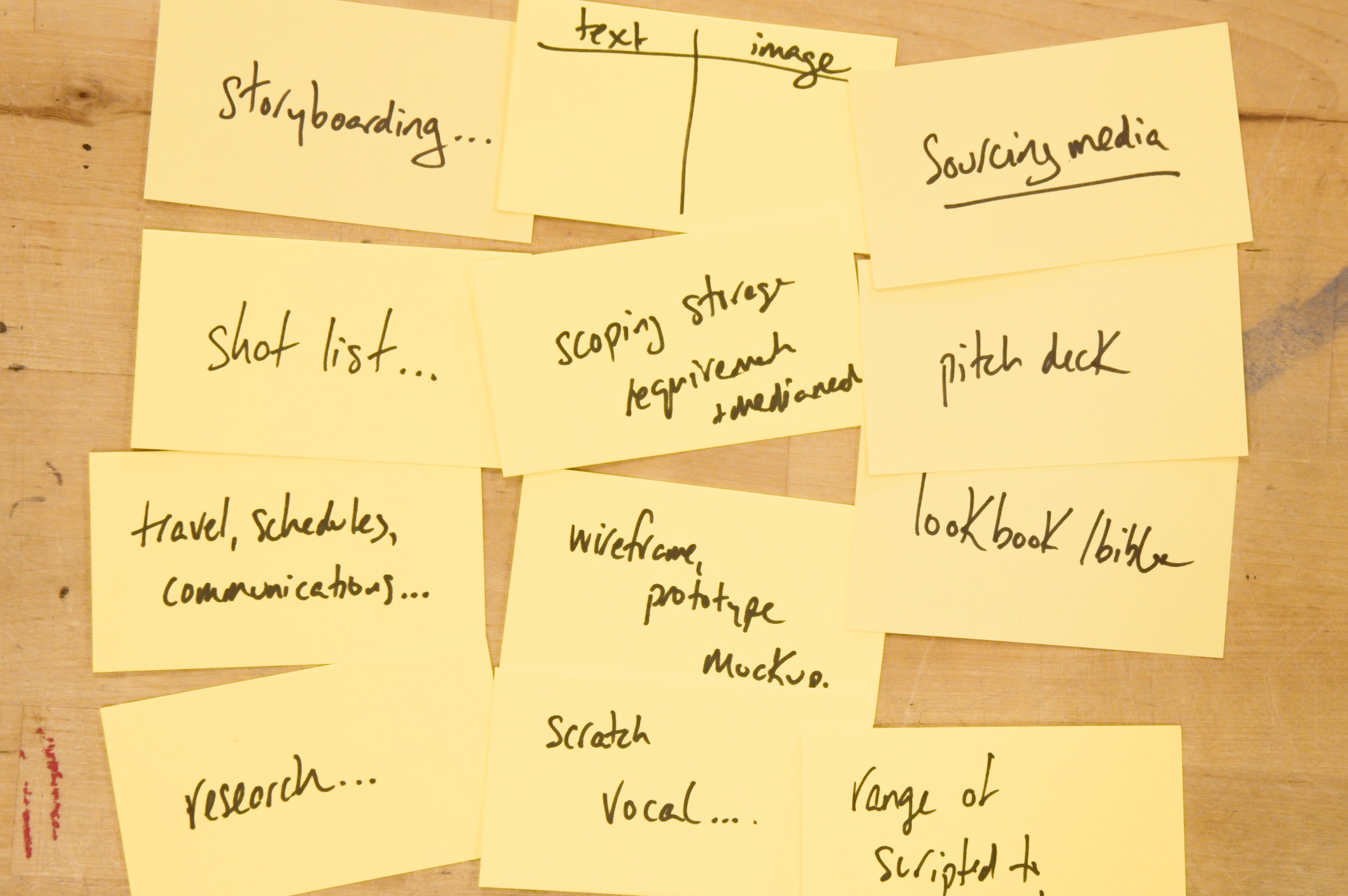

We began by mapping out participants’ current uses of AI in their creative and technical workflows. On index cards, people shared how they’re already experimenting with:

* **Coding and data visualization** — generating code snippets, figure templates, and web prototypes.

* **Image generation** — for textures, abstract assets, and motion-graphics backdrops.

* **Lookbooks, show bibles, and pitch decks** — using AI images to define tone and visual direction.

* **Storyboarding and shot lists** — pairing text descriptions with images in spreadsheet or two-column formats, where AI output serves as a rough placeholder, much as scratch vocals do in music production.

* **Media sourcing and asset inventories** — assembling visual or audio references before production begins.

* **Research and reading** — parsing books or articles (including faculty dissertations) to generate interview questions and project framing.

* **Logistical planning** — storage estimates, scheduling, travel coordination, and communication templates.

* **Audio tasks** — transcription, cleanup, and denoising filters.

* **Spreadsheet automation** — formula generation and data organization.

* **Creative coding** — data-driven graphics and web-based visualization tools.

* **AI already inside professional tools** — especially Adobe Creative Cloud features that quietly incorporate machine learning.
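
To make the storage-estimate item above concrete, here is a minimal sketch of the arithmetic an assistant might be asked to produce. The function name, the parameters, and the 20% safety margin are illustrative assumptions, not figures or tools from the workshop.

```python
def estimate_storage_gb(bitrate_mbps: float, minutes: float,
                        cameras: int = 1, safety_margin: float = 0.2) -> float:
    """Rough footage size in decimal gigabytes (1 GB = 1000 MB).

    bitrate_mbps  -- recording bitrate in megabits per second (assumed constant)
    minutes       -- recording time per camera
    cameras       -- number of cameras rolling for that whole time
    safety_margin -- extra headroom; 0.2 (20%) is an arbitrary placeholder
    """
    total_megabits = bitrate_mbps * minutes * 60 * cameras
    gigabytes = total_megabits / 8 / 1000  # megabits -> megabytes -> gigabytes
    return round(gigabytes * (1 + safety_margin), 1)


# Hypothetical shoot: two cameras at 100 Mb/s, one hour each.
print(estimate_storage_gb(100, 60, cameras=2))
```

Real codecs often record at variable bitrates, so a number like this is best treated as a planning floor rather than a guarantee.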

The conversation also surfaced distinctions between **scripted vs. unscripted** pre-production—how AI can help structure both fixed narratives and open-ended encounters such as interviews or lectures.

---

### 🎨 Experimentation: Storyboards, Prompts, and Research Assistants

After the group brainstorm, participants experimented hands-on in the **Gemini** and **Midjourney** playgrounds—generating visual storyboards and exploring how text models could assist in **developing prompts for image generation**.

In one exercise, we uploaded an **academic dissertation PDF** and used AI to prototype **interview questions**—illustrating how research workflows and media planning can converge. This bridged our academic context with creative production, showing how AI can support the interpretive and logistical work behind scholarly storytelling.
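
As a rough illustration of the dissertation exercise, the sketch below assembles a plain-text prompt from an excerpt. It is not the prompt used in the session. The function name, the wording, and the `max_chars` cutoff are all hypothetical, and the code deliberately stops short of calling any model API.

```python
def build_interview_prompt(excerpt: str, interviewee: str,
                           n_questions: int = 5, max_chars: int = 4000) -> str:
    """Assemble a prompt asking a text model to draft interview questions.

    The excerpt is stripped of surrounding whitespace and truncated to
    max_chars so the prompt stays a manageable size (an arbitrary limit).
    """
    trimmed = excerpt.strip()[:max_chars]
    return (
        f"You are helping a producer prepare an on-camera interview with {interviewee}.\n"
        f"Based only on the excerpt below, draft {n_questions} open-ended questions.\n"
        "For each question, note which passage prompted it.\n\n"
        f"EXCERPT:\n{trimmed}"
    )


# Placeholder text and name; paste the result into whichever chat tool you use.
print(build_interview_prompt("Chapter 2 argues that ...", "Dr. Example", n_questions=3))
```

Asking the model to point to the passage behind each question makes it easier to check the draft against the source before the interview.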

---

### 🧭 Reflections

The session closed with several shared insights:

* AI can take on *junior-editor* or *assistant-producer* tasks—string-outs, first cuts, or draft lists.

* In image generation, models often **default to symmetry**, rarely placing subjects off-center—reminding us that compositional accidents must now be deliberately reintroduced.

* Questions of **alignment**—whether a tool’s creators share the user’s goals—remain central, touching on privacy, intellectual property, and creative intent.

---

### 🔄 What’s Next

The next session in this three-part sequence, **Media Production with AI: On Set** (October 22), will extend these ideas into live studio environments, where we will experiment with real-time transcription, logging, and augmented prompters.

The AI Lab’s *Experimental Workshop Series* continues every Wednesday at 3:00pm in the Bok Center’s Learning Lab at 50 Church Street, Suite 374—a public prototyping space where Harvard’s faculty, students, and staff can explore, test, and rethink how AI might reshape scholarly and creative practice.