# Whisk: A practical shuffle-based SSLE protocol for Ethereum

## Introduction

We propose and specify Whisk, a privacy-preserving protocol for electing block proposers on the Ethereum beacon chain.

## Motivation

The beacon chain currently elects the next 32 block proposers at the beginning of each epoch. The results of this election are public and everyone gets to learn the identity of those future block proposers.

This information leak enables attackers to **launch DoS attacks** against each proposer *sequentially* in an attempt to disable Ethereum.

## Overview

This proposal attempts to plug the information leak that occurs during the block proposer election.

We suggest using a secret election protocol for electing block proposers. We want the election protocol to produce a **single** winner per slot and for the election results to be **secret** until winners announce themselves. Such a protocol is called a *Single Secret Leader Election* (SSLE) protocol.

Our SSLE protocol, Whisk, is a modified version of the `SSLE from DDH and shuffles` scheme from the paper ["Single Secret Leader Election" by Boneh et al.](https://eprint.iacr.org/2020/025.pdf). Our [zero-knowledge proving system](https://ethresear.ch/t/provable-single-secret-leader-election/7971) is an adaptation of the [Bayer-Groth shuffle argument](http://www0.cs.ucl.ac.uk/staff/J.Groth/MinimalShuffle.pdf).

This proposal is joint work with Justin Drake, Dankrad Feist, Gottfried Herold, Dmitry Khovratovich, Mary Maller and Mark Simkin.

### High-level protocol overview

Let's start with a ten thousand feet view of the protocol.

The beacon chain first randomly picks a set of election candidates. Then and for an entire day, block proposers continuously shuffle that candidate list thousands of times. After the shuffling is finished, we use the final order of the candidate list to determine the future block proposers for the following day. Due to all that shuffling, only the candidates themselves know which position they have in the final shuffled set.

For the shuffling process to work, we don't actually shuffle the validators themselves, but cryptographic randomizable commitments that correspond to them. Election winners can *open their commitments* to prove that they won the elections.

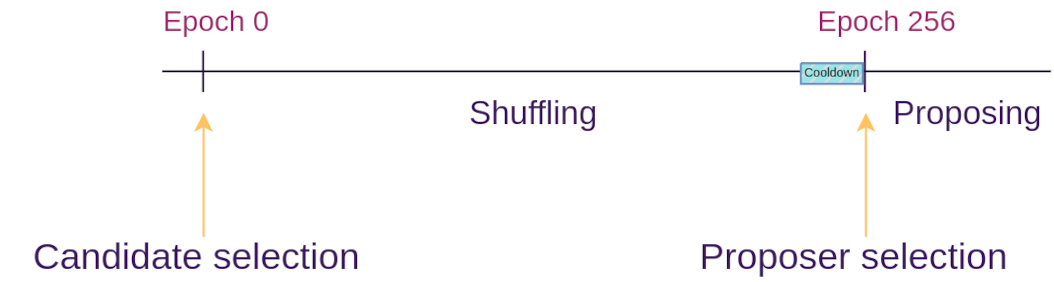

To achieve its goals, the protocol is split into events and phases as demonstrated by the figure below. We will give a high-level summary of each one in this section, and then dive into more details on the following sections:

* Bootstrapping event -- where the beacon chain forks and the protocol gets introduced

* Candidate selection event -- where we select a set of candidates from the entire set of validators

* Shuffling phase -- where the set of candidates gets shuffled and re-randomized

* Cooldown phase -- where we wait for RANDAO, our [randomness beacon](https://github.com/ethereum/annotated-spec/blob/master/phase0/beacon-chain.md#seeds), to collect enough entropy so that its output is unpredictable

* Proposer selection event -- where from the set of shuffled candidates we select the proposers for the next day

* Block proposal phase -- where the winners of the election propose new blocks

### Document overview

In the [following section](#Protocol) we will be diving into Whisk; specifying in detail the cryptography involved as well as the protocol that the validators and the beacon chain need to follow.

After that, we will perform a [security analysis](#Security-analysis) of Whisk, in an attempt to understand how it copes against certain types of attacks.

Following that we will [calculate the overhead](#Overhead-analysis) that Whisk imposes on Ethereum in terms of block and state size, as well as computational overhead.

We will then move into an analysis of [alternative approaches](#Related-work) to solve the problem of validator privacy and compare their trade-offs to Whisk.

We close this proposal with a [discussion section](#Discussion) which touches upon potential simplifications and optimizations that could be done to Whisk.

---

## Protocol

In this section, we go through the protocol in detail.

We start by introducing the cryptographic commitment scheme used. We proceed with specifying the candidate and proposer selection events, and then we do a deep dive into the shuffling algorithm used.

We have written a [feature-complete draft consensus implementation of Whisk](https://github.com/ethereum/consensus-specs/pull/2800). Throughout this section, we link to specific parts of the code where applicable to better guide readers through the protocol.

### Commitment scheme

For the shuffling to work, we need a commitment scheme that can be randomized by third parties such that the new version is unlinkable to any previous version, yet its owner can still track the re-randomized commitment as her own. Ideally, we should also be able to open commitments in a zero-knowledge fashion so that the same commitment can be opened multiple times. We also need Alice's commitment to be *bound to her identity*, so that only she can open her commitment.

We use the commitment scheme suggested by the SSLE paper where Alice commits to a random long-term secret $k$ using a tuple $(rG, krG)$ (called a *tracker* [in this proposal](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L88)). Bob can randomize Alice's tracker with a random secret $z$ by multiplying both elements of the tuple: $(zrG, zkrG)$. Finally, Alice can prove ownership of her randomized tracker (i.e. open it) by providing a *proof of knowledge of a discrete log* (DLOG NIZK) that proves knowledge of a $k$ such that $k(zrG) == zkrG$.

Finally, we achieve *identity binding* by having Alice provide a deterministic commitment $com(k) = kG$ when she registers her tracker. We store $com(k)$ in [Alice's validator record](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L97), and use it to check that [no two validators have used the same $k$](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L266). We also use it at registration and when opening the trackers to check that both the tracker and $com(k)$ use the same $k$ using a *discrete log equivalence proof* (DLEQ NIZK). Fr more details see the [*"Selling attacks"* section](#Selling-attacks) of this document and the `duplication attack` section in the SSLE paper.

### Protocol flow

Now let's dive deeper into Whisk to understand how candidates are selected and how proposing works. For this section, we will assume that all validators have registered *trackers*. We will tackle registration later in this document.

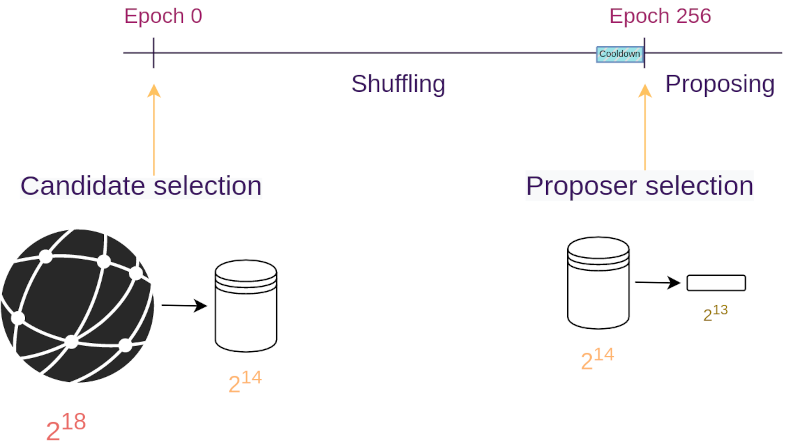

The protocol starts with the beacon chain using public randomness from RANDAO [to sample](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L109) 16,384 random trackers from the entire set of validators (currently around 250,000 validators). The beacon chain places those trackers into a *candidate list*.

After that and for an entire day (8,192 slots), block proposers *shuffle* and randomize the candidate list using private randomness. They also submit a zero-knowledge proof that the shuffling and randomization were performed honestly. During this shuffling phase, each shuffle only touches 128 trackers at a time; a limitation incurred by the ZKP performance and the bandwidth overhead of communicating the newly-shuffled list of trackers. The strategy used for shuffling will be detailed in the [next section](#Shuffling-phase).

After the shuffling phase is done, we use RANDAO to populate our *proposer list*; that is, to [select an ordered list](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L124) of the 8,192 winning trackers that represent the proposers of the next 8,192 slots (approximately a day). We [stop shuffling](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L251) one epoch before the *proposer selection* occurs, so that malicious shufflers can't shuffle based on the future result of the RANDAO (we call this epoch the *cooldown phase*).

Finally, for the entire next day (8,192 slots), we [sequentially map the trackers](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L174) on the proposer list to beacon chain slots. When Alice sees that her tracker corresponds to the current slot, she can open her tracker [using a DLEQ NIZK](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L156) and submit a valid *block proposal*.

This means that the *proposal phase* lasts for 8,192 slots and the same goes for the *shuffling phase*. This creates a day-long pipeline of Whisk runs, such that when the *proposal phase* ends, the *shuffling phase* has also finished and has prepared the 8,192 proposers for the next day.

### Shuffling phase

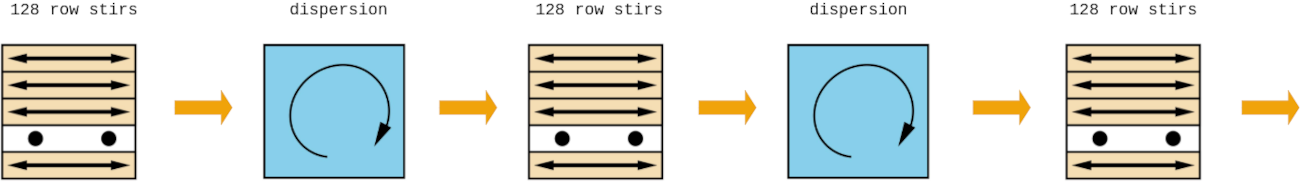

In the previous section, we glossed over the strategy used by validators to shuffle the candidate list (of size 16,384) with small shuffles (of size 128). Shuffling a big list using small shuffles (called *stirs* from now on) is not trivial, especially when a big portion of shufflers might be malicious or offline. We call our proposed algorithm **Feistelshuffle**.

##### Setup

Feistelshuffle lasts for the duration of the *shuffling phase* (8,192 slots). We [split time](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L242) into 64 rounds of 128 steps (i.e. slots) each.

We represent the set of candidates of size 16,384 as a 128x128 square *shuffling matrix* (where each cell contains a *number* between 0 and 16,383).

##### Algorithm

At every step of each round, a validator *stirs* a set of Whisk trackers [given by a row of the shuffling matrix](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L257) in sequential order (e.g. on the first step, the first row gets stirred). Each validator *stirs* by permuting and randomizing the Whisk trackers from the set.

After 128 steps, all rows of the shuffling matrix have been processed and that concludes a round.

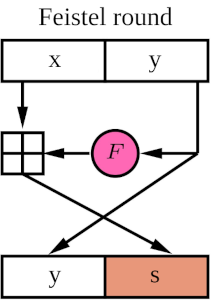

At the end of each round, we perform a *dispersion* transformation on the shuffling matrix. To perform the *dispersion*, we interpret every $(x,y)$ coordinate in the shuffling matrix as a plaintext and modify it using [a one-round Feistel mapping](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L210) that transforms every $(x,y)$ coordinate into another $(y,s)$ coordinate in a one-to-one fashion (see figure below).

The $F$ function is a bijective nonlinear mapping, which is $y\rightarrow y^3\bmod{128}$ in our case.

The *dispersion* transformations don't need to be performed on-chain. We can perform the needed rounds of Feistel encryption at each step of Feistelshuffle when [computing the indices that should get stired](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L235).

See the [*Feistelshuffle analysis* section](#Feistelshuffle-analysis) below for a security analysis of Feistelshuffle

### Proofs of correct shuffle

During the shuffling phase, validators permute and randomize each row of the shuffling matrix using private randomness.

Validators [must use zero-knowledge proofs](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L256) to show that they have shuffled honestly: that is, to prove that the shuffle output was a permutation of the input and that the input trackers actually got randomized. If no such proofs were used, a malicious shuffler could replace all trackers with her own trackers or with garbage trackers.

Furthermore, to avoid adaptive shuffling attacks, we force validators to commit to their permutation ahead of time. Validators [register their permutation commitment](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L309) when they publish a block, and they must use that permutation the next time they publish a block. We again ensure that this is the case using zero-knowledge proofs.

We will now take a look at the zero-knowledge proofs used by Whisk.

Verifiable shuffling has been a research topic [for decades](http://www.lix.polytechnique.fr/~tomc/P2P/Papers/Theory/MIXes.pdf) due to [its application in mixnets](https://www.iacr.org/archive/eurocrypt2000/1807/18070563-new.pdf) and hence to online anonymity and digital election schemes. Already since twenty years ago, zero-knowledge proofs [based on randomizable ElGamal ciphertexts](https://www.iacr.org/archive/crypto2001/21390366.pdf) have been proposed, and in recent years we've seen proofs [based on CRS and pairings](https://eprint.iacr.org/2017/894.pdf) as well as [post-quantum proofs based on lattices](https://eprint.iacr.org/2019/357.pdf).

In Whisk we use shuffling ZKPs that solely rely on the discrete logarithm assumption and don't require a trusted setup while still maintaining decent proving and verification performance. [Our proofs](https://ethresear.ch/t/provable-single-secret-leader-election/7971) are heavily based on [previous work on shuffling arguments by Bayer and Groth](http://www0.cs.ucl.ac.uk/staff/J.Groth/MinimalShuffle.pdf) and they also incorporate more recent optimizations inspired by the inner product arguments of [Bulletproofs](https://eprint.iacr.org/2017/1066.pdf).

We have [implemented an initial PoC](https://github.com/ethresearch/Shuffle_SSLE/tree/master/rust_code/src) of the shuffling proofs and we are working on a more robust implementation that includes test vectors and can be used as a solid basis for writing production-ready code for Ethereum clients.

### Bootstrapping

In all previous sections, we've been assuming that the system has bootstrapped and that all validators have Whisk trackers and commitments associated with them. In this section, we show how to do bootstrapping.

We bootstrap Whisk by having the beacon chain [initialize all validators](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/fork.md?plain=1#L76) with [dummy predictable commitments](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L338). We then allow validators to [register a secure commitment](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L299) when they propose a new block.

This means that the system starts in an insecure state where the adversary can predict future proposers (similar to the status quo), and as more validators register secure commitments, the system gradually reaches a secure steady state.

## Security analysis

In this section, we analyze Whisk's security and privacy.

We first see how Whisk effectively expands the anonymity set of proposers. We then explore various active attacks that could be performed against Whisk: either by malicious shufflers or by the Ethereum randomness beacon (RANDAO). We close this section by demonstrating how Whisk is protected against selling attacks, where an adversary attempts to buy specific proposer slots.

### Anonymity set

The core idea of Whisk is to significantly increase the anonymity set of block proposers. In particular, the anonymity set increases from the status quo of a single validator to at least 8,192 validators (which correspond to the *number of candidates that did not get selected as proposers*)

To make this concrete, the adversary knows the identities of the validators that got selected as *candidates*. However, because of the shuffling phase, she cannot tell which of those validators got selected as *proposers* at the end of the protocol. This means that the 8,192 candidates that were not selected as proposers become the anonymity set for all proposers.

It's worth noting that those 8,192 validators actually correspond to a significantly smaller number of nodes on the P2P layer. For example, the current validator set of 250,000 validators is represented [by about 5,000 P2P nodes](https://ethernodes.org/). While it's not known how validators are distributed to this small number of nodes, for this analysis we can assume that the distribution follows something close to the Pareto principle where "20% of the nodes run 80% of the validators". Doing [a simulation with this distribution](https://gist.github.com/asn-d6/1d972421667a23a0635f65cd5d12d0e7) we find that an anonymity set of 8,192 validators corresponds to 2,108 nodes on average. That is explained by the fact that even though there are some *"heavy nodes"* that will always appear in the anonymity set and will control a hefty portion of it, there is also a long tail of *"light nodes"* that run one or two validators that helps increase the size of the anonymity set.

Whisk can also be adapted to produce a bigger anonymity set by increasing the size of the *candidate list* while keeping the size of the *proposer list* the same. However, doing such a change requires analyzing our shuffling strategy to see if Feistelshuffle can sufficiently shuffle a bigger *candidate list*. Alternatively, we could increase the size of our stirs but we would need to be certain that this does not render our ZKPs prohibitively expensive.

### Feistelshuffle analysis

In this section, we analyze the security of our Feistelshuffle strategy. While we present the main results here, a detailed analysis can be found [in the Appendix](#Appendix-A-Shuffling-strategy-security-analysis) and in a [companion paper](https://github.com/khovratovich/whisk-analysis/blob/main/WhiskAnalysis.pdf).

Our threat model assumes an active adversary who controls a fraction of the shufflers and a fraction of the candidate trackers. We also assume that a fraction of honest proposers is offline and will not be stirring at all. The goal of the adversary is to disable the network by deanonymizing and attacking proposers.

Our analysis is based on identifying the trackers that did not get stirred by honest shufflers and hence don't have an anonymity set (we call those *0-touchers*). We also quantify the anonymity set of all other trackers (called *1-touchers*). The strategy of the adversary is to always attack the *0-touchers* since those are trivial to attack, and then attack the *1-touchers* with the smallest anonymity set.

To perform our analysis we use one concrete property of the Feistelshuffle dispersion: it is **uniform**, i.e. all trackers of a single stir will be processed by distinct proposers in the next round and in the round after that. Note that this property does not hold for most of other dispersion mechanisms, including random selection of trackers and matrix transposition.

Our analysis shows that almost all *1-touchers* have an anonymity set of at least 4000 trackers. On a network where 20% of all proposers are offline, a 50% attacker can shut down 2.5% of the network every day by attacking a total of 540K nodes, or 2630 machines per proposer *on average*. For more detailed results, please see [the Appendix](#Appendix-A-Shuffling-strategy-security-analysis).

### Active attacks through RANDAO biasing

In this section, we analyze Whisk's resilience against RANDAO biasing attacks. While we present the main results here, the detailed analysis can be found [in the Appendix](#Appendix-B-RANDAO-attacks).

Whisk uses RANDAO in the *candidate selection* and *proposer selection* events. This opens it up to potential RANDAO biasing attacks by malicious proposers. Since RANDAO is a commit-and-reveal protocol it enables attackers who control the last $k$ proposers before the candidate/proposer selection event to choose between $2^k$ possible candidate or proposer lists. This quickly becomes profitable for rational attackers, since they can increase profit by abandoning the commit-and-reveal protocol and choosing the list that contains the maximal number of validators they control or that gives them control over specific proposer slots.

Similar attacks can also be performed on the current Ethereum proposer selection where we publicly sample 32 proposers at the beginning of each epoch using RANDAO (see figure below).

By comparing Whisk with the status quo, we found that while big adversaries can extract bigger profits in the status quo, Whisk allows even small adversaries to extract profits by attacking RANDAO once per day. Please see [the Appendix](#Appendix-B-RANDAO-attacks) for more details on the results.

One way to completely address such RANDAO attacks is to use an unbiasable VDF-based randomness beacon. However, until a VDF beacon gets deployed, such attacks pose a risk both against the status quo and the Whisk protocol.

One possible variation is to make the security of Whisk identical to the security of the status quo with regards to RANDAO attacks by spreading *candidate selection* and *proposer selection* over an entire day (as seen in the figure below) instead of doing them on a single moment in time. However, even the status quo security is not ideal, and by implementing this defense we complicate the protocol further.

### Selling attacks

We want to prevent Mallory from being able to buy and open Alice's $k$.

It's important to prevent that since that would allow Mallory to buy proposer slots on the beacon chain from an automated auction smart contract, or it could also create a situation where a single $k$ is opened by two validators causing problems with the fork choice.

The commitment scheme presented above prevents that by doing a uniqueness check against $k$ using $com(k)$, and also by saving $com(k)$ in Alice's validator record and making sure that whoever opens using $k$ also has $com(k)$ in their validator record.

Let's walk through a potential selling scenario and see how the identity binding prevents it:

1) Alice [registers](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L275) $(rG, krG), com(k)$

2) Mallory registers $(rG, prG), com(p)$ (she can't register with $k$ because of [the uniqueness check](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L280))

3) ...

4) During the proposal phase, $(rG, krG)$ becomes the winning tracker

5) Mallory attempts to propose using $k$ by sending a DLEQ NIZK that proves that $k$ is both the dlog of $k(rG)$ and also the dlog of $com(k)=kG$

In this case the beacon chain [would dig into Mallory's validator record](https://github.com/asn-d6/consensus-specs/blob/whisk_ethresearch/specs/whisk/beacon-chain.md?plain=1#L180) and use $com(p)$ as the instance when verifying the NIZK. At that point, the verification would fail and Mallory's proposal would be discarded.

## Overhead analysis

In this section, we calculate Whisk's overhead in terms of block and state size, as well as the computational overhead imposed on validators.

#### State size overhead

The overhead of Whisk on the `BeaconState` is 59.9MB for 300k validators. In detail:

- a candidate list (16,384 trackers) (16,384*96 = 1.57 MB)

- a proposer list (8,192 trackers) (8,192*96 = 0.78 MB)

- a tracker for each validator (96 bytes per validator) (28.8 MB for 300k validators)

- a $com(k)$ for each validator (48 bytes per validator) (14.4 MB for 300k validators)

- a permutation commitment for each validator (48 bytes per validator) (14.4 MB for 300k validators)

This represents a dramatic increase in the size of the `BeaconState` (currently sitting at 30MB).

It's worth noting that the tracker and $com(k)$ of each validator (43.2MB for 300k validators) never change once they get registered which allows implementations to save space by keeping a reference of them in memory that can be used across multiple state objects.

For various ideas on how to reduce the state size, [see the discussion section](#Simplifications-Optimization).

#### Block size overhead

The overhead of Whisk on the `BeaconBlockBody` is 16.5 kilobytes. In detail:

- a list of shuffled trackers (128 trackers) (128*96 = 12,288 bytes)

- the shuffle proof (atm approx 82 G1 points, 7 Fr scalars) (4,272 bytes)

- one fresh tracker (two BLS G1 points) (48*2 = 96 bytes)

- one $com(k)$ (one BLS G1 point) (48 bytes)

- one permutation commitment (one BLS G1 point) (48 bytes)

- a registration DLEQ proof (two G1 points, two Fr scalars) (48*4 = 192 bytes)

The overhead of Whisk on the `BeaconBlock` is 192 bytes. In detail:

- an opening DLEQ proof (two G1 points, two Fr scalars) (48*4 = 192 bytes)

#### Computational overhead

The main computationally heavy part of this protocol is proving and verifying the zero-knowledge proofs involved.

We [wrote a PoC of the ZKPs](https://github.com/ethresearch/Shuffle_SSLE/tree/master/rust_code/src) using an old version of arkworks-rs (zexe) and the BLS12-381 curve and found that in an average laptop:

- Shuffling and proving the shuffle takes about 880ms (done by block proposers)

- Verifying the proof takes about 21ms (done by validators)

Our benchmarks were a PoC and we believe that a production-ready implementation of the shuffling/verifying procedure can provide a 4x-10x boost on performance by:

- Moving from zexe to the latest arkworks-rs or a more optimized library (e.g. blst)

- Moving from BLS12-381 to a curve with faster scalar multiplications

- Further optimizing the proofs and their implementation

If the proving overhead is considered too high, we can alter the shuffling logic so that validators have time to precompute their proofs in advance. For example, we can avoid shuffling on the last slot before each new round of the shuffling algorithm. At that point, the shuffling matrix for the next round is fully determined and hence validators have at least 12 seconds to shuffle and precompute their proofs for the next round.

## Related work

There are more ways to solve the problem of validator privacy. Each approach comes with its trade-offs and each use case should pick the most suitable approach. In this section, we go over different approaches and discuss their trade-offs.

### SASSAFRAS

The Polkadot team has designed an SSLE protocol called [SASSAFRAS](https://research.web3.foundation/en/latest/polkadot/block-production/SASSAFRAS.html) which works using a combination of a ring-VRF and a network anonymity system.

The scheme works by having each validator publish a VRF output (i.e. election ticket) through a native single-hop anonymity system. After some time, the chain sorts all received tickets to create the future proposer ordering, and when it's Alice's time to claim a slot, she submits a proof that it was actually her ticket that won this round.

SASSAFRAS turns their VRF scheme into a *ring-VRF* [through the use of SNARKs](https://github.com/w3f/ring-vrf) ensuring this way that the VRF output was generated from a specific set of public keys and no duplicate or garbage tickets were submitted.

#### Benefits

A significant benefit of SASSAFRAS over Whisk is that it doesn't require any shuffling which reduces the consensus complexity of the system. This means that state manipulation is minimal in SASSAFRAS, while in Whisk we are fiddling with the state at every slot. For the same reason, the state space required is significantly less, since the chain mainly needs to hold the VRF output of each validator (plus a pubkey), whereas in Whisk we are storing multiple commitments per validator plus the entire shuffling list. Finally and perhaps most importantly, the consensus simplicity of SASSAFRAS makes it more flexible when it comes to supporting other features (e.g. elect multiple leaders per slot or doing gradual RANDAO samplings).

A further benefit of SASSAFRAS is that the anonymity set of each proposer spans the entire validator set, in contrast to Whisk where the worst-case anonymity set is [8,192 validators](#Anonymity-set).

#### Drawbacks

The main drawback of SASSAFRAS is that instead of shuffling, it uses a network anonymity layer to detach the identity of the validator from her ticket.

For this purpose, SASSAFRAS builds a simple single-hop timed mixnet inside its p2p layer: When Alice needs to publish her ticket, she does `ticket (mod len(state.validators))` and that gives her Bob's validator index. She uses Bob as her proxy and sends her ticket to him. Then Bob uploads it on-chain by sending a transaction with it. Effectively, Bob acted as the identity guard of Alice.

An issue here is that network anonymity comes with a rich literature of attacks that can be applied in adversarial environments like blockchains. At the most basic level, in the above example, Bob can connect the identity of Alice with her published ticket. Effectively this means that a 10% adversary can deanonymize 10% of tickets.

At a more advanced level, an eavesdropper of a validator's network can launch *traffic correlation attacks* in an attempt to correlate the timing of received tickets with the timing of outbound tickets. Traffic correlation attacks effectively reduce the anonymity set of the system. The classic solution is for protocol participants to send fake padding messages; however designing such padding schemes requires careful consideration to not be distinguishable from real traffic.

Another interesting aspect of SASSAFRAS is that it assumes a mapping between validators and their P2P nodes. Creating and maintaining such a mapping can be done with a DHT protocol but it complicates the networking logic especially when a validator can correspond to multiple dynamic nodes. Fortunately, validators can still fallback to sending their ticket in the clear if such a system experiences a transient break.

Also, if the unique proxy validator of a ticket is faulty or offline, the validator is forced to publish the ticket themselves, effectively getting deanonymized.

With regards to cryptography, the current PoC implementation of the [ring-VRF](https://github.com/w3f/ring-vrf) uses Groth16 SNARKs which require a trusted setup. However, the SNARKs could potentially be rewritten to use Halo2 or Nova which don't require a ceremony.

Finally, not all parts of SASSAFRAS have been fully specified (e.g. the bootstrapping logic), which might produce some yet unseen complexity.

All in all, SASSAFRAS is an SSLE protocol with higher networking complexity but lower consensus complexity. It's important to weigh the trade-offs involved especially with regards to Ethereum's networking model and overall threat model.

### Other networking-level defenses

In this proposal we've been assuming that it's trivial for attackers to [learn the IP address of every beacon chain validator](http://www.cloud-conf.net/ispa2021/proc/pdfs/ISPA-BDCloud-SocialCom-SustainCom2021-3mkuIWCJVSdKJpBYM7KEKW/264600b402/264600b402.pdf).

[Dandelion](https://github.com/bitcoin/bips/blob/master/bip-0156.mediawiki) and [Dandelion++](https://arxiv.org/pdf/1805.11060.pdf) are privacy-preserving networking protocols designed for blockchains. They make it hard for malicious supernodes on the p2p network to track the origins of a message by propagating messages in two phases: a "stem" anonymity phase, and a spreading "fluff" phase. During the "stem" phase each message is passed to a single randomly-chosen node, making it hard to backtrack it.

Systems similar to Dandelion share [networking complexities](https://bitcoin.stackexchange.com/questions/81503/what-is-the-tradeoff-between-privacy-and-implementation-complexity-of-dandelion/81504#81504) similar to the ones mentioned above for SASSAFRAS. Furthermore, using Dandelion for block proposal needs careful consideration of latency so that it fits inside the four seconds slot model of Ethereum (see Figure 11 of [Dandelion++ paper](https://arxiv.org/pdf/1805.11060.pdf))

An even simpler networking-level defense would be to allow validator nodes to use different network interfaces for attestations and block proposals. This way, validators could use one VPN (or Tor) for attestations and a different one for proposals. The problem with this is that it increases setup complexity for validators and it also increases the centralization of the beacon chain.

### Drawbacks of Whisk

It's worth discussing the drawbacks of Whisk especially as we go through the process of comparing it against other potential solutions (e.g. VRF-based schemes).

First and foremost, Whisk introduces non-negligible complexity to our consensus protocol. This makes the protocol harder to comprehend and analyze, and it also makes it harder to potentially extend and improve.

For example, because of the lengthy shuffling phase it's not trivial to tweak the protocol to elect multiple leaders per slot (which could be useful in a sharded/PBS/crList world). That's because we would need bigger candidate and proposer lists, and hence a bigger shuffling phase. While it's indeed possible to tweak Whisk in such a way, it would probably be easier to do so in a VRF-based scheme.

Whisk also slightly increases the computational overhead of validators who now need to create and verify ZKPs when creating and verifying blocks. It also increases the computational overhead of P2P nodes, since they now need to do a DLEQ verification (four scalar multiplications) before gossiping.

Finally, a drawback of SSLE in general (not just of Whisk) is that it completely blocks certain protocol designs. For example, it becomes impossible to ever penalize validators who missed their proposal. SSLE also prevents any designs that require the proposer to announce themselves before proposing (e.g. [to broadcast a crList](https://notes.ethereum.org/Dh7NaB59TnuUW5545msDJQ?view)).

## Discussion

### Simplifications and Optimizations

In this section, we quickly mention various simplifications and optimizations that can be done on Whisk and the reason we did not adopt them:

- Moving the commitment scheme from BLS12-381 to a curve with a smaller base field size (but still 128 bits of security) would allow us to save significant state space. For a 256-bit curve, group elements would be 32 bytes which is a 33% improvement over the state space (BLS12-381 G1 elements are 48 bytes). We used BLS12-381 in this proposal because the consensus specs are already familiar with BLS12-381 types and it's unclear how much work it would be to incorporate a different curve in consensus clients (e.g. curve25519 or JubJub).

- We can store $H(kG)$ in the state instead of $com(k)$ to shave 12 bytes per validator. But then we would need to provide $kG$ when we propose a block and match it against $H(kG)$ which slightly complicates the protocol.

- We could avoid permutation commitments (and hence save state space) by introducing randomness in our shuffling strategy to fool active shuffling attackers. In particular, we could apply a RANDAO whitening step at the end of each shuffling round which completely permutes the shuffling matrix using RANDAO. However, this means that we would need to cooldown before RANDAO (to avoid biasing attacks) and that would reduce the number of shuffles that we could perform. It would also complicate the formal analysis of the shuffling strategy.

- Instead of using $com(k)$ to do identity binding on $k$, we could do identity binding by forcing the hash prefix of $H(kG)$ to match Alice's validator index. Alice would brute force $k$ until she finds the right one. This would save space on the state, but it would make it hard to bootstrap the system (we would need to use a lookup table of `{validator_index -> k}`)

- Instead of registering $(rG, krG)$ trackers, we could just register with $kG$ (i.e. not use a randomized base) to simplify the protocol and save space on the block and on the state. However, this would make it easier for adversaries to track trackers as they move through shuffling gates by seeing if they have the same base, which becomes a problem if the set of honest shufflers is small.

- Instead of storing a permutation commitment in every Validator record, we could use the $rG$ part of Alice's Whisk tracker as her permutation commitment, saving an entire G1 point per validator. However, that would require Alice to register a fresh tracker with every block proposal, which we are now avoiding by allowing only one registration.

- We could do identity binding by setting $k = H(nonce + pubkey)$ as the SSLE paper suggests which would simplify the protocol. However, we would need to completely reveal $k$ when opening which causes problems if the same validator gets selected as a candidate twice (either in the same run or in adjacent runs) since now adversaries can track the tracker around.

## Appendix

### Appendix A: Shuffling strategy security analysis

#### Adversarial model

We assume an active adversary, who controls some fraction of proposers, some fraction of trackers, and attacks some proposers in the hope they don't participate in the protocol. Additionally we allow some fraction of proposers to go offline and not stirring at their turn. Overall, we consider 4 groups of proposers during each day:

* Malicious: $\beta 2^{13}$ proposers that share stir permutations among themselves.

* Offline: $\gamma 2^{13}$ proposers that are not malicious but going offline for non-adversarial reasons.

* Attacked: $\delta 2^{13}$ are shut down by an active adversary using a sort of DoS attack.

* Alive: remaining $\alpha 2^{13} = (1-\beta-\delta-\gamma)2^{13}$ proposers that do their stirring privately.

Each attack conducted by an adversary has its cost. Each tracker whose owner is attacked has an anonymity set, and we assume that the attack cost is *linear* in its size.

In the ideal case, where all proposers of Day 1 are honest and do not reveal their stirs to anyone, the anonymity set of validators at the beginning of day two is $2^{14}$ and slowly decrease to $2^{13}$ over the course of the day.

However, in the case of an active adversary the anonymity set is reduced. Moreover, the smaller the anonymity set of a tracker becomes, the cheaper is for an adversary to shut down its owner. In an extreme case when a tracker does not undergo any stir done by an honest and alive proposer, the anonymity set is just one validator. We call such trackers *0-touchers* as they are not touched by any honest proposer. The other trackers are called *1-touchers*.

We finally assume that the fraction of trackers controlled by the adversary equals $\beta$, the fraction of malicious proposers. These trackers are excluded from anonymity sets.

#### Adversarial strategy

Assuming that the goal of the adversary is to minimize $\alpha$ given certain resources, we consider that the following strategy is almost optimal and should be pursued by the adversary:

* Shut down all 0-touchers as they are the cheapest to attack.

* Measure the anonymity set of 1-touchers and attack those with minimum ones as long as there are enough resources.

#### Attack costs

We use one concrete property of Feistel selection rule: it is **uniform**, i.e. all trackers of a single stir will be processed by distinct proposers in the next and the after-next rounds. Note that this property does not hold for most of other dispersion mechanisms, including random selection of trackers and matrix transposition.

Based on the uniformity of Feistel selection rule, we establish several results, which are elaborated in a [companion paper](https://github.com/khovratovich/whisk-analysis/blob/main/WhiskAnalysis.pdf).

In particular, we calculated the maximum number of 0-touchers for any $\alpha$, the anonymity set size for 1-touchers depending on the last round when they were touched, and the total cost measured in the sum of anonymity sizes of attacked validators. It is stated that there can be at most $\frac{150}{\alpha}$ 0-touchers before proposer selection, though our experiments can get at most $128(1-\alpha)$.

One can compute this cost by selecting $\beta,\gamma,\delta$ in https://www.desmos.com/calculator/hueqpnp8mc

An attacker who controls 50% of the network ($\beta=0.5$) and wishes to shut down 2.5% of the network ($\delta=0.025$). His cost depending on $\gamma$ is high up to $\gamma=0.15$ and then drops:

The cost can be interpreted as follows. If an attacker wants to shut down 0.025 of the network with 0.2 out of 0.5 honest fraction being offline, then he shuts down 204 proposers at the cost of attacking the total of 540K nodes, or 2630 machines per proposer *on average*. Note though that theoretically up to 272 of those proposers can be 0-touchers, which are easy to slash, but the rest are significantly more anonymous.

### Appendix B: RANDAO attacks

In this section, we analyze RANDAO attacks against Whisk and the status quo.

In Whisk, over the course of a day (256 epochs) an attacker, Mallory, has two opportunities (*candidate selection* and *proposer selection* events) to control the last RANDAO revealer. If Mallory controls the last RANDAO revealer before the *candidate selection event* she gets to choose between two lists of 16,384 trackers, whereas if she controls the last revealer before the *proposer selection event* she gets to choose between two lists of 8,192 trackers. At that point, she can simply choose the list that contains more of her validators. We [simulated RANDAO attacks](https://github.com/asn-d6/ssle-abort-sim) and found that if Mallory, a 10% adversary, gets to control the last RANDAO revealer before the candidate selection event, she will abort 48% of the time and by doing so she will get 31.5 extra proposals per abort on average. If she follows this strategy for an entire day she can get 1.69 extra proposals on average per day (subject to how often she gets to control the last RANDAO revealer).

On the context of RANDAO attacks, it's also important to compare Whisk against the status quo. In particular, RANDAO attacks are also possible against the status quo where we publicly sample 32 proposers at the beginning of each epoch using RANDAO (see figure above). In this case, over the course of a day (256 epochs), the attacker has 256 distinct opportunities to control the last RANDAO revealer, and every time that happens they get the opportunity to choose over two possible lists of 32 proposers. Our simulations show that while Malory is less likely to abort at each opportunity compared to Whisk (due to the smaller list size), if she follows this strategy for an entire day she can get much greater gains (14.7 extra proposals on average per day).

From the above and from looking at the figures below, we extract the following results:

- An adversary can get greater rewards over time in the status quo compared to Whisk [top-left graph]

- An adversary that exploits the status quo needs to do many aborts over the course of a day (bad for the beacon chain) [top-right graph]

- An adversary can exploit Whisk with only one abort per day for big gains (more deniable) [lower-right graph]

- Even small adversaries can exploit Whisk (a 0.01% adversary will abort with a 10% chance) [lower-left graph]

All in all, while big adversaries can extract bigger profits with the status quo, Whisk allows even small adversaries to extract profits by attacking RANDAO once per day.

Furthermore, Whisk makes the moments of candidate and proposer selection more juicy targets for attacks and bribes (imagine a RANDAO bias attack against *proposer selection* which places the adversary in a position to do another RANDAO bias attack against *proposer selection* on the next protocol run).