# Getting started with computer vision using deep learning in 2021: resources and comments for beginners and intermediate practitioners

There's many online resources for learning computer vision. The goal of this post is to put some of (what I consider) the best, along with a plan and suggestions, to become an adept practitioner in this field.

## Motivation

The last decade has seen as exponential increase in works that use computer vision (CV), and its endless derivative applications are only starting to get explored. The number of academic publications in the field is booming, and so is the interest from industries and governments, which is shown in an ever growing market all over the world.

<figure>

<img src="https://i.imgur.com/lWln4iv.png" alt="Computer vision research publications">

<figcaption style="align: left; text-align:center;"><a href="https://academic.microsoft.com/topic/31972630/publication/search?q=Computer%20vision&qe=And(Composite(F.FId%253D31972630)%252CTy%253D%270%27)&f=&orderBy=0">Computer vision research publications</a></figcaption>

</figure>

<figure>

<img src="https://www.marketsandmarkets.com/Images/computer-vision-market3.jpg" alt="Computer vision market projection">

<figcaption style="align: left; text-align:center;"><a href="https://www.marketsandmarkets.com/Market-Reports/computer-vision-market-186494767.html">Computer vision market projection</a></figcaption>

</figure>

A picture is worth a thousand words, and computer vision is all about making computers understand images (and video). Among the many applications of computer vision are multimedia analysis and generation, autonomous driving, inspection of crops and detection of diseases and pests in agriculture, more advanced industrial automation, and better image super-resolution, denoising and inpainting.

<figure>

<img src="https://i.imgur.com/8MyKQE1.png" alt="Computer vision for autonomous driving">

<figcaption style="align: left; text-align:center;"><a href="https://openai.com/blog/dall-e/">Computer vision for text prompt based media generation</a></figcaption>

</figure>

<figure>

<img src="https://appen.com/wp-content/uploads/2019/04/SLIDER-Appen_image_annotation_05.jpg" alt="Computer vision for autonomous driving">

<figcaption style="align: left; text-align:center;"><a href="https://appen.com/blog/computer-vision-vs-machine-vision/">Computer vision for autonomous driving</a></figcaption>

</figure>

<figure>

<img src="https://miro.medium.com/max/568/1*fQUQHziz2q7UASC8cTY8bg.png" alt="Computer vision for medical image analysis">

<figcaption style="align: left; text-align:center;"><a href="https://medium.com/@anushka.da3/application-of-computer-vision-in-health-care-9144899e2c54">Computer vision for medical image analysis</a></figcaption>

</figure>

<figure>

<img src="https://i.ytimg.com/vi/ihxOyq9M1vc/maxresdefault.jpg" alt="Computer vision for agriculture">

<figcaption style="align: left; text-align:center;"><a href="https://www.youtube.com/watch?v=ihxOyq9M1vc&ab_channel=nnimsm">Computer vision for agriculture</a></figcaption>

</figure>

<figure>

<img src="https://www.einfochips.com/blog/wp-content/uploads/2016/07/machine-vision-system-block-diagram.png" alt="Computer vision for industrial automation">

<figcaption style="align: left; text-align:center;"><a href="https://www.einfochips.com/blog/catching-up-with-latest-trends-in-industrial-automation-machine-vision/">Computer vision for industrial automation</a></figcaption>

</figure>

<figure>

<img src="https://www.gwern.net/images/gan/stylegan/2021-01-19-stylegan2ext-danbooru2019-3x10montage-0.png" alt="More computer vision for media generation" height="800px" width="200px">

<figcaption style="align: left; text-align:center;"><a href="https://www.gwern.net/Faces#extended-stylegan2-danbooru2019-aydao">More computer vision for media generation</a></figcaption>

</figure>

</p>

<figure>

<img src="https://ai.stanford.edu/~syyeung/cvweb/Pictures1/filters.png" alt="More computer vision (technically image processing) for multimedia">

<figcaption style="align: left; text-align:center;"><a href="https://ai.stanford.edu/~syyeung/cvweb/tutorial1.html">More computer vision (technically image processing) for multimedia</a></figcaption>

</figure>

## Computer vision using deep learning: overview

First of all, let's start with a few important definitions:

* Computer vision: interdisciplinary field that seeks to give computers with the ability to “see” and understand content of images and videos.

* Machine learning: provides systems the ability to automatically learn and improve from experience without being explicitly programmed.

* Deep learning: sub-class of machine learning algorithms that utilize multiple layers of an artificial neural network (ANN) to extract and process information and features from data.

### Computer vision: challenges, goal and history

#### Challenges

The fundamental problem in computer vision is that the way machines see the world and the way we see it is fundamentally different.

#### Goal

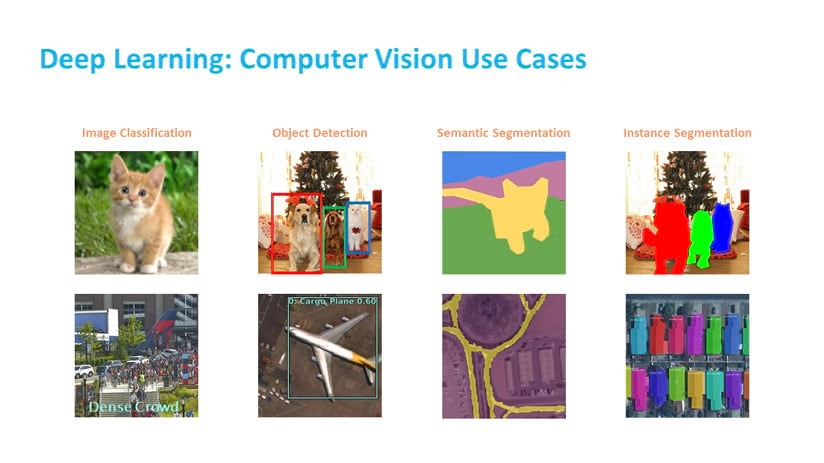

Therefore the goal of computer vision is to devise algorithms that aim to give computers a way of interpreting these matrixes of numeric values, providing value to us in the process. Among some of the classical computer vision tasks are: classification or object recognition(what object is in an image), detection (what object and where), segmentation (what and where at a pixel-by-pixel level), captioning (describing the image), etc.

#### Rise of deep learning in computer vision

Until just a few years ago, most of the work in computer vision was done by carefully crafting feature extractors and descriptors. Among these were SIFT (Scaled Invariant Feature Transform), HOG (Histogram of Oriented Gradients), SURF (Speed Up Robust Features). These algorithms would, based on mathematical filters, obtain representations, such as edges and contours, that we could then further process using traditional machine learning algorithms, such as SVMs (Support Vector Machines).

It wasn't until 2012, that deep learning picked up, when AlexNet, a convolutional neural network (CNN), vastly outperformed all traditional methods used in the task of image classification. A crucial advantage of CNNs is that they combined the feature extraction and learning components of the traditional computer vision pipeline into one. The CNN would learn useful features, such as edges and contours, in its first few layers, and use them for the end task, in this case, image classification.

![]()

<figure>

<img src="https://www.researchgate.net/profile/Zhengxia_Zou/publication/333077580/figure/fig2/AS:758306230501380@1557805702766/A-road-map-of-object-detection-Milestone-detectors-in-this-figure-VJ-Det-10-11-HOG.ppm" alt="Timeline of the development of machine and deep learning algorithms">

<figcaption style="align: left; text-align:center;"><a href="https://www.researchgate.net/figure/A-road-map-of-object-detection-Milestone-detectors-in-this-figure-VJ-Det-10-11-HOG_fig2_333077580">Traditional computer vision vs deep learning-based</a></figcaption>

</figure>

This combined with the massive increase in performance led to researchers to apply ANNs to all sorts of tasks in computer vision (and in other fields), marking the start of the deep learning era. Something to note is how deep learning had been around for a few decades, however it didn't pick up popularity until this last decade. Therefore, it's important to note some of the factors that contributed to its rise:

* Frameworks for DL: TensorFlow, theano, mxnet, PyTorch)

* Large, HQ, publicly available, labelled datasets, such as ImageNet

* Improvements in algorithms for vanishing gradient and regularization

* Advancements in hardware: GPUs and an ever-rising increase in computational resources

These factors have been, and will continue to be the catalyst behind this revolution, so it's important to acknowledge them, in order to design even better systems.

### Machine learning and deep learning

Going back to machine learning, it's important to note why learning algorithms have been so important in the advancement of artificial intelligence (AI) systems. I recommend readers to check out this short article, titled [The Bitter Lesson](http://www.incompleteideas.net/IncIdeas/BitterLesson.html), by Professor Richard Sutton. We can summarize the lesson as: “General methods that leverage computation are ultimately the most effective”. In the article, he expands on this by giving examples:

* Chess based on deep search (1997: Deep Blue)

* SIFT features to deep CNN representations (2012: AlexNet)

* Go based on self-play and deep reinforcement (2016: AlphaGo)

* NLP based on statistics (2017: Transformers, BERT/GPT-2: 2018)

With this in mind, he concludes by stating that search and learning scale with computation and datasets. Below is a summary of some advancements in the history of machine learning and deep learning algorithms.

<figure>

<img src="https://i.imgur.com/cbM4L7F.png" alt="Timeline of the development of machine and deep learning algorithms">

<figcaption style="align: left; text-align:center;"><a href="https://www.slideshare.net/mohamedloey/deep-learning-71352250">Timeline of the development of machine and deep learning algorithms</a></figcaption>

</figure>

![]()

With regards, to machine learning, let's relook at the definition, from a more concrete perspective, and with an example:

> A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E”

> – Tom Mitchell

In this case, our task **T** is the classification of **images as cats or not cats**, our performance measure **P** is the classification **accuracy**, and the experience **E** are **the images**.

With regards to deep learning, it focuses on the idea of using deep neural networks (DNNs). The power of NNs relies on the fact that a big enough network can approximate any mathematical functio. In practice, they follow the principle of "bigger is better". Therefore, as long as you have enough data, and a big enough network, in theory you can do anything.

<figure>

<img src="https://i.imgur.com/l8CVi9U.jpg" alt="Timeline of the development of machine and deep learning algorithms">

<figcaption style="align: left; text-align:center;"><a href="https://yangxiaozhou.github.io/data/2020/09/24/intro-to-cnn.html">Deep neural networks rely on size (and computational resources) to learn approximations of functions for any purpose</a></figcaption>

</figure>

## Getting started

There's many resources for getting started with computer vision using deep learning. I'll assume the readers fall into one of two categories:

1. A more applied approach. Objective would be applying computer vision to a task, without (much) knowledge of the underlying principles, at least at the start.

2. A more in-depth understanding of computer vision, with the goal of either doing research on the subject or working and improving state-of-the-art methods.

I'll refer to the former as the top-down approach and the latter as the bottom-up.

### Pre-requisites

For the top-down approach the main (and probably only) pre-requisite is programming, in Python preferably. I wrote a post where I [compile resources on how to get started](https://hackmd.io/@arkel23/start_programming_python_compilation_resources) with that.

For the bottom approach, while programming in Python is also required, additionally you may or may not need knowledge in linear algebra, calculus (specially multi-variable and derivatives/gradients), probability, and statistics. This all depends on the level of work you plan to do. In general, the deeper the understanding you want to obtain, and the more theoretical you aim your work to be, the deeper the understanding of mathemathics you will need.

As a general guideline, I would say that for most practitioners, I would recommend the top-down approach and then go back to the fundamentals as you find brick walls in your learning process.

### Basics: checklist and recommendations

This post is focused on computer vision with deep learning, so I'll ignore the traditional approaches and go with courses for starting with deep learning. By the time you finish this section, you should be able to understand these:

* What is machine and deep learning?

* Supervised vs unsupervised learning

* Regression vs classification

* Linear regression vs logistic regression

* Underfitting and overfitting

* What are artificial neural networks?

* Fully-connected layers

* Softmax layers

* Activation functions

* What are convolutional neural networks (CNNs)?

* Convolutional layers

* Pooling layers

* CNNs for image classification

These courses are both very good, and the main differences rely on personal preferences. Do you prefer a top-down approach, where first you learn aboyt applications, and then learn theory, or a bottom-up one, first learning theory, then applying it?

If it's the former, then I would recommend [Practical Deep Learning for Coders](https://course.fast.ai/) by [fast.ai](https://www.fast.ai/). However, if it's the latter I would recommend [Deep Learning Specialization](https://www.coursera.org/specializations/deep-learning) by [deeplearning.ai](https://www.deeplearning.ai/). Both of these courses have excellent instructors, and are available for free, though the Coursera one requires the user to apply for a free audit, so cannot check the homework results.

#### PyTorch vs TensorFlow

Another major difference is that the one from fast.ai uses [PyTorch](https://pytorch.org/) and a higher-level library they built on top it called *fastai*. PyTorch is already relatively high-level but fastai simplifies the process of building, training and employing a model even more, so it could be great for people who just would like to have a taste of the power of deep learning without getting their hands too dirty.

On the other side, the Deep Learning Specialization in Coursera uses [TensorFlow](https://www.tensorflow.org/) and [Keras](https://keras.io/), at some point. *Keras*, like *fastai*, is also a high-level library, but built on top of TensorFlow. It also simplifies the deep learning pipeline by taking advantage of the abstraction of some of the more cumbersome parts of using TensorFlow. [At some point](https://blog.tensorflow.org/2019/09/tensorflow-20-is-now-available.html), Keras officially became part of the TensorFlow pipeline.

However, this caused a problem since many people were releasing source code based on previous releases, without Keras. This combined with (major) changes in the way things work across different versions, sometimes can lead to confusion, so **I personally recommend PyTorch**, though being familiar with both can be good, since there's many other deep learning libraries and usually you would use the one your colleagues already use.

## Intermediate

Then the second thing I would recommend to anyone is to go through [Deep Learning with PyTorch: a 60 Minute Blitz](https://pytorch.org/tutorials/beginner/deep_learning_60min_blitz.html). It covers all of the basics you should already be familiar with but using pure PyTorch.

### Putting it all together: end-to-end project

After this, many people wonder where to go next. Some people just endlessly keep taking course after course, wandering aimlessly, me included at some point. But what I can say with security from my experience until now, is that once you get to a point where you're comfortable with the basics, is to just do an end-to-end project.

![]()

<figure>

<img src="https://t2.genius.com/unsafe/440x0/https%3A%2F%2Fimages.genius.com%2F2b790e48bcd9779bce4dc5bc74a01118.563x1000x1.png" alt="Shia LeBeouf's Just Do It." height="500" width="500">

<figcaption style="align: left; text-align:center;"><a href="https://www.youtube.com/watch?v=ZXsQAXx_ao0&ab_channel=MotivaShian">Just Do It!</a></figcaption>

</figure>

If you're passionate about agriculture, do a system for automatic detection of leaves diseases, or if you're passionate about medical imaging, classify medical images based on diseases or do object detection or segmentation for tumors. Alternatively, if like me, you like multimedia, work on conditional or unconditional image or video generation. The possibilities are endless. At this point, you're already familiar with the terminology in computer vision using deep learning, so you should just pick up the pieces like in any programming project.

I cannot understate how much did I learn by doing my first end-to-end project, [Animesion: A Framework for Anime Character Recognition](https://github.com/arkel23/animesion/tree/main/classification). While far from perfect, as seen by the results when we tried to classify an out-of-domain image of my friend's cat, I learned so much about all the components of an image classification pipeline, and worked with state-of-the-art models. During the process, I also got so many valuables insight that will help streamline the process of getting everything for a project ready in the future.

However, when I mean end-to-end, I don't mean to just copy-and-paste a cats-and-dogs image classifier using a dataset that's been reused a thousand times, along with a classification model from 2012. Ideally, you would work on all the steps of the pipeline. First, obtaining a suitable dataset. If one does not exist, you need to compile one, either by combining multiple, or scraping data from the web, followed by pre-processing it into a state that works with the rest of your pipeline. Then, choosing and training an appropriate model for your task. You may look into existing repositories, papers, blogs, etc. I personally find [Papers with Code](https://paperswithcode.com/) as a great tool to find state-of-the-art models with source code, from which you can build on, along with datasets and everything in between. Finally, interpret your results, make tables and plots, deploy it to the web, or make an app maybe, it's up to you and your desired learning goals.

### Research: beyond the state-of-the-art

At this point, you're well into the area of research, since you're familiar with current models, and how to use them. Now your goal should be to understand their shortcomings, and aim to address them. I wrote a post about [how to do research](https://hackmd.io/@arkel23/startresearch) and [how to read papers](https://hackmd.io/@arkel23/readpaper), which at this point is (probably) necessary for any further advances.

#### Books

While many people would say that you need an extremely solid theoretical foundation to proceed, in the form of books and graduate courses on the topic, I would say that depending on what exactly you want to accomplish you may still be able to do novel work without (such) a rigurous math foundation. I must say however, that I would still recommend reading a book or two on Machine Learning and you should brush up on any pre-requisites if you cannot keep up with those.

For books, I cannot give many comments. All of these are heavy in math (in my opinion), but they're well-established and used world-wide in graduate level courses on the topic.

1. [Deep Learning](https://www.deeplearningbook.org/) by Ian Goodfellow and Yoshua Bengio and Aaron Courville.

2. [Probabilistic Machine Learning: An Introduction](https://probml.github.io/pml-book/book1.html) by Kevin P. Murphy. This is an update (from Dec. 2020) on another book by his, [Machine Learning: A Probabilistic Perspective](https://mitpress.mit.edu/books/machine-learning-1).

3. [Pattern Recognition and Machine Learning](http://users.isr.ist.utl.pt/~wurmd/Livros/school/Bishop%20-%20Pattern%20Recognition%20And%20Machine%20Learning%20-%20Springer%20%202006.pdf) by Christopher M. Bishop.

#### Paper reading

What I would certainly recommend at this point is to gauge your level, by reading papers, both landmark papers (that are usually written in a clear style), and some more recent, common papers (which or may not be that clear).

For landmarks, I recognize this is far from a complete list, but it should accomplish its purpose of gauging the reader's level at this point:

* [ImageNet Classification with Deep Convolutional Neural Networks. Krizhevsky et al. 2012.](https://proceedings.neurips.cc/paper/2012/hash/c399862d3b9d6b76c8436e924a68c45b-Abstract.html)

* AlexNet architecture along with many combined upgrades: "popularized" CNNs by winning ILSVRC12 by a land-slide.

* [Very Deep Convolutional Networks for Large-Scale Image Recognition. Simonyan et al. 2014.](https://arxiv.org/abs/1409.1556)

* VGG-16 and variants: deeper networks get better results.

* [Going deeper with convolutions. Szegedy et al. 2014.](https://arxiv.org/pdf/1409.4842.pdf)

* Inception block: simple building block that can be repeated to construct more complex networks; also, began exploring the idea of wider networks.

* [Generative Adversarial Networks. Goodfellow et al. 2014.](https://arxiv.org/abs/1406.2661)

* GAN and adversarial training: pit two networks (generator and discriminator) against each other to improve performance.

* [Deep Residual Learning for Image Recognition. He et al. 2015.](https://arxiv.org/abs/1512.03385)

* Residual block and ResNet variants: residual connections to speed up and improve training.

* [Image-to-Image Translation with Conditional Adversarial Networks. Isola et al. 2016](https://arxiv.org/abs/1611.07004).

* Applies GANs to the task of image-to-image translation.

* [EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. Tan et al. 2019.](https://arxiv.org/abs/1905.11946)

* Combine depth, width, and image resolution to achieve state-of-the-art in image classification with less computational cost.

If at this point you feel you cannot keep up, due to not being able to grasp key ideas and concepts, it may be better to slow down and go back to fundamentals.

#### Possible research directions in computer vision using deep learning

However, if you can at least keep up, you may consider just heading forward into further advancing the field. Before doing this, it may be good to focus on one particular direction. We live in exciting times, with many directions of computer vision explored from a more general, application-agnostic point-of-view, and a more specific, application-oriented approach. I'll list a few of the many (general) areas of computer vision research, including both traditional and new topics:

* Image classification/recognition

* Generic and instance object detection

* Semantic and instance segmentation

* Image generation, translation and enhancement

* Multimodal: vision+text, vision+audio, RGB+depth

* Spatiotemporal/sequential visual data: videos

* 3D visual data: point clouds and voxels

* Few-shot, one-shot and zero-shot learning

* Weakly supervised, semi-supervised, self-supervised learning

* New architectures: attention and transformers for vision

* Generalization: bias and long-tailed datasets

* Interpretability and explainability

* Vision for edge/mobile devices

If you're interested in a particular application, just look for papers regarding the topic and the application, such as segmentation for medical images, and so on.

I hope to write another post specifically targeting this last section, where I can go through some review papers on some of these interesting topics, but it has to be in another post since this one is already long enough.

## Conclusion

This post provides a starting point for people interested in doing research or work in computer vision using deep learning. It provides a roadmap and checklist for getting started, and an overview along with comments on possible research directions on this highly competitive but rewarding field.

If you like this post, or have any questions, feel free to leave a comment or contact me on any of my socials, found at the bottom of my [Github Pages](https://arkel23.github.io/).

## Acknowledgements

This post has been largely inspired by lectures given by my professors in National Chiao Tung University, Hsinchu, Taiwan. In particular, I would like to thank Professor Wen-Huang Cheng for his inspiring lectures on the topic.