# Account abstraction providers

The cornerstone of DeFi.app is account management through account abstraction (AA). We need to examine multiple AA providers in order to select the one to build DeFi.app on.

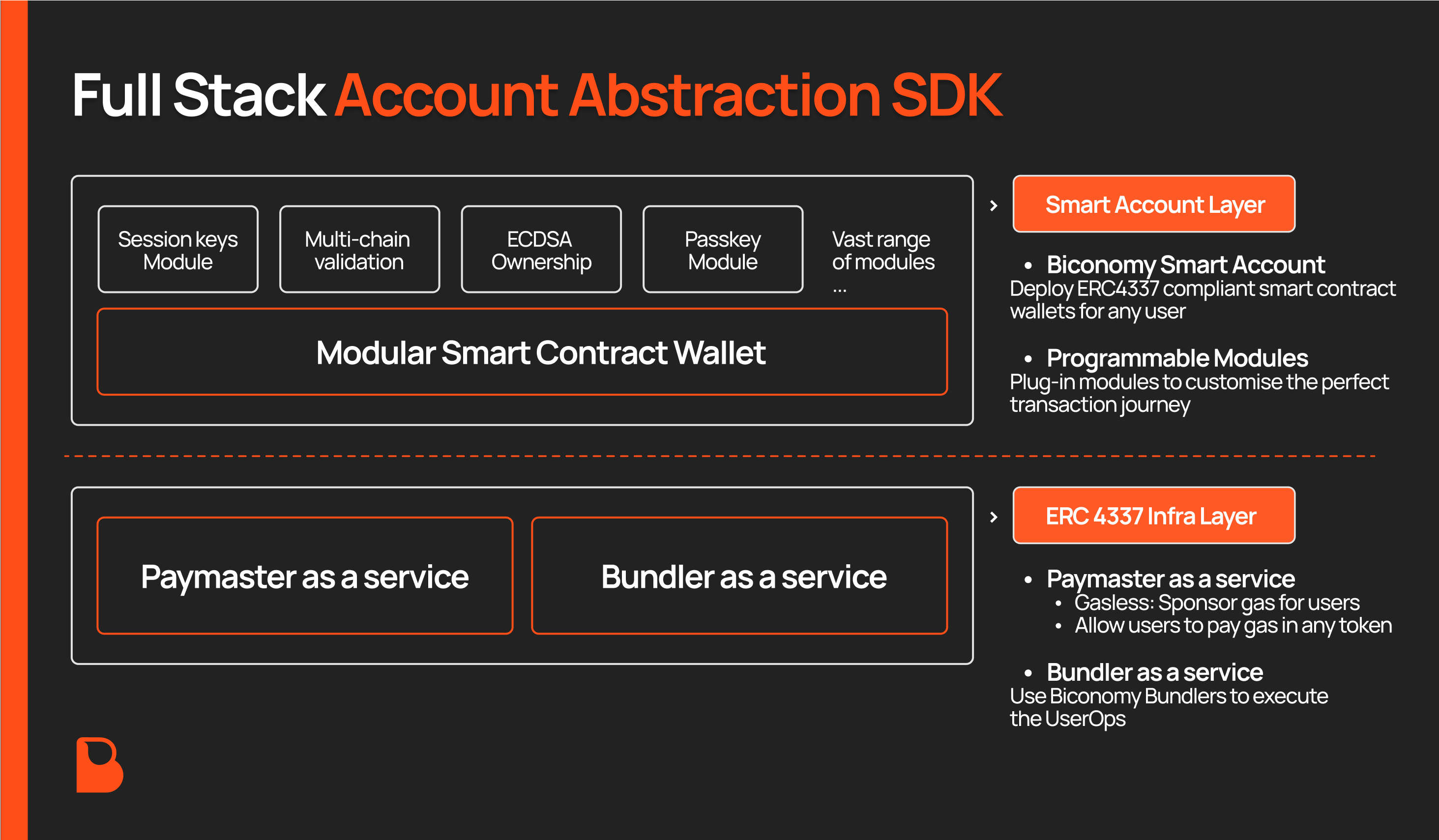

## Biconomy

Biconomy is a leader in the account abstraction space. They implemented ERC-4337, enabling smart accounts to be deployed as smart contracts, and they provide an SDK that facilitates composing, signing and submitting user operations (userOps) to the alternative mempool. They support ECDSA signatures, but they also allow custom signature validation modules, which has led a number of account management providers, such as Dynamic, Privy and Magic Link, to build on top of Biconomy using Google's passkeys and a variety of other signature schemes. Biconomy offers bundler services: a bundler is effectively an EOA that picks up userOps from the alternative mempool and submits transactions on behalf of smart accounts. Finally, they offer paymaster services, which give users a fully gasless experience.

They support Ethereum, Arbitrum One & Nova, Optimism, Polygon, Polygon PoS, Avalanche C-chain, BNB, opBNB, Base, Linea, Chiliz, Astar, Manta Pacific, Mantle, Combo, Core and Berachain Artio.

### Smart accounts

Every smart account is a smart contract that gets deployed to an EVM-based chain with the first transaction submitted on behalf of the user. A smart account has the same address on all EVM chains, which makes deposits user-friendly: the user has a single EVM address and can use any CEX or other service to deposit to it, regardless of the EVM chain.
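Identical addresses across chains typically come from counterfactual deployment: the account factory deploys with `CREATE2`, whose resulting address depends only on the deployer, a salt and the hash of the init code, never on the chain ID. The sketch below mimics that derivation to show why. As an assumption for self-containment it uses SHA-256 from Node's `crypto` in place of keccak256, so the addresses it prints are illustrative only; the factory address, salt and init code are hypothetical.

```typescript
import { createHash } from "crypto";

// Stand-in for keccak256: real CREATE2 uses keccak256, which Node's crypto does not ship.
function hash(data: Buffer): Buffer {
  return createHash("sha256").update(data).digest();
}

const hex = (s: string): Buffer => Buffer.from(s.replace(/^0x/, ""), "hex");

// CREATE2-style derivation: last 20 bytes of H(0xff ++ deployer ++ salt ++ H(initCode)).
// No chain ID is part of the preimage, so the same inputs give the same address everywhere.
function counterfactualAddress(deployer: string, salt: string, initCode: string): string {
  const preimage = Buffer.concat([Buffer.from([0xff]), hex(deployer), hex(salt), hash(hex(initCode))]);
  return "0x" + hash(preimage).subarray(12).toString("hex");
}

// Hypothetical inputs: same factory, same owner-derived salt, same init code on every chain.
const factory = "0x" + "11".repeat(20);
const salt = "0x" + "00".repeat(31) + "01";
const initCode = "0x6080604052";

const addressOnPolygon = counterfactualAddress(factory, salt, initCode);
const addressOnArbitrum = counterfactualAddress(factory, salt, initCode);
console.log(addressOnPolygon === addressOnArbitrum); // true
```

A different salt (e.g. a different owner) yields a different address, which is how each user gets their own account while keeping it identical across chains.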

Smart accounts are derived from a "secret", which can be anything. If the ECDSA validation module is used, it can be the signer's private key, but other protocols can build custom validation modules that use different secrets (e.g. a Google passkey). A validation module is a smart contract that the smart account calls to verify the signature. It accepts the userOp hash, the signature and the smart account address, and returns whether the signature is valid. As mentioned, the signature can be validated with elliptic-curve cryptography (ECDSA), but also with any custom scheme.
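The validation module contract described above can be pictured as an interface that takes the userOp hash, the signature and the smart account address and answers "valid or not". The sketch below models that interface off-chain, with an ECDSA module built on Node's `crypto` (secp256k1 key pair, SHA-256 digest). The `ValidationModule` interface and `EcdsaModule` class are hypothetical illustrations, not Biconomy's contract ABI; real modules run on-chain and check Ethereum-style signatures.

```typescript
import { generateKeyPairSync, sign, verify, KeyObject } from "crypto";

// Hypothetical off-chain model of a validation module.
interface ValidationModule {
  validate(userOpHash: Buffer, signature: Buffer, smartAccount: string): boolean;
}

// ECDSA module: every smart account is registered with its owner's public key.
class EcdsaModule implements ValidationModule {
  private owners = new Map<string, KeyObject>();

  register(smartAccount: string, ownerPublicKey: KeyObject): void {
    this.owners.set(smartAccount.toLowerCase(), ownerPublicKey);
  }

  validate(userOpHash: Buffer, signature: Buffer, smartAccount: string): boolean {
    const owner = this.owners.get(smartAccount.toLowerCase());
    if (!owner) return false; // unknown smart account
    return verify("sha256", userOpHash, owner, signature);
  }
}

// Usage: the owner signs the userOp hash and the module checks it for the given account.
const { privateKey, publicKey } = generateKeyPairSync("ec", { namedCurve: "secp256k1" });
const account = "0x" + "ab".repeat(20); // hypothetical smart account address
const ecdsaModule = new EcdsaModule();
ecdsaModule.register(account, publicKey);

const userOpHash = Buffer.alloc(32); // placeholder 32-byte hash
const signature = sign("sha256", userOpHash, privateKey);
console.log(ecdsaModule.validate(userOpHash, signature, account)); // true
```

A passkey-based module would implement the same interface with a different `validate` body, which is what makes the scheme pluggable.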

From the perspective of other smart contracts, `msg.sender` is the smart account for all interactions. However, if a smart contract reads `tx.origin` for any reason, this could be a problem, because it would read the bundler's address and not the user's address.

Example of fetching a smart account:

```typescript
import { ethers } from "ethers";
import { IBundler, Bundler } from "@biconomy/bundler";
import { IPaymaster, BiconomyPaymaster } from "@biconomy/paymaster";
import { BiconomySmartAccountV2, DEFAULT_ENTRYPOINT_ADDRESS } from "@biconomy/account";
import { ECDSAOwnershipValidationModule, DEFAULT_ECDSA_OWNERSHIP_MODULE } from "@biconomy/modules";
import { ChainId } from "@biconomy/core-types";

async function createSmartAccount(signer: ethers.Signer) { // e.g. an ethers.js Wallet
  const bundler: IBundler = new Bundler({
    // get from biconomy dashboard https://dashboard.biconomy.io/
    bundlerUrl: "https://bundler.biconomy.io/api/v2/{chainId}/{apiKey}",
    chainId: ChainId.POLYGON_MUMBAI, // or any supported chain of your choice
    entryPointAddress: DEFAULT_ENTRYPOINT_ADDRESS,
  });

  const paymaster: IPaymaster = new BiconomyPaymaster({
    // get from biconomy dashboard https://dashboard.biconomy.io/
    paymasterUrl: "https://paymaster.biconomy.io/api/v1/{chainId}/{apiKey}",
  });

  // ECDSA module: the signer's key owns the smart account
  const module = await ECDSAOwnershipValidationModule.create({
    signer: signer,
    moduleAddress: DEFAULT_ECDSA_OWNERSHIP_MODULE,
  });

  const biconomyAccount = await BiconomySmartAccountV2.create({
    chainId: ChainId.POLYGON_MUMBAI, // or any chain of your choice
    bundler: bundler, // instance of bundler
    // paymaster: paymaster, // uncomment for the sponsored and ERC20 gas payment flows
    entryPointAddress: DEFAULT_ENTRYPOINT_ADDRESS, // entry point address for chain
    defaultValidationModule: module, // either ECDSA or Multi chain to start
    activeValidationModule: module, // either ECDSA or Multi chain to start
  });

  console.log(`Smart account address: ${await biconomyAccount.getAccountAddress()}`);
  return biconomyAccount;
}
```

To get the account, an ethers.js signer (here a `Wallet`) is used. Combined with the validation module, this function returns a unique smart account. The account doesn't need to be deployed yet; the function returns its logical representation, containing its address and signing capabilities.

### User operations (UserOps)

A userOp can consist of multiple transactions, each interacting with a different contract. E.g. one tx can approve ERC20 spending by a DeFi protocol and a second can call that protocol's deposit function. Each tx is defined by the usual params: `to`, `data` and `value`. Biconomy combines all txs into a single userOp and computes its hash. The hash is signed by the smart account's owner and submitted to the alternative mempool, from which it is picked up by a bundler. From the user's perspective, if the ECDSA scheme is used, the browser wallet pops up with a signature request. Alternatively, with Google passkey validation, a passkey prompt (e.g. fingerprint) pops up and the user only needs to confirm it. In the background, the signed userOp gets submitted and picked up by the bundler.

A really interesting feature is that a userOp can be multichain: it consists of multiple txs submitted to various chains, but signed with a single signature. Under the hood, a userOp is created separately for every chain, each userOpHash is calculated, and a Merkle tree is built from all userOpHashes. The Merkle root is then signed on behalf of the smart account. Each userOp is submitted together with its Merkle proof, and its userOpHash is validated against the signed Merkle root.
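The multichain flow above can be sketched as: build a Merkle tree over the per-chain userOp hashes, sign only the root, and ship each userOp with its proof. This is a generic binary Merkle tree for illustration only; SHA-256 and sorted-pair hashing are assumptions, and Biconomy's on-chain multichain module may differ in details (e.g. keccak256, leaf encoding). The per-chain hashes are hypothetical.

```typescript
import { createHash } from "crypto";

const h = (data: Buffer): Buffer => createHash("sha256").update(data).digest();

// Hash two nodes in sorted order so that a proof needs no left/right flags.
function hashPair(a: Buffer, b: Buffer): Buffer {
  return Buffer.compare(a, b) <= 0 ? h(Buffer.concat([a, b])) : h(Buffer.concat([b, a]));
}

// Build all tree levels; an odd node at the end of a level is promoted unchanged.
function buildLevels(leaves: Buffer[]): Buffer[][] {
  const levels: Buffer[][] = [leaves];
  while (levels[levels.length - 1].length > 1) {
    const prev = levels[levels.length - 1];
    const next: Buffer[] = [];
    for (let i = 0; i < prev.length; i += 2) {
      next.push(i + 1 < prev.length ? hashPair(prev[i], prev[i + 1]) : prev[i]);
    }
    levels.push(next);
  }
  return levels;
}

// Collect sibling hashes from the leaf up to the root.
function proofFor(levels: Buffer[][], index: number): Buffer[] {
  const proof: Buffer[] = [];
  for (let lvl = 0; lvl < levels.length - 1; lvl++) {
    const sibling = index ^ 1;
    if (sibling < levels[lvl].length) proof.push(levels[lvl][sibling]);
    index = Math.floor(index / 2);
  }
  return proof;
}

// What the validator on each chain does: fold the proof and compare with the signed root.
function verifyProof(leaf: Buffer, proof: Buffer[], root: Buffer): boolean {
  return proof.reduce((acc, sibling) => hashPair(acc, sibling), leaf).equals(root);
}

// Hypothetical per-chain userOp hashes (e.g. Polygon, Arbitrum, Base).
const userOpHashes = ["polygon-op", "arbitrum-op", "base-op"].map((s) => h(Buffer.from(s)));
const levels = buildLevels(userOpHashes);
const root = levels[levels.length - 1][0]; // the only value the owner signs
const proof = proofFor(levels, 1); // shipped together with the Arbitrum userOp
console.log(verifyProof(userOpHashes[1], proof, root)); // true
```

One signature over `root` therefore authorizes all three userOps, while each chain only needs its own userOp plus a short proof.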

In the following example, the smart account approves spending by a Compound V2 cToken and deposits into Compound V2, all with a single signature. `await biconomyAccount.sendUserOp(userOp)` signs the userOp, prompting the user with the scheme-specific signing flow on the frontend, and then submits it. In this case, the smart account is charged for gas in the native gas token.

```typescript
import { ethers } from "ethers";

// tokenAddress/tokenAbi (ERC20), cTokenAddress/cTokenAbi (Compound V2 cToken)
// and `provider` are assumed to be defined elsewhere.
async function getTxs(signer: ethers.Signer) {
  const amount = ethers.utils.parseUnits("1", 6); // 1 unit of a 6-decimal token (e.g. USDC)
  const token = new ethers.Contract(tokenAddress, tokenAbi, signer.provider);
  const cToken = new ethers.Contract(cTokenAddress, cTokenAbi, signer.provider);

  // tx 1: allow the cToken contract to pull `amount` of the underlying token
  const approveTxPrep = await token.populateTransaction.approve(cToken.address, amount);
  const approveTx = {
    to: approveTxPrep.to!,
    data: approveTxPrep.data!,
  };

  // tx 2: deposit into Compound V2 (mint cTokens)
  const mintTxPrep = await cToken.populateTransaction.mint(amount);
  const mintTx = {
    to: mintTxPrep.to!,
    data: mintTxPrep.data!,
  };

  return [approveTx, mintTx];
}

const signer = await provider.getSigner();
const biconomyAccount = await createSmartAccount(signer);
const txs = await getTxs(signer);

const userOp = await biconomyAccount.buildUserOp(txs); // userOp gets built from both txs
const userOpResponse = await biconomyAccount.sendUserOp(userOp); // userOp gets signed and submitted
console.log("userOpHash", userOpResponse.userOpHash);

const { receipt } = await userOpResponse.wait(1);
console.log("txHash", receipt.transactionHash);
```

### Paymaster

The paymaster is a Biconomy service that allows subsidizing the gas costs of txs. In the previous example, the smart account was charged for gas, which required it to hold a sufficient balance of the native coin (gas token). As this is not optimal, Biconomy offers 2 additional ways of paying for gas: sponsoring txs by the dApp and charging the smart account in an ERC20 token.

In either case, a paymaster instance needs to be set up through the Biconomy dashboard. It is a chain-specific URL with an embedded GUID that needs to be referenced when fetching the smart account. Each paymaster has an associated gas tank, which we would need to fill with the gas token of the associated chain. Through the dashboard, we can monitor the number of userOps and gas consumption. We also need to whitelist smart contracts and their functions: txs can only be sponsored if smart accounts interact with whitelisted functions of whitelisted smart contracts. The idea is to prevent abuse of the paymaster by limiting it to the protocol's smart contracts, but this is not optimal because, at the very least, we need to whitelist the `approve` function of every ERC20 token our smart contracts would interact with.
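The whitelisting rule boils down to checking every call inside a userOp against allowed (contract, function selector) pairs, which is why `approve` has to be listed for each ERC20 token separately. A minimal sketch of that check, as we understand the rule; the `Call` type, the addresses and the whitelist shape are hypothetical, while the selectors are the standard ones for `approve(address,uint256)` (`0x095ea7b3`) and Compound's `mint(uint256)` (`0xa0712d68`).

```typescript
// Hypothetical shape of a call inside a userOp.
interface Call {
  to: string;
  data: string; // 0x-prefixed calldata; the first 4 bytes are the function selector
}

// Whitelist: contract address (lowercase) -> allowed function selectors.
type Whitelist = Map<string, Set<string>>;

const selectorOf = (data: string): string => data.slice(0, 10).toLowerCase(); // "0x" + 8 hex chars

// A userOp is sponsorable only if *every* call hits a whitelisted function.
function isSponsorable(calls: Call[], whitelist: Whitelist): boolean {
  return calls.every((c) => whitelist.get(c.to.toLowerCase())?.has(selectorOf(c.data)) ?? false);
}

const APPROVE = "0x095ea7b3"; // approve(address,uint256)
const MINT = "0xa0712d68"; // mint(uint256) on a Compound V2 cToken

// Hypothetical addresses.
const usdc = "0x" + "aa".repeat(20);
const cUsdc = "0x" + "bb".repeat(20);
const whitelist: Whitelist = new Map([
  [usdc, new Set([APPROVE])], // approve must be whitelisted per token
  [cUsdc, new Set([MINT])],
]);

const calls: Call[] = [
  { to: usdc, data: APPROVE + "00".repeat(64) },
  { to: cUsdc, data: MINT + "00".repeat(32) },
];
console.log(isSponsorable(calls, whitelist)); // true
```

Approving any token not in the map (e.g. DAI) makes the whole userOp non-sponsorable, so the whitelist grows with every token the protocol touches.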

This is what the dashboard looks like:

#### Sponsored transactions

If we decide to sponsor users' transactions when they interact with our protocol, we would need to set up paymasters on every chain, fill their gas tanks, monitor their balances and automate refilling. We would also need to whitelist functions and smart contracts on every chain.
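Monitoring and refilling per-chain gas tanks could be automated with a periodic job. The sketch below only contains the decision logic: given the current tank balances, a minimum threshold and a target level per chain, it computes the top-ups. The `TankConfig` shape, thresholds and chain names are hypothetical; reading tank balances and actually depositing funds depend on what Biconomy exposes and are left out.

```typescript
// Hypothetical per-chain configuration; amounts are in the chain's native gas token.
interface TankConfig {
  chain: string;
  balance: number; // current gas tank balance
  min: number; // refill when the balance drops below this level
  target: number; // refill up to this level
}

interface TopUp {
  chain: string;
  amount: number;
}

// Return the deposits needed to bring every low tank back to its target.
function planTopUps(tanks: TankConfig[]): TopUp[] {
  return tanks
    .filter((t) => t.balance < t.min)
    .map((t) => ({ chain: t.chain, amount: t.target - t.balance }));
}

const plan = planTopUps([
  { chain: "polygon", balance: 3, min: 10, target: 50 },
  { chain: "arbitrum", balance: 0.2, min: 0.1, target: 0.5 },
]);
console.log(plan); // [ { chain: 'polygon', amount: 47 } ]
```

Keeping `min` well above the expected daily spend gives the job time to react before sponsored userOps start failing.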

When fetching smart accounts, we would need to specify the paymaster and add paymaster data to every userOp. When a userOp is submitted and the tx is executed, the bundler pays the gas fees, but takes an equivalent amount, increased by 10% (to be confirmed with Biconomy), from the gas tank.

This is how submitting a userOp would look:

```typescript
import { IHybridPaymaster, PaymasterMode, SponsorUserOperationDto } from "@biconomy/paymaster";

// the smart account must be created with `paymaster` set
const signer = await provider.getSigner();
const biconomyAccount = await createSmartAccount(signer);
const txs = await getTxs(signer);

const userOp = await biconomyAccount.buildUserOp(txs);

const paymaster = biconomyAccount.paymaster as IHybridPaymaster<SponsorUserOperationDto>;
const paymasterServiceData: SponsorUserOperationDto = {
  mode: PaymasterMode.SPONSORED,
  smartAccountInfo: {
    name: "BICONOMY",
    version: "2.0.0",
  },
};

// ask the paymaster service to sponsor the userOp; it returns paymasterAndData and gas limits
const paymasterAndDataResponse = await paymaster.getPaymasterAndData(userOp, paymasterServiceData);

userOp.paymasterAndData = paymasterAndDataResponse.paymasterAndData;
userOp.callGasLimit = paymasterAndDataResponse.callGasLimit;
userOp.verificationGasLimit = paymasterAndDataResponse.verificationGasLimit;
userOp.preVerificationGas = paymasterAndDataResponse.preVerificationGas;

const userOpResponse = await biconomyAccount.sendUserOp(userOp);
```

#### Transactions with ERC20 token gas payments

An alternative approach is to use Biconomy's token paymaster. In this case, we would not need to set up our own paymasters with their gas tanks; we would use Biconomy's paymaster instead. It is not clear whether we could develop our own token paymaster or whether we must use Biconomy's.

Biconomy's token paymaster supports a limited, chain-specific list of tokens for paying gas. When composing a userOp, we specify in which ERC20 token we would like to charge the smart account, and the token paymaster returns the amount of that token that will be spent. We need to make sure that the smart account has a sufficient balance of that token to pay that amount. Empirically, they seem to add 40% on top of the actual gas consumption. This is very convenient if the userOp includes a tx spending (e.g. swapping) one of the supported tokens, because we then only add the gas cost on top of it. E.g. if a user is swapping 100 USDC for ARB, we charge the smart account 100.1 USDC and that's all: no need for the gas token and no extra costs for us.
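The balance check described above is plain arithmetic in the token's smallest units. The sketch assumes USDC with 6 decimals and a fee quote already converted to token units (in the SDK, the quote comes from the paymaster's fee quote call); the helper names and the 0.1 USDC quote are hypothetical and mirror the 100 USDC swap example.

```typescript
const USDC_DECIMALS = 6;

// Convert a human-readable amount (e.g. "100.1") to integer base units.
function toUnits(amount: string, decimals: number): number {
  const [whole, frac = ""] = amount.split(".");
  return Number(whole + (frac + "0".repeat(decimals)).slice(0, decimals));
}

// The smart account must hold the swap amount plus the paymaster's fee quote.
function requiredBalance(swapAmount: number, feeQuote: number): number {
  return swapAmount + feeQuote;
}

const swap = toUnits("100", USDC_DECIMALS); // 100 USDC
const fee = toUnits("0.1", USDC_DECIMALS); // hypothetical quote, markup included
const balance = toUnits("100.05", USDC_DECIMALS);

const needed = requiredBalance(swap, fee);
console.log(needed, balance >= needed); // 100100000 false
```

Plain numbers are safe here for balances up to roughly 9 billion USDC; for 18-decimal tokens, `bigint` base units would be needed.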

Using the token paymaster when composing a userOp would look like this:

```typescript
import {
  IHybridPaymaster,
  PaymasterFeeQuote,
  PaymasterMode,
  SponsorUserOperationDto,
} from "@biconomy/paymaster";

const signer = await provider.getSigner();
const biconomyAccount = await createSmartAccount(signer); // created with `paymaster` set
const txs = await getTxs(signer);

const userOp = await biconomyAccount.buildUserOp(txs);
const paymaster = biconomyAccount.paymaster as IHybridPaymaster<SponsorUserOperationDto>;

// 1. ask the token paymaster how much of the listed token(s) the userOp would cost
const feeQuotesResponse = await paymaster.getPaymasterFeeQuotesOrData(userOp, {
  mode: PaymasterMode.ERC20,
  tokenList: ["0x9c3C9283D3e44854697Cd22D3Faa240Cfb032889"], // USDC
});
const feeQuotes = feeQuotesResponse.feeQuotes as PaymasterFeeQuote[];
const spender = feeQuotesResponse.tokenPaymasterAddress || "";
const usdcFeeQuotes = feeQuotes[0];

// 2. add the approval that lets the token paymaster collect the fee in USDC
const finalUserOp = await biconomyAccount.buildTokenPaymasterUserOp(userOp, {
  feeQuote: usdcFeeQuotes,
  spender: spender,
  maxApproval: false, // approve only what is needed, not unlimited
});

// 3. get paymasterAndData and gas limits for the final userOp
const paymasterServiceData = {
  mode: PaymasterMode.ERC20,
  feeTokenAddress: usdcFeeQuotes.tokenAddress,
  calculateGasLimits: true, // Always recommended and especially when using token paymaster
};
const paymasterAndDataWithLimits = await paymaster.getPaymasterAndData(finalUserOp, paymasterServiceData);

finalUserOp.paymasterAndData = paymasterAndDataWithLimits.paymasterAndData;
finalUserOp.callGasLimit = paymasterAndDataWithLimits.callGasLimit;
finalUserOp.verificationGasLimit = paymasterAndDataWithLimits.verificationGasLimit;
finalUserOp.preVerificationGas = paymasterAndDataWithLimits.preVerificationGas;

const userOpResponse = await biconomyAccount.sendUserOp(finalUserOp);
```

### Challenges

1. **Limited number of ERC20 payment tokens:** for every chain, Biconomy lists the ERC20 tokens that can be used to pay for gas. This is not optimal UX, as we would ideally want users to be able to swap any token into any other token. If the selected token is not on the list of vetted gas tokens, the user would need a balance both in the token being swapped and in a token for paying gas. It is understandable that the selection of gas tokens is limited by the DEX liquidity available on a specific chain and that not every token can be used to pay for gas, but ideally we would want great flexibility in choosing gas tokens.

Also, we need to explore whether Biconomy plans to allow custom token paymasters, so that we could set up our own.

2. **Biconomy fees**: The business model of Biconomy and its bundlers is not transparent enough and should be checked with Biconomy. We also need to check how decentralized bundlers are, i.e. whether anybody can be a bundler, which is an important aspect in terms of resilience and service availability.

What I deduced empirically is that the amount charged for gas is higher than the gas actually spent. When smart accounts paid for gas in the gas token, they were charged 70% more than what was spent. In sponsored mode, our gas tank was charged 10% more, and when paying in ERC20 tokens, the smart account was charged 40% more. These numbers should be checked with Biconomy.
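Percentages like these can be reproduced per userOp from two numbers: the gas cost actually spent (gas used times effective gas price, both from the receipt) and the amount the payer was charged. A minimal sketch with hypothetical values in gwei, chosen to mirror the sponsored and ERC20 observations above:

```typescript
// Premium charged on top of the gas actually spent, in percent.
function markupPercent(actualCost: number, charged: number): number {
  return ((charged - actualCost) / actualCost) * 100;
}

// Actual cost = gas used * effective gas price (both read from the transaction receipt).
function actualCostGwei(gasUsed: number, gasPriceGwei: number): number {
  return gasUsed * gasPriceGwei;
}

const actual = actualCostGwei(200_000, 30); // hypothetical: 6,000,000 gwei
console.log(markupPercent(actual, 6_600_000).toFixed(1)); // 10.0 (sponsored mode)
console.log(markupPercent(actual, 8_400_000).toFixed(1)); // 40.0 (ERC20 mode)
```

For ERC20 payments, the charged token amount has to be converted to the gas token at the same moment's price before comparing, otherwise price moves distort the markup.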

Generally, we would like to keep extra costs low, so we can take our own margin.

3. **Bundler currently only on Testnet**: It seems that the Biconomy bundler is currently only available on testnets. We need to confirm with Biconomy whether this is true and, if so, when we can expect their services on mainnet.

4. **Potential misuse of paymaster**: as the userOp is composed on the frontend, the paymaster URL (with its GUID) can be easily inspected. Another dApp could then use our paymaster to sponsor txs of users interacting with their protocol. This is partially limited by whitelisting the smart contracts for which we sponsor txs, but as mentioned, we also need to whitelist ERC20 token interactions, as they will be part of typical userOps.

A potential mitigation is to compose userOps in a backend service and hand them to the frontend app for signing.

5. **UserOps are not transparent enough**: the whole process of signing, inspecting and verifying what was executed is not user-friendly. Users actually sign the userOp hash and have no idea which contract interactions are included in the userOp. If they sign through a browser wallet, they only see the userOp hash being signed, so a phishing site could easily trick them into signing a userOp containing malicious interactions. The same problem applies to signing with a Google passkey.

Additionally, the submitted tx is actually a tx from the bundler EOA to the ERC-4337 entry point contract. This is an [example of such a transaction](https://mumbai.polygonscan.com/tx/0x0d8c01bb7e2fdb778b048177891bfba0f43bda00f261dbe5a1fa195c417daa2a). It is hard for users to understand what happened, because the calldata is difficult to read. The only relevant parts are the emitted events, but they can also be faked.

6. **Limited interoperability**: one benefit of the way DeFi currently works is that a wallet holding funds and the gas token on a specific chain can interact with any protocol on that chain. With smart accounts, this is quite limited. E.g. if we set up a smart account for a user, they can't use it to interact with existing DeFi protocols such as Radiant or GMX unless (a) they withdraw funds to an EOA or (b) we (or somebody else) provide an interface for composing userOps that interact with these protocols. On one hand this gives us great leverage, but on the other it limits what users can do.

7. **Lack of support for non-EVM chains**: Biconomy doesn't work with non-EVM chains such as Solana. We need to explore further whether they plan to support them and, if not (which is most likely), how to tackle account abstraction on these chains.

### Integration with DeFi.app

None of the listed challenges is a showstopper for integrating with Biconomy (except for bundlers being testnet-only), and it would make sense to integrate Biconomy.

We would show users their smart account address (the same for all EVM chains) and allow them to deposit to it (from a CEX, an EOA or through fiat on-ramp services). When displaying balances, we would need to aggregate them across all supported EVM chains.
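Because the address is the same on every chain, the displayed balance is a per-token sum over chains. A sketch of the aggregation step, assuming balances were already fetched per chain (e.g. via RPC `balanceOf` calls, not shown). The data shape is hypothetical, and keying by symbol is a simplification: the same symbol can map to different contracts (e.g. bridged vs native USDC).

```typescript
// Hypothetical balances of one smart account: chain -> token symbol -> amount.
type ChainBalances = Record<string, Record<string, number>>;

// Sum every token's balance across all chains.
function aggregate(balances: ChainBalances): Record<string, number> {
  const total: Record<string, number> = {};
  for (const tokens of Object.values(balances)) {
    for (const [token, amount] of Object.entries(tokens)) {
      total[token] = (total[token] ?? 0) + amount;
    }
  }
  return total;
}

const totals = aggregate({
  polygon: { USDC: 120, MATIC: 3 },
  arbitrum: { USDC: 30, ETH: 0.05 },
  base: { ETH: 0.1 },
});
console.log(totals);
```

Production code should sum integer base units (`bigint`) rather than floats, since values like `0.05 + 0.1` are not exact in floating point.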

Swaps (and any other DeFi protocol interactions) would happen through userOps signed using one of the supported signing schemes (e.g. Google passkey or browser wallet). We would use sponsored transactions, meaning we would pay for gas on behalf of users.

Every swap would also include our fee, which is passed to our treasury and should be enough to cover gas costs plus our margin. We can set the fee as a percentage, but also with a minimal amount. This opens a great **use case for our token**: if users hold our token in their smart account, they can opt in to pay the fee in our token, in which case we can offer a fee discount of 20%-50%. If we launch DeFi.app without a token, hinting at an airdrop could help us build a substantial user base before the token launch.
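The fee rule described above (a percentage with a minimum, discounted when paid in our token) can be written down as follows. The 0.3% rate, $0.50 minimum and 30% discount are hypothetical placeholders within the discussed ranges, not decided parameters; amounts are in USD cents to avoid floating-point error.

```typescript
interface FeeParams {
  rateBps: number; // fee rate in basis points (30 = 0.3%), hypothetical
  minFeeCents: number; // minimal fee, hypothetical
  tokenDiscountBps: number; // discount when paying in our token (2000-5000 = 20%-50%)
}

// Fee in cents for a swap of `amountCents`, optionally paid in our token.
function swapFee(amountCents: number, payInOurToken: boolean, p: FeeParams): number {
  const base = Math.max(Math.floor((amountCents * p.rateBps) / 10_000), p.minFeeCents);
  return payInOurToken ? Math.floor((base * (10_000 - p.tokenDiscountBps)) / 10_000) : base;
}

const params: FeeParams = { rateBps: 30, minFeeCents: 50, tokenDiscountBps: 3_000 };
console.log(swapFee(10_000, false, params)); // 50: a $100 swap hits the $0.50 minimum
console.log(swapFee(1_000_000, false, params)); // 3000: a $10,000 swap pays 0.3% = $30
console.log(swapFee(1_000_000, true, params)); // 2100: 30% off when paid in our token
```

Here the discount is applied after the minimum, so a discounted small swap can fall below the minimum; whether that is acceptable is a product decision.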

### Meeting notes

- Bundlers are available on the majority of mainnet EVM chains, but access needs to be requested. They are free of charge

- Only the bundler specified by the smart account can validate the userOp and submit the tx. This creates a hard dependency on Biconomy and the availability of their off-chain service, which listens to the userOp mempool

- They have a plan to provide bundler as a service, meaning that others can validate userOps as well, but not in the near future

- The combination of PK and bundler always results in the same smart account, which means that any other protocol integrated with Biconomy gives control over the same smart account and the funds it holds, partially solving the interoperability issue

- They plan to additionally protect the paymaster from abuse through daily limits and [webhooks](https://docs.biconomy.io/Paymaster/api/webhookapi)

- They don't plan to allow token paymaster as a service in near future, but they would be flexible on extending the list of supported ERC20 tokens

- UserOps can be inspected through [Jiffyscan](https://jiffyscan.xyz/)

- Biconomy's business model revolves around taking fees on top of gas spent. They charge 7% - 12% on top of estimated gas costs

- There is no way to avoid EOAs. UserOps need to be signed with ECDSA-type signatures, meaning that a PK needs to be involved, which raises the question of PK custody

- They are expanding number of chains they support, but they don't plan to support non-EVM blockchains

## ZeroDev

ZeroDev is an AA provider built on top of StackUp. They fully implement account abstraction in the same way as Biconomy. In short:

- ZeroDev integrates well with Dynamic, Privy and many other auth providers

- smart accounts, called Kernel accounts, are deployed with the first user operation

- ZeroDev uses ECDSA validation by default, meaning that all kernel accounts are controlled by EOAs

- they hint at support for passkeys in the future

- userOps can consist of multiple txs, but only on a single chain

- the paymaster allows kernel accounts to pay gas themselves, but also supports sponsored and ERC20 token gas payments

ZeroDev supports only the following networks: Ethereum, Arbitrum, Optimism, Polygon, Avalanche C-Chain, BNB, opBNB, Base and Linea.

Example of integration with Privy:

```typescript
import { createPublicClient, http } from "viem";
import { polygonMumbai } from "viem/chains";
import { bundlerActions, providerToSmartAccountSigner } from "permissionless";
import { signerToEcdsaValidator } from "@zerodev/ecdsa-validator";
import {
  createKernelAccount,
  createKernelAccountClient,
  createZeroDevPaymasterClient,
} from "@zerodev/sdk";

// privyProvider: EIP-1193 provider of the Privy embedded wallet, obtained elsewhere
const signer = await providerToSmartAccountSigner(privyProvider);

const publicClient = createPublicClient({
  transport: http("https://rpc-mumbai.maticvigil.com/"), // use your RPC provider or bundler
});

// ECDSA validator: the Privy EOA owns the kernel account
const ecdsaValidator = await signerToEcdsaValidator(publicClient, {
  signer,
});

const account = await createKernelAccount(publicClient, {
  plugins: {
    sudo: ecdsaValidator,
  },
});

const kernelClient = createKernelAccountClient({
  account,
  chain: polygonMumbai,
  transport: http("https://rpc.zerodev.app/api/v2/bundler/6c120895-f15c-4a12-a829-fc370c5d529a"),
  // sponsor every userOp through the ZeroDev paymaster
  sponsorUserOperation: async ({ userOperation }) => {
    const zerodevPaymaster = createZeroDevPaymasterClient({
      chain: polygonMumbai,
      transport: http("https://rpc.zerodev.app/api/v2/paymaster/6c120895-f15c-4a12-a829-fc370c5d529a"),
    });
    return zerodevPaymaster.sponsorUserOperation({
      userOperation,
    });
  },
});

console.log("Kernel account: " + kernelClient.account.address);

const txs = await getTxs(); // assumed to return [{ to, value, data }, ...] with bigint values
const userOperation = {
  callData: await kernelClient.account.encodeCallData(txs),
};
console.log(userOperation);

const userOpHash = await kernelClient.sendUserOperation({
  userOperation,
});
console.log("UserOp hash:", userOpHash);

const bundlerClient = kernelClient.extend(bundlerActions);
const res = await bundlerClient.waitForUserOperationReceipt({
  hash: userOpHash,
});
console.log("UserOp completed with hash: " + res.receipt.transactionHash);
```

Interestingly, I was able to "hack" ZeroDev kernel accounts into being submitted to Biconomy's bundler and vice versa.

What was not clear is how we would manage the paymaster's gas tank for sponsored transactions.

A very interesting feature they offer is Guardian & Recovery. When a kernel account is created and deployed, one EOA is assigned that can sign on behalf of the kernel account (the same as with Biconomy). However, ZeroDev also allows setting a guardian wallet for that kernel account. Its primary purpose is to help recover access to the kernel account if access to the EOA is lost. Besides recovery, additional function calls can be configured that the guardian can execute on behalf of the kernel account.

Recovery can be executed through code or through [their UI](https://recovery.zerodev.app/). The guardian essentially selects the kernel account and changes the EOA that controls it. This is a very powerful feature that could help us minimize dependency on the authentication provider and its PK management, and could help users recover funds in case of a black swan event at the authentication provider.
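The guardian mechanism can be modelled as an account with two roles: the owner signs userOps, and the guardian may replace the owner (plus run any extra actions it is explicitly granted). The class below is a hypothetical off-chain model of that permission logic, not ZeroDev's contract code or SDK; the EOA names are placeholders.

```typescript
// Hypothetical model of a kernel account with a recovery guardian.
class GuardedAccount {
  constructor(
    private owner: string,
    private readonly guardian: string,
    private readonly guardianActions: Set<string> = new Set(), // extra calls the guardian may run
  ) {}

  get currentOwner(): string {
    return this.owner;
  }

  // The owner can authorize anything; the guardian only its granted actions.
  canExecute(signer: string, action: string): boolean {
    if (signer === this.owner) return true;
    return signer === this.guardian && this.guardianActions.has(action);
  }

  // Recovery: the guardian swaps in a new owner EOA, e.g. after the old key is lost.
  recover(signer: string, newOwner: string): void {
    if (signer !== this.guardian) throw new Error("only the guardian can recover");
    this.owner = newOwner;
  }
}

const account = new GuardedAccount("0xLostEoa", "0xGuardian");
account.recover("0xGuardian", "0xNewEoa");
console.log(account.currentOwner); // 0xNewEoa
console.log(account.canExecute("0xLostEoa", "swap")); // false
```

If we held the guardian role ourselves, a user could regain access even if the authentication provider lost their key.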

Besides kernel accounts validated by signatures of a single EOA, they allow creating kernel accounts as multisig accounts, where multiple EOAs can sign, each with a different voting power, and a minimal power threshold is required to execute a userOp. Not very useful for DeFi.app, but worth keeping in mind.
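The weighted multisig rule reduces to summing the voting power of distinct valid signers and comparing it with the threshold. A sketch of that check with hypothetical signer names and weights; signature verification itself is assumed to have happened already, and this is not ZeroDev's plugin code.

```typescript
// Hypothetical weighted multisig configuration.
interface MultisigConfig {
  weights: Map<string, number>; // signer -> voting power
  threshold: number; // minimal total power required to execute a userOp
}

// `validSigners` are the EOAs whose signatures were already verified.
function meetsThreshold(validSigners: string[], cfg: MultisigConfig): boolean {
  let power = 0;
  // a Set ensures the same signer is never counted twice; unknown signers add nothing
  new Set(validSigners).forEach((s) => {
    power += cfg.weights.get(s) ?? 0;
  });
  return power >= cfg.threshold;
}

const cfg: MultisigConfig = {
  weights: new Map([["alice", 2], ["bob", 1], ["carol", 1]]),
  threshold: 3,
};
console.log(meetsThreshold(["alice", "bob"], cfg)); // true (2 + 1)
console.log(meetsThreshold(["bob", "carol"], cfg)); // false (1 + 1)
```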

Finally, they plan to support passkeys, but this still needs clarification; session keys are supported in the same way Biconomy uses them.

Regarding the pricing model, they have different packages. The biggest disclosed package costs $399/month and includes $5000 of monthly gas sponsorships, a 6% gas sponsorship premium, 100k userOps, and $0.01 per additional userOp. What exactly is meant by $5000 of monthly gas sponsorships still needs to be clarified.
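For budgeting, the disclosed package can be turned into a monthly estimate. Since the $5000 figure is still unclear, the sketch assumes it is only a cap on sponsored gas (not included credit), that we pay all sponsored gas plus the 6% premium, and that overage applies above 100k userOps; the function name and volumes are hypothetical. Amounts are in cents.

```typescript
// Assumed reading of ZeroDev's $399/month package (to be confirmed with ZeroDev), in cents.
const BASE_CENTS = 39_900;
const INCLUDED_USER_OPS = 100_000;
const EXTRA_USER_OP_CENTS = 1; // $0.01 per additional userOp
const PREMIUM_PERCENT = 6; // gas sponsorship premium

// Monthly cost = base fee + userOp overage + sponsored gas with the premium on top.
function zeroDevMonthlyCostCents(userOps: number, sponsoredGasCents: number): number {
  const extraOps = Math.max(0, userOps - INCLUDED_USER_OPS);
  const gasWithPremium = Math.round((sponsoredGasCents * (100 + PREMIUM_PERCENT)) / 100);
  return BASE_CENTS + extraOps * EXTRA_USER_OP_CENTS + gasWithPremium;
}

// Hypothetical month: 150k userOps and $2,000 of sponsored gas.
console.log(zeroDevMonthlyCostCents(150_000, 200_000) / 100); // 3019 = 399 + 500 + 2120
```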

Questions:

1. How does the gas tank work for sponsored transactions?

2. When and how do they plan to support passkeys? Will it use EIP-7212?

3. What does "$5000 monthly gas sponsorships" mean in the pricing?

## Pimlico

Pimlico offers 3 products:

- permissionless.js, which ZeroDev already uses to construct and retrieve kernel accounts and to communicate with the kernel account client in charge of signing and submitting userOps.

- a bundler & paymaster that can replace ZeroDev's bundler and paymaster. We connect a debit/credit card to the paymaster; Pimlico pays the gas costs, adds a 10% premium and charges our card.

## ZeroDev vs Biconomy

- Biconomy supports multichain userOps => 1 signature for multiple userOps on different chains

- ZeroDev accounts can have guardians that can help recover accounts

- they are the top two AA providers, outperforming all others by market share, as can be seen on [BundleBear](https://www.bundlebear.com/factories/all); ZeroDev has deployed 1.5M accounts vs. Biconomy's 1M

- ZeroDev's bundle txs are slightly more gas-efficient

- ZeroDev has larger number of supported tokens for token gas payments, which can be seen [here](https://docs.stackup.sh/docs/supported-erc-20-tokens) vs. [Biconomy list](https://docs.biconomy.io/Paymaster/supportedNetworks#erc20-gas-payments)

- ZeroDev charges an upfront fee for its services plus 6% on top of the gas spent. Biconomy, on the other hand, is free, but charges 7%-12% on top of the gas spent.

- Biconomy's bundler proved to be slightly faster than ZeroDev. Biconomy team also pointed out their infra resilience and speed (<5sec vs circa 9sec of the competition).

# User management providers

## Dynamic

Dynamic is an authentication, authorization and user management product that allows embedding a widget in a React application that handles creating and connecting wallets and creating and managing accounts. They also expose functions for signing transactions with connected wallets. They have built a network of integrations that allows us to easily plug in multiple account abstraction providers, authentication providers and fiat on-ramp providers. Finally, they offer us, as their client, a nice-looking dashboard where we can see all users.

For authentication, users can choose whether to authenticate with their wallet, with e-mail, or with socials. Currently, Google, Twitter, Discord, Github, Twitch, Apple and Facebook are supported, which is somewhat inferior to Privy, which offers Farcaster, SMS and TikTok. When a user logs in, a JWT is created that we can use for permissioned actions on our side. On top of authentication with the primary factor, MFA can be set as required for signing txs. MFA can be performed using one-time codes sent by e-mail or with passkeys.

When it comes to wallets, Dynamic offers embedded wallets, Magic wallets, Blocto wallets and browser wallets. Embedded wallets are created automatically for users through the Turnkey integration. Turnkey stores private keys in a KMS and exposes an API for signing and exporting PKs. The transaction/message to be signed is sent to Turnkey via the API; Turnkey loads the private key into a Nitro secure enclave, signs the transaction/message and returns the signature via the API. If users want to see their PK, they need to set up MFA and authenticate against it; the PK is then shown in a Turnkey iframe, so Dynamic never gets access to PKs. Even though this is reasonably secure, it introduces a big dependency on Turnkey. In case of a black swan event on Turnkey's side, users may permanently lose access to their wallets.

Dynamic offers some nice fine-tuning through its dashboard. We can choose whether users may have multiple linked accounts, whether users can exist without a wallet, whether wallets can be transferred between users, how authentication methods are sorted and prioritized, etc. For registration, we can add additional fields and make them required or optional: alias, first name, last name, phone number, job title, t-shirt size and username. We can also allow users to link some of their social accounts. We can track user IP addresses through Dynamic if we want, and we can place hCaptcha on their widget. Finally, they are rolling out a React Native SDK soon.

Regarding account abstraction, Dynamic works with ZeroDev, Biconomy, Pimlico and Alchemy; however, they optimize the developer experience for ZeroDev. ZeroDev is part of their SDK and can be used to get a smart account signer without the need for permissionless.js. From there, ZeroDev can be used like any other AA provider. For fiat on-ramp, they have an out-of-the-box integration with Banxa. A very interesting integration they have is with Chainalysis, which we can use to screen wallet addresses.

Where Dynamic stands out is the number of chains it supports. It supports embedded and external wallets for all EVM chains and Solana, and it also supports connecting external wallets on Flow, Algorand, Starknet and Cosmos.

Dynamic has very information-rich dashboard that we can use to track and manage users and that contains all captured information:

When it comes to [pricing](https://www.dynamic.xyz/pricing), the highest disclosed package costs $99/month and includes 2000 MAUs, with $0.05 for every additional MAU. For comparison with other providers, 10,000 MAUs would cost us about $500/month.
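The 10,000-MAU figure follows from the disclosed package: the 2,000 included MAUs plus 8,000 extra at $0.05 each. A quick check of that arithmetic (the helper name is ours; amounts in cents to keep the result exact):

```typescript
// Dynamic's highest disclosed package, in cents to avoid floating-point error.
const BASE_CENTS = 9_900; // $99/month
const INCLUDED_MAUS = 2_000;
const EXTRA_MAU_CENTS = 5; // $0.05 per additional MAU

function dynamicMonthlyCostUsd(maus: number): number {
  const extra = Math.max(0, maus - INCLUDED_MAUS);
  return (BASE_CENTS + extra * EXTRA_MAU_CENTS) / 100;
}

console.log(dynamicMonthlyCostUsd(10_000)); // 499
```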

## Privy

Privy is similar to Dynamic in that it provides authentication, authorization and user management. It is used in a similar way: it embeds a widget in a React app that handles creating and connecting wallets and creating and managing accounts.

For authentication, users can choose whether to authenticate with their wallet, with e-mail, SMS or with socials. Currently, Google, Twitter, Discord, Github, TikTok, LinkedIn, Apple and Farcaster are supported. Compared with Dynamic, Privy is missing Facebook and Twitch, but has SMS, TikTok, LinkedIn and Farcaster. In my opinion, both are completely acceptable (SMS, TikTok and Farcaster may give Privy a slight edge over Dynamic). We can configure which of these options are supported. When a user logs in, a JWT is issued that can be used for permissioned actions.

Users can use external wallets to sign transactions, but Privy also supports embedded wallets. Embedded wallets are EOAs created by Privy, allowing seamless wallet creation for web2 users. This can happen when the account is created or explicitly, when we need it in code. They have a superior approach to private key management: the key is split into 3 shares using the [Shamir's secret sharing](https://en.wikipedia.org/wiki/Shamir%27s_secret_sharing) algorithm, and 2 of the 3 shares are required to reconstruct it. Creation and reconstruction of the PK happen in a secure enclave that has no storage. The enclave exposes an iframe to which shares can be passed to reconstruct the PK and sign transactions. These shares are:

- **Device share**, stored in the browser's local storage of the iframe site (auth.privy.io)

- **Auth share**, stored on Privy's side and accessible with the JWT received upon authentication

- **Recovery share**, whose location is not stated explicitly, but which is used to recover the PK. It is stored encrypted, and either the user's password or Privy's password can be chosen for the encryption. Applying the password decrypts the share. If the user's password is used, encryption/decryption happens on the local device only; if Privy encryption is used, the user authenticates with the JWT to a Privy service that performs the encryption/decryption. The first option gives the user more control, but if they forget their password, they can lose access to their PK.

To sign a transaction, the device and auth shares are used. If a new browser or device is used, the recovery and auth shares are used to reconstruct the PK and compute a device share that gets stored in the user's local storage. The whole Shamir secret-sharing design is open-sourced and audited, making it far superior to Dynamic's approach. Theoretically, if Privy disappeared, users should be able to reconstruct their PK and export it. However, this is only theoretical: the recovery share is stored on Privy's side (in encrypted form), so in a severe black swan event users wouldn't be able to retrieve the recovery share to decrypt it. When it comes to UI, we can use their confirmation UI for sending txs, implement a custom one, or send txs entirely in the background.
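The 2-of-3 scheme can be illustrated with a textbook Shamir split over a prime field: the key is the constant term of a random degree-1 polynomial, each share is a point on that line, and any two points recover the constant term by Lagrange interpolation at zero. This is a didactic sketch, not Privy's open-sourced implementation; the field (secp256k1's prime), the share indices 1 to 3 and the mapping to device/auth/recovery shares are assumptions.

```typescript
import { randomBytes } from "crypto";

// secp256k1 field prime: large enough to hold a 256-bit private key.
const P = 2n ** 256n - 2n ** 32n - 977n;
const mod = (a: bigint): bigint => ((a % P) + P) % P;

// Modular exponentiation; used for inverses via Fermat's little theorem.
function powMod(base: bigint, exp: bigint): bigint {
  let result = 1n;
  base = mod(base);
  while (exp > 0n) {
    if ((exp & 1n) === 1n) result = mod(result * base);
    base = mod(base * base);
    exp >>= 1n;
  }
  return result;
}
const inv = (a: bigint): bigint => powMod(a, P - 2n);

type Share = { x: bigint; y: bigint };

// Split: f(x) = secret + a1 * x with random a1; shares are f(1), f(2), f(3).
function split(secret: bigint): Share[] {
  const a1 = mod(BigInt("0x" + randomBytes(32).toString("hex")));
  return [1n, 2n, 3n].map((x) => ({ x, y: mod(secret + a1 * x) }));
}

// Lagrange interpolation at x = 0 from any two shares.
function combine([s1, s2]: [Share, Share]): bigint {
  const l1 = mod(s2.x * inv(s2.x - s1.x)); // l1(0) = x2 / (x2 - x1)
  const l2 = mod(s1.x * inv(s1.x - s2.x)); // l2(0) = x1 / (x1 - x2)
  return mod(s1.y * l1 + s2.y * l2);
}

const privateKey = BigInt("0x" + "1f".repeat(32)); // hypothetical key, never hard-code real ones
const [device, auth, recovery] = split(privateKey);
console.log(combine([device, auth]) === privateKey); // true: normal signing
console.log(combine([recovery, auth]) === privateKey); // true: new device
```

A single share is just one point on a random line, so on its own it reveals nothing about the key; this is why neither Privy (auth share) nor the browser (device share) alone can sign.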

When the auth share is retrieved, i.e. when signing messages and transactions or exporting the PK, we can enable MFA and make it required. MFA can be implemented via SMS or TOTP (authenticator apps), and they plan to support passkeys and Touch/Face ID.

Privy supports integration with the same AA providers as Dynamic: Biconomy, ZeroDev, Alchemy and Pimlico. They plan to support Safe multisig transactions as well. Biconomy integration was tested and it went really smoothly. However, unlike Dynamic, Privy doesn't support non-EVM chains such as Solana or Flow which is a major disadvantage. They say they plan to support Solana and Cosmos in H1 2024.

Privy plans to support React Native and mobile development soon.

They have out-of-the-box integration with MoonPay for fiat on-ramp.

They have a user management page where we could track all users:

Finally, Privy has [pricing packages](https://www.privy.io/pricing) publicly disclosed for up to 10k MAUs. They would charge $300/month for that tier, making them the cheapest option.

## Magic Link

Magic Link is another authentication provider, probably the most established one. They have $80M in funding and 30M users, and they are used by big names such as Salesforce, Starbucks and 7-Eleven. Even WalletConnect will use them for the e-mail flow it is releasing.

Magic allows account creation and authentication using e-mail, SMS, social network accounts and passkeys (WebAuthn). They pitch passwordless accounts, as none of these methods require users to remember a password. E-mail authentication sends an e-mail with a code for every login. Regarding socials, they support Google, Facebook, Twitter, Apple, Discord, Github, Linkedin, Bitbucket, Twitch, Microsoft and Gitlab. Compared to Privy, they miss TikTok, Apple and Farcaster, and compared to Dynamic, they miss Apple. On the other hand, they have Bitbucket and Gitlab. In my opinion, these differences are not very important, though I see TikTok as a great benefit of Privy. On top of the primary authentication methods, Magic Link supports MFA via authenticator apps.

One big difference from Dynamic & Privy is that Magic Link doesn't support authentication with external wallets. While users can authenticate on Dynamic & Privy by connecting a browser wallet, Magic Link doesn't support this flow, making the UX somewhat harder for Web3 users. Existing users can connect external wallets, but they can't authenticate with them. It also seems they only support MetaMask and Coinbase Wallet, which is quite limiting.

Magic Link creates a wallet for every authenticated user. They distinguish between dedicated wallets and universal wallets, and they plan to retire universal wallets. In both cases an EOA is created and its PK is stored on Magic's side: the keys are kept in AWS KMS and attached to Cognito records. This is similar to how Dynamic operates with TurnKey: in case of a disaster on their side, the keys would become completely unrecoverable. The major difference between dedicated and universal wallets seems to be that a user with a universal wallet gets the same wallet whenever they authenticate to any app that uses Magic, so the wallet is shared across all apps, whereas a dedicated wallet is app-specific.

After the call, I received [the page about security](https://trust.magic.link/) from them, which shows the results of different audits and their compliance with various standards.

Integration is quite simple. A Magic object is initialized with the app's publishable (public) API key. From the Magic object, we get an EIP-1193 provider (`magic.rpcProvider`) that can be wrapped in an ethers Provider whose signer is the authenticated user. Provider and signer objects can be used with ethers.js, web3.js or any other package for blockchain interactions. Magic Link has SDKs for Web and React Native, but also for a variety of mobile and server-side languages (Ruby, Python, Go, Flutter, Swift...). This is an example of integration:

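```typescript
import { ethers } from 'ethers';
import { Magic } from 'magic-sdk';

// Initialize Magic with the app's publishable API key
const magic = new Magic('API_KEY');

// Sends 0.001 ETH from the wallet of a user who has already logged in with Magic
async function sendPayment(toAddress: string) {
  // Wrap Magic's EIP-1193 provider in an ethers v6 provider
  const provider = new ethers.BrowserProvider(magic.rpcProvider);
  const signer = await provider.getSigner();

  const txnParams = {
    to: toAddress,
    value: ethers.parseUnits('0.001', 'ether'),
  };

  const tx = await signer.sendTransaction(txnParams);
  console.log(tx);
  return tx;
}
```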

Besides EVM chains, Magic Link supports Solana, Bitcoin, Flow, Aptos, Algorand, Cosmos, ICON, Near, Polkadot, Tezos and Zilliqa. Magic supports integration with AA providers and can work with Alchemy, Biconomy and ZeroDev, and probably with the others as well.

Magic is also directly integrated with various fiat on-ramp providers. They specifically named PayPal, Sardine, Onramper and Stripe. We should examine how each of them works and what their fees are.

We would be able to see a list of all accounts, but with very little insight into them: I basically only see authentication-related information, not wallet-related information. The dashboard looks like this:

Finally, when it comes to pricing, the highest disclosed package is up to 5,000 MAUs. The first 1,000 users are free, users from 1,000 to 5,000 cost $0.05 each, and above 5,000 users each one costs $0.10. For reference, 10,000 users would cost $900/month.
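The $900 figure follows if, once usage exceeds 5,000 MAUs, every billable user (all but the free 1,000) is charged $0.10, i.e. 9,000 × $0.10. Applying the tiers marginally instead (4,000 × $0.05 + 5,000 × $0.10) would give $700. The sketch below computes both readings; which one Magic actually uses is an assumption to confirm with them, and the function names are hypothetical.

```typescript
// Two readings of the quoted tiers; amounts are computed in cents to avoid float error
const FREE_MAUS = 1000;
const TIER_1_LIMIT = 5000;
const TIER_1_CENTS = 5; // $0.05 per user
const TIER_2_CENTS = 10; // $0.10 per user

// Volume reading: the tier reached sets the price for every billable user
function volumePriceUsd(maus: number): number {
  const billable = Math.max(maus - FREE_MAUS, 0);
  const rate = maus > TIER_1_LIMIT ? TIER_2_CENTS : TIER_1_CENTS;
  return (billable * rate) / 100;
}

// Graduated reading: each tier only prices the users that fall inside it
function graduatedPriceUsd(maus: number): number {
  const tier1 = Math.min(Math.max(maus - FREE_MAUS, 0), TIER_1_LIMIT - FREE_MAUS);
  const tier2 = Math.max(maus - TIER_1_LIMIT, 0);
  return (tier1 * TIER_1_CENTS + tier2 * TIER_2_CENTS) / 100;
}
```

Both readings agree up to 5,000 MAUs and only diverge above it; either way, Magic stays above Privy's $300/month at 10k MAUs.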

Questions:

1. How do they differ from Privy & Dynamic?

2. How will universal wallets be used as part of dedicated wallets?

3. Are 3rd-party wallets other than MetaMask and Coinbase Wallet supported?

4. PK management & disaster recovery: where does signing happen?

5. Can an account be created using a 3rd-party wallet?

Conclusion:

Magic Link seems to be inferior to Dynamic and Privy. The reasons are:

- Privy has the best PK management with Shamir's secret sharing

- Magic Link doesn't allow authentication with wallets

- Limited support for browser wallets

- Limited information about users on the dashboard

- Missing Apple & TikTok for social log-in

- The most expensive ($900/month for 10k users)

On the positive side, it supports the largest number of non-EVM chains and is the most battle-tested.

## Dynamic vs Privy

- Privy made some attempts to decrease dependency on their services for PK management, using Shamir's secret sharing

- Privy supports a few more potentially relevant authentication options: TikTok, Farcaster and SMS

- Dynamic supports Solana embedded wallets, while they are on Privy's roadmap

- Dynamic supports bigger number of non-EVM chains for external wallets

- Dynamic allows more fine-tuned configuration and more extensive information capture

- Dynamic supports passkey MFA, while Privy has that only on the roadmap

- Privy has an integration with MoonPay, Dynamic with Banxa. Magic seems the strongest in this respect.

- Dynamic is integrated with Chainalysis

- Privy seems cheaper, but enterprise packages should be discussed

# X-chain swaps

The main suggestion would be to select one chain as the primary chain, at a good intersection between high liquidity and low gas costs. Arbitrum could be a good candidate, but a lot of benchmarking still needs to be done. If there is sufficient liquidity on the primary chain, the majority of swaps can actually be performed without bridging to other chains. The only thing we would need for these same-chain swaps is a DEX aggregator.

For the minority of swaps, we need a fast and inexpensive solution for moving liquidity between chains. If a user wants to swap asset A on their primary chain X to asset B, which doesn't have sufficient liquidity on chain X, then we need to find a chain Y with sufficient liquidity of B. In most scenarios, we would then move asset A from chain X to chain Y and swap A -> B on chain Y. In more extreme cases, where token A doesn't have liquidity on chain Y, we need to find an asset C with sufficient liquidity on both chains: on chain X we swap A -> C, move C from X to Y and swap C -> B on chain Y. For the purpose of analysis, however, we can treat this extreme scenario as the default one and the common scenario as its subset where A = C. This subset is, of course, more favorable as it avoids the A -> C swap, lowering overall slippage, gas costs and DEX fees. The problem we are solving is how to move C between chains X and Y at the lowest possible cost and latency.
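The routing decision described above can be sketched as a small planner. The `hasLiquidity` callback is a hypothetical stand-in (in practice it would be derived from DEX aggregator quotes), the `Leg` shape is illustrative, and chain and token names are just strings here.

```typescript
// One step of a cross-chain swap plan (hypothetical shape)
type Leg =
  | { kind: 'swap'; chain: string; from: string; to: string }
  | { kind: 'bridge'; asset: string; from: string; to: string };

// Hypothetical liquidity oracle: is `asset` liquid enough on `chain`?
type HasLiquidity = (asset: string, chain: string) => boolean;

// Plan A(X) -> B, using C as the intermediary asset only when A is not liquid on Y
function planSwap(
  a: string,
  b: string,
  x: string,
  candidateChains: string[],
  c: string,
  hasLiquidity: HasLiquidity,
): Leg[] {
  // Same-chain case: B is liquid on the primary chain, so one aggregator swap suffices
  if (hasLiquidity(b, x)) return [{ kind: 'swap', chain: x, from: a, to: b }];

  // Otherwise find a destination chain Y where B is liquid
  const y = candidateChains.find((chain) => chain !== x && hasLiquidity(b, chain));
  if (y === undefined) throw new Error(`no chain with sufficient liquidity for ${b}`);

  // A = C subset: move A directly if it is liquid on Y, otherwise go through C
  const moved = hasLiquidity(a, y) ? a : c;
  const legs: Leg[] = [];
  if (moved !== a) legs.push({ kind: 'swap', chain: x, from: a, to: moved });
  legs.push({ kind: 'bridge', asset: moved, from: x, to: y });
  legs.push({ kind: 'swap', chain: y, from: moved, to: b });
  return legs;
}
```

The number of legs directly reflects the cost ordering in the text: one leg for same-chain swaps, two for the A = C subset, and three for the general A -> C -> B case.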

## Bridges

The default option for moving asset C between chains is one of the many bridges. However, the current bridge landscape is very limiting. Most "unofficial bridges" require liquidity providers to stake their assets in pools on every chain they operate on. When they detect a deposit of asset C on chain X, they release asset C on chain Y from these pools. To attract liquidity to their pools, they charge a substantial fee which they distribute to the liquidity providers, making bridging of asset C quite expensive. These bridges usually have relatively low latency, because their LPs take the risk of front-running chain X finality: they may release asset C on chain Y even though the tx that deposits C on chain X never ends up included in chain X. Compensating LPs for this risk is another reason why bridging fees are so substantial.

On the other hand, there are "official bridges", run by the chains themselves or by the issuers of asset C (e.g. Circle's CCTP bridge for USDC). These bridges are usually free or inexpensive, but very slow, because they rarely take on finality risk on chain X. Instead, before releasing funds on chain Y, they wait for enough block confirmations on L1 chains, for the challenge period to pass on optimistic L2 chains, or for verification of the ZK proof (and sufficient block confirmations) on ZK L2s.

A final limitation is that most of these bridges, especially "unofficial" ones, are heavily centralized, and whether the user receives asset C on chain Y depends on the bridge's willingness and ability to release funds.

Using these bridges directly introduces huge complexity on our side, as we need to choose between slow-but-cheap and fast-but-expensive bridges for every A(X) -> B(Y) pair and monitor the liquidity of C in bridge pools on chains X and Y. Luckily, **Li.Fi**, as a bridge aggregator, takes care of these complexities and gives us a variety of routes for A(X) -> B(Y) with cost and latency estimates. Users (end-users, or we as their integrators) can then decide which route to take: the cheapest, the fastest, the safest, or one at the intersection of these three extremes. In the majority of routes A = C, but some routes also introduce an intermediary asset C.
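Choosing among returned routes can be sketched as a scoring function. The `Route` shape is a hypothetical simplification, not Li.Fi's response schema. The "balanced" strategy prices latency with an assumed dollar cost per minute, and a "safest" strategy is left out because it would need a risk score this sketch does not model.

```typescript
// Hypothetical, simplified route; real aggregator responses carry much more detail
interface Route {
  id: string;
  feeUsd: number; // total estimated cost of the route
  durationSec: number; // estimated time until funds arrive on chain Y
}

type Strategy = 'cheapest' | 'fastest' | 'balanced';

// Lower score wins; 'balanced' converts latency into dollars at an assumed rate
function pickRoute(routes: Route[], strategy: Strategy, usdPerMinute = 0.5): Route {
  if (routes.length === 0) throw new Error('no routes available');
  const score = (r: Route): number => {
    if (strategy === 'cheapest') return r.feeUsd;
    if (strategy === 'fastest') return r.durationSec;
    return r.feeUsd + (r.durationSec / 60) * usdPerMinute;
  };
  return routes.reduce((best, r) => (score(r) < score(best) ? r : best));
}
```

Putting latency and fees on one scale is a simple way to express "a route at the intersection"; the `usdPerMinute` rate would be a product decision rather than something the aggregator provides.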

The easiest way to evaluate Li.Fi is with [Jumper Exchange](https://jumper.exchange/), a frontend dApp integrating Li.Fi; I created a PoC to confirm that it is integrated with Li.Fi 1:1. If we bridge asset C from Arbitrum, we can get it on the destination in under 2 minutes for all EVM chains. For Solana, it takes 24 min. Fees for these fastest options depend on the amount (as they include some fixed costs), but they can be up to 0.3% if chain Y is Ethereum mainnet, BNB, Avalanche or Gnosis, up to 2% for Optimism, Polygon PoS or Base, and up to 5% for zkSync, Solana and Linea. However, changing chain X yields completely different results. E.g. if the source chain is Polygon PoS, the fastest routes to other EVM chains take 4-10 min with fees between 1% and 4%, and bridging to Solana takes 8 min with a similar fee. This underlines the importance of properly selecting the primary chain: on-chain liquidity and gas costs are not the only determining factors, the availability and performance of bridging routes matter as well.
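A simple fee model shows why the percentage depends on the amount. Assume, purely for illustration, that a route charges a fixed cost $F$ (e.g. gas on the destination chain) plus a proportional rate $r$ on the bridged amount $V$; these symbols are not taken from Li.Fi's fee schedule:

$$
\text{fee}(V) = \frac{F + rV}{V} = r + \frac{F}{V}
$$

With hypothetical values $F = \$2$ and $r = 0.1\%$, a \$100 transfer would pay 2.1% while a \$10,000 transfer would pay 0.12%, which is why small amounts land at the upper end of the fee ranges above.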

These results are still very superficial and can't be used to determine the optimal X-chain swap strategy. However, they give a rough picture of the landscape and show its general limitations: bridging between EVM chains can usually be done in under 2 min with a fee of up to 5% for the fastest routes, bridging between EVM chains and Solana is significantly slower, and routes to other non-EVM chains do not exist on Li.Fi. If we want to avoid these limitations, we can't rely on bridge liquidity alone; we need to engage additional capital.

## Gateway for Market makers

If the limitations of the bridge landscape are too restrictive (and for some routes, e.g. EVM chain -> Solana, they indeed are), we would need to raise liquidity that mimics what unofficial bridges do and offer it as an alternative route. This liquidity needs to be spread across all chains we support; it can be in one or more tokens, and we can provide it ourselves or engage market makers. By looking at alternatives, I deduced two approaches that could be used.

For simplicity and generality, let's assume we raise liquidity in only one token, asset C. Let's also call the liquidity provider "Filler" and our user "Swapper", terminology used by UniswapX. Also, let's recap the notation:

- asset A is the asset that the swapper holds and wants to swap. It's on the source chain only

- asset B is the asset that the swapper wants to get. It's on the destination chain only

- chain X is the source chain

- chain Y is the destination chain

- asset C is the intermediary asset that exists on both chains, X and Y. Swapping A->C needs to happen on the source chain and swapping C->B on the destination chain

As an example, let's say that the user holds the GNS token (existing only on Polygon PoS) and wants to swap it for the RDNT token (existing only on Arbitrum), with USDC as the intermediary asset: A = GNS, B = RDNT, C = USDC, X = Polygon PoS, Y = Arbitrum.

### Bridge-based solution

The first approach is to utilize existing bridges: use the cheapest bridge and have the Filler front-run the release of asset C on chain Y. The Filler would provide asset C (minus the filler's fee) to the swapper as soon as the bridging tx is confirmed on chain X, and would collect the bridged asset C when it eventually becomes available. We would deploy `Router` contracts to all chains we support to facilitate this process and make it as trustless as possible.

This is how it would look:

1. The swapper calls the `deposit` function of the `Router` and deposits asset A. The prerequisite is that the swapper has allowed the `Router` to spend asset A (via an approval or a permit). This function takes asset A from the swapper, swaps it to asset C using a DEX aggregator and sends C to the "C token bridge", which is the cheapest available bridge, regardless of how slow it is. If C is USDC, it would be Circle's CCTP bridge. The `Router` on the destination chain should be set as the recipient.

2. The filler listens to deposit events on the `Router` contracts on all chains, and as soon as it detects a tx emitting this event, it calls the `fill` function of the `Router` contract on the destination chain. This function takes the filler's C tokens, swaps C for B using a DEX aggregator, records how much C the filler provided and transfers the B tokens to the swapper. The swapper gets asset B on chain Y as soon as this tx is processed.

3. When the "C token bridge" makes the funds available on chain Y, the filler submits a `Withdraw` tx to the `Router`. This function withdraws asset C from the bridge, checks that the filler has the right to withdraw (gained in step 2) and transfers token C back to the filler.
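The three steps above can be sketched as an in-memory TypeScript model of the destination-chain `Router` accounting. This is a hypothetical sketch, not contract code: method names loosely mirror the `fill` and `Withdraw` steps, the DEX aggregator swap is an injected function, and the path that lets the swapper reclaim C when no filler responds is an assumption taken from the first situation discussed below.

```typescript
// Hypothetical model of the destination-chain Router in the bridge-based design.
// Amounts are bigint token units; chain calls and events are stubbed out.
interface PendingDeposit {
  swapper: string;
  amountC: bigint; // C sent through the slow "C token bridge" in step 1
  minAmountB: bigint; // swapper's slippage bound
  filler?: string; // set once a filler fronts liquidity in step 2
  settled: boolean; // true once the bridged C has been withdrawn
}

class BridgeRouterY {
  private deposits = new Map<string, PendingDeposit>();
  private bridgedC = new Map<string, bigint>(); // C released by the bridge, per deposit

  // Step 1 happens on chain X; the filler learns about it from the deposit event
  observeDeposit(id: string, swapper: string, amountC: bigint, minAmountB: bigint): void {
    this.deposits.set(id, { swapper, amountC, minAmountB, settled: false });
  }

  // Step 2: the filler fronts (amountC - feeC) of its own C, swapped to B for the swapper
  fill(id: string, filler: string, feeC: bigint, swapCtoB: (c: bigint) => bigint): bigint {
    const d = this.get(id);
    if (d.filler !== undefined || d.settled) throw new Error('deposit already handled');
    const amountB = swapCtoB(d.amountC - feeC);
    if (amountB < d.minAmountB) throw new Error('slippage bound not met');
    d.filler = filler; // records the filler's right to the bridged C
    return amountB; // B transferred to d.swapper
  }

  // The slow bridge eventually delivers the deposit's C to this Router
  onBridgeRelease(id: string, amountC: bigint): void {
    this.bridgedC.set(id, amountC);
  }

  // Step 3: the filler recovers the bridged C (fronted C plus fee);
  // if nobody filled, the swapper may reclaim it instead
  withdraw(id: string, caller: string): bigint {
    const d = this.get(id);
    const amount = this.bridgedC.get(id);
    if (amount === undefined) throw new Error('bridged funds not available yet');
    const entitled = d.filler ?? d.swapper;
    if (d.settled || caller !== entitled) throw new Error('caller not entitled');
    d.settled = true;
    return amount;
  }

  private get(id: string): PendingDeposit {
    const d = this.deposits.get(id);
    if (d === undefined) throw new Error('unknown deposit');
    return d;
  }
}
```

Note how the filler's position is the same C on chain Y before and after (plus the fee), which is the "no rebalancing" property listed below.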

This architecture is quite clean as:

- it doesn't require any off-chain communication between swapper and filler

- it uses existing bridge infrastructure making it easy to build

- filler doesn't need to rebalance inventory between chains: they provide C on chain Y and they get C on chain Y (+ fee)

- it's probably less capital-intensive than the intent-based solution

On the other hand, this architecture depends on the willingness and capabilities of the filler, and requires the filler to be a permissioned role (preferably managed by us directly). There are many situations we need to handle:

- what if the filler doesn't respond to the swapper's deposit tx? We could allow the swapper to withdraw asset C on chain Y when it becomes available, but the chance to obtain asset B in time within the set slippage would be missed

- what if the filler manipulates the DEX aggregator route on chain Y? The swapper has no guarantee on the amount of B received. We can solve this with a slippage limit set by the user.

- what if the swap can't be performed within the set slippage on chain Y? We would need to allow reverting the process: moving C back to chain X and swapping C -> A. This might incur losses for the swapper.

And finally, even though this design is easy to build, it's limiting as it enforces only one flow: it limits the number of fillers, it limits liquidity to asset C only, and it restricts how the filler performs swaps (it enforces the DEX aggregator we select).

### Intent-based solution

This architecture is heavily inspired by the UniswapX design and similar intent-based protocols. It introduces an Intent API as an off-chain service through which swapper and filler communicate. The swapper requests a quote via the API (RFQ) and various fillers can respond with quotes. The swapper selects one quote and builds an order based on it. The order consists of the amount of token A on chain X, the amount of token B on chain Y and a deadline for processing the order. The swapper signs this order and posts it to the Intent API. A filler takes this order and submits a tx on chain X which takes the swapper's token A and optionally swaps it for token C. Then, the filler submits a tx on the destination chain which swaps the filler's token C for B and releases B to the swapper. Finally, the filler can withdraw C (or A) on chain X. For this to work, we need to deploy a `Router` contract to every chain we support.
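The RFQ and order described here can be sketched as plain data plus two helpers: one picks the best live quote, the other turns it into an order with a slippage-adjusted minimum of B. Field names are hypothetical and not taken from UniswapX or any existing Intent API schema, and signing is left out of the sketch.

```typescript
// Hypothetical shapes; field names are illustrative only
interface Quote {
  filler: string;
  amountOutB: bigint; // amount of B on chain Y the filler offers
  expiresAt: number; // unix seconds
}

interface Order {
  swapper: string;
  chainX: string;
  tokenA: string;
  amountInA: bigint;
  chainY: string;
  tokenB: string;
  minAmountOutB: bigint;
  deadline: number; // unix seconds
}

// RFQ: pick the live quote that offers the most B
function bestQuote(quotes: Quote[], now: number): Quote {
  const live = quotes.filter((q) => q.expiresAt > now);
  if (live.length === 0) throw new Error('no live quotes');
  return live.reduce((best, q) => (q.amountOutB > best.amountOutB ? q : best));
}

// Order: accept up to `slippageBps` basis points less B than quoted
function buildOrder(base: Omit<Order, 'minAmountOutB'>, quote: Quote, slippageBps: bigint): Order {
  if (slippageBps < 0n || slippageBps > 10000n) throw new Error('slippage out of range');
  const minAmountOutB = (quote.amountOutB * (10000n - slippageBps)) / 10000n;
  return { ...base, minAmountOutB };
}
```

Integer division rounds the minimum down, which slightly favours the filler; that is usually acceptable for a bound expressed in the smallest token units.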

This is how it would work:

1. The swapper sends an RFQ via the Intent API. Various fillers provide quotes as amounts of B on chain Y. Fillers can continuously update the tokens and amounts they are willing to work with, effectively building an orderbook

2. We take the best quote on behalf of the swapper, construct the order and sign it. The order needs to set a deadline and the minimum amount of B that's expected. The signed order is posted to the Intent API

3. The swapper needs to approve the `Router` contract to spend token A. For tokens that support permits, this step can be avoided, so the swapper effectively doesn't submit any txs

4. The filler deploys their own `Executor` contracts on every chain they want to work with. These contracts implement the filler's own logic for (1) providing C to the `Router` by taking A from it on chain X and (2) providing B to the `Router`. There could be numerous ways, such as the filler holding reserves of A, B and C tokens, but this example shows the most straightforward solution: the `Executor` contract swaps A->C on chain X and C->B on chain Y, meaning that the filler only needs to keep inventory in token C on all chains. This step starts with the filler reading the signed order from the Intent API and submitting an `execute` tx to its own `Executor` contract. This function calls the `execute` function on the `Router`, which takes token A from the swapper and transfers it to the `Executor`. The `Executor`'s custom logic then runs, which includes swapping asset A for asset C, and asset C is deposited back to the `Router`.

5. The filler calls the `finalizeExecution` function on the `Executor` contract on chain Y. The contract can either hold asset C or take it from the filler. This function swaps C for B and transfers asset B to the `Router`. The `Router` contract uses LayerZero (or any other cross-chain messaging protocol) to send a message about this tx to the `Router` on chain X and then transfers asset B to the swapper.

6. Finally, when the message is received on chain X, the `Router` transfers asset C to the filler's `Executor`.
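Steps 4 to 6 can be sketched as two in-memory `Router` models connected by a message callback. This is a hypothetical illustration, not contract code: the cross-chain message (LayerZero in the design above) is reduced to a function call, and the `Executor` logic is passed in as a swap function.

```typescript
// Hypothetical model of steps 4-6; amounts are bigint token units
interface SignedOrder {
  id: string;
  amountInA: bigint;
  minAmountOutB: bigint;
  deadline: number; // unix seconds
}

// Router on the source chain X
class SourceRouter {
  readonly escrowC = new Map<string, bigint>(); // C deposited back by the Executor in step 4

  // Step 4: take A from the swapper, let the Executor turn it into C, keep that C in escrow
  execute(order: SignedOrder, now: number, executorSwapAtoC: (a: bigint) => bigint): void {
    if (now > order.deadline) throw new Error('order expired');
    if (this.escrowC.has(order.id)) throw new Error('order already executed');
    this.escrowC.set(order.id, executorSwapAtoC(order.amountInA));
  }

  // Step 6: on the cross-chain message from chain Y, release escrowed C to the Executor
  onMessage(orderId: string): bigint {
    const amountC = this.escrowC.get(orderId);
    if (amountC === undefined) throw new Error('unknown or already settled order');
    this.escrowC.delete(orderId);
    return amountC;
  }
}

// Router on the destination chain Y
class DestinationRouter {
  private readonly filled = new Set<string>();

  // Step 5: accept B from the Executor, enforce the signed terms, notify chain X
  finalizeExecution(
    order: SignedOrder,
    now: number,
    amountB: bigint,
    sendMessage: (orderId: string) => void,
  ): bigint {
    if (now > order.deadline) throw new Error('order expired');
    if (amountB < order.minAmountOutB) throw new Error('less B than the order allows');
    if (this.filled.has(order.id)) throw new Error('order already filled');
    this.filled.add(order.id);
    sendMessage(order.id); // stands in for the cross-chain message to chain X
    return amountB; // B transferred to the swapper
  }
}
```

The filler only gets its C back after chain Y has confirmed the fill, which is why, unlike the bridge-based design, it ends up rebalancing C from chain Y to chain X.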

This solution offers quite a few advantages:

- it allows anybody to become a filler, so we could onboard any number of market makers. But could we? This solution would be technically feasible, but the question is whether we would really find enough market makers willing to trade against the swappers

- it gives fillers the flexibility to perform the trade however they want, as long as they take A on chain X and give B on chain Y

- it solves the price unpredictability issues of the bridge-based solution

- theoretically, it could help swappers get better prices than DEX aggregator -> bridge -> DEX aggregator

- with an off-chain Intent API, MMs could even use CEX liquidity by connecting CEX APIs to the Intent API

- there is no dependence on any bridge, though cross-chain messaging still needs to be in place

However, it has many downsides:

- it's technically more complex as it requires building and maintaining off-chain services

- it introduces centralization and a single point of failure (Intent API)

- it requires high involvement from the fillers. They need to actively provide quotes, maintain their own off-chain infrastructure and deploy `Executor` contracts on every chain (though we can provide a template, or take some MM liquidity and be one of the fillers)

- fillers need to continuously rebalance their liquidity across all chains. A filler provides asset C on chain Y and gets it back on chain X, unlike the bridge-based solution where they keep the same portfolio on all chains the whole time

- it's questionable how much interest from MMs this product can attract and how competitive fees can be. Similar products on the market haven't attracted much interest

- it could prove hard to ensure that all quotes provided by fillers are real and that fillers would actually fill orders created based on their quotes

- the Dutch auction proposed by UniswapX seems to incentivize fillers to add latency, especially in a low-competition environment
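The latency concern can be illustrated with a simplified Dutch order whose required output decays linearly over time, similar in spirit to UniswapX's Dutch orders. Field names and numbers here are hypothetical. Because the amount of B a filler must deliver only falls, a filler without competitors earns more by waiting.

```typescript
// Simplified Dutch order with linear decay; field names are hypothetical
interface DutchOrder {
  startAmountB: bigint; // B the swapper asks for when decay starts
  endAmountB: bigint; // lowest B the swapper accepts once decay ends
  decayStart: number; // integer unix seconds
  decayEnd: number; // integer unix seconds
}

// Amount of B a filler must deliver at time `now`
function requiredOutput(order: DutchOrder, now: number): bigint {
  if (now <= order.decayStart) return order.startAmountB;
  if (now >= order.decayEnd) return order.endAmountB;
  const elapsed = BigInt(now - order.decayStart);
  const duration = BigInt(order.decayEnd - order.decayStart);
  return order.startAmountB - ((order.startAmountB - order.endAmountB) * elapsed) / duration;
}

// A filler that can source `obtainableB` for the swapper's A keeps the difference
function fillerSurplus(order: DutchOrder, now: number, obtainableB: bigint): bigint {
  return obtainableB - requiredOutput(order, now);
}
```

For an order decaying from 1000 to 950 B over 100 seconds, a filler able to deliver 990 B is 10 B short at the start, 15 B ahead halfway through and 40 B ahead at the end. Unless a competitor fills earlier, waiting pays off, and the swapper receives less B later.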