---

outline: deep

---

# OSS Commercialization

## 1. Overview

In the Commercialization chapter of the Open-source Annual Report for the past two years, the underlying drivers of successful commercialization of open-source software, possible commercialization paths for open-source software companies, decision making criteria of investors in open-source projects, and case studies are presented. Last year, combined with some trends and changes in the market environment at that time, we discussed the drivers, challenges and realization paths for domestic open-source projects to explore the process of globalizationand commercial development, which triggered a lively discussion among many open-source buddies.

In 2022-2023, the field of AI has seen an explosion of pre-trained large language model (LLM) technology, which has sparked widespread interest across society and is predicted to continue to deepen its impact on life and work in the future. It is not difficult to find that in this wave of AI technology iteration, the open-source ecosystem has also played a essential role in promoting the development of technology, and there are many open-source models as well as open-source projects actively seeking commercialization. However, there are numerous differences between open-source models and traditional open-source software. In such an era, the commercial development of AI open-source projects and open-source models has become a topic worthy of in-depth research and discussion.

The security and controllability of open-source projects, including open-source software and open-source models, is one of the key considerations for business users in the commercialization process. Combined with the current technology trends, the analysis of the security of open-source software, the controllability of open-source models, and open-source commercial licenses are topics of interest.

Capital is an important participant in promoting the development of open-source markets. For investment institutions, when judging an open-source project, they will often consider the following points:In the product development stage, the focus should be on whether the team has the ownership and control of the code, and whether it has international competitiveness; in the community operation stage, the main point is to see whether the operating ability is strong enough; in the commercialization stage, the market matching ability and the maturity of the business model will become the main focus.

As the first organization in the field to focus on open-source and continue to work on it, Yunqi Partners has successfully identified and invested in open-source companies such as PingCAP, Zilliz, Jina AI, RisingWave Lab, TabbyML, etc., and continues to participate in the construction of the open-source ecosystem.

In order to further enrich the content of the report, this year we are honored to jointly organize a series of closed-door discussion Meetup with Open-source Society. We had a deep discussion on the development of open-source commercialization related to the development of AI Infrastructure, the development of open-source LLMs, together with industry guests including Microsoft, Google, Apple, Meta, Huawei, Baidu and other domestic and international manufacturers, Stanford University, Shanghai Jiao Tong University, China University of Science and Technology, UCSD and other universities and research institutes, as well as a large number of domestic and international front-line entrepreneurs open-source open-source LLMSome of the key insightsare included in this report.

This chapter is written by the investment team of Yunqi Partners, the topics discussed this year focusing oncutting-edge trends and technology, together with some outlookand prediction.We combined industry participants experience and opinions to put forward our views, if there are inconsiderate or different ideas, further discussion is highly welcomed.

Key elements include:

**Open source ecosystem for rapid AI growth**

**Open source security challenges**

**Capital market situation for open source projects**

## 2. Open source ecosystem fuels rapid AI development

### 2.1 The proliferation of pre-trained LLM is strongly driven by open source

#### 2.1.1 Rapid development of pre-trained LLMs

The development of pre-trained LLM has been groundbreaking over the past few years, and they have become a major landmark in the field of AI. These models, not only are growing in scale, but also have made huge leaps in their intelligent processing capabilities. From the complexity of the language processing to the finesse of image processing, and the depth of advanced data analysis, these models demonstrate unprecedented capability and precision. Especially in the field of Natural Language Processing (NLP), pre-trained LLM, such as the GPT series, have been able to simulate complex human languages by learning a large amount of textual data for high-quality text generation, translation and comprehension. These models not only show significant improvements in expression fluency, but also show an increasing ability to understand context and capture subtle linguistic differences.

In addition, these LLMs perform extremely well in complex data analysis. They are capable of extracting meaningful patterns and correlations from huge data sets to support a wide range of fields such as scientific research, financial analysis, and market forecasting. It is worth noting that the development of these models is not limited to their own enhancements. As they are popularized and applied, they are driving technological advances across the industry and society as a whole, facilitating the creation of new applications such as intelligent assistants, automated writing tools, advanced diagnostic systems, etc. Their development opens up more new development directions for incoming AI applications and research, indicating a new round of technological innovation.

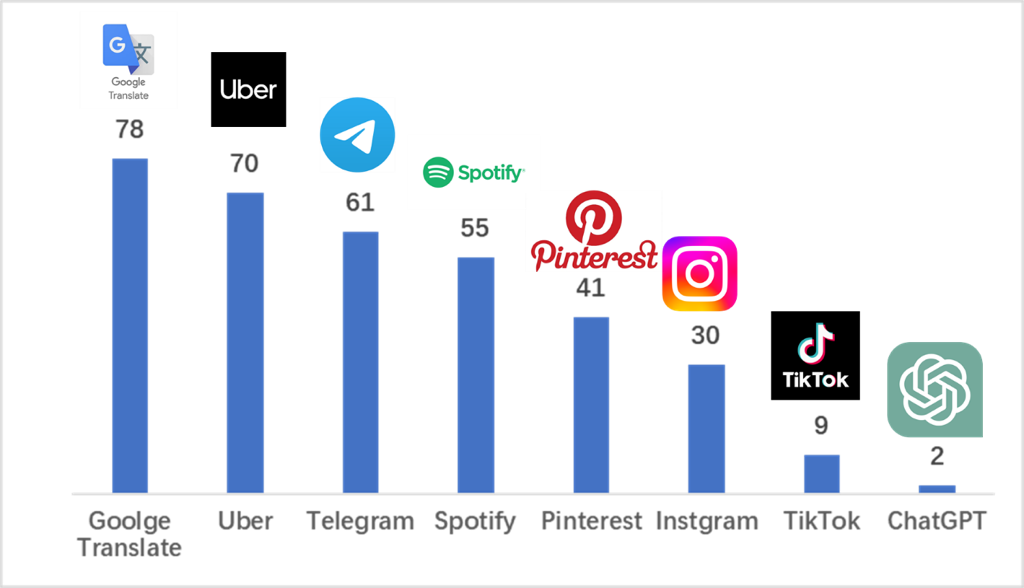

Enthusiasm for AI among public users is surging rapidly. Number of ChatGPT users reached 100 million in just 2 months, compared to TikTok's 9-month-record. This is not only a huge commercial success, but also a major milestone in the history of AI technology development.

<div style="margin-left: auto; margin-right: auto; width: 75%">

|  |

| -------------------------------------------------------- |

</div>

<center> Figure 2.1 Time to reach 100 million users for major apps (in months)</center>

<br>

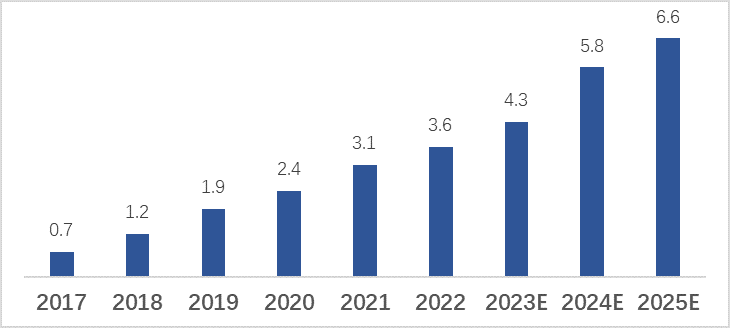

Along with the growing AI popularity, the global AI market size is also growing rapidly. According to Deloitte, it will grow at a CAGR of 23% during 2017-2022, and is expected to reach seven trillion dollars in 2025.

<div style="margin-left: auto; margin-right: auto; width: 75%">

|  |

| -------------------------------------------------------- |

</div>

<center> Figure 2.2 Global AI Market Size (Trillions of Dollars)</center>

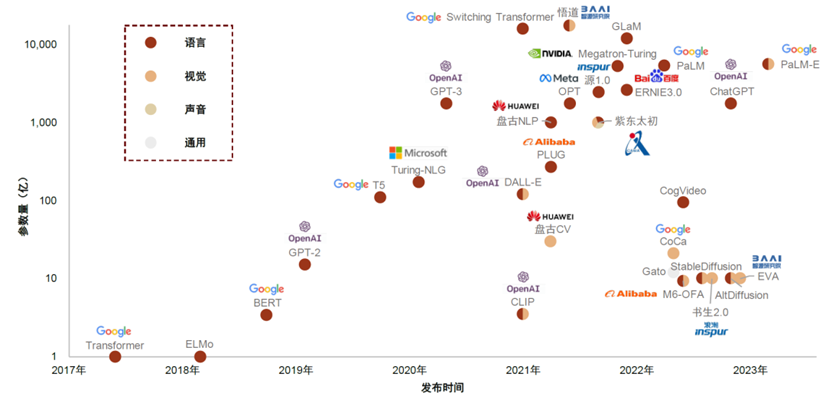

#### 2.1.2 Open Source Power for AI

The power of the open-source ecosystem has played an essential role in making such great strides in pre-trained models. This includes not only research from academia but also support from industry. Under the joint efforts of the open-source ecosystem, the performance of the open-source-based LLM is rapidly developing and gradually rivaling that of closed-source.

**The power of open source from academia has contributed significantly to the evolution of AI technology**

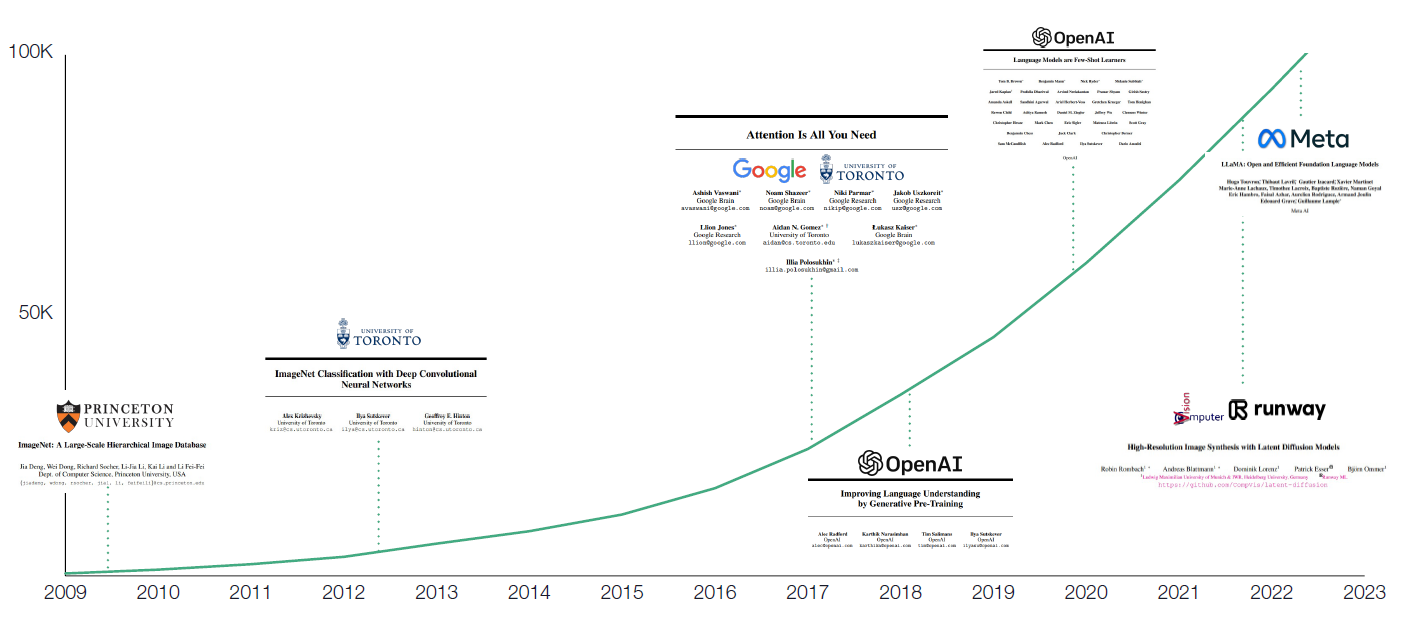

Since Princeton University published ImageNET in 2009, a significant paper in computer vision, there has been a gradual increase in the number of papers related to AI machine learning. Over the years, researchers have proposed many open-source algorithms. By 2017, the number of papers on AI machine learning on Arxiv had reached over 25,000. The "Attention Is All You Need" paper was published that same year, introducing the open-source Transformer model. The publication of this paper led to a concentrated surge in research and papers on LLM. As a result, from 2017 to 2023, the number of Arxiv papers related to LLM surged to over 100,000. This surge also considerably accelerated the open-source development of related models and laid the theoretical foundation for the subsequent explosion of LLM technology.

<div style="margin-left: auto; margin-right: auto; width: 100%">

|  |

| -------------------------------------------------------- |

</div>

<center> Figure 2.3 Cumulative number of AI / Machine Learning related papers published on Arxiv</center>

<br>

:::info Expert Review

**Willem Ning JIANG**:This insight is quite exciting, and academic open-source plays a very important role.

:::

**The industry's open source power fuels rapid development of LLM**

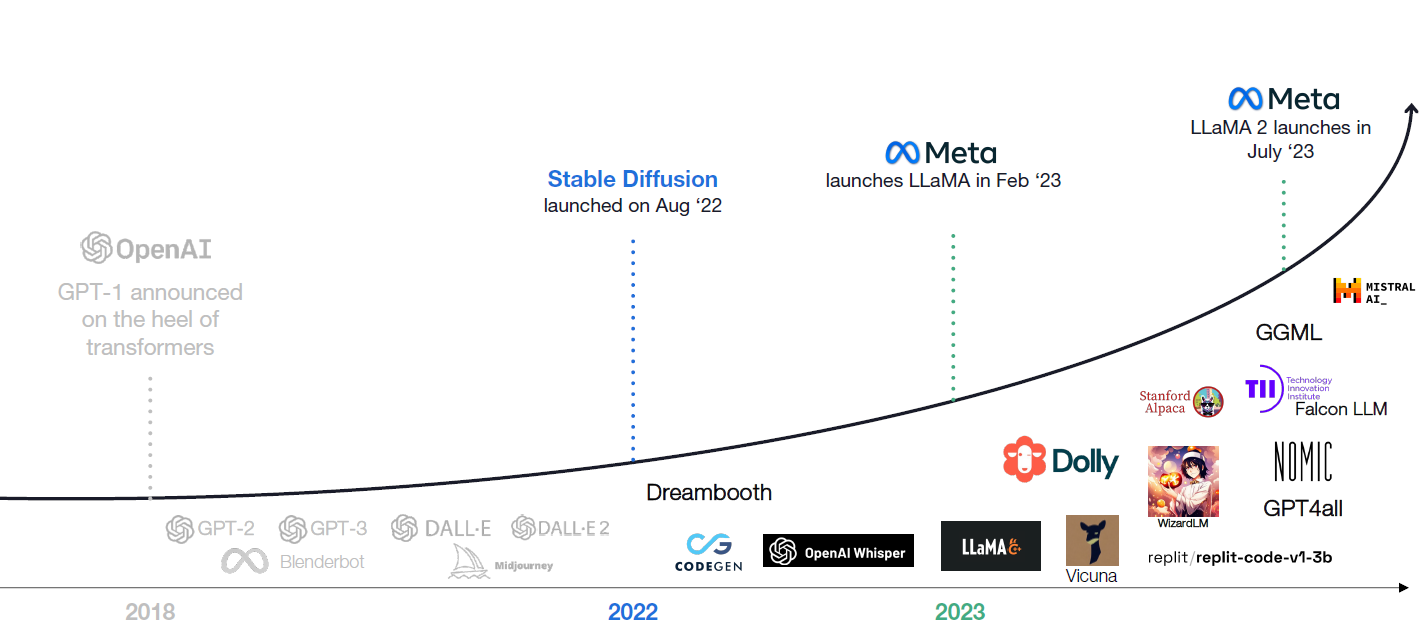

With the ChatGPT LLM popularity, more and more technicians are devoted to the research and development of LLMs. In addition to closed-source products, many great open-source LLMs are also leading the industry. Stable Diffusion in 2022, with its powerful graphical capabilities and community strength, quickly caught up with Midjourney, a famous closed-source graphical model, and has already taken the lead in some aspects; the robust capabilities of open-source large language models, represented by Meta LLaMA 2, have made Google researchers reflect that "we don't have a moat, and neither does OpenAI"; and there are also emerging open-source leaders in various fields, such as Dolly, Falcon, etc. With its powerful community resources and cheaper cost of use, Open-source LLM quickly gained many business and individual users, acting as an indispensable force in the development of LLM.

<div style="margin-left: auto; margin-right: auto; width: 100%">

|  |

| -------------------------------------------------------- |

</div>

<center> Figure 2.4 Emerging Open Source LLMs </center>

<br>

**Performance of open-source LLMs is rapidly catching up with closed-source**

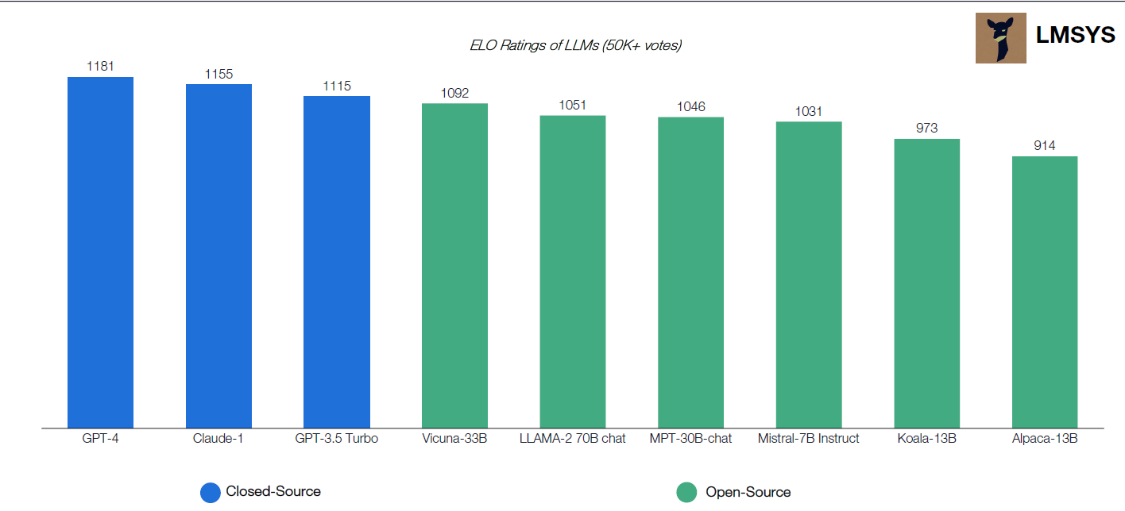

Closed-source LLM represented by OpenAI ChatGPT4 started earlier, and the number of parameters and various performance metrics showed a tendency to outperform open-source models in the early stage. However, open-source models have a strong community and technical support, resulting in rapid performance growth. The most mature version of ChatGPT4 scored 1,181, while Llama 2, an open-source LLM launched less than four months ago, scored 1,051, with a difference of only 11%. It's worth noting that the rankings 4-9 are all open-source LLMs, indicating that the growth in open-source LLM performance is not an isolated case but an industry trend. Open-source LLMs are highly cost-effective due to their low usage costs and smaller performance gap compared to closed-source LLMs, which makes them attractive to increasing numbers of business and individual users. Please see the more detailed discussion of costs later.

Benefiting from the open nature of open-source models, users can easily fine-tune LLMs to fit different vertical application scenarios. Fine-tuned LLMs are more industry-specific than general-purpose LLMs, which is an advantage that closed-source models cannot provide.

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| -------------------------------------------------------- |

</div>

<center>

Figure 2.5 [ELO ratings](https://en.wikipedia.org/wiki/Elo_rating_system) of LLMs based on user feedback

</center>

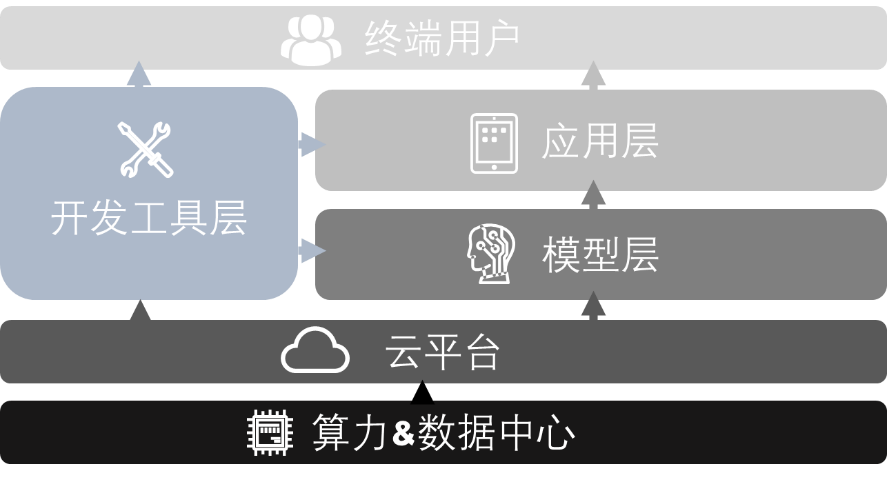

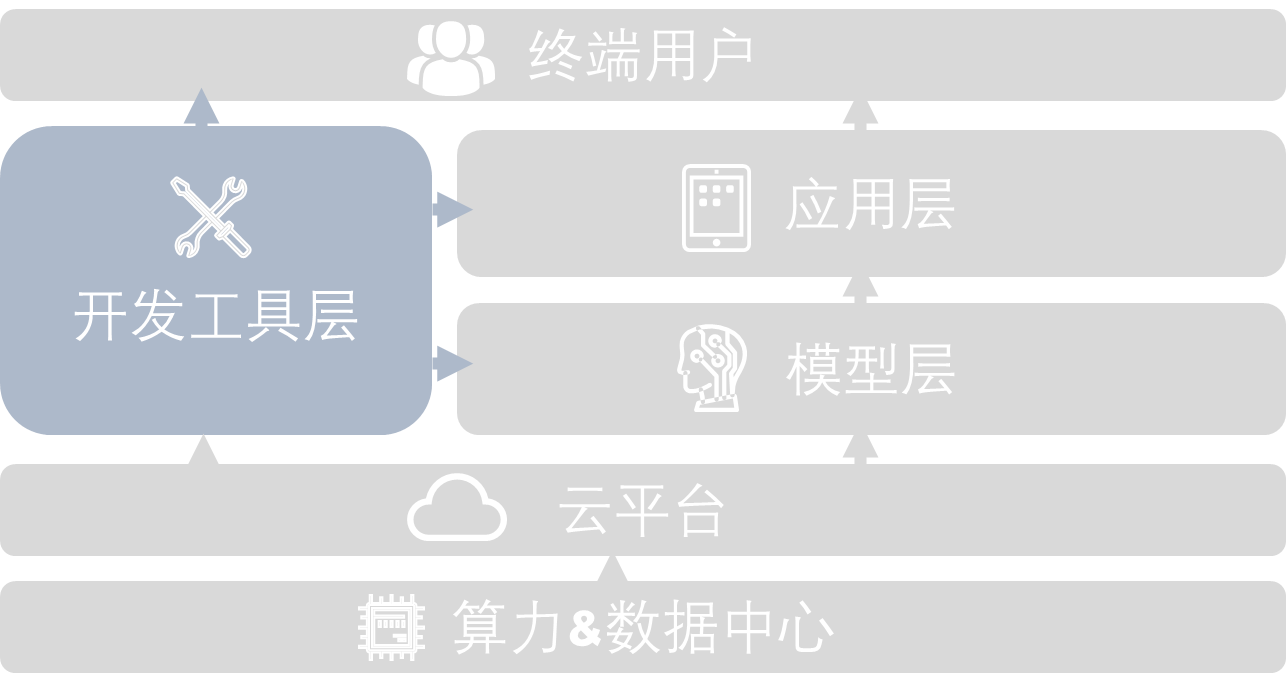

#### 2.1.3 The three layers of the LLM

The technical architecture of the LLM is divided into three main layers, as shown in the figure below. Open source has made significant contributions to the model layer, the developer tools layer, and the application layer. Each layer has its unique function and importance, and together, they form the complete architecture of the large-scale model technology. The subsequent sections (2.2, 2.3, 2.4) will discuss each layer in detail.

<div style="margin-left: auto; margin-right: auto; width: 80%">

|  |

| -------------------------------------------------------- |

</div>

<center> Figure 2.6 Technical Layers of the LLM </center>

<br>

- **Model layer**

The model layer is the foundation of the entire architecture, including the core algorithms and computational frameworks that make up the LLM, typical models such as GPT and Diffusion are the core of generative AI. This layer involves model training, including pre-processing of large amounts of data, feature extraction, model optimization and parameter tuning. The key to the model layer is efficient algorithm design and large-scale data processing capabilities.

- **Development tools layer**

The development tools layer provides the necessary tools and platforms to support the development and deployment of LLM, including various machine learning frameworks (e.g., TensorFlow, PyTorch) and APIs that simplify the process of building, training, and testing models. The development tools layer may also include cloud services and computing resources that support model training and deployment. In addition, this layer is responsible for version control, testing, maintenance, and updating of the model.

- **Application layer**

The application layer mainly considers how to access the LLM capabilities in real applications. This layer integrates models into specific business scenarios, such as intelligent assistants, automated customer service, personalized recommendation systems, etc. The key to the application layer is translating complex models into user-friendly, efficient, and valuable applications while ensuring good performance and scalability.

These three layers are interdependent and constitute the complete architecture of the LLM technology; from the basic construction of the model to the realization of specific applications, each layer plays an important role. The corresponding open-source content for each of the three layers is discussed in detail next.

### 2.2 Open source is the second driving force fuelling the development of foundation models

#### 2.2.1 Supply side:Concentrate on R&D

**Saving the number of developers and centralizing R&D capabilities**

The development of AI models requires technical expertise, and there is a shortage of related talent in China. Open-source technology can promote the development of advanced AI functionality and alleviate pressure on SMEs. Open-source Language Models lower the entry barrier and save development time, enabling more researchers to access advanced AI technologies directly.

Based on efficient pre-trained models, developers can directly innovate and improve in a targeted way rather than being distracted from building the infrastructure. This concentration on innovation rather than infrastructure has greatly contributed to rapid technological advances and the expansion of applications. At the same time, sharing open-source models facilitates the dissemination of knowledge and technology, providing a platform for developers worldwide to learn and collaborate, which plays a crucial role in driving overall progress across the industry.

**Saving computational power and avoiding reinventing the wheels**

As the performance of the LLM continues to grow, so does its number of parameters, which has jumped 1,000 times in the past five years. According to estimates, ChatGPT chip demand reaches more than 30,000 NVIDIA A100 GPUs, corresponding to an initial investment of about 800 million U.S. dollars, with daily electricity costs of $50,000. The computational requirements for training are becoming more and more costly, so reinventing wheels over and over again is a massive waste of resources. Coupled with the U.S. ban on NVIDIA's A100/H100 supply to mainland China, it's becoming increasingly difficult for domestic companies to train on LLMs. The open-source pre-trained LLM has become a perfect choice, which can solve the current dilemma so that more companies can leverage LLMs for secondary development.

Four steps are required for LLM training:pre-training, supervised fine-tuning, reward modeling, and reinforcement learning. The computational time for pre-training occupies more than 99% of the entire training cycle. Thus, the open-source model can help developers of LLM platforms directly skip the steps, with 99% of the cost of investing limited funds and time in fine-tuning steps, which is a significant help to most application layer developers. Many SMEs need model service providers to customize models for them. The open-source ecosystem can save a lot of costs for the secondary development of LLMs and thus can give birth to many startups.

<div style="margin-left: auto; margin-right: auto; width: 100%">

|  |

| -------------------------------------------------------- |

</div>

<center> Figure 2.7 Increasing number of large model parameters </center>

<br>

**Open source allows for exploration of a wider range of technological possibilities**

Whether the world-shattering Transformer model is the optimal solution is still unanswered, and whether the next best thing is an RNN (Recurrent Neural Network) is still in question. However, due to the open-source ecology, developers can try on different branches of the AI family cohesive with various new development forces, ensuring the diversity of technological development. Therefore, the human exploration of the LLM will be unrestricted to the local optimal solution and will promote the possibility of continuous development of AI technology in all directions.

#### 2.2.2 Demand side: lowering the barriers to capture the market

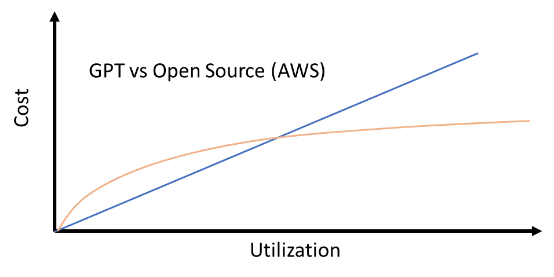

**Open source models significantly reduce costs for model users**

Deploying an open-source model initially requires some investment, but as usage increases, it exhibits scale economy, and the cost of usage is more controllable compared to closed-source. If you have a usage scenario where the average daily request frequency has an upper limit, then directly invoking the API is less expensive. However, if you have a higher request frequency, deploying the open-source model is less costly, so you should choose the appropriate method based on your actual usage. <br>

<div style="margin-left: auto; margin-right: auto; width: 75%">

|  |

| -------------------------------------------------------- |

</div>

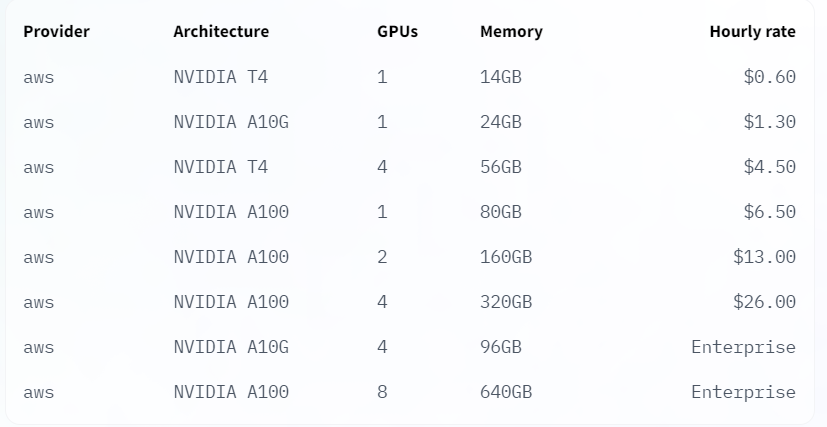

<center> Figure 2.8 Cost Comparison of Calling OpenAI APIs and Deploying Open Source Models on the AWS Cloud </center>

<br>

Comparison of directly calling OpenAI's API and deploying Flan UL2 model on public cloud as an example:

According to the latest data from OpenAI's official website, using ChatGPT4 model, the input is $0.03 / 1000 tokens and the output is $0.06 / 1000 tokens. Considering the relationship between input and output and assuming an average cost of $0.04 / 1000 tokens, each token is about 3/4 of an English word, and the number of tokens in a request is equal to the prompt word + the output tokens. Assuming a block of text is 500 words, or about 670 tokens, the cost of a block of text is 670 x 0.004/1000 = $0.00268.

Suppose the open-source model is deployed on the AWS cloud, taking the Flan UL2 model with 20 billion parameters, as mentioned in the related tutorial published by AWS, as an example. In that case, the cost consists of three parts:

- Fixed cost of deploying models as endpoints using AWS SageMaker is about $5-6 per hour or $150 per day

- Connecting SageMaker endpoints to AWS Lambda: Assume responses are returned to users in 5s, using 128MB of memory. The price per request is: 5000 x 0.0000000021 (unit price per millisecond for 128MB) = $0.00001

- Open this Lambda function as an API via API Gateway. The Gateway costs about $1 / 1 million requests or $0.000001 per request.

Based on the above data, it can be calculated that the total cost of the two is equal when the number of requests is 56,200 in a day. When the number of requests reaches 100,000 per day, the cost of using ChatGPT4 is about $268, while the cost of the open-source big model is $151; when the number of requests reaches 1,000,000 per day, the cost of using ChatGPT4 is about $2,680, while the cost of the open-source big model is $161. It can be found that the cost savings of the open-source big model are significant as the request volume increases.

**Open source improves the explanability and transparency of models and lowers the barrier to technology adoption**

Open-source models are more accessible for evaluation than closed models. Open-source models provide access to their pre-training results, and some even disclose their training datasets, model architectures, and more, making it easier for researchers and users to conduct in-depth analyses of the LLMs and comprehend their strengths and weaknesses. Scientists and developers worldwide can review, evaluate, explore, and understand each other's underlying principles, improving security, reliability, explanability, and trust. Sharing knowledge widely is crucial to promote technological progress and also helps to reduce the possibility of technology misuse. Closed-source models can only be evaluated through performance tests, essentially a "black box." It is hard to measure the strengths and weaknesses, applicability scenarios, and other factors of the closed-source models, and their explanability and transparency are considerably lower than that of open-source models.

Closed-source models can face the risk of being questioned for their originality. Users cannot be sure that closed-source models are genuinely original, leading to concerns about copyright and sustainability issues. On the other hand, open-source models are more convincing to users because the code is available to validate their originality. According to Hugging Face technician comments, open-source models like Llama2, which have published details of training data, methods, labeling, and so on, are more transparent than the black box of closed-source LLMs. With transparency in the articles and the code, users know what's in there when they use it.

Higher explainability and transparency are conducive to enhancing users' trust, especially business users, in the LLMs.

**Business users can realize specific needs with open source base models**

Business users have multiple types of specific needs, such as:industry-specific fine-tuning, local deployment to ensure privacy, and so on.

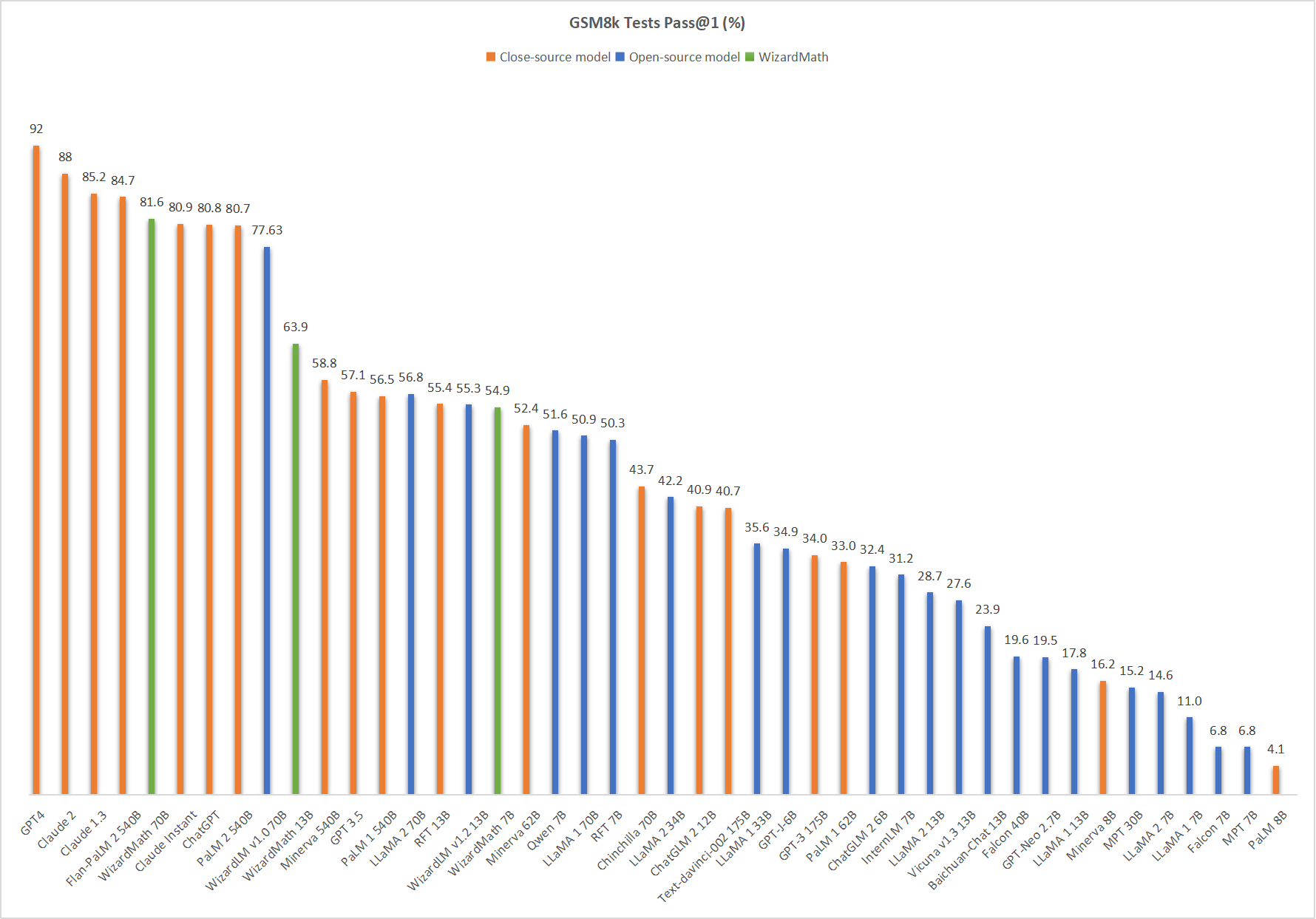

As the amount of LLM parameters continues to increase, the training cost continues to rise. There are better solutions than simply growing the LLM parameters to improve performance. On the contrary, fine-tuning for a specific problem can quickly improve the performance of LLM targeting to achieve better results with less effort. For example, WizardMath, an open-source LLM of mathematics fine-tuned by Microsoft based on LLaMA2, has only 70 billion parameters, but after testing on the GSM8k dataset, the mathematical ability of WizardMath directly beats that of several LLMs such as ChatGPT, Claude Instant 1, PaLM 2-540B, and so on, which fully shows the critical role of fine-tuning in improving the professional problem-solving ability of LLMs, which is also a significant advantage of the open-source LLM. <br>

<div style="margin-left: auto; margin-right: auto; width: 100%">

|  |

| -------------------------------------------------------- |

</div>

<center> Figure 2.9 Performance Ranking of WizardMath </center>

<br>

Many business users have incredibly high data privacy requirements, and the ability to deploy open-source LLMs locally greatly protects business privacy. When clients call closed-source LLMs, the closed-source models always run on the servers of companies such as OpenAI. Clients can only send their data remotely to the servers of the LLM providers, which is very unfavorable to privacy protection. Enterprises in China also face related compliance issues. While the open-source LLM can be locally deployed, all the data is processed within the company owning the data, even allowing offline processing, significantly protecting the clients' data security.

**Open source model facilitates long-lasting customer experience**

FCreating a reliable dataset for enterprises is crucial to keeping up with the constant changes in open-source models. Open-source models can be customized to fit an enterprise's specific needs, but this requires a high-quality dataset. By investing in a dataset, enterprises can fine-tune multiple models and avoid constantly replacing them with newer versions, which saves money in the long run, as the dataset does not need to be updated continuously. Enterprises can leverage the model's capabilities without incurring significant costs.

Open-source models are updated quickly to meet the changing needs of users. The power of R&D in the open-source community quickly fills the shortcomings of open-source LLMs. LLaMA2 itself lacks a Chinese corpus, leading to unsatisfactory Chinese comprehension; however, only the day after LLaMA2 was made available, the first open-source Chinese LLaMA2 model, "Chinese LLaMA27B", appeared in the community and could be downloaded and run. Adequate community power support can meet the different needs of users. In contrast, closed-source companies usually need help to take care of the distinct needs of various types of users comprehensively.

**Open source helps to capture market opportunities**

Open-source models are more accessible to users and can expand the market quickly due to their low barrier to entry. Stable Diffusion, an open-source image generation model, has become an essential competitor to MidJourney, a closed-source model, because of its large developer community and diverse application scenarios. Although not as good as MidJourney in some ways, Stable Diffusion has captured a significant share of the image generation market with its open-source and free features, making it one of the most popular image generation models. Its success has also brought widespread attention and investment to the companies after it, RunwayML and Stability AI.

#### 2.2.3 Ecological Side:Converging Diversity for Long-Term Growth

**Open source facilitates large model companies to quickly seize ecological resources**

The low threshold and easy accessibility of open-source models will also help the models quickly capture relevant ecological resources. StableDiffusion is an open-source project that has received positive responses and support from many freelance developers worldwide. Many enthusiastic programmers were actively involved in building the easy-to-use graphical user interface (GUI). Many LoRA modules have been developed to provide Stable Diffusion with features such as accelerated generating, more vivid images, etc. According to the official website of Stable Diffusion, one month after the release of Stable Diffusion 2.0, four of the top ten apps in the Apple App Store are AI painting apps based on Stable Diffusion. A thriving ecosystem has become the solid foundation of Stable Diffusion.

At the time of the original release of the open-source LLM LlaMA2, there were 5,600 projects on GitHub containing the "LLaMA" keyword and 4,100 projects containing the "GPT4" keyword. After two weeks, the LLaMA-related ecosystem has grown significantly, with 6,200 related projects compared to 4,400 "GPT4"-related projects. For LLM companies, ecosystem means markets, technological power, and inexhaustible driver for growth. With lower barriers, open source can grab ecological resources faster than closed-source models. Therefore, open-source LLM companies should seize this advantage, strengthen communication with community developers, and provide them with sufficient support to promote the rapid development of relevant ecosystems.

**Open source facilitates large model vendors to pry the market and gain business alliances**

After LLaMA2 was commercially open-sourced, Meta quickly cooperated with Microsoft and Qualcomm. As the major shareholder of OpenAI, Microsoft chose to collaborate with open-source vendor Meta, which means that open-source has become a force to be reckoned with. For future collaboration, Meta stated that users of Microsoft Azure cloud service will be able to fine-tune the deployment of Llama2 directly on the cloud. Microsoft disclosed that Llama2 has been optimized for Windows and can run directly on Windows locally.

The collaboration between the two companies highlights that open-source LLMs and cloud vendors have a natural cooperation foundation. Not coincidentally, there is a similar trend in domestic open-source LLM vendors: Baidu's ERNIE and Ali's Qwen are both open-source LLMs. Although users usually do not pay for using open-source LLMs, they need to pay for the computational power using Baidu Cloud and Ali Cloud as computational platforms.

Meta's partnership with Qualcomm also signals its expansion into the mobile sector. Due to its broad audience, open-source LLMs can be deployed locally. With other advantages, mobile phones have become the future of convenient use of LLMs of a vital carrier. This also attracts mobile phone chip manufacturers to collaborate with open-source model vendors.

In summary, the open-source LLM, with its broad reach, facilitates the company behind it to find partners and pry into the market.

**Open source can mobilize a wide range of communities and bring together diverse development forces**

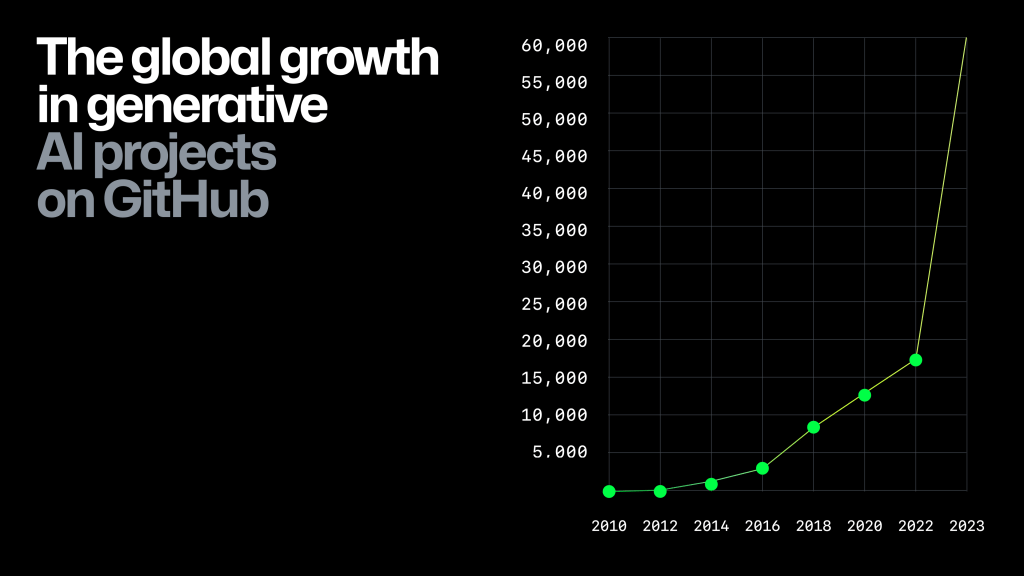

The power of the community has always been an essential strength of open source. As shown in the figure below, the generative AI projects on GitHub have realized rapid growth in 2022, soaring from 17,000 to 60,000. The rapidly growing community can not only quickly provide a large amount of technical feedback for open-source LLM developers but also fully enhance the end reach of open-source LLMs and fine-tune the application of open-source models to various vertical domains to bring more users to the LLMs.

<div style="margin-left: auto; margin-right: auto; width: 80%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.10 Changes in the number of generative AI-related projects open-sourced on GitHub (Source: GitHub)</center>

<br>

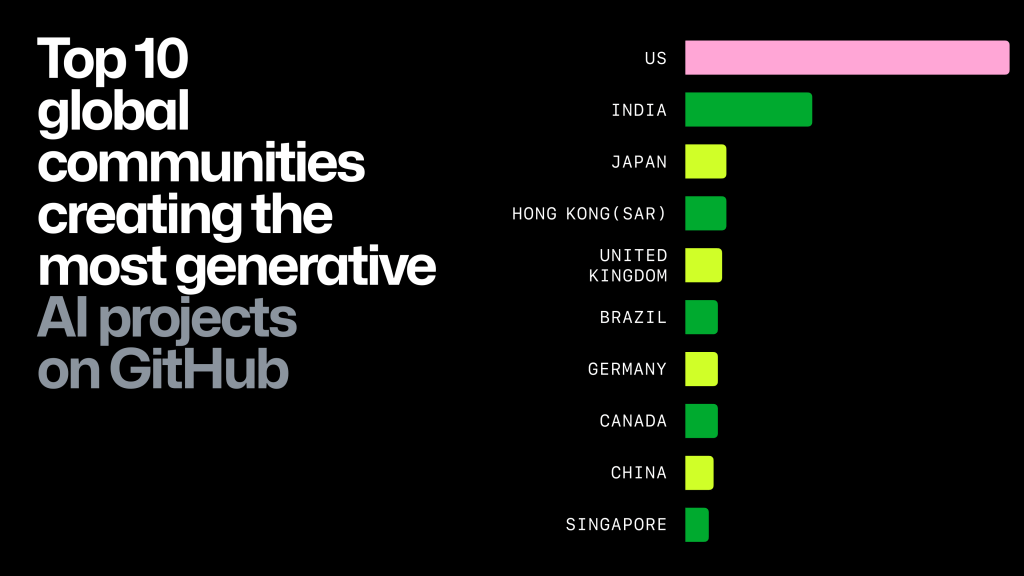

Open-source language models (LLMs) are built with contributions from developers worldwide from different cultures, regions, and technical backgrounds. This is in contrast to closed-source models. The graph below shows that contributors from various countries, including China, India, Japan, Brazil, and others, have made significant contributions to the open-source community for generative AI and the United States. By including contributions from developers worldwide, the open-source LLM can be adapted to suit different regions' customs, languages, industries, and other usage habits. This will make the open-source LLM more versatile and appealing to a broader audience. <br>

<div style="margin-left: auto; margin-right: auto; width: 80%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.11 Top 10 global communities creating the most generative AI projects on GitHub (Source:Github)</center>

<br>

**Domestic open source base LLM is booming, keeping pace with global leaders**

Based on the domestic ecosystem of tech companies, the country's open-source pre-trained foundation LLMs are also booming, keeping pace with global leaders.

In June, Tsinghua ChatGLM was upgraded to the second generation, which took the "top spot" in the Chinese circle (Chinese C-Eval list), and ChatGLM3 launched in October not only has a performance comparable to that of GPT-4V at the multimodal level, but also is the first LLM product with code interaction capability in China ( Code Interpreter.)

In October, the Aquila LLM series has been fully upgraded to Aquila2, and Aquila2-34B with 34 billion parameters have been added. At that time, in 22 evaluation benchmarks in four dimensions, namely, code generation, examination, comprehension, reasoning, and language, Aquila2-34B strongly dominated the top 1 of several lists.

On November 6, the LLM startup company Zero One Everything, led by Dr. Kai-Fu Lee, officially open-sourced and released its first pre-trained LLM, Yi-34B, which has achieved amazing results in a number of leaderboards, including Hugging Face's Open LLM Leaderboard.

In December, Qwen-72B, a model with 72 billion parameters from AliCloud's Tongyi Qianwen, topped the Open LLM Leaderboard of Hugging Face, the world's largest modeling community, by overpowering domestic and international open-source LLM models such as Llama 2.

Domestic open-source pre-trained base LLMs are far more numerous than the above; the booming open-source pre-trained base LLM ecology is exciting, and it includes academic institutions, Internet giants, and some excellent startups. At the end of the report, the statistics of startups and models with open-sourced LLMs are summarized.

#### 2.2.4 PPaths to Commercialization of Open-source LLMs

Currently, we are in the era of rapid development of open-source LLM technology, a field that, while promising, also faces significant business model exploration challenges. Based on exchanges with practitioners and case studies, this paragraph attempts to summarize some of the directions of commercialization exploration at this stage.

**Provision of support services**

With the emergence of more and more basic open-source technologies, the complexity and professionalism of the software have increased dramatically, and the user's demand for software stability has increased simultaneously, requiring professional technical support. At this time, the emergence of Redhat as a representative of the enterprise began to try to achieve commercialization of the operation based on open-source software, the main business model for the "Support Services" model, for the use of open-source software customers to provide paid technical support and consulting services.The overall complexity and specialization of the current foundation model is high, and the user needs professional technical support as well.

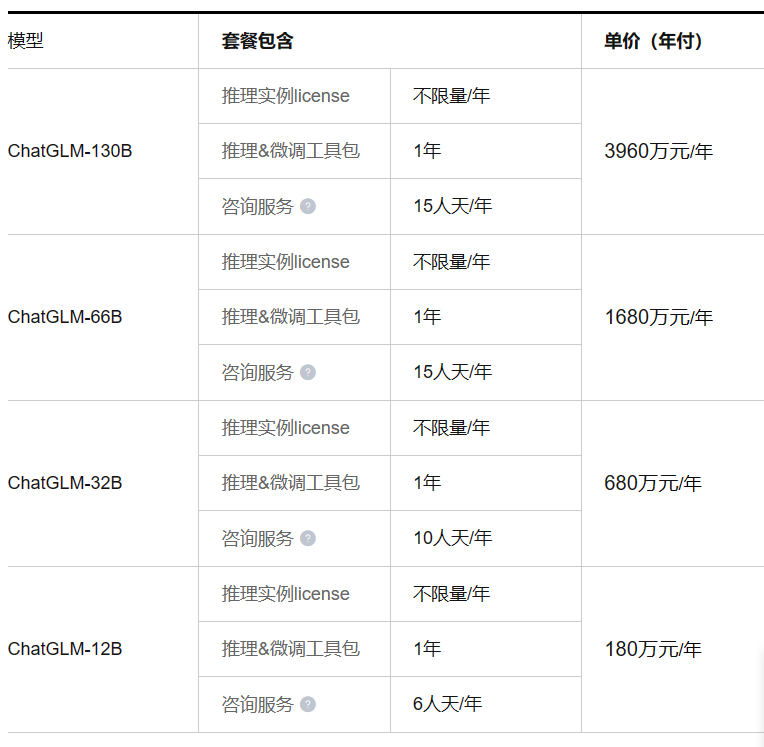

In the LLM space, Zhipu AI's business model is more similar to Redhat. It provides enterprises with local private deployment services of ChatGLM, a self-developed LLM, providing efficient data processing, model training and deployment services.Provide Wisdom Spectrum LLM files and related toolkits, users can train their own fine-tuned model and deploy reasoning services, on top of which Wisdom Spectrum will provide technical support and consulting related to the deployment of the application, updates of primary model. With this solution, companies can achieve complete control of data and run their models securely.<br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.12 Zhipu AI's Pricing Model for Private Deployment </center>

<br>

**Provision of cloud hosting services**

Cloud growth has continued to exceed expectations since the development of cloud computing technology.The growing need for flexible and scalable infrastructure is driving IT organizations' cloud spending and increasing cloud penetration worldwide. Against this technological backdrop, there is a growing demand from users to reduce software O&M costs. Cloud hosting services are SaaS that enable customers to skip on-premise deployment and host software as a service directly on a cloud platform. By subscribing to SaaS services,clients can turn high upfront capital expenditures into small recurring expenditures, and relieve O&M pressure to a large extent. Some of the more successful open-source software companies include Databricks, HashiCorp, and others.

In the field of LLMs, Zhipu AI directly provides standard API products based on ChatGLM, so that customers can quickly build their own proprietary LLM applications, pricing according to the number of tokens of text actually processed by the model. The service is suitable for scenarios that require high level of knowledge, reasoning ability and creativity, such as advertisement copywriting, novel writing, knowledge-based writing, code generation, etc. The pricing is:0.005 yuan / thousand tokens.

At the same time, Zhipu AI also provides API interfaces for super-simulated LLMs (supporting character-based role-playing, extended multi-round memory, and individualized character dialogues) and vector LLMs (vectorizing the input text information so as to combine with vector databases, provide external knowledge bases for LLMs, and improve the accuracy of LLM inference).

Hugging Face also offers a cloud-hosted business model. The Hugging Face platform hosts a large number of open-source models and also offers a cloud-based solution, the Hugging Face Inference API, which allows users to easily deploy and run these models in the cloud via an API.This model combines the accessibility of an open-source model with the convenience of cloud hosting, allowing users to use it on demand without having to set up and manage a large infrastructure on their own. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.13 Hugging Face Cloud Platform Charges </center>

<br>

**Development of commercial applications based on a foundation model**

Based on the base model to charge fees, refers to part of the open-source vendor's own base model is free open source, but the vendor based on the base model and developed a series of commercial applications, and for commercial applications to charge for the model, typical cases, such as Tongyi Qianwen.

AliCloud has developed eight applications based on its open-source base model Tongyi Qianqi:Tongyi Tingwu (speech recognition), Tongyi Xiaomei (to improve customer service efficiency), Tongyi Zhiwen (text comprehension), Tongyi Stardust (personalized roles), Tongyi Spirit Codes (to assist in programming), Tongyi Faerui (legal industry), Tongyi Renxin (pharmaceutical industry), and Tongyi Diaojin (financial industry).Each of these applications has a corresponding enterprise-level payment model. Also some of the apps include a individual-level payment model , such as Tongyi Tingwu. It mainly provides voice-to-text related services such as meeting minutes, and its charges are mainly calculated based on the length of the audio. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.14 Tongyi Tingwu Pricing Model </center>

<br>

**Model-as-a-Service business model**

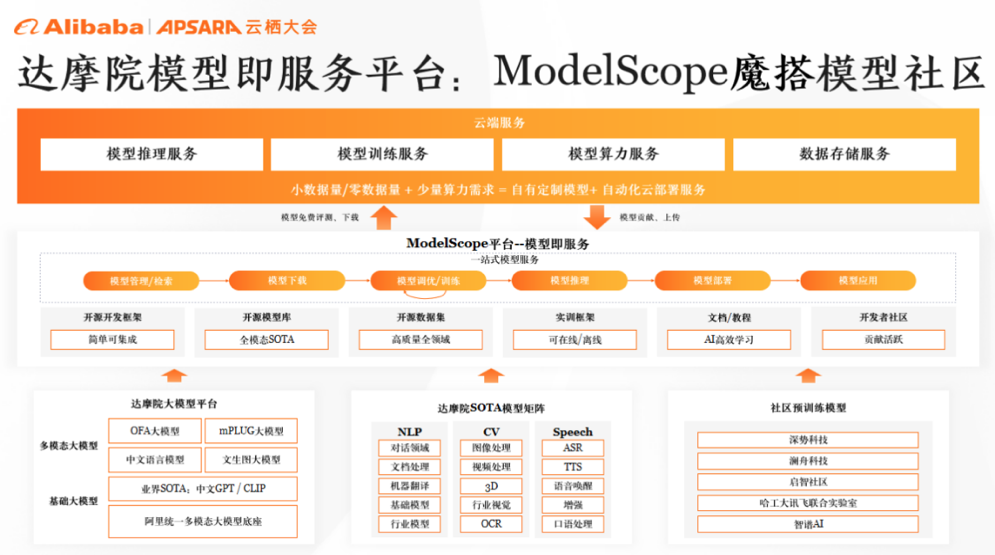

The lowest level of Model as a Service (abbreviated to:MaaS) means to take the model as an important production element, design products and technologies around the model life cycle, and provide a wide variety of products and technologies starting from the development of the model, including data processing, feature engineering, training and tuning of the model, and services for the model.

AliCloud initiated the "ModelScope Community" as the advocate of MaaS. In order to realize MaaS, AliCloud has made preparations in two aspects:One is to provide a model repository, which collects models, provides high-quality data, and can also be tuned for business scenarios. Model usage and computational need to be combined in order to provide a quick experience of the model so that a wide range of developers can quickly experience the effects of the model without having to coding. The second is to provide abstract interfaces or API interfaces so that developers can do secondary development for the model. In the face of specific application scenarios, providing fewer samples or zero samples, it is easy for developers to carry out secondary optimization of the model, which really allows the model to be applied to different scenarios. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.15 AliCloud:Model-as-a-Service </center>

<br>

**LLM business models need to be explored and experimented with**

Currently, the business path of open-source LLM companies has not yet been validated by the market, so a large number of companies are actively exploring different business models without sticking to a single pricing strategy. However, so far, no effective business model has been found to cover their high development and operating and maintenance costs, thus making their economic sustainability questionable.This situation reflects, to some extent, the nature of this emerging industry:While technological breakthroughs have been made, the question of how to translate these technologies into economic benefits remains an open one.

However, it is worth noting that despite such challenges, the rise and development of open-source LLMs still marks the birth of a new industry. This industry has its own unique value and potential, offering unprecedented technical support and innovation possibilities for a wide range of industries. In this process, all participants (including research institutions, enterprises, developers and users) are actively exploring and trying to find a model that can balance technological innovation and economic returns.

This exploration is not an overnight process; it takes time, experimentation, and a deep understanding of market and technology trends. We are likely to see a variety of innovative business models emerge, such as technical support services, cloud hosting, MaaS, etc. as mentioned above. Although the current business models for these open-source LLMs are not yet mature, it is this kind of exploration and experimentation that will drive the entire LLM field forward and ultimately find a business path to sustainable growth with profitable returns.

### 2.3 Making AI developer tools open-source has become an industry consensus at this stage

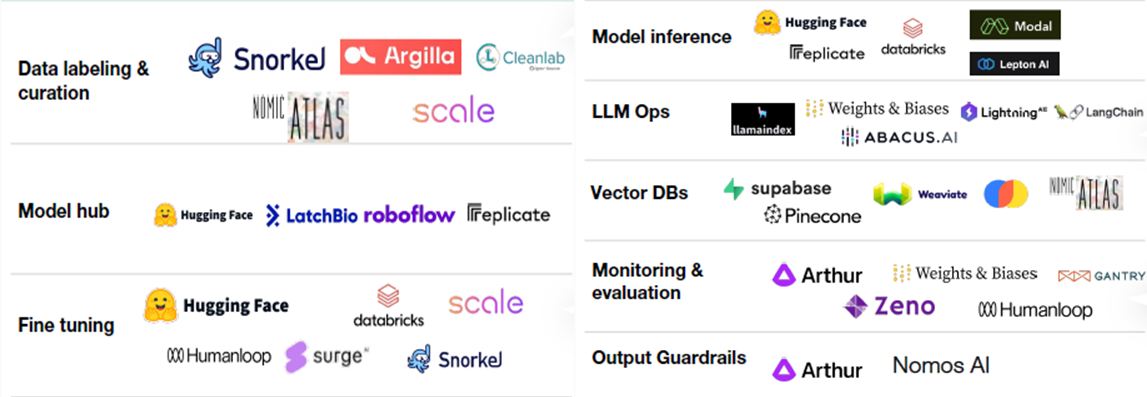

#### 2.3.1 Developer Tools Play an Important Role in the AI Chain

The Develop Tools layer is an important link in the chain of AI LLM development. As shown in the figure below, the development tools layer plays the role of the top and bottom, linking the middle layer:

For taking on computational resources, the development tool layer plays a PaaS-like role.Cloud-based platforms help LLM developers more easily deploy computational, development environments, invoke, and allocate resources, allowing them to focus on the logic and functionality of model development and realize their own innovations.

For linking pre-trained models, the development tool layer provides a series of tools to accelerate the development of the model layer, including dataset cleaning and labeling tools. <br>

<div style="margin-left: auto; margin-right: auto; width: 70%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.16 Location of Developer Tools in the AI LLM Chain </center>

<br>

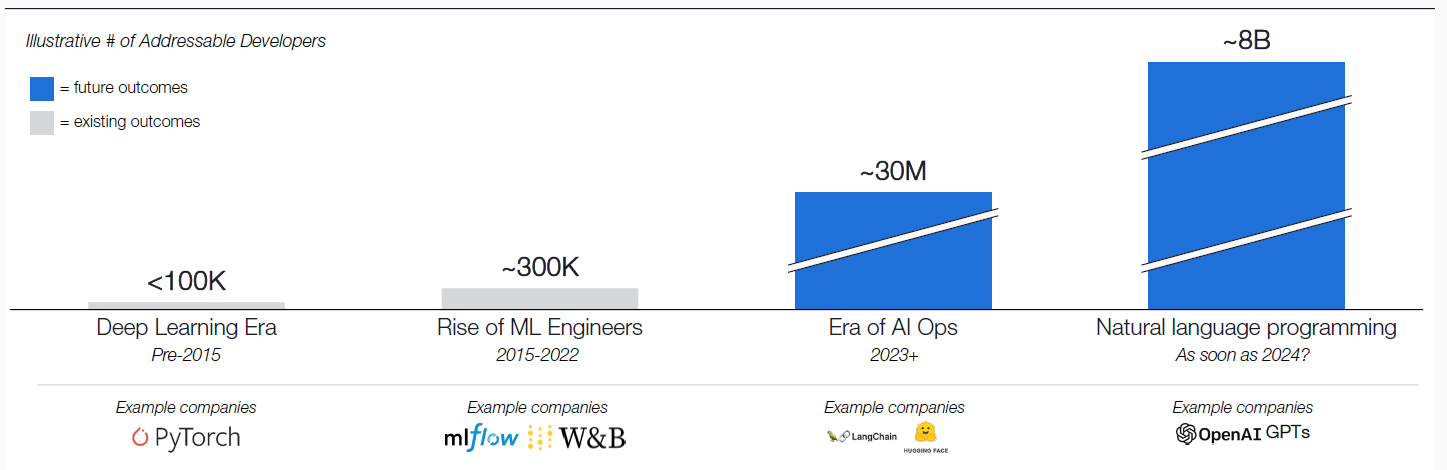

To promote the development of AI applications, the developer tools layer plays an essential role in helping enterprises and individual developers to develop and deploy their final products. For enterprise developers, developer tools help to realize the deployment of LLMs in the industry, as well as the monitoring of the model to ensure the regular operation of the enterprise model. Other related functions include model evaluation, database inference, and supplementation of the model running process. For individual developers, developer tools help them simplify deployment steps and reduce development costs, inspiring the creation of more fine-tuned models for specific functions, such as Autotrain by Hugging Face, which allows developers to fine-tune open-source models based on private data with just a few mouse clicks. At the same time, the developer tools also help to establish the connection between the end-user and the LLM APP and even the deployment of the LLM on the end-user's device.

With the increasing maturity and advancement of development tools, more and more developers are venturing into development related to LLMs. These tools not only improve development efficiency but also lower the barrier to entry, enabling more innovative-thinking talent to participate in the field. From data processing and model training to performance optimization, these tools provide comprehensive support for developers. As a result, we have witnessed the birth of a diverse and active LLM development community with some cutting-edge projects and innovative applications. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.17 Growing Number of AI LLM Developers </center>

<br>

LLM development tools are blossoming, covering everything from data preparation and model construction to performance tuning, and they continue to push the frontiers of AI technology. Some tools focus on data annotation and cleaning so that developers can more easily obtain high-quality data; some tools are committed to improving the efficiency of fine-tuning so that the LLM is more in line with the customization needs; there are also tools responsible for the operation of the LLM monitoring, to provide timely feedback to the developers, users. These diverse tools promote technological innovation and provide developers with more choices, together building a vibrant and creative ecosystem for LLM development. There is no shortage of great open-source projects that greatly benefit both users and open-source companies. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.18 Large number of development tools covering different levels of LLM development </center>

<br>

#### 2.3.2 Open source for developer tools is important

**Supply-side benefits**

Open-source developer tools are conducive to polishing and upgrading the product in different scenarios, which contributes to its rapid maturity. One of the main advantages of open-source developer tools is that they provide an extensive testing and application environment. Because open-source tools are freely available for use and modification by a variety of users and organizations, they are often applied and tested in diverse real-world scenarios and are thus "battle-tested. "This extensive use and feedback helps the product identify and fix potential defects more quickly while facilitating the development of new features and improvements to existing ones. Especially for startups,this is the fastest and most cost-effective way to get product feedback, promote product improvement, and help quickly bring more mature commercialized products to market.

Open-source developer tools underlying products with high user stickiness are conducive to rapidly spreading the market. As mentioned earlier, developer tools contain many indispensable components of the LLM development process. Once developers become accustomed to specific tools, they tend to use them consistently because changing tools means relearning and adapting to the new tool's features and usage. Therefore, these products naturally have high user stickiness. <br>

<center> Figure 2.19 High user stickiness of open source development tools </center>

<br>

The chart shows the net revenue retention rate for major SaaS products, which reflects the retention rate of regular customers, their ability to keep paying, and their loyalty to the product. Developer product stickiness is generally higher than the median, with Snowflake at the top of the list at 174% and Hashicorp, Gitlab, and Confluent at over 120%.

As you can see, with such high stickiness, the faster the customer acquisition rate, the higher future revenues will be. When these tools are available as open source, they can be more quickly and widely adopted because open source lowers the barrier to trying and adopting new tools. This rapid market expansion is critical to building brand awareness and a user base.

**Demand-side benefits**

Open-source developer tools reduce the cost for SMEs to enter the LLM market, making it easier for them to focus more on application layer development. For SMEs, entering the market to develop large-scale models and complex systems often requires significant technical investment and financial support. Open-source developer tools lower this barrier because they are usually free or less expensive overall and contain many proven features and components. SMEs can utilize these resources to develop and test their products without creating all the essential elements from scratch. In this way, they can focus more resources and energy on application-level innovations and solutions for specific business needs rather than spend much time and money building the underlying technology. This reduces the cost of entry and speeds up product development, enabling SMEs to compete more effectively with larger firms.

Due to the ecological effect of open-source development tools, their technology iterations usually outpace closed-source tools. In such an open-source ecosystem, the latest research results from the lab can be quickly integrated and shared, and such a mechanism ensures the rapid updating and dissemination of technology. Active participation in the open-source community facilitates the rapid exchange of innovative ideas and technologies, making the latest development tools and technological achievements accessible and usable by many developers. The strength of this open-source culture is that it is open and collaborative, providing developers with a quick and easy way to access and utilize state-of-the-art tools. It not only accelerates the development of technology but also offers individual developers or small teams the opportunity to compete with large corporations, promoting the healthy development and innovation of the entire technology sector.

#### 2.3.3 Open-source developer tools need to emphasize ecological construction.

**Making developer tools open source requires technical support to maintain a stable community ecosystem**

Open-source development tools rely on the support and maintenance provided by the community and partners. This is essential to ensure the stability and reliability of the tool. For example, the success of an open-source database management system depends not only on its functionality but also on the community's ability to respond to user-reported problems and provide fixes promptly. At the same time, market feedback from partners and users in the ecosystem is critical to optimizing open-source development tools. If an open-source code analysis tool is widely used in an enterprise environment, the feedback from those enterprise users will directly influence the future direction of the tool. This feedback helps developers understand which features are most popular and which need improvement to tailor the tool to market needs.

**Open source developer tools need to complement the strengths of cloud vendors to expand market reach and user base**

The developer tools themselves are to be deployed based on the platform provided by the cloud vendor, whose strength lies in its specialization and technical strength. In contrast, the cloud vendor's advantage lies in delivering the just-needed computational platform and its broader user base. The two collaborate on developer tools, and developers can leverage cloud vendors to offer better computational power deals to attract more users while benefiting from the cloud vendors' own sales channels to gain more substantial end-to-end reach. This virtuous cycle helps to extend open-source development tools to a broader user base. This increases the tool's visibility and provides more opportunities for its practical application and improvement. More users means more feedback, which promotes continuous tool optimization and adaptation to changing market needs.

MongoDB, for example, started its cloud transformation early by launching Atlas, a SaaS service. Even though Atlas accounted for only 1% of total revenue when MongoDB went public in 2017, when MongoDB had already built all of its systems based on the Open Core model, MongoDB still spent a lot of resources on building SaaS-related products and marketing systems. Since then, Atlas's revenue has increased at a compound annual growth rate of more than 40%.In contrast, its competitor, CouchBase, has relied too heavily on its traditional model and has spent a lot of effort on mobile platform support services. This slow-growing market has dragged the company into a quagmire.SaaS service-based product systems are essential for developer tools vendors today and must emphasize cooperation with cloud vendors. <br>

<div style="margin-left: auto; margin-right: auto; width: 75%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.20 MongoDB Sales Revenue by Product </center>

<br>

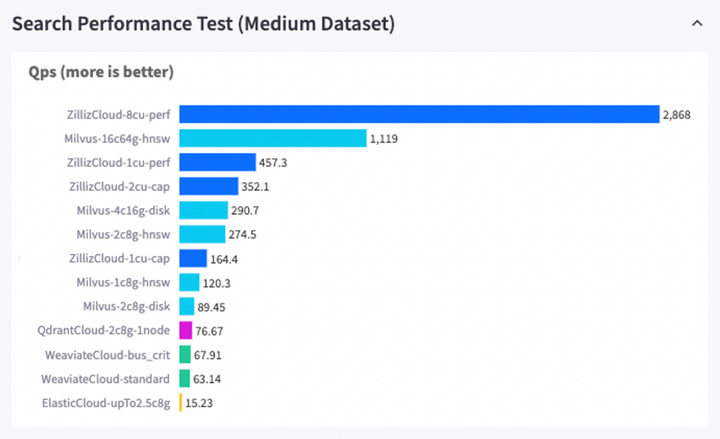

**Establishing an ecology conducive to building open source industry standards**

Developer tools, as the underlying tool layer, are decisive for the principle architecture of the upper model development. Collaboration with partners such as cloud vendors, open-source model vendors, and others helps to build consensus and establish industry standards, which is critical to ensure interoperability, compatibility, and consistency of user experience with development tools. Standardization reduces compatibility issues and enables easier integration and use of different products and services. For example, MongoDB leverages the community to form the industry standard for NoSQL RDMS. This active community not only brought high-quality, low-cost licenses to the early commercial versions of MongoDB but also served as the basis for the later Atlas (managed service). Based on the collaboration of the open-source community, Milvus launched Vector DB Bench (which can measure the performance of vector databases through the measurement of key metrics, allowing vector databases to maximize their potential), thus gradually establishing an industry standard for vector databases, and facilitating the selection of vector databases tailored to the needs of users. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.21 Vector database evaluation results </center>

<br>

#### 2.3.4 Exploring the Commercialization Path of Open Source Developer Tools

The commercialization dimension of AI developer tools can draw on traditional software developer tools; the overall commercialization is still in the early stage of exploration; based on the research and analysis of open-source developer tools that have attempted commercialization, we found that there are currently following commercial paths:

**Cloud Hosting Managed Service - Consumption-Based Pricingg**

With the popularity of cloud computing, more and more developer tools have defaulted to serving users directly through hosted resources on the cloud. Such hosting services on the cloud can reduce the user's threshold for use but also directly provide the latest and most professional product services; in the absence of data, security, and privacy concerns, it is a good commercialization option for open-source AI developer tools projects.

Under the business model of hosting services on the cloud, more and more projects are choosing Consumption-Based Pricing (CBP) with different product offerings; the pricing unit can be computational resources, data volume, number of requests, etc.

AutoTrain by Hugging Face is a platform that automatically selects suitable models and fine-tunes them based on a user-supplied dataset. It has selectable model categories, including text categorization, text regression, entity recognition, summarization, question answering, translation, and tables. AutoTrain provides non-researchers with the ability to train high-performance NLP models and deploy them at scale quickly and efficiently. AutoTrain's pricing rules are not disclosed; rather, an estimated fee is charged before training based on the amount of training data and model variants.

Scale AI focuses on data annotation products with a simple pricing model that starts at 2 cents per image and 6 cents per annotation for Scale image, 13 cents per frame and 3 cents per annotation for Scale Video, 5 cents per task and 3 cents per annotation for Scale Text, and 7 cents per annotation for Scale Document Al. Scale Text starts at 5 cents per task and 3 cents per entry; Scale Document begins at 2 cents per task and 7 cents per entry. In addition, there are enterprise-specific charging options based on the amount of data and services for specific enterprise-level projects.

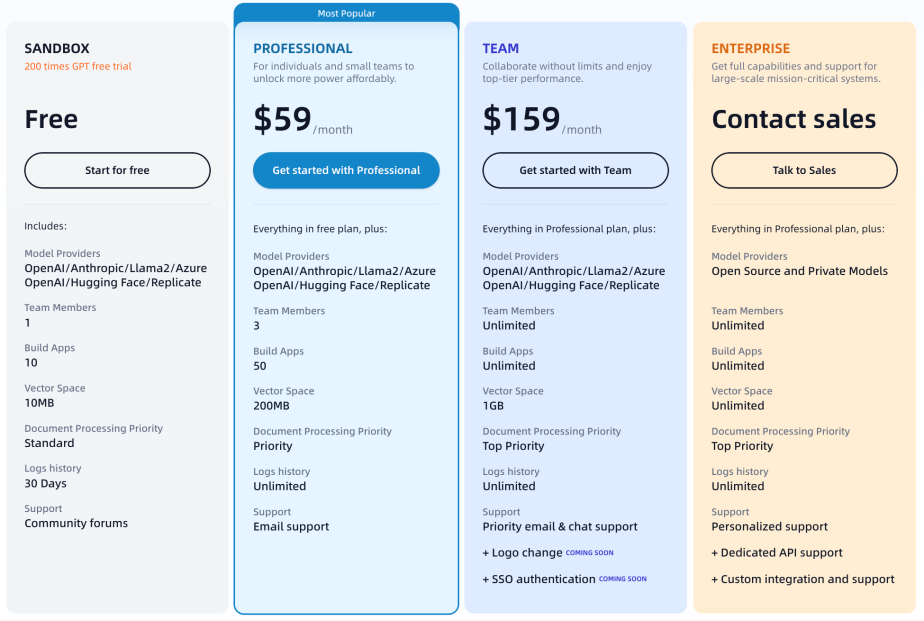

**Cloud Hosting Managed Service - tiered subscription pricing**

Some development tool layer projects also use Cloud Hosting Managed Services but offer subscription services yearly or monthly. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.22 Dify.AI subscription pricing </center>

<br>

The subscription business model allows different tiers to balance cost and price according to users' needs and willingness to pay. The company Dify.ai, pictured above, for example, has tiered pricing for different volumes of users: There is a free version for individual users, but given the cost overhead, there are many limitations set; for professional individual developers and small teams, there are fewer limitations for a lower price, but there is still an upper limit on usage; and for medium-sized teams, there is a higher price for a relatively complete service.

However, Cloud Hosting Managed Services, whether per-volume pricing or tiered subscriptions, can only offer standardized product services, and the data needs to flow to the public cloud. Some large enterprises still need to privatize and customize such a business model.

**Private Cloud / Dedicated cloud / Customized Deployment**

While more and more projects are utilizing services hosted directly on the cloud, hosted services on the cloud are no longer an option when larger enterprises need to have more private, customized requirements.

Usually, with such a business model, the program also offers different options to the users. The Bring Your Own Cloud (BYOC) model is prevalent in North America, while the On-Premise scenario is better suited for more data-compliance-sensitive scenarios.

The commercialization of open source projects at the development tool level often provides a variety of options, including the above three business models. This can be interpreted as the diversity and complexity of customer demand at this level. In exploring business models, various projects are also attempting to synchronize different paths. The future direction of development is worthy of long-term sustained attention.

#### 2.3.5 Successful cases of open source on the developer's tool side

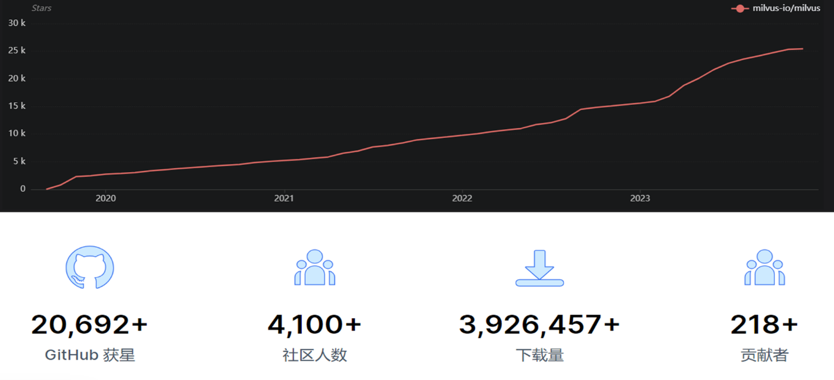

Zilliz is a next-generation data processing and analytics platform for AI that provides the underlying technology for application-oriented enterprises. Zilliz developed Mega, a GPU-accelerated AI data middleware solution, which includes MegaETL for data ETL, MegaWise for database, MegaLearning for model training in the Hadoop ecosystem, and Milvus for feature vector retrieval. These systems can meet the traditional scenarios and needs of accelerated data ETL, accelerated data warehousing, and accelerated data analytics, as well as emerging AI application scenarios. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.23 Zilliz Global Users (from company website)</center>

<br>

Zilliz's success represents a GPU-based giant data accelerator that provides an effective solution to organizations' growing data analytics needs. Zilliz's core project, the vector similarity search engine Milvus, is the world's first GPU-accelerated massive feature vector matching and retrieval engine. Relying on GPU acceleration, Milvus provides high-speed feature vector matching and multi-dimensional data joint query (joint query of features, labels, images, videos, text, and speech) and supports automatic database sharding and multi-replicas, which can interface with AI models such as TensorFlow, PyTorch, and MxNet, enabling second-level queries for billions of feature vectors. Milvus was open-sourced on GitHub in October 2019, and the number of Stars continues to grow at a high rate, reaching 25k+ in December 2023, with a developer community of over 200 contributors and 4000 + users. In the capital market, Zilliz received $43 million in Series B, the most significant single Series B financing for open-source infrastructure software worldwide. This indicates that investment institutions are optimistic about Zilliz's potential for future development. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.24 Zilliz Github Community Operations </center>

<br>

Zilliz's main product is the Vector Database, a key piece of developer tools. It is a database system specialized in storing, indexing, and querying embedded vectors. This allows LLMs to store and read knowledge bases more efficiently and fine-tune models at a much lower cost. It will also play an important role in the evolution of AI-native applications.

Zilliz is commercialized as Zilliz Cloud, with a monthly subscription business model. It is deployed in the form of SaaS, and determines the monthly subscription fee based on the number of vectors, vector dimensions, computational unit (CU) type, and average data length. Zilliz also offers a PaaS-based proprietary deployment service for scenarios with a high focus on data privacy and compliance, which is based on customized pricing.<br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.25 Example of Zilliz Price Calculator </center>

<br>

### 2.4 Open-source tools for the AI application layer are blooming

#### 2.4.1 Application Layer Open Source Tools Bloom

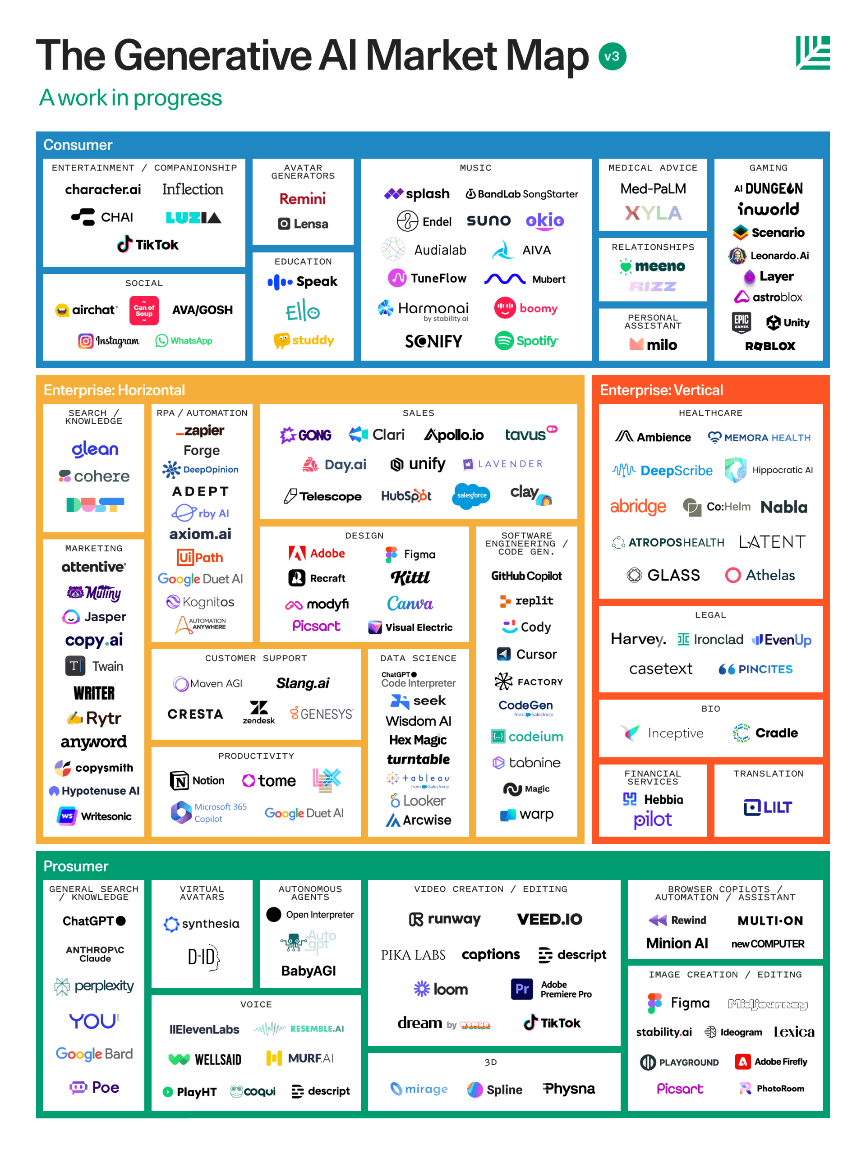

The development of application-layer AI is like a blossoming landscape, showing a spectacular picture of technological diversity and application breadth. Nowadays, the influence of application layer AI is expanding, some of them are oriented to consumer users, providing services covering all aspects of daily life, such as entertainment, socialization, music, personal health assistant, etc.; at the same time, they also play an important role in more specialized business fields, such as market analysis, legal processing, intelligent design, etc. These applications demonstrate the depth and breadth of AI technology, which not only improves efficiency and convenience, but also promotes innovation and technological advancement to a great extent. <br>

<div style="margin-left: auto; margin-right: auto; width: 60%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.26 A wildly diverse array of AI application layer products (source:Sequoia)</center>

<br>

A large number of open-source application layer products have also been born, which are mostly based on LLMs and fine-tuned with industry-specific datasets. Application layer tools customized for the industry offer better performance than the generic LLMs, and the open-source nature helps bueiness and consumer users using these applications to further customize their development to better fit the needs.

Open-source tools at the application layer facilitate integration across disciplines and industries. For example, industries such as medicine, finance, education, and retail are utilizing open-source AI tools to solve industry-specific problems, driving the adoption of the technology across all sectors. Open-source tools encourage experimentation and innovation due to low cost and low risk. Developers are free to experiment with new ideas and technologies, and this spirit of experimentation has greatly contributed to the application layer boom. <br>

<div style="margin-left: auto; margin-right: auto; width: 90%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.27 Mapping of open-source tools for application testing (with examples of selected products in each domain)</center>

<br>

#### 2.4.2 Drivers of open source at the application layer

**Open-source application layer products have a low threshold for use and are more easily accepted by users**

Application layer open-source tools are less expensive and more in line with the low willingness of domestic enterprises to pay. According toiResearch, domestic enterprises are not professional enough in their internal management processes, have low recognition of the value of software, and are more willing to pay for manpower. Manufacturers need to curve to indoctrinate companies, give them a reprieve from accepting the product, and gradually unleash the demand side. Based on the above background, open-source tools meet the needs of these markets with their low-cost features, making organizations more willing to try and adopt these tools. For domestic companies with limited budgets, low cost is a significant advantage. Low- or no-cost features allow these organizations to access and use advanced technology tools without additional financial burden.

At the same time the low-cost nature of open-source tools encourages companies to make long-term investments. Firms can build and expand their technology infrastructure over time without taking on significant financial risk. With the deepening of the enterprise's understanding of open-source products and the deepening of the degree of dependence, open-source products can gradually consider providing value-added services content, so as to achieve the purpose of long-term customer acquisition.

At the same time open-source products are conducive to achieve seamless integration with other systems to enhance the user experience. A distinguishing feature of open-source application layer products is that they are often highly flexible and customizable. Allows users to modify and adapt to their specific needs. This means that open-source products can be customized to better fit existing systems and workflows for seamless integration with other systems. Many open-source projects follow industry standards, which helps ensure compatibility between different systems and components. Standardization promotes interoperability between different software products and simplifies the integration process, thereby improving the overall user experience. Open-source communities are typically made up of developers and users from around the world who work together to improve products and provide support. This collaborative spirit not only fosters continuous improvement of the product, but also provides a resource for solving problems that may be encountered during the integration process.

**Open-source application layer products can receive contributions from the community to facilitate technology iteration and broaden the application scenarios**

Application layer open-source can receive strong support from community development forces. As the application scenarios are more diverse and decentralized, the needs of different sub-scenarios are more differentiated, and the expertise of contributors to the corresponding scenarios is more demanding. Stable Diffusion (SD) is an open-source text-to-image application that, with the power of the community, has been rapidly catching up in terms of performance since its release and in some ways surpassing the closed-source text-to-image application Midjourney. While there are some inconveniences when using Stable Diffusion, users have access to hundreds of LoRAs, fine-tuning settings, and text embeds from the community. For example, users of Stable Diffusion found it to be limited in its ability to process hand images. In response, the community reacted quickly and within the next few weeks a LoRA fix was developed specifically for the hand image issue. This timely and professional feedback from the community greatly contributes to the rapid advancement and improvement of application layer open-source tools.

Open-source products, due to a lower barrier to use, may be adopted by users from different industries and backgrounds in a variety of environments and contexts as soon as they are released. These application scenarios may go far beyond the developer's initial design and imagination. When products are used in these diverse scenarios, they may reveal new potential or needs, revealing previously unnoticed usage scenarios. This can provide product developers with valuable insights into how their products are performing in real-world use and potential room for improvement. Faced with these newly discovered usage scenarios, developers have the opportunity to innovate and improve.They can add new features, optimize existing features, or redesign products to better meet these needs based on actual user experience in different environments. The iteration based on real-world use cases, is a key driver for the continued progress of open-source products.

**Application layer open-source products have Product-Led Growth (PLG) model features that can drive paid conversions**

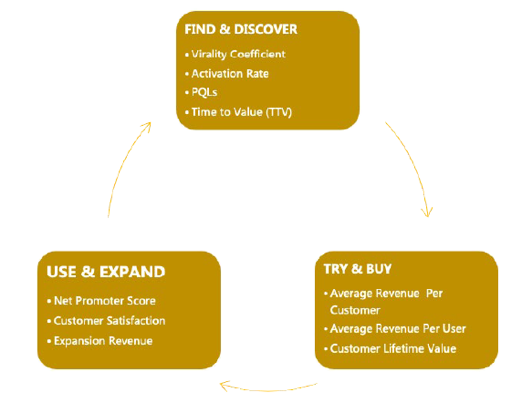

The PLG model focuses on customer acquisition through a bottom-up sales model, where the product is at the center of the entire sales process. The PLG model's growth flywheel has three main phases:Acquisition, Conversion, and Retention. In all three phases, open-source has advantages that distinguish it from traditional business models.

In the customer acquisition phase, the open-source operating model reduces the cost of customer acquisition and makes the customer acquisition process more targeted. The interactions between developers and the community-based collaboration brought about by platforms such as GitHub accelerate the spread of customer acquisition. The initial customer orientation of open-source products is usually participants in the open-source community, often developers or IT staff in the organization. By nurturing these quality prospects, you also have a "mass base". Communities help open up the boundaries of the enterprise and make word-of-mouth spreading of good open-source projects and products possible. Users spontaneously download and use it in order to solve their own problems and pain points. At this point, open-source software products are not just used as a way to solve user problems through functionality, but can also be a vehicle to help organizations spread and grow. In the long run, it will be possible to reduce the cost of customer acquisition for your organization, allowing for more and more automated customer acquisition and lowering expenses on the sales side.

At the conversion stage, open-source software tends to have a higher paid conversion rate compared to traditional commercial software. On the one hand, when the user has used the free version of the software, as long as the software's functions can well meet the user's needs, it can be converted into a paid conversion at the speed of a shorter cycle, and make it a long-term user. On the other hand, companies can conduct targeted conversion follow-up and up-selling by observing users' behavior with the free version of the software, for example, by providing the sales team with a list of customers who have exceeded their usage limits and are ready to pay. In addition to traditional sales conversions, conversions can also be made through self-service buying paths (Self-service selling), which largely reduces the cost of sales.

In the retention phase, open-source software allows users to avoid the risk of vendor lock-in, making them willing to engage in long-term use. Based on the same open-source project, there may be multiple vendors downstream that offer software with similar functionality, and the choice of vendor can be changed at a relatively small cost, so users can be confident in their choice of software for the long term. On the contrary, when a customer uses a closed-source product, if he/she wants to switch to another software after a period of time, he/she needs to redeploy hardware, data, etc., resulting in a significant transfer cost. Thus when users choose to use closed-source software, they may abandon their continued use of the software because the software's later development does not meet their needs or the cost of transferring it is too high. <br>

<div style="margin-left: auto; margin-right: auto; width: 60%">

|  |

| --------------------------------------------------------- |

</div>

<center> Figure 2.28 Application Layer Open-source Growth Flywheel </center>

<br>

#### 2.4.3 Market Status of LLM Application Layer Open Source

**Internet giants and startups working together**

There are opportunities for both Internet giants and startups to participate and compete in the LLM application layer open-source market. This is due to several factors:1) The lowered technology barrier. The open-source of the modeling layer and developer tools layer lowers the threshold of technology acquisition and application. Instead of having to develop complex LLM algorithms from scratch, startups can utilize open-source models and tools to develop solutions that meet specific needs. 2) Cost-effectiveness. Open-source models often do not require costly licenses or API fees, which is especially beneficial for SMEs with relatively limited capital. 3) Innovation and flexibility. Startups are often able to adapt more quickly to market changes and innovate for specific market segments or application scenarios.

At present, the Internet giants are mainly based on the LLM, on which they extend a series of vertical applications. For example, Ali's Tongyi Qianwen recently released Tongyi Qianwen 2.0 and derived eight applications based on it:Tongyi Tingwu (speech recognition), Tongyi Xiaomei (improving customer service efficiency), Tongyi Zhiwen (understanding text), Tongyi Stardust (personalized roles), Tongyi Lingyi (assisted programming), Tongyi Faryi (legal industry), Tongyi Renshen (pharmaceutical industry), and Tongyi Dijin (financial industry).

Startups mainly choose a certain niche industry for deep cultivation, such as Lanboat Technology's self-developed LLM focusing on marketing, finance, cultural creativity and other scenarios; XrayGPT focusing on medical radiology image analysis; Finchat focusing on financial field models, etc. Yunqi Partners has supported two open-source application layer startups this year, TabbyML, a tool to aid programming, and Realchar, an AI personal assistant that allows for real-time customization, both of which have quickly amassed a large number of users on Github.

**Competitive landscapes in Business-end and Consumer-end are different**

Significant differences in the competitive landscape between the business-end and consumer-end of the open-source market for LLM application layers:

- **To-Business Markets**:Enterprise-oriented applications are typically focused on improving efficiency, reducing costs, and enhancing decision-making capabilities. In this area, open-source LLMs can be used to automate processes, data analytics, customer service optimization, and more. The competition here focuses more on the practicality of the technology and the ability to customize it.

- **To-Consumer Markets**:Consumer-oriented applications are more focused on user experience, interactivity and ease of use. This includes personalized recommendations, virtual assistants, entertainment and social media apps, and more. Competition in the consumer market is more about innovative user interfaces and new features that appeal to users.

**Large number of sub-scenarios still belong to the blue ocean market, no obvious lead**

As technology evolves, market demand for AI applications becomes more segmented.For example, in industries such as healthcare, law, finance, and education, each field has its own unique needs and challenges. These market segments offer a great deal of opportunity, but also require targeted solutions.There are a number of relevant applications emerging in each of these areas, but most are at the start-up stage and have yet to produce a headline application. And because there are so many segments of the industry, there is not much competition, making it a better opportunity to get in. In these blue ocean markets, no clear market leader has yet formed due to the novelty and constant evolution of the market. This provides opportunities for new entrants and innovators to capture market share through unique solutions or innovative business models.

**Expect innovative applications to emerge based on the new capabilities of LLMs**

Although significant progress has been made in LLM technology, its deep integration and innovative application in specific application areas is still in its infancy. This means that there is plenty of room to explore and implement new ways of applying it in many sub-scenarios.With the rapid development of large-scale AI models, we are ushering in a new era of potential and innovation. These models will not only optimize and improve existing technology applications, but more importantly, they will be pioneers in leading completely new markets and application areas. In a future full of unknowns and surprises, we can look forward to the emergence of a huge variety of powerful new applications that will be integrated into our daily lives in unprecedented ways. These emerging markets and applications will open a window into never-before-seen possibilities for far-reaching social and cultural change. They will stimulate human creativity and imagination, pushing us to break through existing technological boundaries and explore a wider world.