---

tags: forge

title: Forge.AI ANvIL v06.40

---

# Forge.AI ANvIL v06.40

#### Table of Content

[TOC]

# Forge.AI Overview

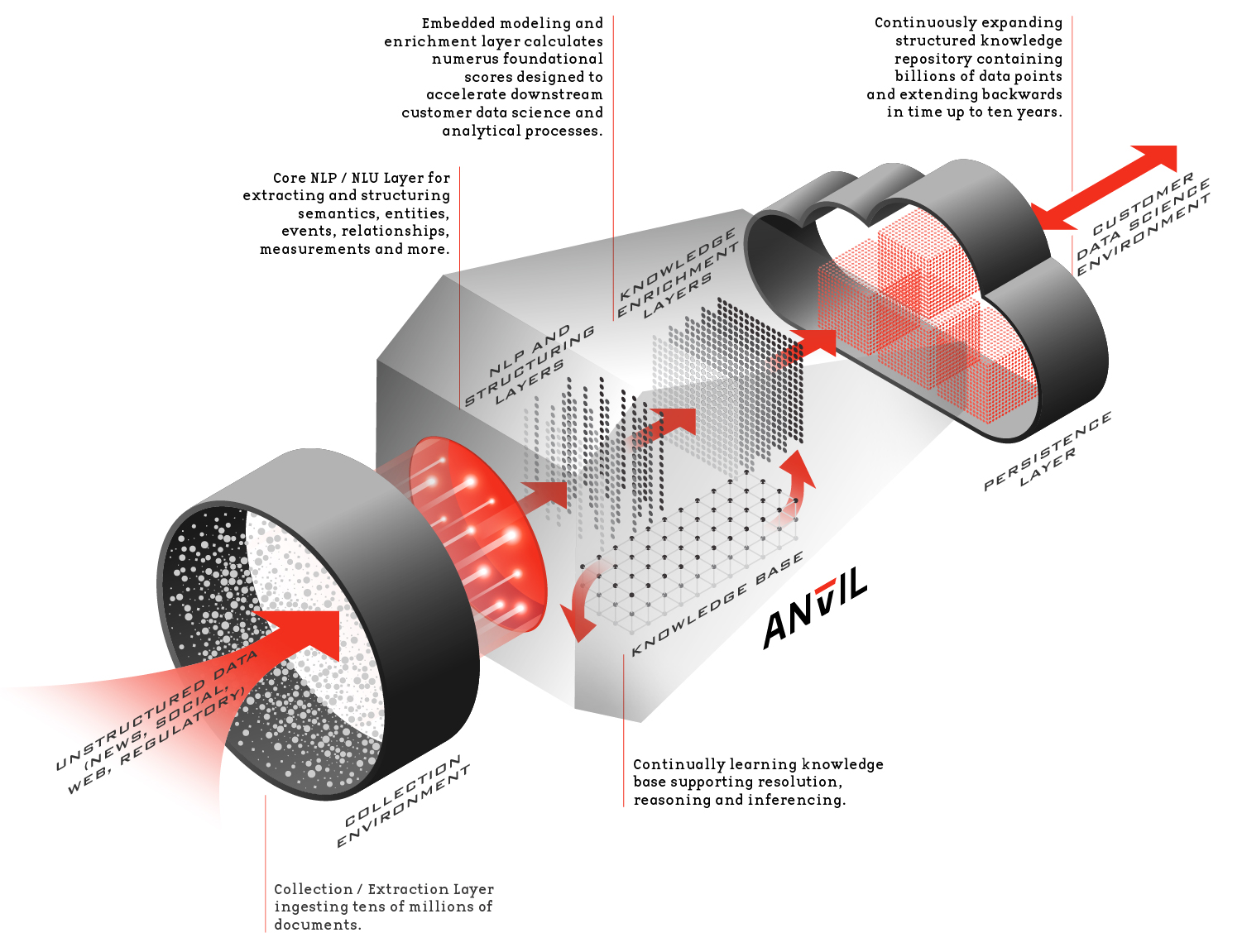

Forge.AI is a cloud-based data and knowledge platform that powers the analytical, model driven organization. We create real time structured knowledge and data feeds out of the chaotic ocean of unstructured data that flows across the globe and throughout your organization. Our customers can explore, discover, monitor and model the knowledge and information captured in that unstructured data in fundamentally new and powerful ways enabling the rapid delivery of high-impact, data driven models that create a competitive advantage.

Forge.AI amplifies the ++quantity++ of data science your organization can conduct. We eliminate the time, expense and technology challenges associated with trying to create and sustain a data pipeline that incorporates the at scale transformation of unstructured data into algorithmically relevant structured data. We fundamentally accelerate the speed at which your data scientist can, explore hypothesis, iterate on and deploy models that deliver business value.

Forge.AI creates a new paradigm in terms of the ++quality++ and sophistication of data science your organization can conduct. Historically, data science conducted with unstructured data has been “shallow,” meaning model inputs tend to be simple (e.g. word counts) and model outputs paint with a broad brush (e.g. traditional sentiment analysis). Forge.AI brings unparalleled fidelity to the identification, extraction, resolution and semantic framing of the interaction between the entities, events and relationships expressed in unstructured data. This fidelity enables a new level of sophistication and time series modeling and analysis within your organization. See more. See faster. Predict better.

## Forge.AI Platform Abstract

Forge.AI’s cloud-based ANvIL platform collects publicly available information in real time and transforms it into an ever-growing always accessible library of structured, intelligent data that lends itself to times series analysis and other forms of advanced analytics.

ANvIL supports the analytical enterprise and is resource that: (i) generates enhanced data that is suitable for a wide assortment of machine learning and artificial intelligence applications and enhancing data science environments; (ii) improves automated decision making and reasoning processes and (iii) enhances internal knowledge bases. The data resources created by Forge.AI are made available to our customers in either a streaming format or addressable through a SQL enabled cloud data warehouse (Snowflake).

Our customers simply “plug into” the ANvIL’s data environment and immediately, transformed and enriched data begins to flow is available to your data scientists, analysts and algorithmic infrastructure...

To give a sense as to what is happening “under the hood,” a brief abstract of the Forge.AI ANvIL platform is detailed below:

++**Collection**++: ANvIL’s collection and extraction environment operates in real time 7x24x365. Processing millions of documents monthly, ANvIL’s source information is collected from tens of thousands of national / international, local, and industry specific news sites, organizations web sites, different US and international sites (e.g. the SEC) and social media (in addition to private feeds and / or feeds that you provide directly to us). Specially designed algorithms continually learn and optimize the process by which we collect source information. We abide by all politeness clause and other requirements in our collection process. If there is a source of data or information, we are not collecting that is relevant to you we will work with you and that data source to incorporate it into our ecosystem.

++**NLP and Structuring Layer**++: Our proprietary natural language processing and natural language understanding environment extracts and structures relevant entities, events, relationships, measurements and relevant semantics. In addition, it extracts a host of meta information and generates AI informed “calculated data” derived from source documents directly and/or in conjunction with our knowledge base.

++**Knowledge Enrichment Layer**++: The Knowledge and Enrichment Layer calculates numerous foundational scores designed to accelerate downstream customer data science and analytical processes. Ranging from entity level sentiment through various momentum and saliency scores through various topic vectors, these foundational elements are immediately usable for various enhanced analytical processes that support various modeling tasks associated with risk engineering, trading, thematic exposure and the like.

++**Knowledge Base Layer**++: ANvIL’s semantic, temporal and probabilistic knowledge base works in concert with the other components of ANvIL to resolve all relevant data. Furthermore, in addition to supporting reasoning and inferencing tasks, ANvIL’s Knowledge Base is continually growing and learning as Forge is processing millions of documents monthly creating a source of real time compounding intelligence.

The knowledge base is also where Forge.AI maintains the various ontologies we support. An overview of the primary ontologies is listed below. It is worth noting that Forge.AI adds to the ontologies we support both organically and at the request of customers:

- Domain Specific Ontologies

- Corporate Structure

- People and Corporate Relationships

- Business Concepts

- Financial Concepts

- Marketing Concepts

- Risk (Operational Risk, Credit Risk, Market Risk)

- Science (Physics, Chemistry, Biology, etc.)

- Military and National Security

- Events

- Custom Ontologies / Ontologies as Requested

++**Persistence Layer**++: All knowledge and data processed by ANvIL persists in ANvIL’s expanding knowledge repository. This repository is predicated and built out of the tens of millions of documents ANvIL processes, is continually growing in real time and its footprint extends backwards in time up to ten years predicated on source.

++**Access to ANvIL and Data Output and Syndication**++: Data is syndicated to our customer environment in one of two ways.

- Option One: Customers connect with ANvIL’s real time cloud-based data warehouse. This data document which follows, describes how the data is managed in ANvIL’s cloud data warehouse. It is intended to orient the customer to nature of the data managed in the data warehouse, its structure, and how to query the data and integrate it into your operations.

- Option Two: Customers connect with our real-time streaming data environment. This environment is conceptually similar in structure our cloud-based data warehouse; however the representation is in XML or JSON and the delivery mechanism uses HTTPS 2.0 Push. Separate documentation is available which describes this environment.

The format of the data that is syndicated to our customers is extensively described below. There are three “classes” of data extracted and generated by Forge.AI:

- Direct data that corresponds directly with a published document (e.g. news story, SEC filing, tweet, etc.);

- Calculated information that is derived from a published document, such as an automated LDA topic vectors representing the source information;

- Aggregate information derived by looking at a large collection of the corpus of processed data.

## Connecting with the Forge.AI Platform

Connecting with the Forge.AI environment is simple, and an abstract is provided below. You simply choose to connect to our cloud-based data warehouse, or you choose to connect to our push API.

# Schema

`ARTICLES_V0640`

: The `ARTICLES` schema contains all general articles since Oct-2018.

`DJN_V0640`

: The `DJN` schema contains all the Dow Jones news feed since 2008 (when applicable.)

`SEC_8K_V0640`/`SEC_10K_V0640`/`SEC_10Q_V0640`

: The `SEC_*` schema contain all the SEC forms 8K/10K/10Q filings since 2009.

These schemas adhere to version [06.20](https://hackmd.io/@nbrachet/SyUkXRDJB).

### High-level Structure

The unit of processing within Forge's pipeline is a [Document](#Documents) which represents a web article, an SEC filing, a Dow Jones news, etc... Once processed, a document and all its associated data are effectively read-only.

Each document is divided into [Sections](#Sections) which compose the document.

For instance, SEC filings have well-defined sections.

Note that sections are not paragraphs.

Each section is divided into [Sentences](#Sentences).

Note that the document's title and the sections' titles are considered sentences.

Finally, [named entities](https://en.wikipedia.org/wiki/Named_entity) (in the natural language sense) within sentences are recognized and classified. Named entities are stored in the [NERAssertions](#NERAssertions) table.

#### Identifiers

All identifiers used in the data model are unique within a document, except for the document identifier which is globally unique.

A [Document](#Documents) is identified by a [UUID](https://en.wikipedia.org/wiki/Universally_unique_identifier) (stored in the `docUuid` column). For the purpose of joining within the data model, a document is also uniquely identified by a numeric id (stored in the `docId` column). The `docId` is globally unique but may change over time (after a document is reprocessed for example), whereas the `docUuid` will never change.

A [NER entity](#Entities) is also identified by a document unique identifier. As a result, the same entity referred to in different documents will get different IDs. Forge uses a resolution process to identify known entities and assign a globally unique identifier -- the [URI](https://en.wikipedia.org/wiki/Uniform_Resource_Identifier). See [EntityEquivalentTo](#EntityEquivalentTo), [EntitySubsidiaryOf](#EntitySubsidiaryOf) and [EntityHasTypes](#EntityHasTypes) tables.

For example, Apple may be referenced in one document, and be assigned id 34, and referred to as 'Apple, Inc.' in another document and be assigned id 57. In both instances however the entity will be resolved to its universal identifier: [http://forge.ai/entity/apple_inc]().

### Augmentations

Forge's pipeline executes many models to augment the document information:

1. Summarization

* at the document level (stored in the `summary` column of the [`Documents`](#Documents) table) and

* at the section level (stored in the `summary` column of the [`SourceTexts`](#SourceTexts) table.)

1. Sentiment scoring

* A document-level sentiment stored in the [`DocumentSentiments`](#DocumentSentiments) table and

* An entity-level sentiment stored in the [`EntitySentiments`](#EntitySentiments) table.

1. Saliency scoring

* stored in the [`EntitySaliencies`](#EntitySaliencies) table.

1. Theme classification

* stored in the [`DocumentTopics`](#DocumentTopics) table.

1. Event extraction

* stored in the [`IntrinsicEvents`](#IntrinsicEvents) table

1. Relationships extraction

* stored in the [`Relationships`](#Relationships) table

1. Quotes extraction

* stored in the [`Quotes`](#Quotes) table

1. Topic Vector models computation

* at the document level (stored in the [`DocumentTopicModelVectors`](#DocumentTopicModelVectors) table and

* at the section level (stored in the [`TopicModelVectors`](#TopicModelVectors) table.)

### ERD

<iframe width="100%" height="600" src='https://dbdiagram.io/embed/5e960c7239d18f5553fd992b'></iframe>

## Documents

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Join key |

| docUuid | CHAR(48) | ✖ | The UUID for this document. |

| parentUuid | CHAR(48) | ✔ | If the document has a parent document, this field will represent the id of the parent document. |

| url | VARCHAR(2048) | ✖ | The resolved URL for the document.<br/>This may be the same as the original URL if there was no redirection. |

| originalUrl | VARCHAR(2048) | ✔ | The source URL before any redirection. |

| docType | STRING | ✔ | The docType is used to determine how the document is published.<br/>Current types = `article` `secfiling` |

| title | STRING | ✔ | The title of the document (potentially translated into English.) |

| originalTitle | STRING | ✔ | The original title of the document (if not English.) |

| docDateTime | TIMESTAMP_NTZ | ✔ | The later of the document publish date and last update (UTC). |

| collectDateTime | TIMESTAMP_NTZ | ✔ | The UTC time the document was collected and processed. |

| summary | STRING | ✔ | Summary of the document text. |

##### Notes:

1. `docId` may change over time. Use `docUuid` to refer to a document.

1. `docDateTime` and `collectDateTime` are stored in UTC.

1. `docDateTime` is `NULL` if no document date could be identified for the document.

1. `docDatetime` is the _acceptance date_ for SEC filings.

1. If the document has too few sentences, `summary` will be the first 2 sentences.

1. `summary` is not computed for SEC filings. See section summary in [SourceTexts](#SourceTexts).

#### Use-Case

##### Documents time-series (by day)

```sql=

SELECT TO_DATE(IFF(docDatetime IS NULL OR docDatetime = '1970-01-01', collectDatetime, docDatetime)) AS date,

COUNT(*) AS documents

FROM Documents

WHERE date BETWEEN '2010-01-01' AND CURRENT_DATE()

GROUP BY 1

ORDER BY 1 DESC

```

### DocumentSource

The channel the document was collected through.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| sourceId | INTEGER | ✖ | The id for the source channel. |

| parentId | INTEGER | ✖ | Logical grouping id. |

| name | STRING | ✖ | The name of the channel the document was collected from. Examples include "The Wall Street Journal", and "Bloomberg". |

| type | STRING | ✔ | "RSS feed", "crawl", etc. |

#### Use-Case

##### Number of documents collected by sites over the past week

```sql=

SELECT ANY_VALUE(s.name) AS name,

REGEXP_REPLACE(d.url, 'https?:\/\/(www\.)?([^\/]+).*', '\\2') AS site,

COUNT(*) AS cnt

FROM Documents d

JOIN DocumentSource s ON (s.docId = d.docId)

WHERE d.url LIKE 'http%'

AND d.collectDatetime >= DATEADD(DAY, -7, CURRENT_DATE())

GROUP BY site

ORDER BY cnt DESC

```

### DocumentMetadata

Relevant HTTP header information acquired during document collection.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| name | STRING | ✖ | |

| value | STRING | ✔ | |

#### Additional metadata items for DJN

`forgeai:djAccessionNumber`:

: Dow Jones article _access number_

`forgeai:djSequenceNumber`:

: Dow Jones article _sequence number_

`forgeai:djBrandedHeadline`:

: Combination of the Dow Jones Brand Display along with the headline text

#### Additional metadata items for SEC 8-K/10-K/10-Q

`forgeai:company`:

: name of the filing company, e.g. `Guidewire Software, Inc.`

`forgeai:company-cik`:

: central index key for the filing company, e.g. `0001528396`

`forgeai:sec-accession-no`:

: accession number of the filing, e.g. `0001528396-19-000009`

`forgeai:sec-filing-type`:

: form type, e.g. `10-Q`

#### Trick

It can be useful to pivot the `DocumentMetadata` table:

```sql=

WITH Meta AS (

SELECT docId,

MAX(CASE WHEN name = 'forgeai:company' THEN value END) AS company,

MAX(CASE WHEN name = 'forgeai:company-cik' THEN value::INT END) AS cik,

MAX(CASE WHEN name = 'forgeai:sec-accession-no' THEN value END) AS accession,

MAX(CASE WHEN name = 'forgeai:sec-filing-type' THEN value END) AS filing,

MAX(CASE WHEN name = 'forgeai:sec-filing-type' THEN REGEXP_REPLACE(value, '^([0-9]+-.).*$', '\\1') END) AS filing_short

FROM SEC.DocumentMetadata

GROUP BY 1

)

SELECT *

FROM SEC.Documents d

JOIN Meta m ON (m.docId = d.docId)

```

Alternatively:

```sql=

SELECT *

FROM SEC.Documents d

JOIN SEC.DocumentMetadata PIVOT (MAX(value) FOR name IN ('forgeai:company-cik', 'forgeai:sec-accession-no', 'forgeai:sec-filing-type')) as p (docId, cik, accession, filing) ON (p.docId = d.docId)

```

For performance reason, only the relevant items should be pivoted, eg.

```sql=

WITH Meta AS (

SELECT docId,

MAX(CASE WHEN name = 'forgeai:sec-filing-type' THEN REGEXP_REPLACE(value, '^([0-9]+-.).*$', '\\1') END) AS filing

FROM SEC.DocumentMetadata

GROUP BY 1

)

SELECT *

FROM SEC.Documents d

JOIN Meta m ON (m.docId = d.docId)

WHERE filing = '8-K'

```

### SimilarDocuments

A collection of references to documents that are closely identical to this document.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| similarDocUuid | CHAR(48) | ✖ | The UUID of the similar document. |

| score | FLOAT | ✔ | A score from 0.0-1.0 reflecting the similarity of the documents.<br/>A score of 1.0 indicates the documents are identical. |

##### Notes:

1. `similarDocUuid` may not be present in the `Documents` table.

#### Trick

##### Find _canonical_ documents -- ie. documents with no similar document (for a given similarity score)

```sql=

WITH CanonicalDocuments AS (

SELECT *

FROM Documents d

WHERE NOT EXISTS (SELECT 1

FROM SimilarDocuments s

WHERE s.docId = d.docId

AND s.score >= 0.99)

)

```

##### Compute the number of similar documents (for a given similarity score)

```sql=

WITH SimilarDocCounts AS (

SELECT d.docId, COUNT(DISTINCT b.similarDocUuid) + COUNT(DISTINCT a.docId) AS similarDocCount

FROM Documents d

LEFT JOIN SimilarDocuments b ON (b.docId = d.docId

AND b.score >= 0.99)

LEFT JOIN SimilarDocuments a ON (a.similarDocUuid = d.docUuid

AND a.score >= 0.99)

GROUP BY 1

)

SELECT d.docUuid, s.similarDocCount

FROM Documents d

JOIN SimilarDocCounts s ON (s.docId = d.docId)

```

For `CanonicalDocuments` this can be simplified:

```sql=

WITH CanonicalDocuments AS (

SELECT d.*, COUNT(DISTINCT s.docId) AS similarDocCount

FROM Documents d

LEFT JOIN SimilarDocuments s ON (s.similarDocUuid = d.docUuid

AND s.score >= 0.99)

WHERE NOT EXISTS (SELECT 1

FROM SimilarDocuments t

WHERE t.docId = d.docId

AND t.score >= 0.99)

)

```

### DocumentScores

A set of scores for a document computed by different models.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| scoreType | STRING | ✖ | Category of the score. |

| modelName | STRING | ✖ | Instance of the type of score. |

| modelVersion | STRING | ✖ | Unique model version for the score. |

| score | STRING | ✖ | Value of the score. |

| confidence | FLOAT | ✔ | Confidence of the score. May not be computed for all scores. |

| index | INT | ✔ | For multi-dimensional scores, the dimension of the score value. |

#### Available Score Types

| score | ARTICLES | SEC |

|-------|:--------:|:---:|

| lexica | deprecated | ✖ |

| VADER | ✔ | ✖ |

lexica (`modelName = lexica-v0`)

: Measure, in logarithmic scale, of lexical-based stylistic divergence from a baseline.

`modelVersion`: current version `FunctionWordProbability.model-identifier-8b69522bbe583e38058a185e5fed6161a4472599-26402f3b687d41ba68b9596120a9292f23a7cc38`

`score`: float from `0` (identical to baseline) to `-Inf`

`confidence`: not computed

`index`: not applicable

VADER (`modelName = vader-v0`):

: Valence-based and word sense-aware sentiment analysis (http://comp.social.gatech.edu/papers/icwsm14.vader.hutto.pdf)

`modelVersion`: current version `VaderValance.train-2520d1b931dab7458a063a61c14c8ea411582936-2fdb6f68f4f976fe42d6febf9e37ed2ba8c6983c`

`confidence`: not applicable

`index 0` - combined score, float from `-1.0` (negative) to `+1.0` (positive)

`index 1` - positivity component, float from `0` to `+1.0`

`index 2` - negativity component, float from `0` to `+1.0`

`index 3` - neutrality component, float from `0` to `+1.0`

See also [DocumentSentiments](#DocumentSentiments).

### DocumentSentiments

Valence-based and word sense-aware sentiment analysis ([VADER](http://comp.social.gatech.edu/papers/icwsm14.vader.hutto.pdf))

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| modelName | STRING | ✖ | |

| sentiment | FLOAT | ✖ | Combined document score, from `-1.0` (negative) to `+1.0` (positive) |

## Sections

The collection of sections constituting the document.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| ++sectionId++ | INTEGER | ✖ | The document scope id of the section. |

| parentId | INTEGER | ✔ | The parent section id. Where appropriate this value is used to support representing an extracted document as a hierarchical collection of sections. |

| type | VARCHAR(1) | ✔ | An identifier for the type of information represented in the section.<br/>The possible types are: Text (`T`) |

### SectionMetadata

Any metadata associated with a section represented as name-value pairs.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Sections(docId, sectionId)` |

| sectionId | INTEGER | ✖ | Foreign key to `Sections(docId, sectionId)` |

| name | STRING | ✖ | |

| value | STRING | ✔ | |

#### Available metadata items for SEC 8-K/10-K/10-Q

`forgeai:sec-section-type`:

: section type of the filing: `1`, `1-A`, etc...

`forgeai:sec-section-title`:

: section type title, e.g. `Item 1. Financial Statements`

### SourceTexts

If the section is a text (`T`) section then this table will contain the source text.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Sections(docId, sectionId)` |

| ++sectionId++ | INTEGER | ✖ | Foreign key to `Sections(docId, sectionId)` |

| title | STRING | ✔ | The optional title for the section (translated if necessary.) |

| text | STRING | ✔ | The text for the section (translated if necessary.) |

| originalTitle | STRING | ✔ | Original text for the title (if not English.) |

| originalText | STRING | ✔ | Original text for the section (if not English.) |

| summary | STRING | ✔ | Summary of the section text. |

##### Notes:

1. `title` and `text` may be `NULL` because of licensing restrictions.

2. If the text has too few sentences, `summary` will be the first 2 sentences.

### Sentences

The set of extracted sentences from the text section.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Sections(docId, sectionId)` |

| ++sentenceId++ | INTEGER | ✖ | The document scope unique id for the sentence. |

| sectionId | INTEGER | ✖ | Foreign key to `Sections(docId, sectionId)` |

| text | STRING | ✔ | The sentence text. |

| startOffset | INTEGER | ✔ | Start offset in bytes into the section text for the sentence. |

| endOffset | INTEGER | ✔ | End offset in bytes into the section text for this sentence. |

##### Notes:

1. `text` may be `NULL` because of licensing restrictions.

## Entities

The collection of entities discovered in the source document. An entity is named object or domain concept.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| ++entityId++ | INTEGER | ✖ | The document scope unique id for the entity. |

| type | VARCHAR(1) | ✖ | An attribute used to identify the entity type as a simple (`S`) or complex (`C`) entity.<br/>A complex entity is composed of a set of other entities (e.g. `CEO Sally Smith` is composed of a title and a person element) while a simple entity consists of just one entity type. |

| label | STRING | ✖ | The extracted text associated with the entity. |

### EntityAliases

Alternate text from the document referring to the same entity (extracted text)

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| aliasId | INTEGER | ✔ | The document scope unique id for the alias. |

| altLabel | STRING | ✖ | Alias

| confidence | FLOAT | ✔ | |

#### Use-Cases

#### Get mentions of "President Donald Trump", whether explicit or aliased

```sql=

SELECT docUuid

FROM Entities

WHERE LOWER(label) = 'president donald trump'

```

#### Get mentions of "President Donald Trump", with aliases of a confidence of 0.9 or better

```sql=

SELECT e.docUuid

FROM Entities e

LEFT JOIN EntityAliases a ON (a.docId = e.docId

AND a.entityId = e.entityId)

WHERE LOWER(label) = 'president donald trump'

AND NVL(a.confidence, 1) >= 0.9

```

#### Get explicit mentions of "President Donald Trump", but not aliased

```sql=

SELECT docUuid

FROM Entities e

JOIN EntityLocations l ON (l.docId = e.docId

AND l.entityId = e.entityId)

WHERE LOWER(label) = 'president donald trump'

AND l.aliasId IS NULL

```

or

```sql=

SELECT docUuid

FROM Entities e

LEFT JOIN EntityAliases a ON (a.docId = e.docId

AND a.entityId = e.entityId)

WHERE LOWER(e.label) = 'president donald trump'

AND a.aliasId IS NULL

```

#### Get all aliases for "President Donald Trump"

```sql=

SELECT DISTINCT altLabel

FROM Entities e

JOIN EntityAliases a ON (a.docId = e.docId

AND a.entityId = e.entityId)

WHERE LOWER(e.label) = 'president donald trump'

```

### EntityComponents

The components are the constituent entities that collectively constitute the complex entity.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| componentId | INTEGER | ✖ | Entity id into `Entities` of the component |

### EntityAssertions

The extracted entities are disambiguated/resolved using the ForgeAI semantic knowledge base.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| assertedClass | VARCHAR(2048) | ✖ | The URI for the asserted semantic type. |

| predicate | STRING | ✔ | The predicate for the assertion such as "isA", "ownerOf", etc. |

| confidence | FLOAT | ✔ | A value from 0.0-1.0 of the confidence in the assertion. |

##### See Also:

* [NERASsertions](#NERAssertions) for NER assertions

* [EntityEquivalentTo](#EntityEquivalentTo) for resolved entities

* [EntitySubsidiaryOf](#EntitySubsidiaryOf) for resolved companies

* [EntityHasTypes](EntityHasTypes) for resolved types

### NERAssertions

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| ++entityId++ | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| label | STRING | ✖ | Repeat from `Entities` |

| class | VARCHAR(2048) | ✖ | The asserted semantic type class |

| confidence | FLOAT | ✖ | |

##### Supported Classes:

* `Organization`

* `Person`

* `Location`

* `GeopoliticalEntity`

* `Nationality`

* `Date`

* `RelativeDate`

* `TimeSpan`

* `Frequency`

* `MonetaryUnit`

* `Title`

* `Measure`

* `Ratio`

* `DomainTerm`

* `ComplexEntity`

##### Notes:

1. Implicitly `NERAssertions` entities have an `isA` predicate.

### EntityEquivalentTo

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| ++entityId++ | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| uri | VARCHAR(2048) | ✖ | The resolved URI for the entity. |

| confidence | FLOAT | ✖ | |

##### Notes:

1. Implicitly `EntityEquivalentTo` entities have an `equivalentTo` predicate.

2. Only the most confident resolution is reported. So there is at most one entry in `EntityEquivalentTo` for each entity.

### EntitySubsidiaryOf

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| uriPath | VARCHAR(2048) | ✖ | The resolved URI for the company as the parent entity if it is a subsidiary, or as itself if not.<br>Only the path of uri -- ie. `cable_news_network_inc` instead of `http://forge.ai/company/cable_news_network_inc` |

| subsidiaryUriPath | VARCHAR(2048) | ✔ | The resolved URI path of the company as itself. `NULL` for companies that are not a subsidiary. |

| confidence | FLOAT | ✖ | |

##### Notes:

1. Only _companies_ would be included in this table.

1. Only the direct parent company is included in `EntitySubsidiaryOf`.

1. The `equivalentTo` assertions would still be present in `EntityEquivalentTo`.

2. Only the most confident resolution is reported. So there is at most one entry in `EntitySubsidiaryOf` for each entity.

#### Example

The entities `CNN` and `Warner Media` would have the following entries in `EntitySubsidiaryOf` (label added for convenience and confidence omitted for simplicity):

| docId | entityId | label | uriPath | subsidiaryUriPath |

|-------|----------|-------|---------------|------------|

| 1 | 1 | CNN | warner_media_llc | cable_news_network_inc |

| 2 | 2 | Warner Media | warner_media_llc | |

and the following entries in `EntityEquivalentTo`:

| docId | entityId | label | URI |

|-------|----------|-------|-----|

| 1| 1 | CNN | [http://forge.ai/company/cable_news_network_inc]() |

| 2 | 2 | Warner Media | [http://forge.ai/company/warner_media_llc]() |

#### Use Cases

##### Documents about CNN but not TimeWarner

```sql=

SELECT docUuid

FROM EntityEquivalentTo

WHERE uri = 'http://forge.ai/company/cable_news_network_inc'

```

##### Documents about TimeWarner but not CNN

```sql=

SELECT docUuid

FROM EntityEquivalentTo

WHERE uri = 'http://forge.ai/company/warner_media_llc'

```

##### Documents about TimeWarner or any of its subsidiaries

```sql=

SELECT docUuid

FROM EntitySubsidiaryOf

WHERE uriPath = 'warner_media_llc'

```

##### Documents about TimeWarner or any of its subsidiaries except for CNN

```sql=

SELECT docUuid

FROM EntitySubsidiaryOf

WHERE uriPath = 'warner_media_llc'

AND subsidiaryURIPath != 'cable_news_network_inc'

```

### EntityHasTypes

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| uri | VARCHAR(2048) | ✖ | The resolved URI type for the entity. |

| confidence | FLOAT | ✖ | |

##### Notes:

1. Implicitly `EntityHasTypes` entities have a `hasType` predicate.

### EntityProperties

Entity properties intrinsic to the entity at the time the document was processed. Examples include "latitude=42.0016", "CIK=320193", etc.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| name | STRING | ✖ | |

| value | STRING | ✔ | |

#### Trick

It might be convenient to create a CTE with a specific property. For example:

```sql=

WITH EntityCIKs AS (

SELECT docid, entityid, TRY_CAST(value AS INT) AS CIK

FROM EntityProperties

WHERE name = 'cik'

)

```

#### Use-Cases

##### Find all documents mentioning a company from its CIK

```sql=

SELECT DISTINCT d.docUuid, url, title

FROM Documents d

JOIN EntityProperties p ON (p.docId = d.docId)

WHERE p.name = 'cik' AND TRY_CAST(p.value AS INT) = 320193

```

### EntityLocations

The entity label's location in the document.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| aliasId | INTEGER | ✔ | Reference to `EntityAliases(dodId, aliasId)` |

| quoteId | INTEGER | ✔ | Reference to `Quotes(docId, quoteId)`<br>The id of the quote in which the entity located. |

| sentenceId | INTEGER | ✖ | Reference to `Sentences(docId, sentenceId)`<br>The id of the document sentence that the entity is located in. |

| startOffset | INTEGER | ✖ | The offset (in bytes) into the sentence to the start of the entity label. |

| endOffset | INTEGER | ✖ | The offset (in bytes) into the sentence to the end of the entity label. |

### EntityScores

A set of scores for an entity computed by different models.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| scoreType | STRING | ✖ | Category of the score. |

| modelName | STRING | ✖ | Instance of the type of score. |

| modelVersion | STRING | ✖ | Unique model version for the score. |

| score | STRING | ✖ | Value of the score. |

| confidence | FLOAT | ✔ | Confidence of the score. May not be computed for all scores. |

| index | INTEGER | ✔ | For multi-dimensional scores, the dimension of the score value. |

#### Available Score Types

| type | ARTICLES | SEC |

|-------|:--------:|:---:|

| entity-sentiment | ✔ | ✖ |

| entity-salience | ✔ | ✖ |

entity-sentiment (`modelName = entity-sentiment-v0`)

: A numerical score which represents the polarity and intensity of a document's sentiment regarding an entity.

The score is produced via an ensemble over linguistically principled approaches as well as statistically trained models. The ensemble model leverages verb-dynamics, psychologically validated lexical-composition and negation rules, experimentally validated lexical assets, and a fine-tuned transformer network. The model is fit for general (non-jargon) news.

`modelVersion`: current version

`EntitySentiment.train-29dfe6524d8148205d36ae4b08e33de4efd933b6-e087cef86c781c9aee955342219cbf11cf4f7be1`

`score`: `+1` (high positive sentiment) to `-1` (high negative sentiment)

`confidence`: not computed.

`index`: not applicable.

##### Notes:

1. `entity-sentiment` is only calculated for the following NER types:

* `Organization`

* `Person`

* `GeopoliticalEntity`

1. See also [`EntitySentiments`](#EntitySentiments) for a convenient way to access `entity-sentiment` scores

entity-salience (`modelName = forge-saliency-1`)

: This score measures the degree to which a document is about an entity, i.e., how prominent an entity is in the discourse of the document.

`modelVersion`: current version `EntitySalienceV1.model-identifier-7261c2598cc08d237c25b27c27bc2e32c9729bed-e860d2a9ed42f903c8d591c79d70c607f7c65e75`

`score`: `0` (not salient) or `1` (salient)

`confidence`: not computed.

`index`: not applicable.

entity-salience (`modelName = entity-salience-v0`)

: A binary prediction of whether a document is about an entity, i.e., how prominent an entity is in the discourse of the document.

`modelVersion`: current version `EntitySalience.model-identifier-499e567feadbc69d5b1cc8bf0375c6c4bbbcb5f4-48a201be48e3a132568c5e5f7627a3bc60d57ad8`

`score`: `0` (not salient) or `1` (salient)

`confidence`: float from `0.5` to `1.0`.

`index`: not applicable.

##### Notes:

1. See also [EntitySaliencies](#EntitySaliencies) for a convenient way to access `entity-salience-v0` scores.

2. `entity-salience-v0` and `forge-saliency-1` are not compatible. Scores from `entity-saliency-v0`, even mapped to a continuous variable, and `forge-saliency-1` cannot be compared.

3. It might be more intuitive to use saliency (`entity-salience-v0`) as a continuous variable in the range `[0, 1]` as follows:

```sql=

WITH EntitySaliencies AS (

SELECT docId, entityId, modelName, modelVersion,

IFF(score = 0, 1.0 - confidence, confidence) AS saliency

FROM EntityScores

WHERE modelName = 'entity-salience-v0'

)

```

### EntitySentiments

A convenience table for the `entity-sentiment` scores.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| ++entityId++ | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| modelName | STRING | ✖ | `entity-sentiment-v0` |

| sentiment | FLOAT | ✖ | Entity sentiment, from `-1.0` (negative) to `+1.0` (positive) |

### EntitySaliencies

A convenience table for the `entity-salience-v0` scores.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| ++entityId++ | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| modelName | STRING | ✖ | `entity-salience-v0` |

| saliency | FLOAT | ✖ | Entity saliency, from `0.0` (not salient) to `+1.0` (salient) |

## DocumentTopics

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| topicId | INTEGER | ✔ | Unique topic id. |

| name | STRING | ✖ | The topic name (e.g. "finance", "management", "physics", "sports", etc.) |

| parent | STRING | ✔ | If the topic represents a hierarchy, then the parent is the name of the direct ancestor topic. |

| score | FLOAT | ✔ | A measure from `0.0` to `1.0` indicating the topic strength. |

| startSentenceId | INTEGER | ✔ | Sentence id marking the start of relevant topic information in the section. |

| endSentenceId | INTEGER | ✔ | Sentence id marking the end of relevant topic information in the section. |

### DocumentTopicWords

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `DocumentsTopics(docId, topics)` |

| topicId | INTEGER | ✔ | Foreign key to `DocumentTopics(docId, topics)` |

| word | STRING | ✖ | |

| value | FLOAT | ✔ | |

| count | INTEGER | ✔ | |

## IntrinsicEvents

An event is an activity involving people, companies, objects such as money or goods, perhaps government bodies, etc. It may have geospatial components and occurs in time. An event may be linked with or grouped with other events. An event may also have precursors and consequences. The digital model of an event is intended to represent all of this information in a structured format. We refer to this model of an event as an _event narrative_.

Alternatively, there is the concept of an **intrinsic event**. An intrinsic event is a reference to an actual event, but it is not the event itself. In the example below, “acquire” is an intrinsic event.

> Cupertino-based tech giant Apple is planning to acquire California-based virtual reality company NextVR in a deal said to be worth $100 million, the media reported.

An intrinsic event is identified in a document during the named entity recognition (NER) process.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Entities(docId, entityId)` |

| entityId | INTEGER | ✖ | Foreign key to `Entities(docId, entityId)` |

| sentenceId | INTEGER | ✖ | Repeat from `EntityLocations` |

| startOffset | INTEGER | ✖ | Repeat from `EntityLocations` |

| endOffset | INTEGER | ✖ | Repeat from `EntityLocations` |

| label | STRING | ✖ | Repeat from `Entities` |

| class | STRING | ✔ | |

| category | STRING | ✔ | |

| confidence | FLOAT | ✖ | |

##### Notes:

1. Intrinsic events may be mapped to multiple categories. For example 'Fraud' is both a 'BASEL' and a 'CRIMINAL' event.

#### Supported Categories

* `Basel`

* `Communication`

* `Contract`

* `Corporate`

* `Criminal`

* `Cyber`

* `Disruption`

* `Economic`

* `Financing`

* `Government`

* `HumanResources`

* `Investigation`

* `Labor`

* `Legal`

* `Meeting`

* `Named`

* `NaturalDisaster`

* `Political`

* `Product`

* `SupplyChain`

* `Terrorism`

* `Transaction`

## Quotes

The collection of extracted quotes found in the document text.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| ++quoteId++ | INTEGER | ✖ | The document scope unique id for the quote. |

| quoteType | CHAR(1) | ✖ | `D` = direct quote (verbatum)<br>`I` = indirect quote (paraphrased) |

| speakerId | INTEGER | ✔ | The id of the entity to which this quote is attributed. |

| attributionConfidence | FLOAT | ✔ | The confidence of attributing this quote to the speaker. |

| extractionConfidence | FLOAT | ✔ | The confidence of extraction. |

| text | STRING | ✖ | The text of the quote. |

### QuoteLocations

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Quotes(docId, quoteId)` |

| quoteId | INTEGER | ✖ | Foreign key to `Quotes(docId, quoteId)` |

| sentenceId | INTEGER | ✖ | The id of a sentence in which this part of the quote is found. |

| startOffset | INTEGER | ✖ | The offset (in bytes) into the sentence in which this part of the quote begins. |

| endOffset | INTEGER | ✖ | The offset (in bytes) into the sentence in which this part of the quote ends. |

## DocumentTopicModelVectors

The topic models (LDA) generate fixed-length (100 elements) vectors of floating point numbers representing the topics encountered within the text.

The topic vector models introduced are appropriate for numerical calculations, such as distances or velocity, between the vectors from the same model. With distance computations we get clustering via the distance function. Furthermore, these vectors can serve as the low-level features atop a supervised learning algorithm (usually fused with user-supplied labels and any other co-variate information) for assessing document similarity, change detection, for instance.

Comparisons should not be made between topic vectors generated using different topic models. Applications may demand multiple topic models drawn from different corpora. The set of topic vectors allows for associating multiple topic vector calculations with a text section.

One property of the current topic vector models is that they do not represent absolute semantic concepts. A particular dimension having a value of 0.6 does not have a meaning outside of the values of that dimension compared across the other documents and alongside a user-labeling procedure to imbue the semantics over clusters of distance metrics.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| modelName | STRING | ✔ |

| modelVersion | STRING | ✔ |

| dimensionIndex | INTEGER | ✖ | Topic vector index (0...n-1) |

| dimensionValue | FLOAT | ✖ | The contribution (0.0-1.0) that this dimension has to the document. |

## TopicModelVectors

Section level topic model vectors (LDA).

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Sections(docId, sectionId)` |

| sectionId | INTEGER | ✖ | Foreign key to `Sections(docId, sectionId)` |

| modelName | STRING | ✔ |

| modelVersion | STRING | ✔ |

| dimensionIndex | INTEGER | ✖ | Topic vector index (0...n-1) |

| dimensionValue | FLOAT | ✖ | The contribution (0.0-1.0) that this dimension has to the section. |

#### Available Section-Level Topic Model Vectors

general:

: general model used for most sections and documents

`modelName` = `general`

`modelVersion` = `VanillaLda.model-identifier-979b20fdc5691083320d4a61c515e64e0b53ecf9-a6728cf46f462fc4e38b53e8420a386dbe5d75ad

`

business:

: The model used for the "business" discussion sections of SEC filings.

`modelName` = `business-discussion`

`modelVersion` = `VocabularyLda.train-7e88246f08a48bb9a0eaaf928ab32a1969160424-a0f0f4c8da9aefa6ff594de804229b78839457f9`

management:

: The model used for the "management" discussion sections of SEC filings.

`modelName` = `management-discussion`

`modelVersion` = `VocabularyLda.train-7e88246f08a48bb9a0eaaf928ab32a1969160424-06092a01e977e851fb6f9fb94e4e158521c43104`

risk:

: The model used for the "risk" discussion sections of SEC filings.

`modelName` = `risk-discussion`

`modelVersion` = `VocabularyLda.train-7e88246f08a48bb9a0eaaf928ab32a1969160424-4062dab819e845468b13f84097cc47f54b556612`

| modelName | ARTICLES | SEC |

|-----------|:--------:|:---:|

| `general` | ✔ | ✔<br>unless a vocabulary model was run |

| `business-discussion` | ✖ | 10-K section 1|

| `management-discussion` | ✖ | 10-K section 7 <br> 10-Q section 2 |

| `risk-discussion` | ✖ | 10-K section 1A <br> 10-Q section 1A |

#### Topic Vector Models In-Depth

With the introduction of Topic Vector Models we introduce two new mathematical models: Latent Dirichlet Allocation (LDA) and Vocabulary LDA. The first model well documented in the machine learning literature so we will only briefly describe it here. We will follow with a description of our Vocabulary LDA model which builds upon the vanilla LDA.

##### LDA

In the Latent Dirichlet Allocation model, we take a sequence of words and try to represent them as a set of independent topic vectors. Each topic vector is a weight assigned to a topic (the element's index is the topic identifier). Each of the topics are treated as distributions over a set of vocabulary words. So, for the LDA model we take a sequence of words and treat them independently and together as a "bag of words". This bag of words is then factored into the weighting of each topic distribution (aka. each topic) by it's likelihood of coming from that distribution. The resulting topic weights are concatenated into a single vector resulting in our topic vector model result. Because the topics are distributions over a vocabulary, each different vocabulary and/or distribution will result in different topic vector that are not comparable. This is why we cannot compare the topic vector of different model versions: they are using completely different word distributions (and maybe even different word vocabularies.)

##### Vocabulary

From the previous section we know that the vocabulary and distribution over the vocabulary words forms the essence of a topic in the LDA framework. It turns out that certain domains of language use certain vocabularies that are distinct and informative to the domain. For example: the phrase "fiduciary responsibility" is more often used in financial statements and analysis than twitter rants. To capture this intuitive domain-specific language constraint, we developed the Vocabulary LDA model(s).

While the original LDA formulation formed a "bag of words" by treating words independently, our new Vocabulary LDA will actually work on "bags of phrases". Using our core NLP engine, we extract informative, highly salient phrases that appear for distinct sections of documents. These phrasings are then used as the core "vocabulary" for our LDA. Instead of representing distributions of words, our topics now represent distributions over salient phrases.

We have built three specific Vocabulary LDA models, each one trained on salient phrasings from SEC document sections relating to business, management, and risk. These three models allow for a domain-specific phrase-aware topic vectors which can be used to compute domain-specific phrase-aware distances.

The following thought experiment should elucidate the need for and utility of the Vocabulary LDA model. Think of a collection of twitter newsfeed items alongside a group of SEC Form 10-K filings. It is clear that the words and language used in the twits is different from the 10-K language and so using the vanilla LDA approach you will see a large distance between the twitter documents and the SEC documents. However, the SEC documents themselves all look like "non-twitter" and the twitter document all look like "non-SEC"; there is not a lot of dynamic range in the distance between same-source documents here. Now, consider the Vocabulary LDA model. Under a Vocabulary LDA model trained for SEC vocabulary we will still see that twitter and SEC filings are different, but we will also have the resolution to judge distances between the SEC filings.

## Relationships

A semantic association based on the ForgeAI relationship ontology between two entities in the document.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| ++docId++ | BIGINT | ✖ | Foreign key to `Documents(docId)` |

| ++relationshipId++ | INTEGER | ✖ | The document scope unique id for the relationship. |

| subjectLabel | STRING | ✖ | The entity that is acting as the source in the directed relationship. In an undirected relationship, the source and object entities are interchangeable. |

| subjectEntityId | INTEGER | ✔ | The entity id for this entity, if found in the entities section. |

| predicateLabel | STRING | ✖ | The extracted entity that is acting as the predicate joining the subject and object entities. |

| objectLabel | STRING | ✖ | The entity that is acting as the object or destination in the directed relationship. In an undirected relationship, the source and object entities are interchangeable. |

| objectEntityId | INTEGER | ✔ | The entity id for this entity, if found in the entities section. |

| confidence | FLOAT | ✖ | The calculated confidence of the extracted relationship. |

### RelationshipSupportingSentences

Information identifying the locations in the document supporting the relationship assertion.

| NAME | TYPE | NULLABLE? | COMMENT |

|------|------|:---------:|---------|

| docId | BIGINT | ✖ | Foreign key to `Relationships(docId, relationshipId)` |

| relationshipId | INTEGER | ✖ | Foreign key to `Relationships(docId, relationshipId)` |

| sentenceId | INTEGER | ✖ | The document scope id for the supporting sentence. |

# FAQ

## How can an entity have multiple sentiment scores in one document?

We score simple entities and components of a complex entity separately, which is why you see duplicates. You can consider all instances of the company, whether as a simple entity or as a component of a complex entity, as the same entity and average the sentiment.

## What is the difference between an _intrinsic event_ and a _theme/topic_?

**Themes** are significant ideas. They are the leitmotifs in language. They are the most terse answer to the question “what are they talking about?”

In a specific story or article, themes form an interwoven structure. As an example, consider how Netflix would classify the movie ‘Rocky’. It would likely be classified as a movie about boxing, a movie about the triumph of the human spirit, and a feel good movie. These are all themes. Watching the movie we could zoom into the scene where Rocky is running up the steps of the Museum of Art and say it is about Philadelphia, or at least that part of the movie is "iconic Philadelphia." Other parts of the movie we could identify with butchers, meat packers, etc.

In short, themes are “semantic classes inferred from observables.”

An **intrinsic event** is an activity that is directly observed as a specific word or phrase in a document. Unlike a theme, it is not inferred. Most frequently it is a gerund (like boxing) or part a verb phrase (as in “he likes to box”), or a “named event” such as the “golden gloves championship.”

Intrinsic events are often used as evidence (observables) to infer themes. For example, if a document contained a sentence that mentioned the “golden gloves championship” and “knock out”, then those two intrinsic events would allow the assignment of the theme (infer) “boxing” to that paragraph. If it also contained the concept "the Olympics" we would be more confident in the assertion, and if it also contained the named entities "Muhammed Ali" and "Joe Frazier" then we would be really confident in assigning the "boxing" theme label to that section of the article.

# Sample Queries

## Documents mentioning a company

### Using the company's name

```sql=

SELECT DISTINCT d.url, d.title

FROM NERAssertions a

JOIN Documents d ON (d.docId = a.docId)

WHERE a.class = 'Organization'

AND LOWER(a.label) = 'wallame'

```

### Using the company's URI

```sql=

SELECT NVL(d.docDatetime, d.collectDatetime), d.url, d.title

FROM EntitySubsidiaryOf e

JOIN Documents d ON (d.docId = e.docId)

WHERE e.uriPath = 'apple_inc'

AND d.collectDatetime >= DATEADD(DAYS, -1, CURRENT_TIMESTAMP)

```

#### Finding a company's URI

```sql=

SELECT LOWER(e.label) AS label, a.uri, COUNT(*) AS cnt

FROM Entities e

JOIN EntityEquivalentTo a ON (a.docId = e.docId AND a.entityId = e.entityId)

WHERE e.label ILIKE 'apple%'

AND a.uri LIKE 'http://forge.ai/company/%'

GROUP BY 1, 2

ORDER BY 3 DESC

```

### Using the company's CIK

```sql=

SELECT d.url, d.title

FROM Documents d

WHERE EXISTS (SELECT 1

FROM EntityProperties p

WHERE p.docId = d.docId

AND p.name = 'cik' AND TRY_TO_NUMBER(p.value) = 320193)

```

### Using the company's ticker

```sql=

SELECT d.url, d.title

FROM Documents d

WHERE EXISTS (SELECT 1

FROM EntityProperties p

WHERE p.docId = d.docId

AND p.name = 'ticker'

AND p.value LIKE '%:aapl')

```

## Documents mentioning a company from a specific source

```sql=

SELECT DISTINCT d.url, d.title

FROM EntitySubsidiaryOf e

JOIN DocumentSource s ON (s.docId = e.docId)

JOIN Documents d ON (d.docId = e.docId)

WHERE e.uriPath = 'apple_inc'

AND s.name = 'CNN'

```

## Documents mentioning a company with a saliency of at least 0.67

```sql=

SELECT DISTINCT d.url, d.title

FROM EntitySubsidiaryOf e

JOIN EntitySaliencies s ON (s.docId = e.docId AND s.entityId = e.entityId)

JOIN Documents d ON (d.docId = e.docId)

WHERE e.uriPath = 'apple_inc'

AND s.saliency >= 0.67

```

## Sentiment over time for a company

### Document Sentiment

Daily Time-series of the document sentiment for documents mentioning Danske Bank in the past 30 days:

```sql=

SELECT TO_DATE(NVL(d.docDatetime, d.collectDatetime)) AS "Date",

AVG(s.sentiment) AS "Document Sentiment",

COUNT(*) AS "Document Count"

FROM DocumentSentiments s

JOIN Documents d ON (d.docId = s.docId)

WHERE EXISTS (SELECT 1

FROM EntitySubsidiaryOf e

WHERE e.docId = s.docId

AND e.uriPath = 'danske_bank_a/s')

AND "Date" IS NOT NULL

AND "Date" >= DATEADD(DAYS, -30, CURRENT_TIMESTAMP)

GROUP BY 1

ORDER BY 1

```

### Entity Sentiment

Daily time-series of the entity sentiment for Danske Bank:

```sql=

SELECT TO_DATE(NVL(d.docdatetime, d.collectdatetime)) AS "Date",

PERCENTILE_CONT(0.1) WITHIN GROUP (ORDER BY s.sentiment) AS p1,

AVG(s.sentiment) AS sentiment,

PERCENTILE_CONT(0.9) WITHIN GROUP (ORDER BY s.sentiment) AS p9

FROM EntityEquivalentTo e

JOIN Documents d ON d.docid = e.docid

JOIN EntitySentiments s ON s.docid = e.docid

AND s.entityid = e.entityid

WHERE uri = 'http://forge.ai/company/danske_bank_a/s'

AND "Date" >= DATEADD(DAYS, -30, CURRENT_TIMESTAMP)

GROUP BY 1

ORDER BY 1 ASC

```

A more precise calculation is to first compute a document average:

```sql=

WITH Sentiments AS (

SELECT e.docId,

e.uriPath,

AVG(s.sentiment) AS sentiment

FROM EntitySubsidiaryOf e

JOIN EntitySentiments s ON (s.docId = e.docId AND s.entityId = e.entityId)

GROUP BY 1, 2

)

SELECT TO_DATE(IFF(d.docDatetime IS NULL, d.collectDatetime, d.docDatetime)) AS "Date",

AVG(s.sentiment) AS "Entity Sentiment"

FROM Sentiments s

JOIN Documents d ON (d.docId = s.docId)

WHERE s.uriPath = 'danske_bank_a/s'

AND "Date" >= DATEADD(DAYS, -30, CURRENT_TIMESTAMP)

GROUP BY 1

```

## Themes/Topics for a company

```sql=

SELECT t.name, COUNT(*) AS occurrences

FROM DocumentTopics t

JOIN EntitySubsidiaryOf e ON (e.docId = t.docId)

JOIN EntityLocations l ON (l.docId = e.docId

AND l.entityId = e.entityId

AND l.sentenceId BETWEEN t.startSentenceId AND t.endSentenceId)

JOIN Documents d ON (d.docId = e.docId)

WHERE e.uriPath = 'danske_bank_a/s'

AND d.collectDatetime >= '2019-01-01'

GROUP BY 1

ORDER BY 2 DESC

```

### Public Companies mentioned in articles about Merger & Acquisitions

```sql=

SELECT UPPER(SPLIT_PART(p.value, ':', -1)) AS ticker,

COUNT(DISTINCT p.docId) AS occurrences

FROM DocumentTopics t

JOIN EntitySubsidiaryOf e ON (e.docId = t.docId)

JOIN EntityProperties p ON (p.docId = e.docId AND p.entityId = e.entityId)

JOIN Documents d ON (d.docId = p.docId)

WHERE t.name = 'Merger and Acquisition'

AND p.name = 'ticker'

AND d.collectDatetime >= '2020-01-01'

GROUP BY 1

ORDER BY 2 DESC

```

## Intrinsic Events for a company

```sql=

SELECT TO_DATE(IFF(d.docDatetime IS NULL OR d.docDatetime = '1970-01-01', d.collectDatetime, d.docDatetime)) AS date,

NVL(ie.class, UPPER(ie.label)) AS ievent,

LISTAGG(DISTINCT ie.category, ', ') AS categories,

COUNT(*) AS observations

FROM IntrinsicEvents ie

JOIN EntitySubsidiaryOf e ON (e.docId = ie.docId)

JOIN EntityLocations l ON (l.docId = e.docId AND l.entityId = e.entityId

AND l.sentenceId = ie.sentenceId)

JOIN Sentences s ON (s.docId = l.docId AND s.sentenceId = l.sentenceId

AND ABS(ie.startOffset - s.startOffset) < 200)

JOIN Documents d ON (d.docId = s.docId)

WHERE e.uriPath = 'danske_bank_a/s'

AND ie.confidence > 0.4

AND date IS NOT NULL

AND date > '2019-01-01'

GROUP BY 1, 2

ORDER BY 1

```

## Relationships from a person

```sql=

SELECT LOWER(predicateLabel),

LOWER(objectLabel),

MAX(confidence) AS confidence,

COUNT(*) AS occurrences

FROM Relationships

WHERE LOWER(subjectLabel) = 'tim cook'

AND LENGTH(predicateLabel) > 2

GROUP BY 1, 2

ORDER BY 4 DESC

```

## Relationships with a company

```sql=

SET label = 'danske bank';

SELECT LOWER(subjectlabel) AS subject, UPPER(predicatelabel) AS relationship, LOWER(objectlabel) AS object,

COUNT(*) AS observed,

AVG(confidence) AS confidence,

MIN(NVL(d.docDatetime, d.collectDatetime)) AS t1,

MAX(NVL(d.docDatetime, d.collectDatetime)) AS t2

FROM Relationships r

JOIN Documents d ON (d.docId = r.docId)

WHERE (subjectlabel ILIKE $label OR objectlabel ILIKE $label)

GROUP BY 1, 2, 3

ORDER BY 4 DESC

```

## Co-occurrences to a company

```sql=

WITH A AS (

SELECT a.docId,

a.entityId,

LOWER(REGEXP_REPLACE(REGEXP_REPLACE(a.label, '[\\s\\W]+', ' '),

'^\\s*(\\S.*\\S)\\s*$', '\\1')) AS label, -- normalized label

a.class,

l.sentenceId

FROM NERAssertions a

JOIN EntityLocations l ON ( l.docId = a.docId

AND l.entityId = a.entityId)

WHERE class IN ('Person','Title','Nationality',

'Organization',

'GeopoliticalEntity','Location',

'DomainTerm')

)

SELECT a1.label AS label1,

a1.class AS class1,

a2.label AS label2,

a2.class AS class2,

COUNT(DISTINCT a1.docId) AS cnt,

MIN(d.t) AS t0,

MAX(d.t) AS t1

FROM A a1

JOIN A a2 ON ( a2.docId = a1.docId

AND a2.entityId != a1.entityId

AND a2.label != a1.label)

JOIN (

SELECT docId, TO_DATE(IFF(docDatetime = '1970-01-01' OR docDatetime IS NULL, collectDatetime, docDatetime)) AS t

FROM Documents) d ON (d.docId = a1.docId)

WHERE a1.label IN ('danske bank', 'danske bank a s') AND a1.class = 'http://forge.ai/entity#Organization'

AND a2.sentenceId BETWEEN a1.sentenceId - 1 AND a1.sentenceId + 1

GROUP BY 1, 2, 3, 4

ORDER BY cnt DESC, DATEDIFF(DAY, t0, t1) DESC

```

## Documents that cluster (in one dimension of the LDA space) around a company of interest

```sql=

WITH LDA AS (

-- The "VanillaLda.model-identifier-979b20fdc5691083320d4a61c515e64e0b53ecf9-a6728cf46f462fc4e38b53e8420a386dbe5d75ad" LDA vector

SELECT *

FROM DocumentTopicModelVectors

WHERE modelVersion = 'VanillaLda.model-identifier-979b20fdc5691083320d4a61c515e64e0b53ecf9-a6728cf46f462fc4e38b53e8420a386dbe5d75ad'

), Apple AS (

-- Apple's average LDA vector

SELECT dimensionindex, AVG(dimensionvalue) AS dimensionvalue

FROM LDA

WHERE EXISTS (SELECT 1

FROM EntityAssertions a

WHERE a.docId = LDA.docId

AND assertedClass = 'http://forge.ai/company/apple_inc')

GROUP BY 1

ORDER BY 2 DESC

LIMIT 1)

SELECT LDA.docId, url, title

FROM LDA

JOIN Apple ON (Apple.dimensionindex = LDA.dimensionindex

AND ABS(Apple.dimensionvalue - LDA.dimensionvalue) < 0.005)

JOIN Documents d ON (d.docId = LDA.docId)

WHERE NOT EXISTS (SELECT 1

FROM EntityAssertions a

WHERE a.docId = d.docId

AND assertedClass = 'http://forge.ai/company/apple_inc')

```

## Documents that cluster (in the LDA vector space) around a company of interest

```sql=

WITH LDA AS (

-- The "VanillaLda.model-identifier-979b20fdc5691083320d4a61c515e64e0b53ecf9-a6728cf46f462fc4e38b53e8420a386dbe5d75ad" LDA vector

SELECT *

FROM DocumentTopicModelVectors

WHERE modelVersion = 'VanillaLda.model-identifier-979b20fdc5691083320d4a61c515e64e0b53ecf9-a6728cf46f462fc4e38b53e8420a386dbe5d75ad'

), Apple AS (

-- Apple's average LDA vector

SELECT dimensionindex, AVG(dimensionvalue) AS dimensionvalue

FROM LDA

WHERE EXISTS (SELECT 1

FROM EntityAssertions a

WHERE a.docId = LDA.docId

AND assertedClass = 'http://forge.ai/company/apple_inc')

GROUP BY 1)

SELECT docId, url, title, SUM(SQUARE(NVL(lda.dimensionvalue, 0) - NVL(Apple.dimensionvalue, 0))) AS d

FROM LDA

FULL OUTER JOIN Apple ON (Apple.dimensionindex = LDA.dimensionindex)

WHERE NOT EXISTS (SELECT 1

FROM EntityAssertions a

WHERE a.docId = LDA.docId

AND assertedClass = 'http://forge.ai/company/apple_inc')

GROUP BY 1

HAVING d < 0.01

```

## List all 8-K filings for Apple (CIK=320193) in 2018Q3

```sql=

SELECT d.docId, d.title, TO_DATE(d.docDatetime)

FROM SEC_8K.Documents d

JOIN SEC_8K.DocumentMetadata md ON (md.docId = d.docId)

WHERE md.name = 'forgeai:company-cik'

AND TRY_CAST(md.value AS INT) = 320193

AND YEAR(d.docDatetime) = 2018

AND QUARTER(d.docDatetime) = 3

```

## List all organizations mentioned in a Risk Factors section (1A) of a 10-K filing for Apple (CIK=320193)

```sql=

SELECT smd.docId, d.url, a.label

FROM SEC_10K.SectionMetadata smd

JOIN SEC_10K.NERAssertions a ON (a.docId = smd.docId

AND a.class = 'Organization')

JOIN SEC_10K.EntityLocations l ON (l.docId = a.docId AND l.entityId = a.entityId)

JOIN SEC_10K.Sentences s ON (s.docId = l.docId AND s.sentenceId = l.sentenceId)

JOIN SEC_10K.Documents d ON (d.docId = l.docId)

WHERE smd.name = 'forgeai:sec-section-type'

AND smd.value = '1A'

AND EXISTS (SELECT 1

FROM SEC_10K.DocumentMetadata d

WHERE d.docId = smd.docId

AND d.name = 'forgeai:company-cik'

AND d.value::INT = 320193)

```

# Revision History

## 06.40

### New Tables

* [`NERAssertions`](#NERAssertions)

* [`EntityEquivalentTo`](#EntityEquivalentTo)

* [`EntitySubsidiaryOf`](#EntitySubsidiaryOf)

* [`EntityHasTypes`](#EntityHasTypes)

* [`IntrinsicEvents`](#IntrinsicEvents)

* [`EntitySaliencies`](#EntitySaliencies)

* [`Quotes`](#Quotes)

* [`QuoteLocations`](#QuoteLocations)

### Changed Tables

* `docId` moved to a `BIGINT` for all tables.

* [`Documents`](#Documents)

* Added `docUuid`

* `url` is now a URL to the wsj.com for DJN articles

* [`SimilarDocuments`](#SimilarDocuments)

* `similarDocId` renamed to `similarDocUuid`

* [`EntityAliases`](#EntityAliases)

* Added `aliasId` & `confidence`

* [`EntityLocations`](#EntityLocations)

* Added `aliasId`

* [`DocumentSentiments`](#DocumentSentiments)

* Renamed `combined` to `sentiment`

* Removed `modelVersion`

* Removed `positivity`, `negativity`, and `neutrality`

* [`EntitySentiments`](#EntitySentiments)

* Removed `modelVersion`

### New Score

* [`forge-saliency-1`](#EntityScores)

### Removed Tables

* `SectionScores`

* `RelationshipPredicateAssertions`

### Deprecated Score

* [`lexica`](#DocumentScores)

## 06.30

### New Tables

* [DocumentSentiments](#DocumentSentiments)

* [EntityScores](#EntityScores)

* [EntitySentiments](#EntitySentiments)

### New Scores

* [entity-sentiment](#EntitySentiments)

* [entity-saliency](#EntityScores)

### Removed Scores

* [Document dissonance](#DocumentScores) and [Section dissonance](#SectionScores)

* [Section lexica](#SectionScores) and [Section VADER](#SectionScores)

### Articles pipeline updates

* Extended historical coverage starting from Oct-2018.

* Full Dow Jones feed articles since 2008 in the `DJN` schema (when available)

## 06.20

### New Tables

* [DocumentScores](#DocumentScores)

* [EntityAliases](#EntityAliases)

* [SectionScores](#SectionScores)

### Changed Tables

* [DocumentTopics](#DocumentTopics)

* Added `topicId`

* Added `startSentenceId` and `endSentenceId`

* [DocumentTopicWords](#DocumentTopicWords)

* Added `topicId`

* Added `count`

* Removed `name` and `parent`.

Instead use [DocumentTopicWords.topicId](#DocumentTopics) to reference [DocumentTopics.name](#DocumentTopics) and [DocumentTopics.parent](#DocumentTopics).

### New Scores

* [VADER](#DocumentScores) -- aka sentiment

* [lexica](#DocumentScores)

* [dissonance](#DocumentScores)

### Articles pipeline updates

* Ontological Categorizer for more diverse and precise topics classification.

### SEC pipeline updates

* Split `SEC` schema into `SEC_8K`, `SEC_10K`, and `SEC_10Q`

* 10-yr historical: 2009 and up

* Improved extraction

* Entity resolution

* Re-trained jargon LDA models

* Relationships

* Removed `forgeai:sec-filing-year` and `forgeai:sec-filing-qtr` metadata.

Instead use `YEAR(docDatetime)` and `QUARTER(docDatetime)`.

# Useful links

* [Forge.AI Reference](https://reference.forge.ai)

* [Snowflake Documentation](https://docs.snowflake.net)

* [Connecting to Snowflake](https://docs.snowflake.net/manuals/user-guide-connecting.html)